TL;DR

Most memory systems hoard instances (queries, answers, snippets). ArcMemo instead distills concepts—compact, reusable abstractions of what a model learned while solving a problem. Those concepts are retrieved and recombined for new problems. On ARC‑AGI, this design beats strong no‑memory baselines and continues to scale with retries, showing a credible path to lifelong, test‑time learning without finetuning.

Why this paper matters

The status quo of “inference‑time scaling” is a treadmill: longer chains of thought today, amnesia tomorrow. Enterprises building agentic systems (customer ops copilots, finance/Excel agents, low‑code RPA flows) need their agents to keep what they learn and apply it later without weight updates. ArcMemo’s move from instance‑level to concept‑level memory is the right abstraction boundary:

- Generalize: Concepts are portable beyond the original prompt.

- Compose: Concepts plug together like LEGO—making new solves out of old insights.

- Continually learn: Memory grows with experience and helps on the very next pass.

This is the difference between an assistant that remembers “the answer to that ticket” vs. one that remembers how it solved the ticket—and can reuse that method next time, even in a different domain.

What ArcMemo actually does

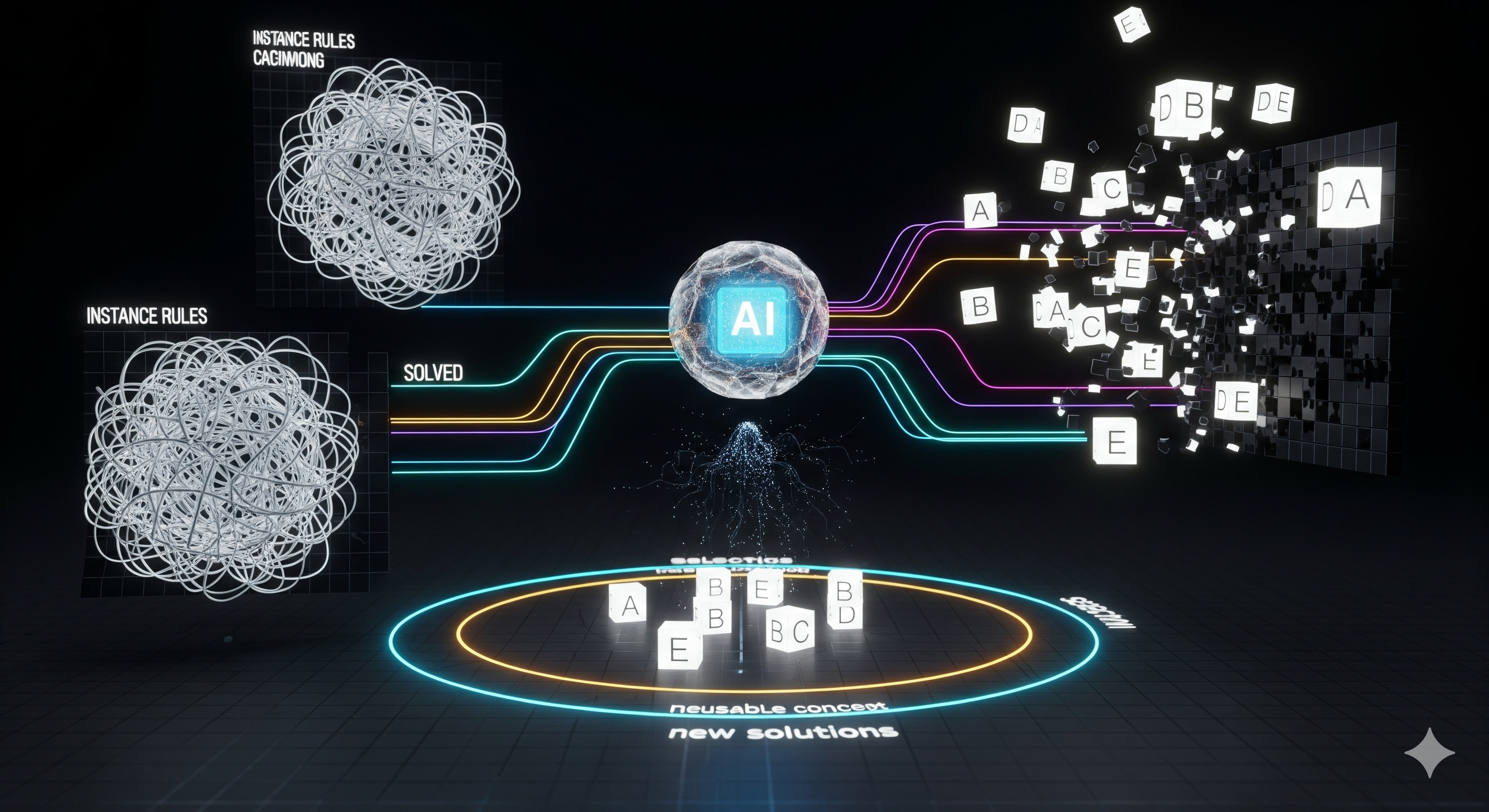

ArcMemo designs the memory system around three operations:

- Write: After solving, abstract the concepts from traces (not the whole trace).

- Read/Select: For a new task, select only relevant concepts (not the entire stash).

- Use/Compose: Inject those concepts into the prompt to guide new reasoning.
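The three operations can be pictured as a tiny in-memory store. This is a minimal sketch, not ArcMemo's code: the `Concept` and `ConceptMemory` names, the cue-overlap selection rule, and the prompt template are hypothetical, and a real system would call an LLM both to abstract traces (write) and to solve the task (use).

```python
from dataclasses import dataclass, field


@dataclass
class Concept:
    """One abstract, reusable idea (hypothetical minimal format)."""
    name: str
    cues: set[str]      # signals suggesting the concept applies
    method: str         # what to do, stated abstractly


@dataclass
class ConceptMemory:
    concepts: dict[str, Concept] = field(default_factory=dict)

    def write(self, concept: Concept) -> None:
        # Write: store the abstracted concept, not the raw solving trace.
        self.concepts[concept.name] = concept

    def select(self, features: set[str]) -> list[Concept]:
        # Read/Select: keep only concepts whose cues overlap the task features.
        return [c for c in self.concepts.values() if c.cues & features]

    def build_prompt(self, task: str, features: set[str]) -> str:
        # Use/Compose: inject the selected concepts ahead of the new task.
        lines = [f"- {c.name}: {c.method}" for c in self.select(features)]
        hints = "\n".join(lines) if lines else "(no relevant concepts)"
        return f"Possibly useful concepts:\n{hints}\n\nTask:\n{task}"


memory = ConceptMemory()
memory.write(Concept("flood fill enclosed regions",
                     {"enclosed_region", "background_fill"},
                     "fill cells fully enclosed by a boundary color"))
memory.write(Concept("mirror grid", {"symmetry"},
                     "reflect the grid across its vertical axis"))
print(memory.build_prompt("Solve puzzle 42", {"symmetry", "small_grid"}))
```

Only the symmetry concept reaches the prompt here; the flood-fill concept stays in the bank because none of its cues match.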

Crucially, ArcMemo explores two concept formats and two selection styles:

| Dimension | Open‑Ended (OE) | Program‑Synthesis (PS) |
|---|---|---|
| Memory format | Natural‑language situation → suggestion pairs | Typed, parameterized concepts (types, structures, routines); routines may be higher‑order |
| Abstraction goal | Minimal constraints; fast to write; broad applicability | Strong modularity; composable building blocks; less overspecification |
| Selection | Caption the puzzle first, then match against stored situations (lightweight) | Reasoning‑based exploration finds relevant concepts and fills in their parameters |
| Intended benefit | Cheap, flexible; a good first step | More reusable and scalable; best when problems vary widely |

Key design choices:

- Separate the conditions (when applicable) from the method (what to do).

- Favor parameterization (values & sub‑routines) so a single concept covers many cases.

- Use reasoning‑based retrieval where embedding similarity breaks down: two problems can share an abstract concept while looking nothing alike on the surface.
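To make these design choices concrete, here is a toy program-synthesis-style concept for ARC grids (treated as lists of integer color rows). Everything here is an illustrative assumption: the `RoutineConcept` record, the `recolor_where` routine, its cell-test parameter, and the deliberately simple applicability check. It only shows how keeping the condition apart from the method, and accepting a sub-routine as a parameter, lets one concept cover several puzzles.

```python
from dataclasses import dataclass
from typing import Callable

Grid = list[list[int]]
CellTest = Callable[[Grid, int, int], bool]


@dataclass(frozen=True)
class RoutineConcept:
    name: str
    # Condition: when the concept is applicable, kept separate from the method.
    applies: Callable[[Grid], bool]
    # Method: a higher-order routine whose parameters include a sub-routine.
    run: Callable[..., Grid]


def recolor_where(grid: Grid, cell_test: CellTest, new_color: int) -> Grid:
    """Return a copy of grid with every cell passing cell_test recolored."""
    return [[new_color if cell_test(grid, r, c) else v
             for c, v in enumerate(row)]
            for r, row in enumerate(grid)]


def is_border(grid: Grid, r: int, c: int) -> bool:
    return r in (0, len(grid) - 1) or c in (0, len(grid[0]) - 1)


def is_color(color: int) -> CellTest:
    return lambda grid, r, c: grid[r][c] == color


recolor = RoutineConcept(
    name="recolor cells selected by a test",
    # Toy condition: recoloring a subset only makes sense with 2+ colors.
    applies=lambda g: len({v for row in g for v in row}) > 1,
    run=recolor_where,
)

grid = [[0, 0, 0],
        [0, 5, 0],
        [0, 0, 0]]
if recolor.applies(grid):
    print(recolor.run(grid, is_border, 3))    # [[3, 3, 3], [3, 5, 3], [3, 3, 3]]
    print(recolor.run(grid, is_color(5), 1))  # [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
```

The same stored concept yields two different solutions purely by swapping its parameters, which is the sense in which parameterization lets "a single concept cover many cases."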

Results that matter to builders

- Better than no‑memory baselines under the official ARC‑AGI scoring; the PS formulation is the most consistent winner and scales with retries (i.e., more compute → more reuse → more solves).

- Selection helps twice: it improves scores and reduces token waste versus dumping the whole memory bank into context.

- Continual updates during evaluation improve downstream solves—evidence of real test‑time lifelong learning rather than mere caching.

Translation for practitioners: “If your agents keep small, typed, recomposable skills—and pick only the ones that fit the next task—they’ll get better as they go, even without retraining.”

Why concept memory beats instance memory (with a concrete analogy)

Imagine a SupportCopilot that once resolved a ‘multi‑tenant billing proration’ issue:

- Instance memory stores every step of that one ticket, then struggles on a new case that mixes proration with a different entitlement rule.

- Concept memory stores reusable skills: “identify tenant scope,” “infer proration window,” “reconcile entitlement vs. invoice,” each parameterized by schema, dates, and exceptions. Next ticket? Compose these skills in a new order with new parameters.
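The analogy can be made concrete by treating skills as small parameterized functions that get composed per ticket. This is a hypothetical sketch: the skill names follow the bullet above, but the state fields, the day-based proration rule, and the `compose` helper are assumptions for illustration, not billing logic from any real system or from the paper.

```python
from datetime import date
from functools import reduce
from typing import Any, Callable

State = dict[str, Any]
Skill = Callable[[State], State]


def identify_tenant_scope(tenant_id: str) -> Skill:
    """Parameterized skill: count only the seats belonging to one tenant."""
    def step(state: State) -> State:
        seats = sum(n for t, n in state["seat_rows"] if t == tenant_id)
        return {**state, "seats": seats}
    return step


def infer_proration_window(period_start: date, period_end: date) -> Skill:
    """Parameterized skill: fraction of the billing period actually used."""
    def step(state: State) -> State:
        total = (period_end - period_start).days
        used = (period_end - state["change_date"]).days
        return {**state, "fraction": used / total}
    return step


def reconcile_entitlement(unit_price: float) -> Skill:
    """Parameterized skill: compare the prorated charge with the invoice."""
    def step(state: State) -> State:
        expected = round(unit_price * state["seats"] * state["fraction"], 2)
        return {**state, "expected": expected,
                "discrepancy": round(state["invoiced"] - expected, 2)}
    return step


def compose(*skills: Skill) -> Skill:
    """Chain skills left to right into one procedure."""
    return lambda state: reduce(lambda s, skill: skill(s), skills, state)


ticket = {"seat_rows": [("acme", 6), ("globex", 3), ("acme", 4)],
          "change_date": date(2024, 6, 16), "invoiced": 120.0}
solve = compose(identify_tenant_scope("acme"),
                infer_proration_window(date(2024, 6, 1), date(2024, 7, 1)),
                reconcile_entitlement(unit_price=20.0))
result = solve(ticket)
print(result["expected"], result["discrepancy"])  # 100.0 20.0
```

A different ticket would reuse the same three skills with other parameters, or in another order, instead of replaying a stored transcript.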

This mirrors the premise ArcMemo tests on ARC‑AGI: tasks are compositions of smaller ideas, so storing the pieces wins.

A mini‑playbook you can copy

1) Choose a concept schema

- Title: succinct name

- Kind: type / structure / routine

- Parameters: values and callable sub‑routines allowed

- Output type: what this routine returns

- Relevance cues: when to use it (signals, patterns)

- Implementation notes: pragmatic hints, pitfalls
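The schema maps naturally onto a small record type. A minimal sketch, assuming Python dataclasses: the field names mirror the list above, while the validation rule (routines must declare an output type), the `render` prompt format, and the example concept are our own hypothetical choices.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Kind(Enum):
    TYPE = "type"
    STRUCTURE = "structure"
    ROUTINE = "routine"


@dataclass
class ConceptRecord:
    title: str
    kind: Kind
    parameters: dict[str, str] = field(default_factory=dict)  # name -> type
    output_type: Optional[str] = None       # required for routines only
    relevance_cues: list[str] = field(default_factory=list)
    implementation_notes: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.kind is Kind.ROUTINE and not self.output_type:
            raise ValueError(f"routine {self.title!r} needs an output_type")

    def render(self) -> str:
        """Format the record as a compact block for prompt injection."""
        lines = [f"## {self.title} ({self.kind.value})"]
        if self.parameters:
            params = ", ".join(f"{k}: {v}" for k, v in self.parameters.items())
            lines.append(f"params: {params}")
        if self.output_type:
            lines.append(f"returns: {self.output_type}")
        lines += [f"use when: {c}" for c in self.relevance_cues]
        lines += [f"note: {n}" for n in self.implementation_notes]
        return "\n".join(lines)


fill = ConceptRecord(
    title="fill enclosed regions",
    kind=Kind.ROUTINE,
    parameters={"boundary_color": "int", "fill_color": "int | callable"},
    output_type="Grid",
    relevance_cues=["closed shapes with empty interiors"],
    implementation_notes=["check that the boundary is closed before filling"],
)
print(fill.render())
```

Rendering each record the same way keeps injected concepts uniform and cheap to scan, and the validation catches half-written routines before they enter memory.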

2) Scaffold writing (abstraction) prompts

- Convert raw traces to pseudocode first (prevents memorizing low‑level quirks).

- Ask the model to update existing concepts: merge, refine parameters, add a higher‑order variant.

- Keep memory flat at first; introduce consolidation later.
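Asking the model to update rather than append implies a merge step on the memory side. The sketch below assumes the LLM has already turned a trace into pseudocode and proposed a concept (title, cues, parameters); the `Entry` type and `upsert` helper are hypothetical, and matching on exact title is a deliberate simplification of what would, in practice, be a model-judged match.

```python
from dataclasses import dataclass, field


@dataclass
class Entry:
    title: str
    cues: list[str]
    params: dict[str, str]
    sources: list[str] = field(default_factory=list)  # which tasks touched it


def upsert(memory: dict[str, Entry], proposal: Entry, source: str) -> Entry:
    """Merge a proposed concept into a flat memory keyed by title.

    New titles are added. Existing entries are refined: new cues are
    appended, new parameters are added, and existing parameter
    descriptions are kept, so memory does not fill with near-duplicates.
    """
    current = memory.get(proposal.title)
    if current is None:
        entry = Entry(proposal.title, list(proposal.cues),
                      dict(proposal.params), [source])
        memory[entry.title] = entry
        return entry
    for cue in proposal.cues:
        if cue not in current.cues:
            current.cues.append(cue)
    for name, desc in proposal.params.items():
        current.params.setdefault(name, desc)
    current.sources.append(source)
    return current


memory: dict[str, Entry] = {}
upsert(memory, Entry("recolor by test", ["uniform recoloring"],
                     {"new_color": "int"}), "task-a")
upsert(memory, Entry("recolor by test", ["border highlighted"],
                     {"cell_test": "callable"}), "task-b")
merged = memory["recolor by test"]
print(merged.cues, sorted(merged.params), merged.sources)
```

The second task refines the existing concept (a new cue and a new sub-routine parameter) instead of creating a second, overlapping entry, and the memory stays flat.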

3) Build two selectors

- Fast selector (cheap): parse inputs into a structured description (captioning, schema sketch); match by relevance cues.

- Deep selector (expensive): run a short reasoning explore‑and‑backtrack pass that proposes candidate concepts and fills parameters; cap with top‑k.
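The two selectors could be wired as a cascade: run the cheap cue matcher, and fall back to the expensive reasoning pass only when nothing scores well. This is a sketch under assumptions; Jaccard overlap, the `min_score` threshold, and the `deep` callable (a stand-in for an LLM explore-and-backtrack call) are hypothetical choices, not ArcMemo's selection procedure.

```python
from typing import Callable, Sequence

Concept = tuple[str, frozenset[str]]  # (title, relevance cues)
DeepSelector = Callable[[str, Sequence[Concept], int], list[str]]


def fast_select(features: set[str], concepts: Sequence[Concept],
                k: int) -> list[tuple[str, float]]:
    """Score concepts by Jaccard overlap of task features and cues; keep top-k."""
    scored = []
    for title, cues in concepts:
        union = features | cues
        score = len(features & cues) / len(union) if union else 0.0
        if score > 0:
            scored.append((title, score))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def select(task: str, features: set[str], concepts: Sequence[Concept], k: int,
           deep: DeepSelector, min_score: float = 0.25) -> list[str]:
    """Try the cheap selector first; escalate only when it is not confident."""
    fast = fast_select(features, concepts, k)
    if fast and fast[0][1] >= min_score:
        return [title for title, _ in fast]
    return deep(task, concepts, k)[:k]  # expensive pass, still capped at k


bank = [("mirror grid", frozenset({"symmetry", "reflection"})),
        ("count objects", frozenset({"objects", "counting"})),
        ("fill enclosed", frozenset({"enclosed_region"}))]


def fake_deep(task: str, concepts: Sequence[Concept], k: int) -> list[str]:
    # Hypothetical stand-in for an LLM reasoning pass over the concept bank.
    return [title for title, _ in concepts][:k]


print(select("puzzle A", {"symmetry", "reflection", "colors"}, bank,
             k=2, deep=fake_deep))  # ['mirror grid']
print(select("puzzle B", {"noise"}, bank, k=2, deep=fake_deep))
# ['mirror grid', 'count objects'] (fast selector found nothing)
```

Both paths respect the same top-k cap, so the escalation buys better recall without letting the prompt grow unbounded.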

4) Guardrails & hygiene

- Only write concepts when you have feedback (tests, eval rules) to avoid memorializing mistakes.

- Log provenance (which problems birthed/updated which concepts) for audits.

- Track token budgets; selection is there to keep prompts small.
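The three guardrails fit in a small wrapper around the store. A hedged sketch: `passed_tests` stands for whatever feedback you have (unit tests, a task checker, eval rules), the `GuardedMemory` name and audit tuple layout are our own, and the word-count tokenizer is a crude placeholder for your model's real tokenizer.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class GuardedMemory:
    max_prompt_tokens: int
    concepts: dict[str, str] = field(default_factory=dict)  # title -> text
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def write_if_verified(self, title: str, text: str, task_id: str,
                          passed_tests: bool) -> bool:
        """Store a concept only when the solve it came from passed feedback."""
        if not passed_tests:
            self.audit_log.append((task_id, title, "rejected"))
            return False
        action = "updated" if title in self.concepts else "created"
        self.concepts[title] = text
        self.audit_log.append((task_id, title, action))  # provenance record
        return True

    def pack(self, ranked_titles: list[str],
             count_tokens: Callable[[str], int]) -> list[str]:
        """Add ranked concepts until the next one would exceed the budget."""
        chosen, used = [], 0
        for title in ranked_titles:
            cost = count_tokens(self.concepts[title])
            if used + cost > self.max_prompt_tokens:
                break  # stop here so rank order is preserved
            chosen.append(title)
            used += cost
        return chosen


def rough_tokens(text: str) -> int:
    # Crude placeholder: one "token" per whitespace-separated word.
    return len(text.split())


mem = GuardedMemory(max_prompt_tokens=12)
mem.write_if_verified("mirror grid", "reflect the grid across its vertical axis",
                      "t1", passed_tests=True)
mem.write_if_verified("guess colors", "pick the most common color",
                      "t2", passed_tests=False)
mem.write_if_verified("fill enclosed", "fill cells enclosed by a boundary color",
                      "t3", passed_tests=True)
print(mem.pack(["mirror grid", "fill enclosed"], rough_tokens))  # ['mirror grid']
print(mem.audit_log)
```

The failed solve leaves only a "rejected" line in the audit log, and the 12-token budget admits just the top-ranked concept.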

Limits & open problems (and how to mitigate)

- Order sensitivity: Memories reflect the order you see tasks. Mitigation: process in shuffled mini‑batches; add light curriculum; schedule periodic consolidation passes to re‑abstract across many experiences.

- Abstraction tension: Too specific retrieves well but doesn’t transfer; too abstract transfers but becomes vague. Mitigation: allow families of the same concept at different specificity levels and let selection pick among them.

- Token overhead: Memory can spur more exploration (more tokens). Mitigation: stricter top‑k, relevance scoring, and occasional pruning/merging.
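The "families" mitigation for abstraction tension can be pictured as several versions of one concept, where selection prefers the most specific version whose conditions hold. A minimal sketch with hypothetical names (`Variant`, `pick_variant`) and made-up task features; in practice the conditions would be judged by the fast or deep selector rather than hand-written lambdas.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Variant:
    level: int                        # higher = more specific
    condition: Callable[[dict], bool]
    guidance: str


def pick_variant(family: list[Variant], task: dict) -> Optional[Variant]:
    """Return the most specific variant whose condition holds, if any."""
    applicable = [v for v in family if v.condition(task)]
    return max(applicable, key=lambda v: v.level, default=None)


symmetry_family = [
    Variant(0, lambda t: True,
            "look for any symmetry relating input and output"),
    Variant(1, lambda t: t.get("symmetric_axis") is not None,
            "complete the grid by reflecting across the detected axis"),
    Variant(2, lambda t: t.get("symmetric_axis") == "vertical"
            and t.get("has_holes", False),
            "fill masked cells from their mirror across the vertical axis"),
]

print(pick_variant(symmetry_family,
                   {"symmetric_axis": "vertical", "has_holes": True}).level)  # 2
print(pick_variant(symmetry_family, {"symmetric_axis": "horizontal"}).level)  # 1
print(pick_variant(symmetry_family, {}).level)                                # 0
```

The specific variant retrieves precisely when its signals are present, while the general one still transfers when they are not, which is the trade-off the mitigation aims to keep.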

Where to apply this tomorrow

- Ops copilots: learn reusable troubleshooting flows (e.g., auth cascades, pagination bugs) that transfer across customers.

- FinOps/Excel agents: retain reconciliation patterns (e.g., FX rounding, accrual roll‑forwards) and re‑compose them for new ledgers.

- Quant research bots: keep reusable analysis motifs (e.g., regime split → feature test → nested CV) that assemble into new studies—without touching weights.

If your agents repeat any non‑trivial reasoning, ArcMemo’s approach is likely to compound.

The bottom line

ArcMemo shows that abstraction + modularity + selective reuse meaningfully lifts test‑time reasoning—and keeps improving as agents attempt more tasks. In enterprise terms: it’s a credible blueprint for agents that actually learn on the job.

Cognaptus: Automate the Present, Incubate the Future