Pipes by Prompt, DAGs by Design: Why Hybrid Beats Hero Prompts

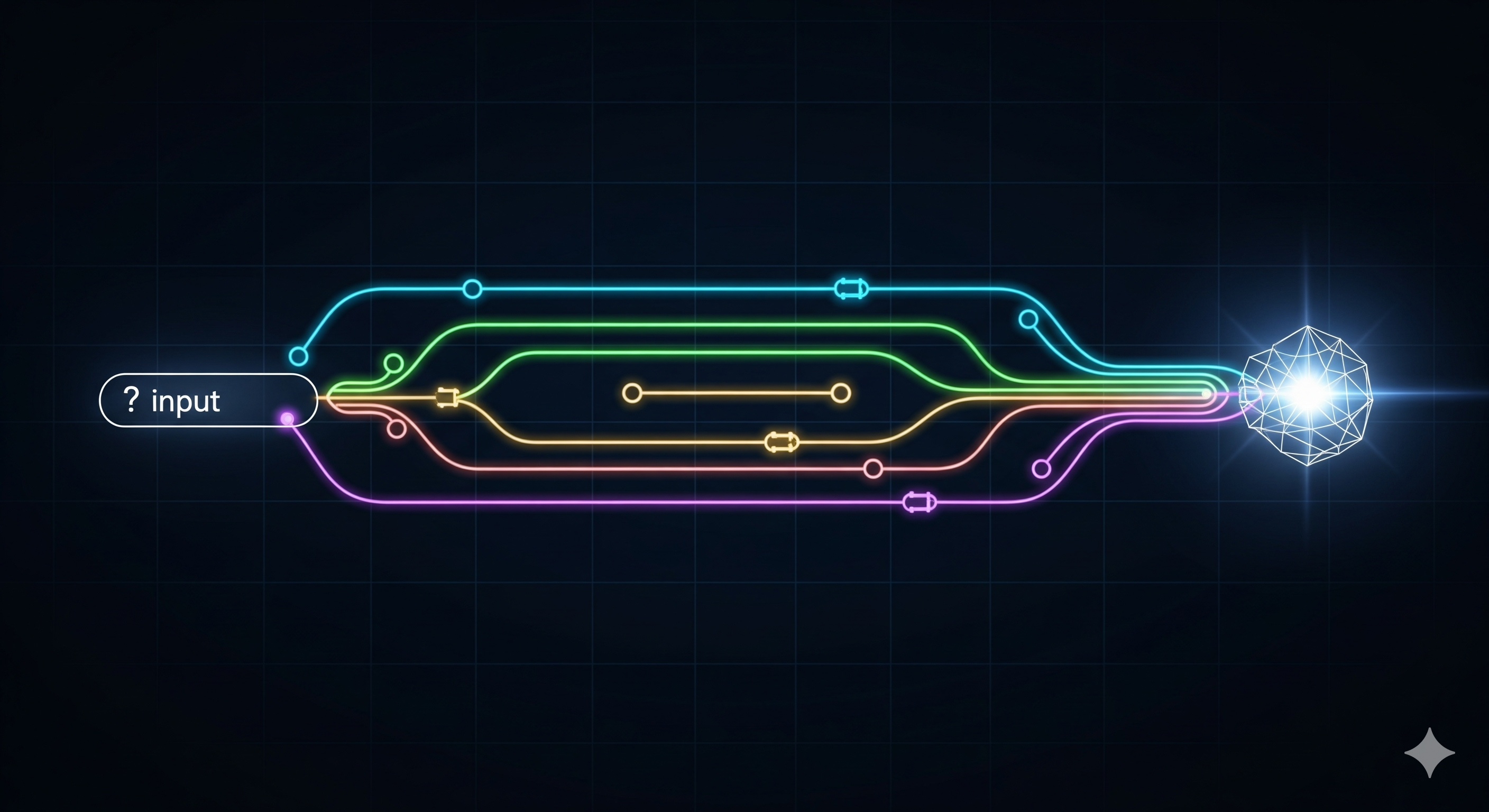

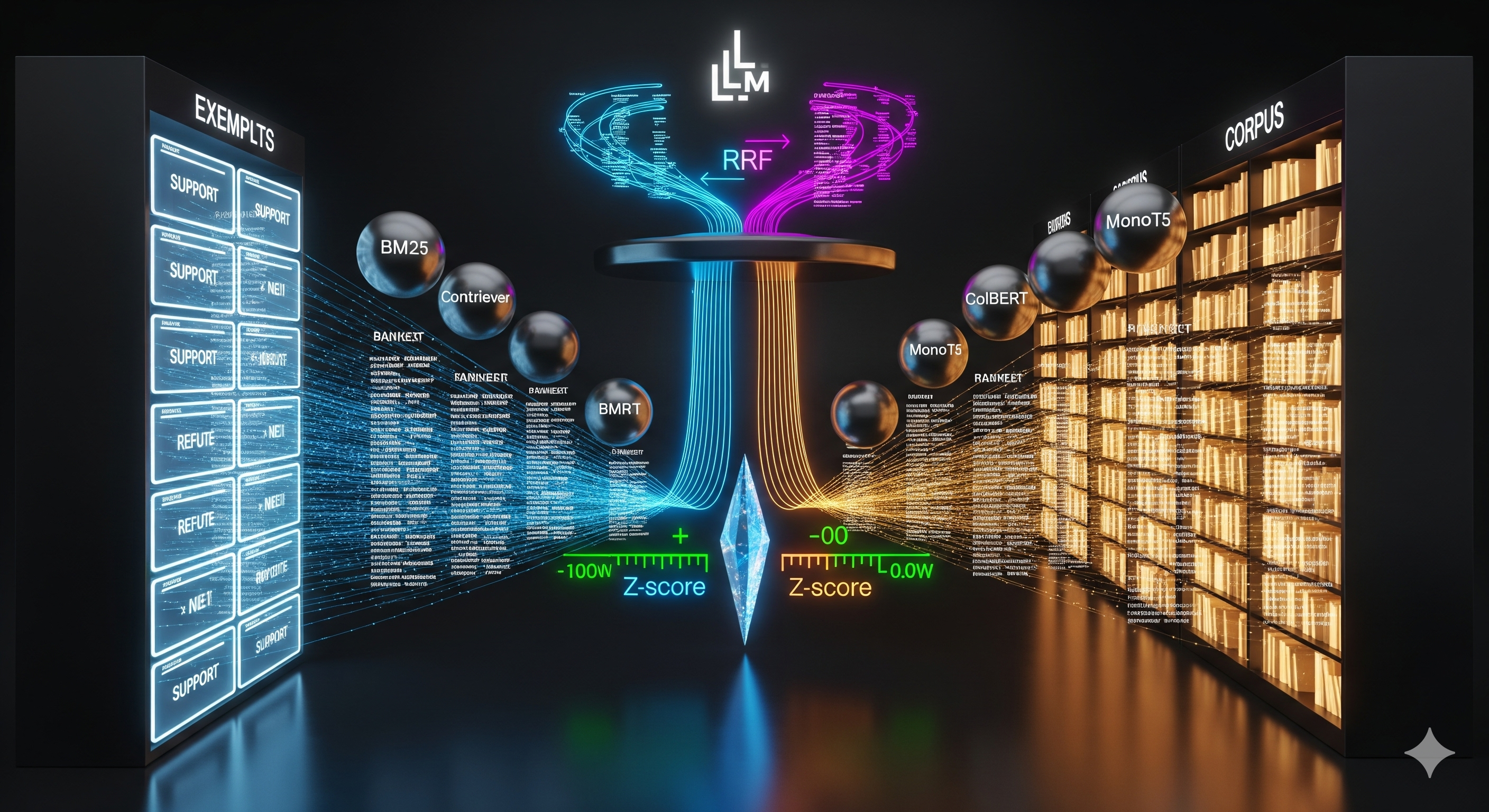

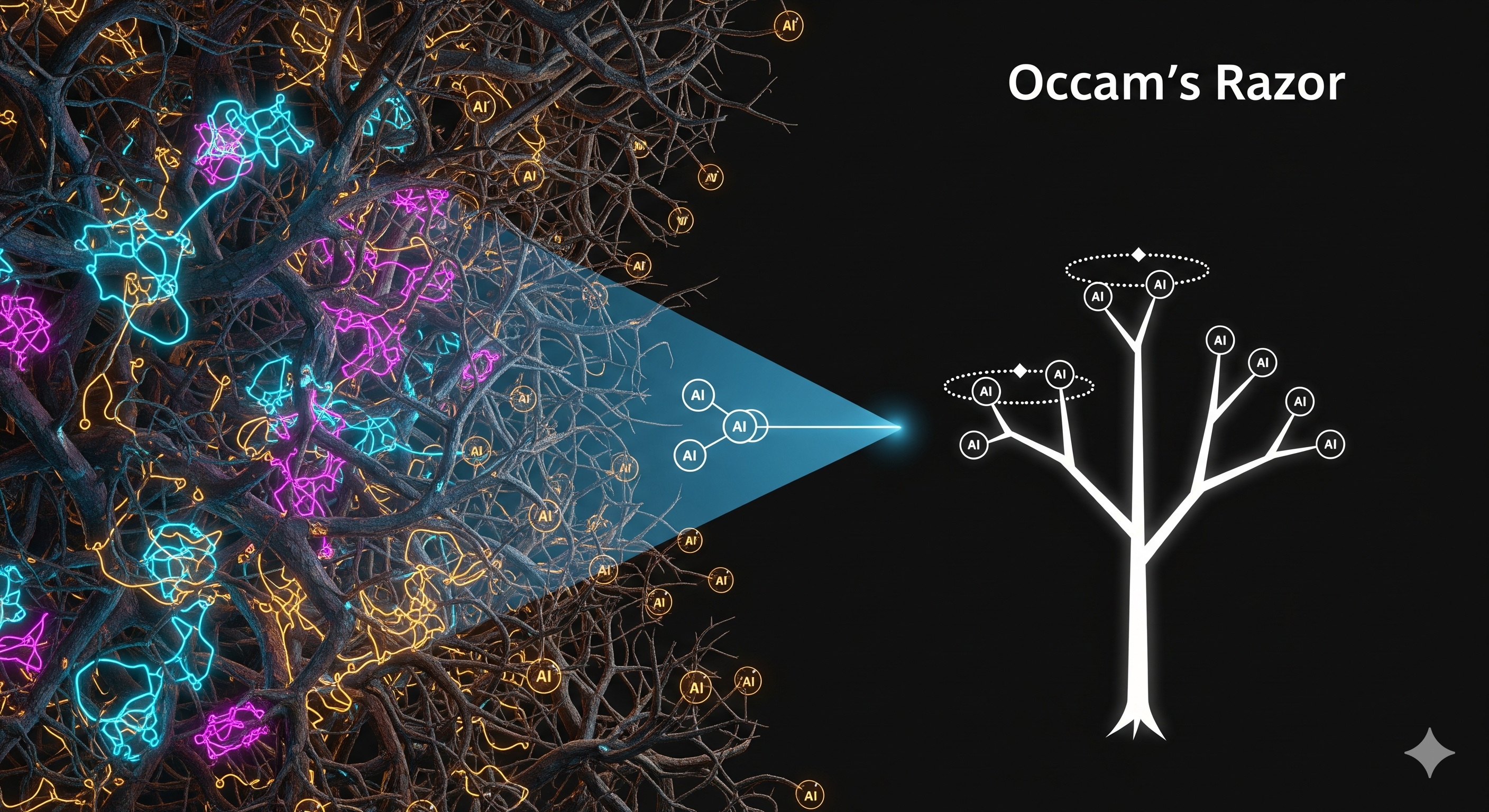

TL;DR

Turning natural‑language specs into production Airflow DAGs works best when you split the task into stages and let templates carry the structural load. In Prompt2DAG’s 260‑run study, a Hybrid approach (structured analysis → workflow spec → template‑guided code) delivered ~79% success and top quality scores, handily beating Direct one‑shot prompting (~29%) and LLM‑only generation (~66%). Deterministic Templated code hit ~92% but at the price of up‑front template curation.

What’s new here

Most discussions about “LLMs writing pipelines” stop at demo‑ware. Prompt2DAG treats pipeline generation like software engineering, not magic:

1) analyze requirements into a typed JSON,
2) convert to a neutral YAML workflow spec,
3) compile to Airflow DAGs either by deterministic templates or by LLMs guided by those templates,
4) auto‑evaluate for style, structure, and executability.

The result is a repeatable path from English to a runnable DAG. ...
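To make the four stages concrete, here is a minimal sketch of the deterministic-template path, not Prompt2DAG's actual code. Everything named here is an assumption for illustration: the field names (`pipeline_id`, `steps`, `depends_on`), the helper functions, and the sample pipeline are hypothetical; the neutral spec is kept as a plain dict rather than serialized to YAML so the example needs only the standard library; and the generated source assumes Airflow 2.4 or later import paths and the `schedule` argument. The final check only parses the generated code, which is a much weaker stand-in for a real executability evaluation.

```python
import ast
import json
import keyword
from graphlib import TopologicalSorter

# Hypothetical stage 1 output: a typed JSON analysis of the English spec.
ANALYSIS_JSON = """
{
  "pipeline_id": "daily_sales",
  "steps": [
    {"id": "extract", "command": "echo extract", "depends_on": []},
    {"id": "transform", "command": "echo transform", "depends_on": ["extract"]},
    {"id": "load", "command": "echo load", "depends_on": ["transform"]}
  ]
}
"""

def analyze(raw_json):
    """Stage 1: parse and type-check the analysis before anything else runs."""
    data = json.loads(raw_json)
    if not isinstance(data.get("pipeline_id"), str):
        raise ValueError("pipeline_id must be a string")
    ids = [step["id"] for step in data["steps"]]
    for step in data["steps"]:
        sid = step["id"]
        # Step ids become Python variable names in the generated DAG.
        if not sid.isidentifier() or keyword.iskeyword(sid):
            raise ValueError(f"step id {sid!r} is not a usable identifier")
        if not isinstance(step["command"], str):
            raise ValueError(f"command for {sid!r} must be a string")
        unknown = set(step["depends_on"]) - set(ids)
        if unknown:
            raise ValueError(f"{sid!r} depends on unknown steps: {sorted(unknown)}")
    return data

def to_workflow_spec(analysis):
    """Stage 2: build a neutral spec with tasks in dependency order."""
    deps = {s["id"]: set(s["depends_on"]) for s in analysis["steps"]}
    # static_order() raises graphlib.CycleError if the graph is not a DAG.
    order = list(TopologicalSorter(deps).static_order())
    by_id = {s["id"]: s for s in analysis["steps"]}
    return {"dag_id": analysis["pipeline_id"], "tasks": [by_id[i] for i in order]}

HEADER = """from datetime import datetime

from airflow import DAG
from airflow.operators.bash import BashOperator

with DAG(dag_id={dag_id!r}, start_date=datetime(2024, 1, 1), schedule=None, catchup=False) as dag:
"""

def render_dag(spec):
    """Stage 3: fill a fixed template; the structure never comes from an LLM."""
    lines = [HEADER.format(dag_id=spec["dag_id"])]
    for task in spec["tasks"]:
        lines.append(
            f"    {task['id']} = BashOperator("
            f"task_id={task['id']!r}, bash_command={task['command']!r})\n"
        )
    for task in spec["tasks"]:
        for upstream in task["depends_on"]:
            lines.append(f"    {upstream} >> {task['id']}\n")
    return "".join(lines)

def check_source(source):
    """Stage 4 (reduced): confirm the generated file is valid Python syntax."""
    ast.parse(source)
    return True

spec = to_workflow_spec(analyze(ANALYSIS_JSON))
dag_source = render_dag(spec)
check_source(dag_source)
print(dag_source)
```

In a Hybrid setup, the same validated spec and template would be handed to an LLM as guidance rather than filled in mechanically; the point of the sketch is that stages 1 and 2 catch bad identifiers, dangling dependencies, and cycles before any code is generated.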