Rollouts, Not GPUs: Why AWorld’s 14.6× Speedup Rewires Agent Training

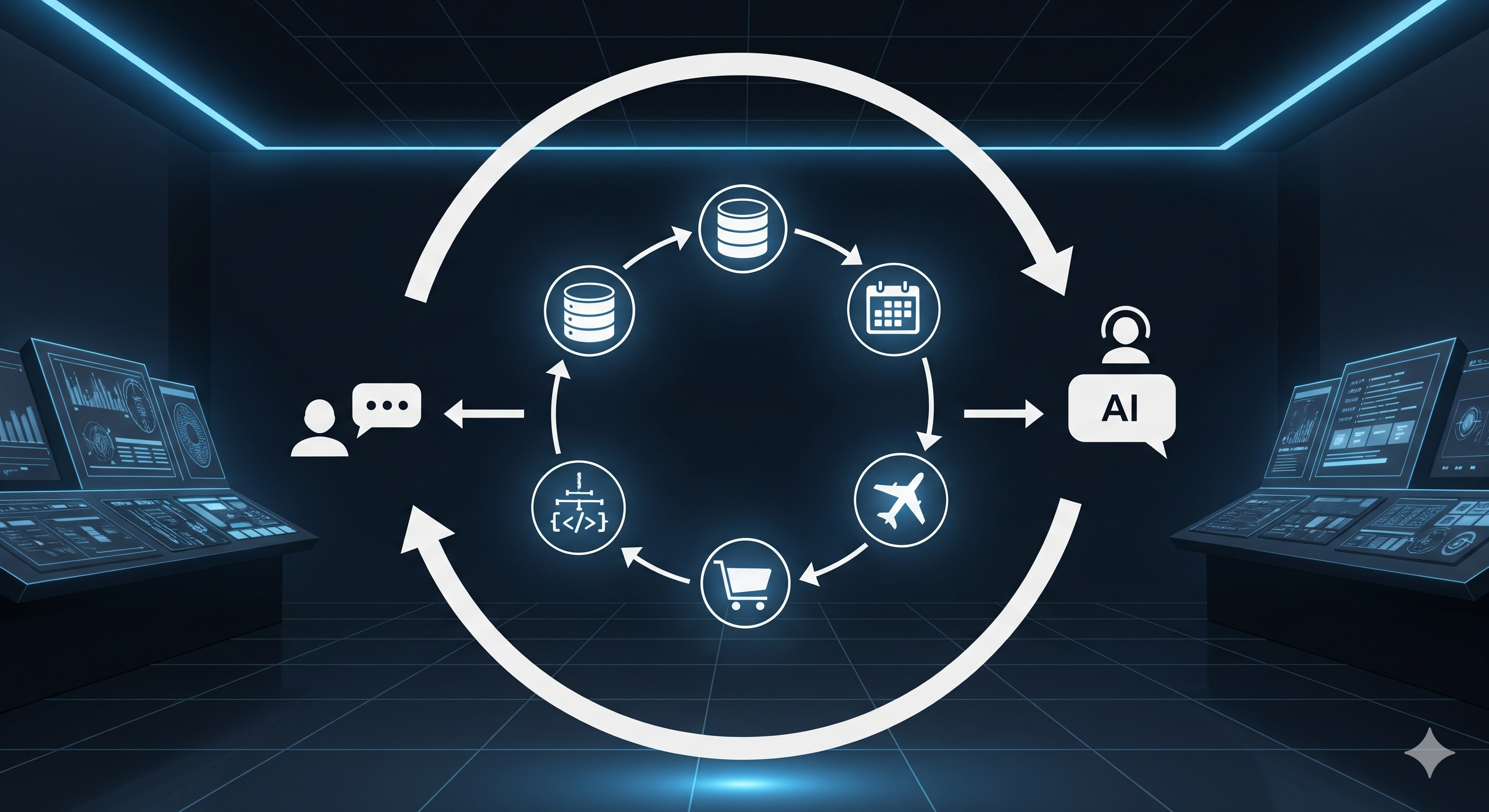

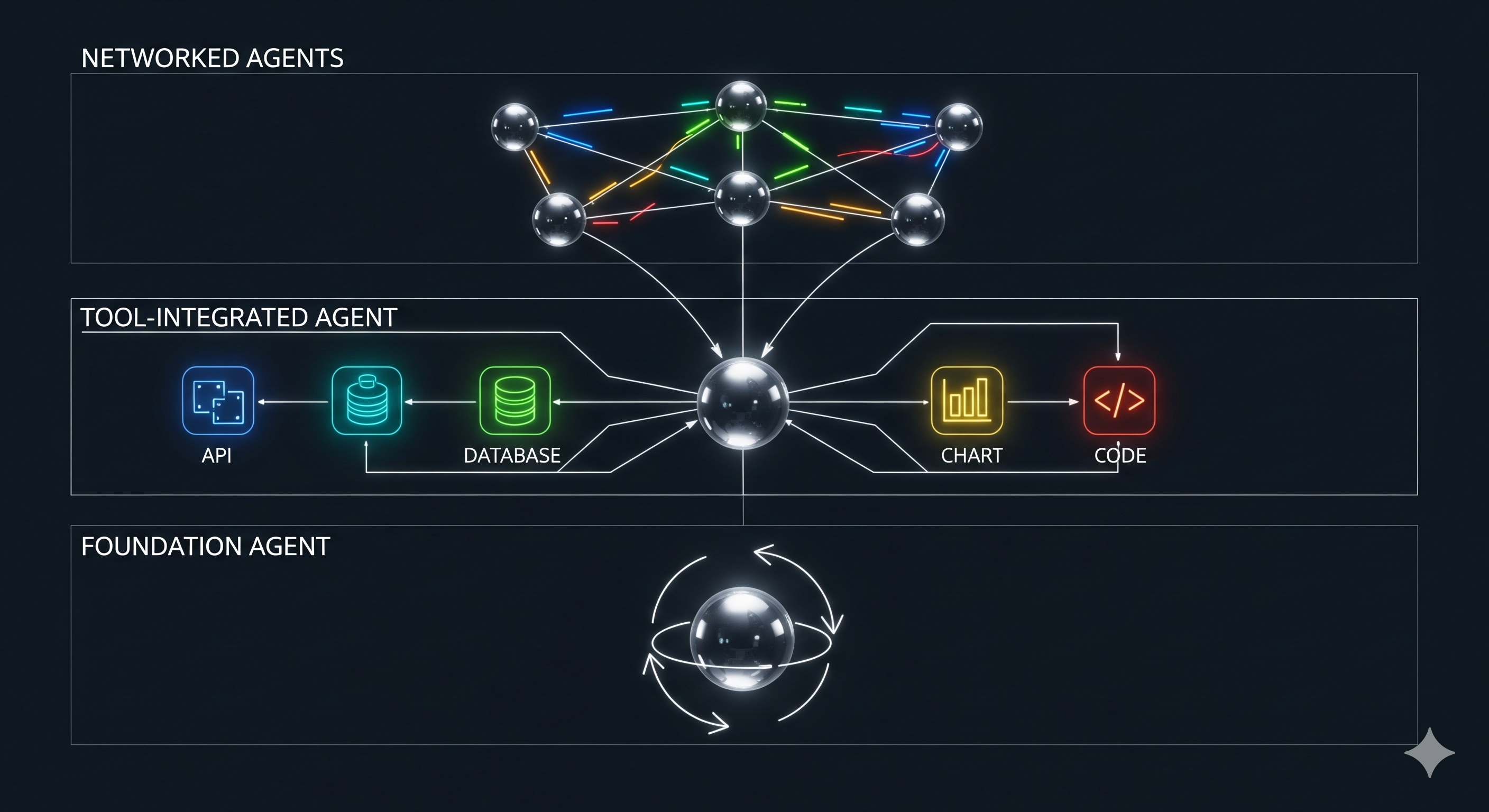

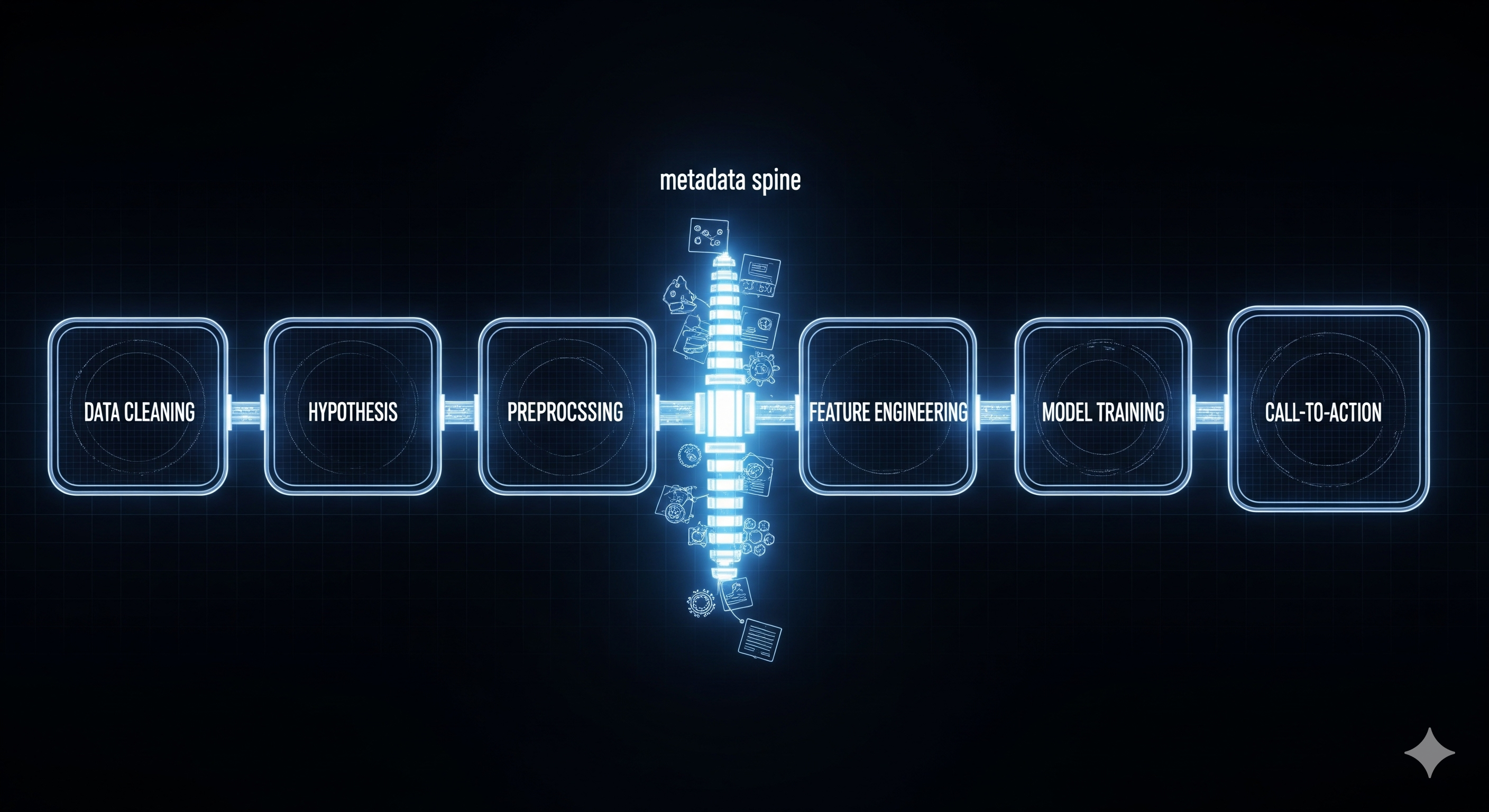

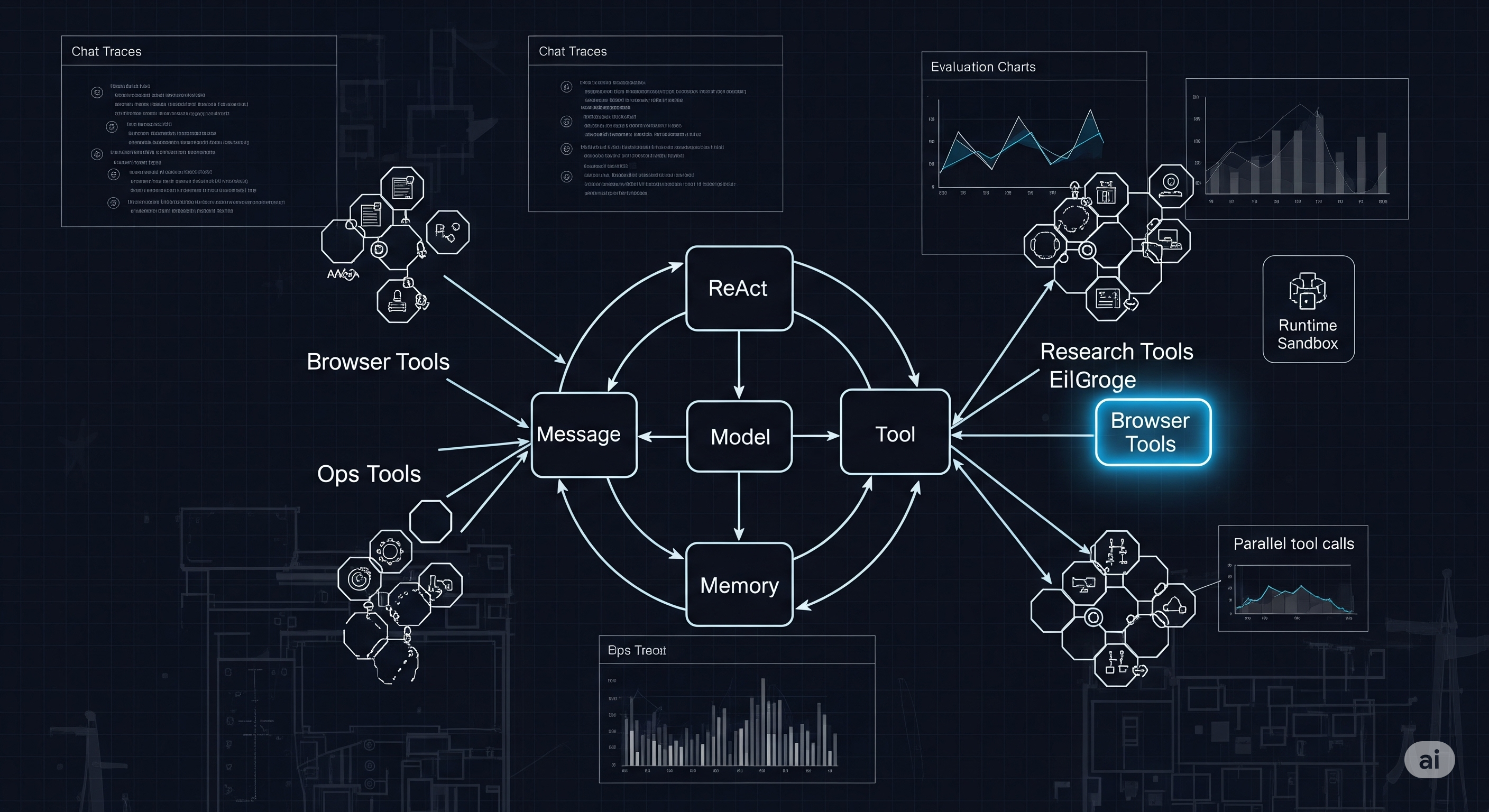

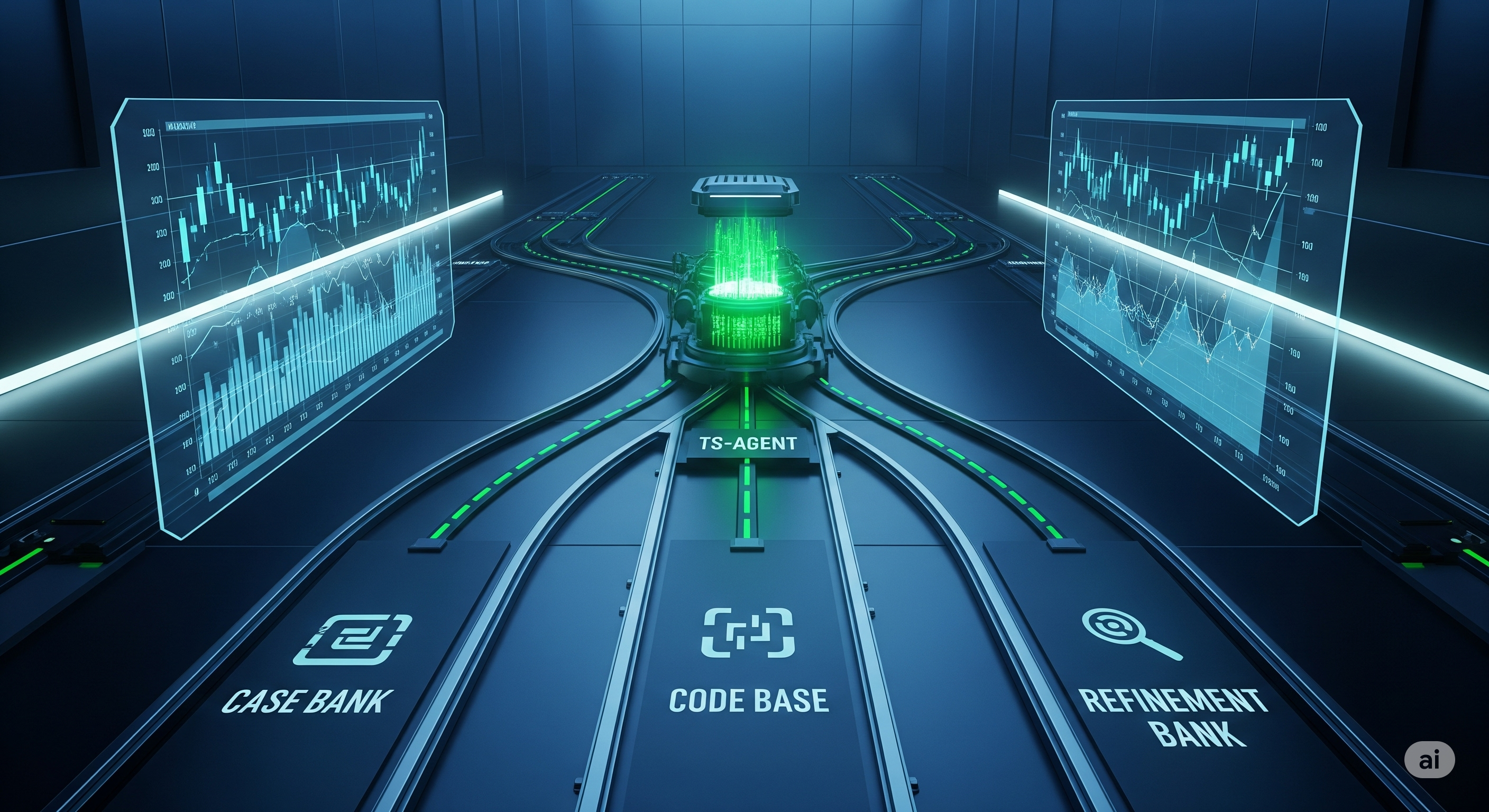

Thesis: In agentic AI, the rate-limiting step isn’t backprop; it’s rollouts. AWorld (from Inclusion AI) turns the crank on experience generation with a distributed executor that accelerates rollouts 14.6×, enabling practical reinforcement learning on complex environments like GAIA and yielding double-digit pass@1 gains on a 32B model.

TL;DR for operators

- The bottleneck has moved: On GAIA-style tasks, training time stays constant (about 144s per cycle) while interaction time dominates. AWorld cuts the rollout phase from 7,695s → 525s per cycle (total cycle 7,839s → 669s). That’s roughly a 91% reduction in total wall-clock time per cycle, and about 93% for the rollout phase alone.
- Performance follows scale of attempts: More attempts per task (up to 32 rollouts per question) materially raise pass@k across frontier models, which is evidence that success hinges on finding wins to learn from.
- Proof on GAIA: Fine-tuning plus RL with AWorld lifts Qwen3-32B from 21.59% → 32.23% pass@1 overall and from 4.08% → 16.33% on Level-3 (hardest) questions, competitive with or surpassing strong proprietary baselines at the top difficulty.

Why this matters for business

Most “AI agent” pilots stall in browsers, spreadsheets, and internal CRMs. The cause is not that the model can’t reason, but that the loop (tool use → observation → next step) runs too slowly to harvest enough positive trajectories for improvement. AWorld’s contribution is operational: treat rollouts as a first-class distributed workload (Kubernetes pods, sandboxed tools, message-bus protocols) so your agents can practice at scale and your RL can learn from the successes they produce. ...
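To make the numbers above concrete, here is a minimal Python sketch that recomputes the speedup and wall-clock reduction from the quoted cycle timings, and illustrates why more attempts per task raise pass@k. The timings come straight from the text. The `pass_at_k` function is an assumption: it uses the commonly cited unbiased estimator (1 − C(n−c, k) / C(n, k)), and the source does not say how AWorld's pass@k figures were actually computed. The function names and the example counts (32 attempts, 2 successes) are hypothetical and for illustration only.

```python
from math import comb

# Per-cycle timings quoted in the article, in seconds.
ROLLOUT_BEFORE, ROLLOUT_AFTER = 7695, 525
TOTAL_BEFORE, TOTAL_AFTER = 7839, 669


def speedup(before: float, after: float) -> float:
    """How many times faster the 'after' phase is than the 'before' phase."""
    return before / after


def reduction(before: float, after: float) -> float:
    """Fraction of wall-clock time removed, between 0 and 1."""
    return 1.0 - after / before


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k from n sampled attempts of which c succeeded.

    Assumption: this is the standard unbiased estimator, not necessarily
    the procedure used for the results discussed in the article.
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer failures than k draws: every size-k subset contains a success.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # Training time is whatever remains of the cycle after rollouts.
    print("training time before:", TOTAL_BEFORE - ROLLOUT_BEFORE, "s")
    print("training time after: ", TOTAL_AFTER - ROLLOUT_AFTER, "s")
    print(f"rollout speedup: {speedup(ROLLOUT_BEFORE, ROLLOUT_AFTER):.2f}x")
    print(f"total-cycle speedup: {speedup(TOTAL_BEFORE, TOTAL_AFTER):.2f}x")
    print(f"total-cycle reduction: {reduction(TOTAL_BEFORE, TOTAL_AFTER):.1%}")

    # Hypothetical hard task: 2 successes observed in 32 attempts.
    for k in (1, 4, 16, 32):
        print(f"pass@{k}: {pass_at_k(32, 2, k):.3f}")
```

The second half shows the operational point: a task the model solves only occasionally is close to invisible at one attempt, but is very likely to yield at least one successful trajectory once enough rollouts are affordable.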