Batch of Thought, Not Chain of Thought: Why LLMs Reason Better Together

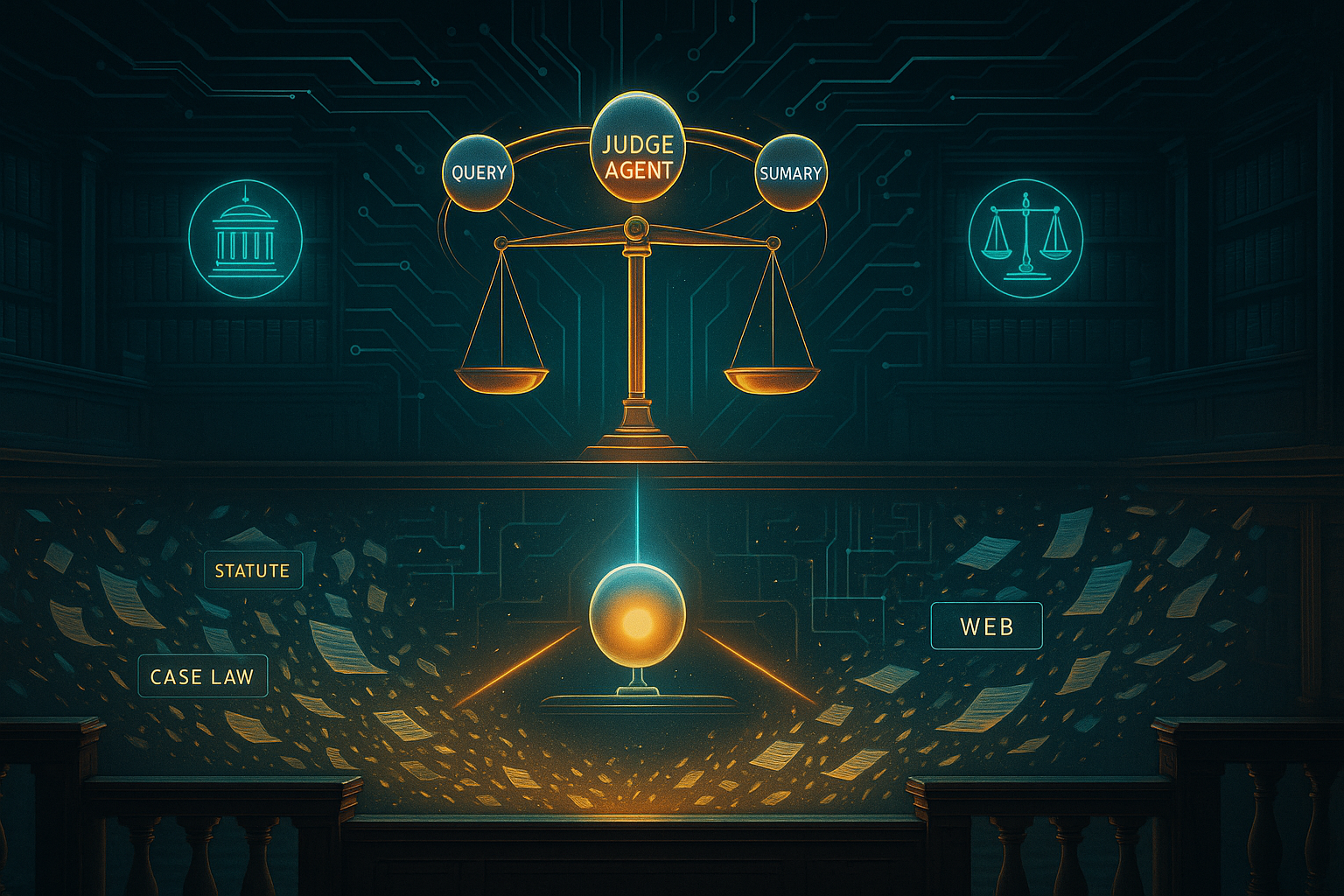

Opening: Why this matters now

Large Language Models have learned to think out loud. Unfortunately, they still think alone.

Most modern reasoning techniques (Chain-of-Thought, ReAct, self-reflection, debate) treat each query as a sealed container. The model reasons, critiques itself, revises, and moves on. This is computationally tidy. It is also statistically wasteful.

In real decision systems such as fraud detection, medical triage, and compliance review, we never evaluate one case in isolation. We compare. We look for outliers. We ask why one answer feels less convincing than the rest. ...