Skip or Split? How LLMs Can Make Old-School Planners Run Circles Around Complexity

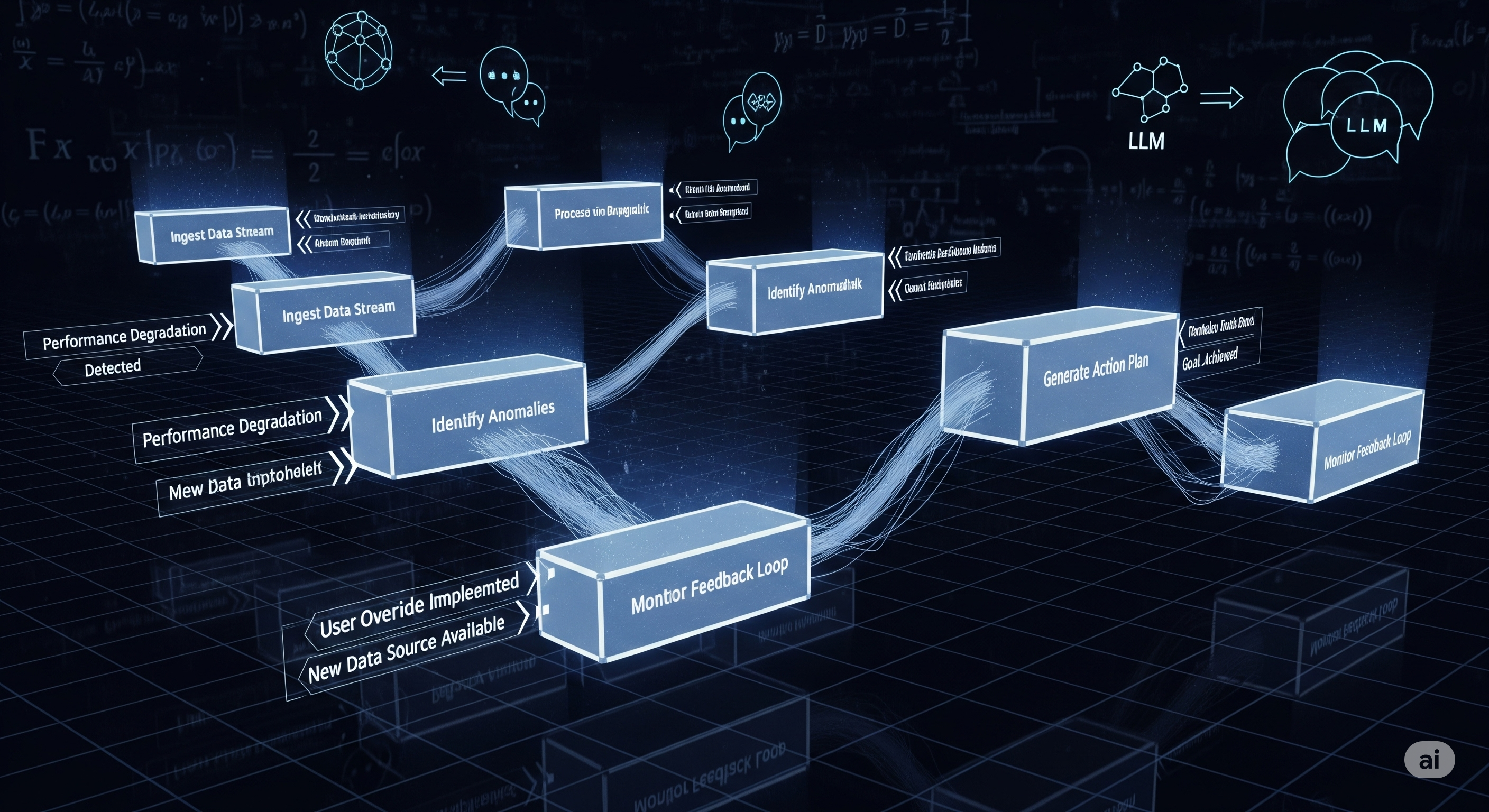

TL;DR: Classical planners break down as problems scale. LLMs can rescue them in two ways: (1) Inspire, where the LLM suggests the next action, or (2) Predict, where the LLM proposes an intermediate state and the search is split around it. On diverse benchmarks (Blocks, Logistics, Depot, Mystery), the Predict route generally solves more cases with fewer LLM calls, except when domain semantics are opaque. For enterprise automation, this points to a practical recipe: decompose → predict key waypoints → verify with a trusted solver, and fall back to “inspire” only when your domain model is thin. ...
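
To make the "predict and split" recipe concrete, here is a minimal Python sketch under loud assumptions. The grid world, the budgeted BFS standing in for a trusted classical solver, and the `midpoint_waypoint` stub standing in for an LLM call are all hypothetical illustrations, not the setup used in the benchmarks. The "inspire" fallback is left out for brevity.

```python
from collections import deque
from typing import Callable, List, Optional, Tuple

State = Tuple[int, int]
# Hypothetical toy domain: an agent moving on an unbounded grid.
MOVES = {"N": (0, 1), "S": (0, -1), "E": (1, 0), "W": (-1, 0)}


def bfs_plan(start: State, goal: State, budget: int) -> Optional[List[str]]:
    """Stand-in for a classical solver: BFS that gives up after `budget` expansions."""
    frontier = deque([(start, [])])
    seen = {start}
    expanded = 0
    while frontier:
        state, plan = frontier.popleft()
        if state == goal:
            return plan
        if expanded >= budget:
            return None  # search blew past the budget: the "cracks under scale" case
        expanded += 1
        for name, (dx, dy) in MOVES.items():
            nxt = (state[0] + dx, state[1] + dy)
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, plan + [name]))
    return None


def midpoint_waypoint(start: State, goal: State) -> State:
    """Assumption: a stub where a real system would ask an LLM for an intermediate state."""
    return ((start[0] + goal[0]) // 2, (start[1] + goal[1]) // 2)


def execute(start: State, plan: List[str]) -> State:
    """Verification step: replay the plan and report where it ends up."""
    x, y = start
    for name in plan:
        dx, dy = MOVES[name]
        x, y = x + dx, y + dy
    return (x, y)


def solve_split(
    start: State,
    goal: State,
    predict: Callable[[State, State], State],
    budget: int,
) -> Optional[List[str]]:
    """Try the solver directly; if it fails, split the search at a predicted waypoint."""
    plan = bfs_plan(start, goal, budget)
    if plan is not None:
        return plan
    waypoint = predict(start, goal)
    first = bfs_plan(start, waypoint, budget)
    second = bfs_plan(waypoint, goal, budget)
    if first is None or second is None:
        return None  # a fuller system could fall back to "inspire" here
    plan = first + second
    # Only trust the stitched plan if replaying it actually reaches the goal.
    return plan if execute(start, plan) == goal else None


if __name__ == "__main__":
    start, goal, budget = (0, 0), (6, 6), 100
    print("direct:", bfs_plan(start, goal, budget))
    print("split: ", solve_split(start, goal, midpoint_waypoint, budget))
```

In this toy setting the direct search exhausts its budget, while the two half-length searches each fit comfortably inside it. The design choice worth noting is that the predicted waypoint is never trusted on its own: the solver must reach it, and the stitched plan is replayed before it is accepted.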