Benchmarks with Benefits: What DeepScholar-Bench Really Measures

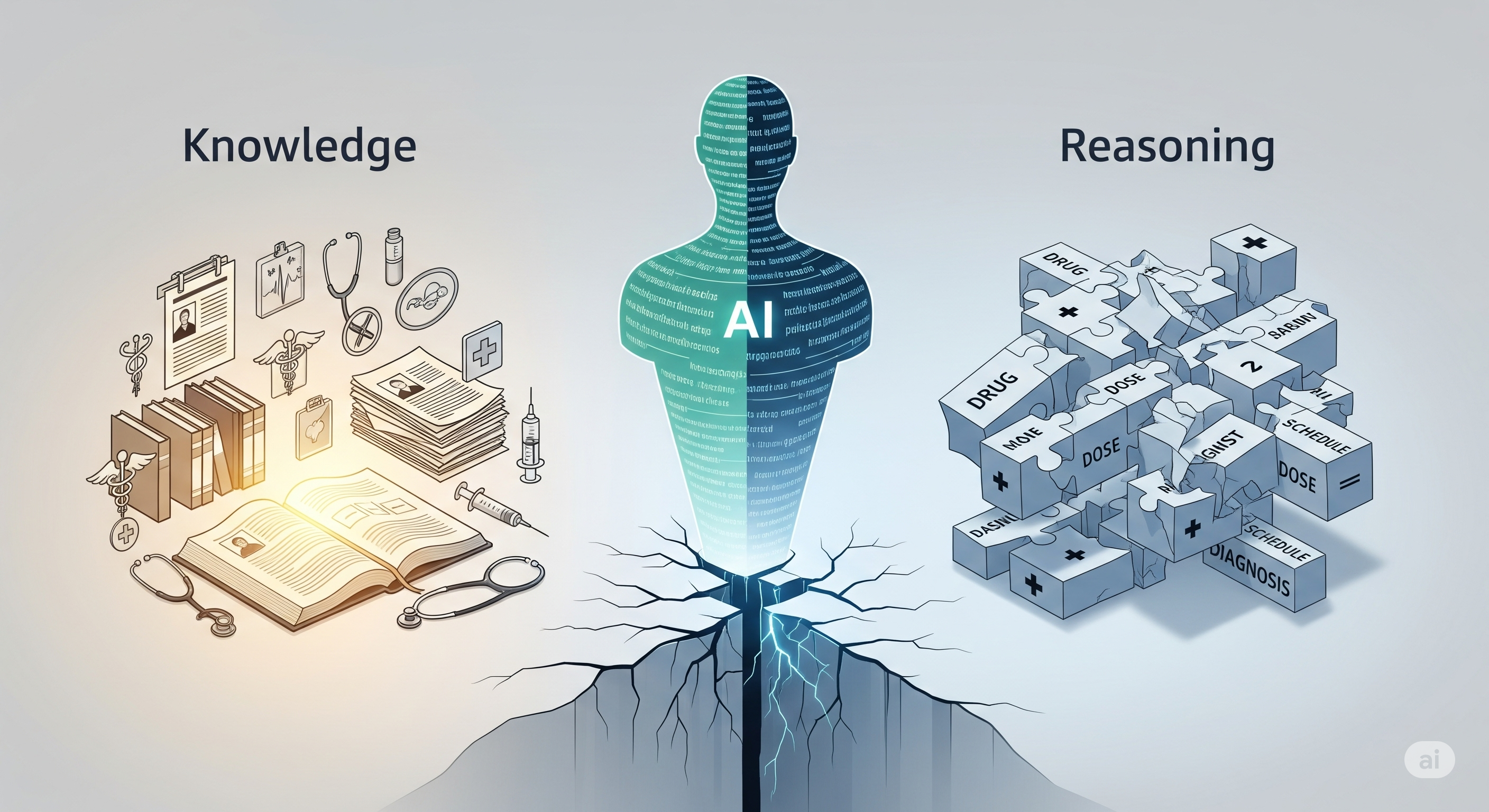

TL;DR: DeepScholar-Bench introduces a live (continuously refreshable) benchmark and a holistic automated evaluation for generative research synthesis. Its reference pipeline, DeepScholar-base, is simple yet competitive. The headline: today’s best systems organize text well but miss key facts, under-retrieve important sources, and fail verifiability at scale. That’s not a death knell; it’s a roadmap.

Why this matters for business readers

Enterprise “research copilots” promise to digest the live web, summarize options, and provide auditable citations. In practice, three gaps keep showing up: ...