Mirror, Signal, Maneuver: How 'Self' Labels Nudge LLM Cooperation

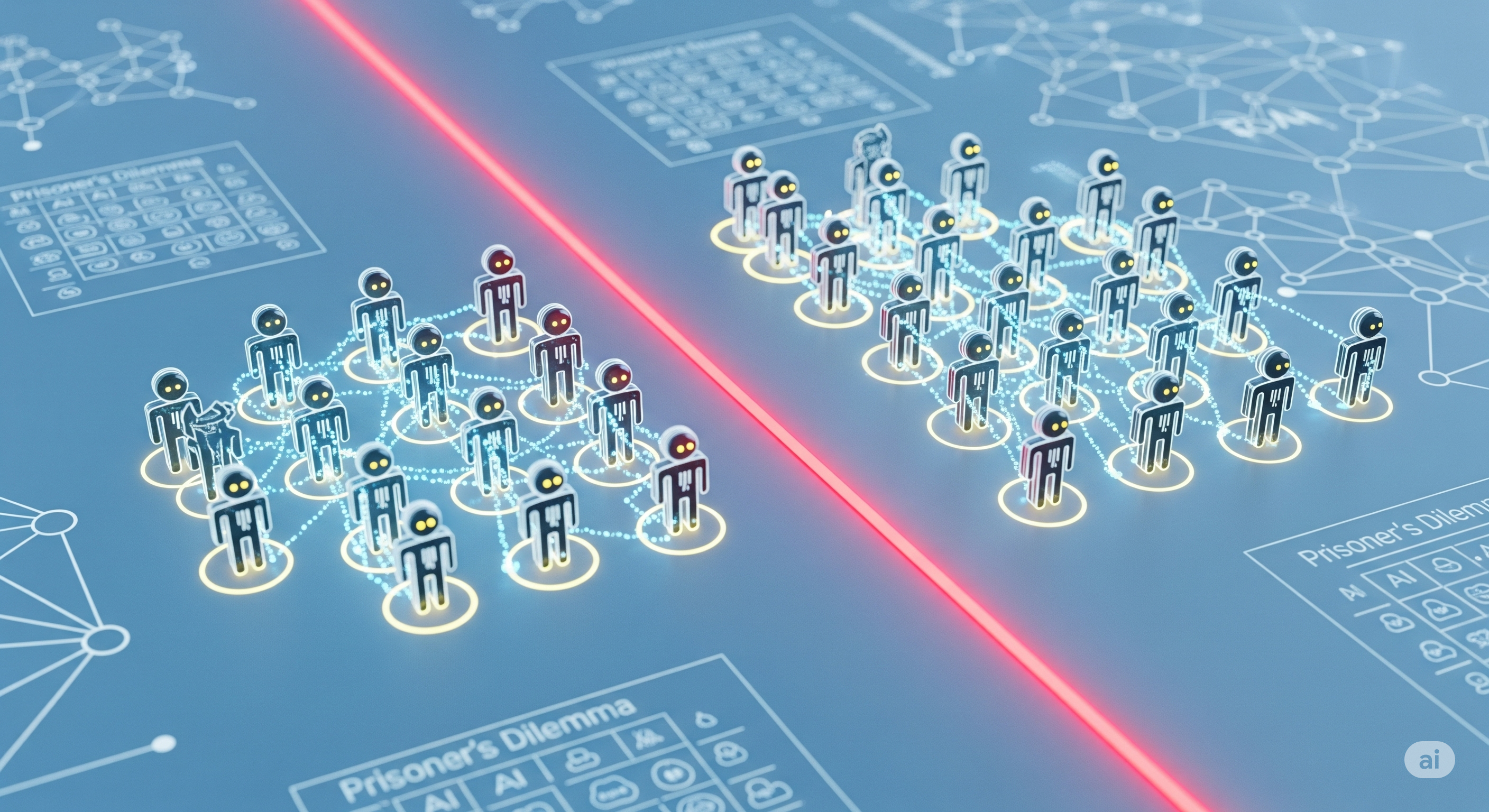

When an agent thinks it sees itself in the mirror, it doesn’t necessarily smile; sometimes it clutches its wallet.

TL;DR

In an iterated public‑goods game (20 rounds, 10 tokens per round, 1.6 multiplier), telling models they’re playing “another AI” rather than “themselves” shifts contributions by up to ~4 points in some settings.

The direction of the shift depends on the prompt persona. With collective prompts, “self” labels often reduced contributions. With selfish prompts, “self” labels sometimes increased matching and cooperation.

The effects persist when prompts are rephrased and when reasoning traces aren’t requested, and they also appear in four‑agent self‑play variants.

For enterprise multi‑agent AI, identity cues are levers. Manage them the way you manage feature flags: test them, monitor them, and standardize them.

What the authors tested (and why it’s clever)

Game mechanics. Two (and later four) LLM agents repeatedly choose how much of their endowment (0–10 tokens) to contribute to a common pool each round. The pool is multiplied by 1.6 and split evenly. With two agents, each contributed token returns only 0.8 tokens to the contributor, so keeping more is privately optimal; yet every contributed token adds 1.6 tokens to the group total, so coordinated contribution yields higher joint payoffs. ...
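To make that incentive concrete, here is a minimal Python sketch of the payoff rule, assuming the standard linear public‑goods formulation (each agent keeps its unspent tokens plus an equal share of the multiplied pool). The constants come from the article; the strategy helpers `always` and `matcher` and the harness `play` are hypothetical stand‑ins for LLM agents, not the authors’ code.

```python
from typing import Callable, List, Sequence

ENDOWMENT = 10    # tokens per agent per round (from the article)
MULTIPLIER = 1.6  # pool multiplier (from the article)
ROUNDS = 20       # rounds per game (from the article)

# Assumption: a strategy maps the partner's past contributions to this round's contribution.
Strategy = Callable[[List[int]], int]


def round_payoffs(contributions: Sequence[int],
                  endowment: int = ENDOWMENT,
                  multiplier: float = MULTIPLIER) -> List[float]:
    """One round of a linear public-goods game (assumed standard formulation).

    Each agent keeps what it did not contribute, plus an equal share
    of the multiplied pool.
    """
    if any(c < 0 or c > endowment for c in contributions):
        raise ValueError("contributions must lie in [0, endowment]")
    share = multiplier * sum(contributions) / len(contributions)
    return [endowment - c + share for c in contributions]


def always(amount: int) -> Strategy:
    """Hypothetical fixed strategy: contribute the same amount every round."""
    return lambda _history: amount


def matcher(opening: int = 10) -> Strategy:
    """Hypothetical 'matching' strategy: copy the partner's last contribution."""
    return lambda history: history[-1] if history else opening


def play(strategy_a: Strategy, strategy_b: Strategy,
         rounds: int = ROUNDS) -> List[float]:
    """Run a two-agent iterated game and return each agent's total payoff."""
    hist_a: List[int] = []
    hist_b: List[int] = []
    totals = [0.0, 0.0]
    for _ in range(rounds):
        a = strategy_a(hist_b)  # each agent sees only the partner's history
        b = strategy_b(hist_a)
        pay_a, pay_b = round_payoffs([a, b])
        totals[0] += pay_a
        totals[1] += pay_b
        hist_a.append(a)
        hist_b.append(b)
    return totals


if __name__ == "__main__":
    print(play(always(10), always(10)))  # mutual full contribution
    print(play(always(0), always(0)))    # mutual defection
    print(play(always(0), matcher()))    # defector vs. matcher
```

Under these assumptions, mutual full contribution earns 320 tokens each over 20 rounds versus 200 for mutual defection, yet within any single round a free rider out‑earns a full contributor (18 vs. 8 tokens). That tension between private and joint payoff is what the “self” versus “another AI” labels appear to push on.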