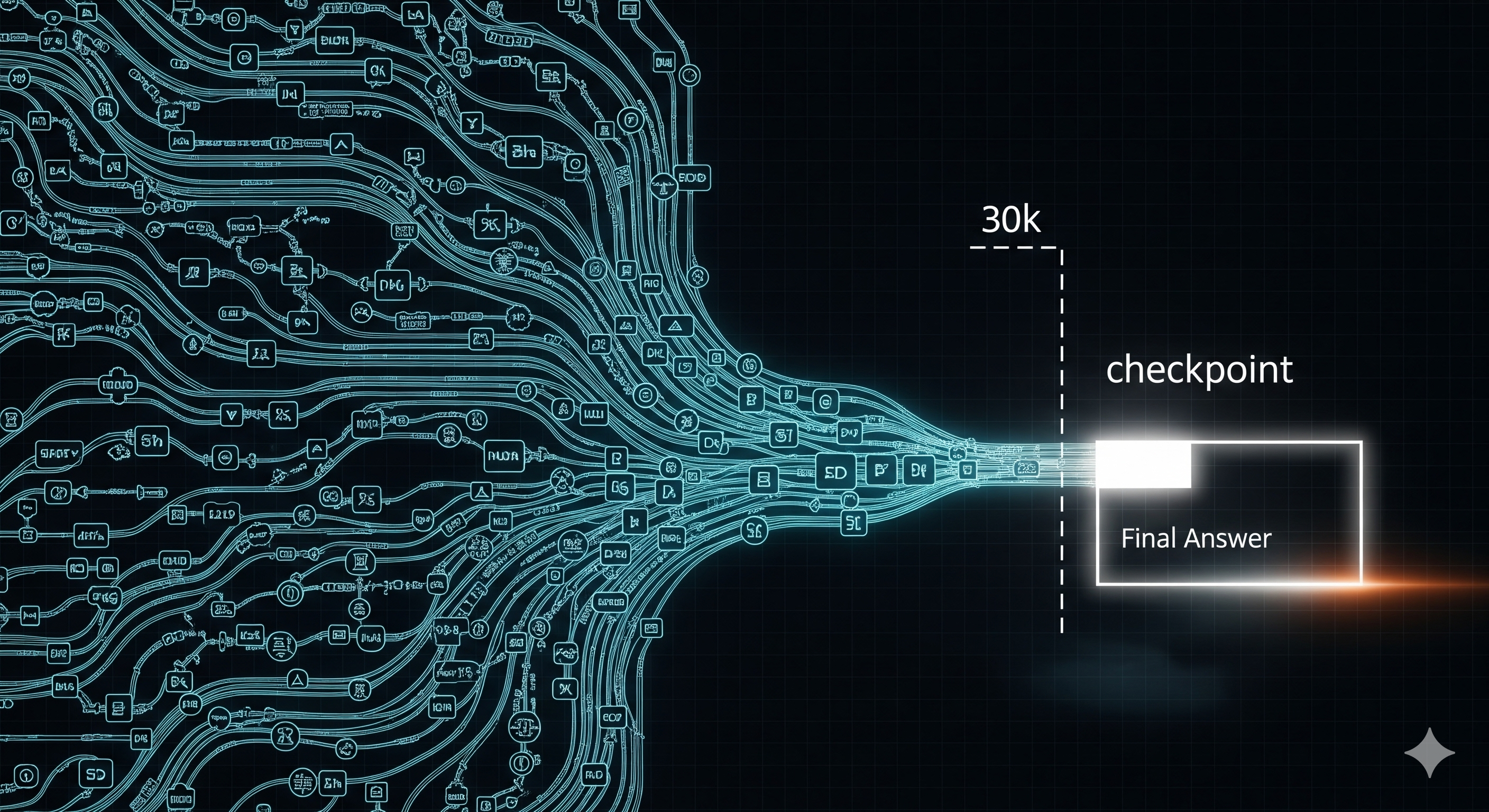

Stop at 30k: How Hermes 4 Turns Long Chains of Thought into Shorter Time‑to‑Value

TL;DR: Hermes 4 is an open‑weight “hybrid reasoner” that marries huge synthetic reasoning corpora with carefully engineered post‑training and evaluation. The headline for operators isn’t just benchmark wins; it’s control: control of format, schema, and especially when the model stops thinking. That last bit matters for latency, cost, and reliability.

Why this matters for business readers

If you’re piloting agentic or “think‑step” LLMs, two pains dominate:

- Unbounded reasoning length → blow‑ups in latency and context costs.
- Messy outputs → brittle downstream integrations.

Hermes 4 addresses both with: (a) rejection‑sampled, verifier‑backed reasoning traces to raise answer quality, and (b) explicit output‑format and schema adherence training plus length‑control fine‑tuning to bound variance. That combo is exactly what production teams need.

...
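To make "rejection‑sampled, verifier‑backed reasoning traces" concrete, here is a minimal Python sketch of the general idea: sample candidate traces, drop any that exceed a length budget, and keep the first one a verifier accepts. This is an illustration under stated assumptions, not Hermes 4's actual pipeline. The names `rejection_sample`, `toy_generate`, and `toy_verify` are hypothetical, the 30,000 default simply echoes the "30k" in the title, and whitespace word count stands in as a crude proxy for a real tokenizer.

```python
import itertools
from typing import Callable, Optional


def rejection_sample(
    generate: Callable[[str], str],
    verify: Callable[[str, str], bool],
    prompt: str,
    max_attempts: int = 8,
    max_trace_tokens: int = 30_000,  # assumed budget, echoing the "30k" in the title
) -> Optional[str]:
    """Return the first sampled trace that fits the budget and passes the verifier."""
    for _ in range(max_attempts):
        trace = generate(prompt)
        # Crude length check: whitespace-separated words as a stand-in for tokens.
        if len(trace.split()) > max_trace_tokens:
            continue
        if verify(prompt, trace):
            return trace
    return None  # every attempt was rejected


# Toy stand-ins (hypothetical): a "model" that cycles through guesses,
# and a verifier that checks the final answer against a known result.
_guesses = itertools.cycle(["5", "3", "4"])


def toy_generate(prompt: str) -> str:
    return f"Working through {prompt} step by step. ANSWER: {next(_guesses)}"


def toy_verify(prompt: str, trace: str) -> bool:
    return trace.rsplit("ANSWER:", 1)[-1].strip() == "4"


accepted = rejection_sample(toy_generate, toy_verify, "2 + 2")
```

In this toy run the first two samples fail verification and the third is kept. In a real setting the verifier might be a unit test, a math checker, or a schema validator, and the accepted traces would become training data rather than a direct response.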