Breaking the Tempo: How TempoBench Reframes AI’s Struggle with Time and Causality

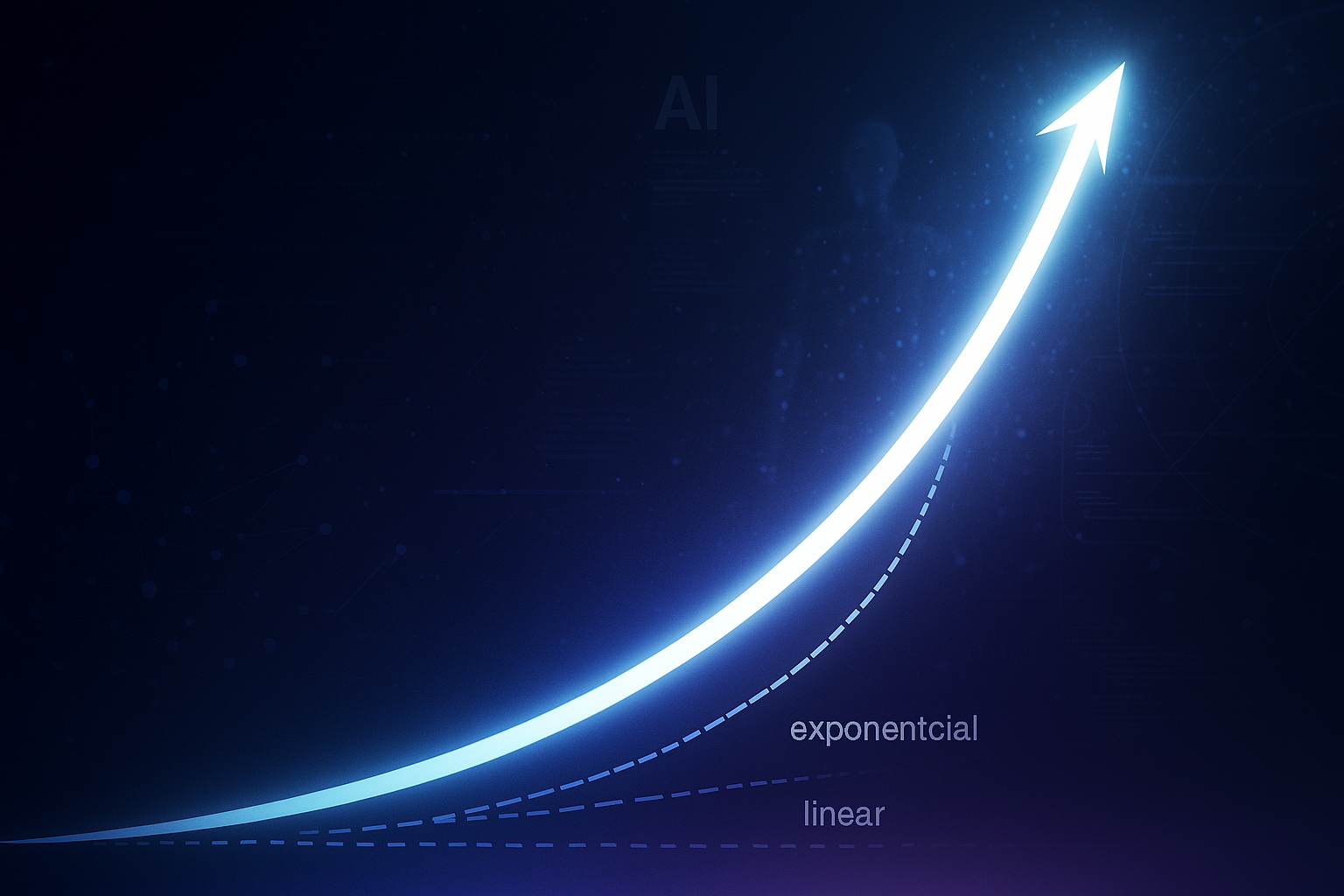

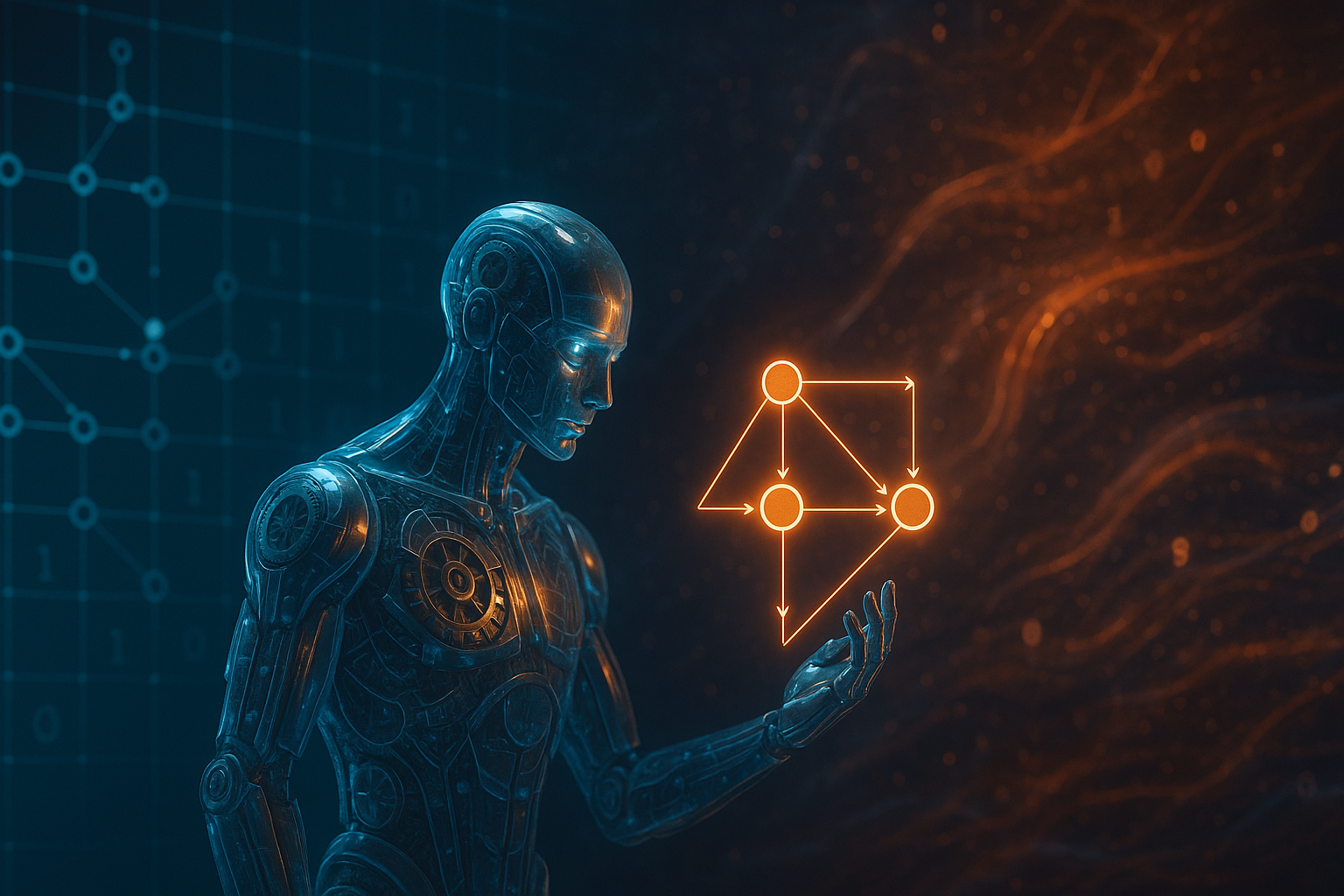

Opening: Why this matters now

The age of “smart” AI models has reached an uncomfortable truth: they can ace your math exam but fail your workflow. While frontier systems like GPT‑4o and Claude‑Sonnet solve increasingly complex symbolic puzzles, they stumble when asked to reason through time: to connect what happened, what’s happening, and what must happen next. In a world shifting toward autonomous agents and decision‑chain AI, this isn’t a minor bug; it’s a systemic limitation. ...