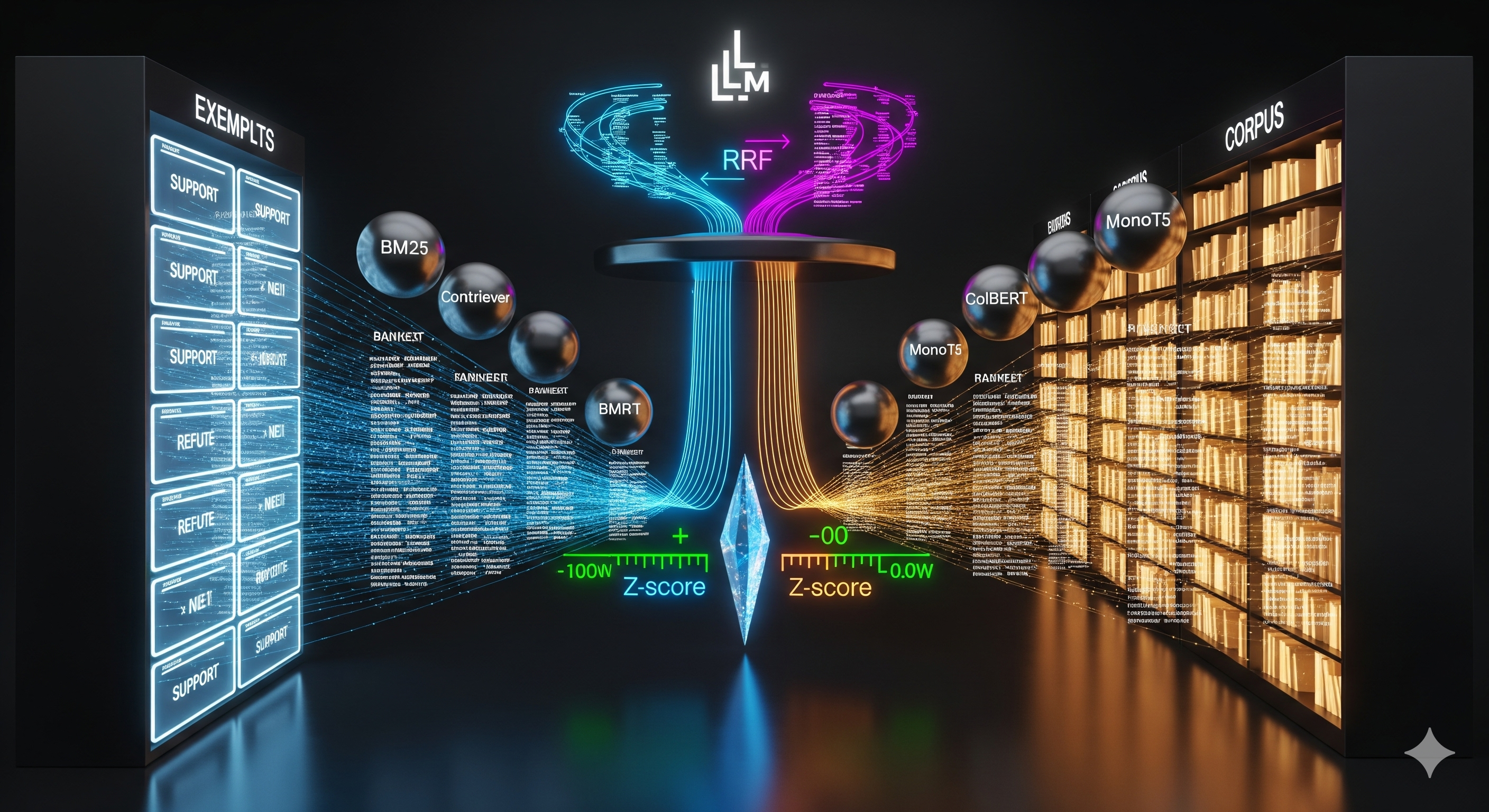

Retrieval‑augmented generation tends to pick a side: either lean on labeled exemplars (ICL/L‑RAG) that encode task semantics, or on unlabeled corpora (U‑RAG) that provide broad knowledge. HF‑RAG argues we shouldn’t choose. Instead, it proposes a hierarchical fusion: (1) fuse multiple rankers within each source, then (2) fuse across sources by putting scores on a common scale. The result is a simple, training‑free recipe that improves fact verification and, crucially, generalizes better out‑of‑domain.

TL;DR (for busy execs)

- Problem: L‑RAG and U‑RAG offer complementary value but their scores aren’t directly comparable; single‑ranker retrieval under‑utilizes signal diversity.

- Idea: Fuse rankers per source with Reciprocal Rank Fusion (RRF), then fuse across sources using z‑score standardization of fused scores.

- Why it works: RRF reduces single‑model brittleness; z‑scores remove source‑dependent score bias so evidence from labels and from the open corpus can be fairly compared.

- Results: Consistent macro‑F1 gains vs. best single ranker/source; stronger OOD performance (e.g., scientific claims) and stable performance across context sizes.

- So what: For production RAG, replace “pick one retriever + one source” with many‑rankers × two‑sources + standardize. It’s low‑risk, training‑free, and usually an upgrade.

What HF‑RAG actually does

Stage 1 — Intra‑source fusion (diversity before depth):

- For each source (Labeled FEVER train; Unlabeled Wikipedia), retrieve top‑k with multiple rankers: BM25 (sparse), Contriever (bi‑encoder), ColBERT (late interaction), MonoT5 (cross‑encoder reranker).

- Fuse the k‑lists into one per source using RRF, which sums reciprocals of ranks so documents that appear high across lists rise to the top.

Stage 2 — Inter‑source fusion (apples to apples):

- The two fused lists use different scoring scales.

- Put RRF scores from each source on a standard normal via z‑score $(s - \mu)/\sigma$, then merge and take top‑k for the final context fed to the LLM.

Why not just take a fixed mix (e.g., 70% unlabeled, 30% labeled)?

- HF‑RAG tried that too (LU‑RAG‑α). Z‑scores win because the best mix is claim‑dependent and the distributions shift by domain—standardization adapts automatically.

How this differs from popular alternatives

- RAG‑Fusion / query diversification: modifies the query to induce diverse lists, then merges. HF‑RAG focuses on ranker diversity and source comparability without changing queries.

- FiD / Fusion‑in‑Decoder: merges at decoding/training time. HF‑RAG is inference‑only—no new training loops or fine‑tuning.

Results that matter for practitioners

- Beats the “best single combo” bound. HF‑RAG outperforms the oracle that picks the best one of {4 rankers × 2 sources} on the test set, showing real complementarity.

- OOD generalization: Gains are largest on SciFact (scientific claims), a domain far from FEVER’s training style—evidence that z‑score cross‑source fusion counters overfitting to labeled exemplars.

- Stability to context size: Performance stays strong as you vary k (e.g., 5→20), reducing the tuning burden for production.

- Adaptive source proportion: On Climate‑FEVER (closer to FEVER), the final context leans more on labeled exemplars; on SciFact, it leans more on unlabeled Wikipedia—without any manual knob‑twiddling.

A compact scorecard

| Setting | Takeaway for teams |

|---|---|

| In‑domain (FEVER) | HF‑RAG > single‑ranker L‑/U‑RAG and > per‑source RRF; easy wins without training. |

| OOD (Climate‑FEVER) | Similar‑style claims: HF‑RAG taps labeled exemplars more heavily; sustained gains. |

| OOD (SciFact) | Style/lexicon shift: HF‑RAG relies more on unlabeled corpora; biggest relative gains. |

| Ablation (LU‑RAG‑α) | Fixed mix underperforms z‑scores—distribution shift makes fixed α brittle. |

Where this plugs into your stack

-

Keep your retrievers plural. Run at least one sparse (BM25), one dense (bi‑encoder), one late‑interaction (ColBERT), and a cross‑encoder reranker. Cost can be managed by using rerankers only on the union of top‑N from the fast models.

-

Maintain two indices:

- Labeled index (ICL memories): claim–evidence–label tuples (or exemplar snippets) from your supervised datasets.

- Unlabeled index (knowledge): your wiki/docsite/data‑lake.

-

Fuse per source with RRF, then z‑score across sources. Ship the top‑k merged items to the generator with a slim, role‑aware prompt: labeled items provide schema/label priors; unlabeled items provide factual support.

-

Log per‑request proportions. Track how many labeled vs unlabeled items land in final contexts; it’s a great drift signal.

Implementation notes & edge cases

- Score standardization: If a source’s fused list has <3 items, fall back to robust scaling (median/IQR) or z‑scores with a small‑sample variance correction; otherwise you risk extreme z‑values.

- De‑duplication across sources: Different sources may contain near‑duplicates; apply MinHash/SimHash to avoid wasting context tokens.

- Guardrails in fact‑checking: Because labeled exemplars carry labels (support/refute/NEI), ensure prompts separate evidence from verdict—make the LLM argue from evidence first, then decide.

- Latency budget: Parallelize retrievers; use async fan‑out → union → rerank; cache per‑claim uuids for hot queries.

Strategic take: what this implies for enterprise RAG

- Stop chasing one perfect retriever. Diversity + fusion is the new baseline; think ensemble IR.

- Treat labeled and unlabeled as complementary views. Labeled gives task semantics; unlabeled gives world context. Standardization is the missing handshake.

- Better OOD = lower maintenance. If your domains rotate (compliance today, science tomorrow), HF‑RAG’s adaptive mix is insurance against rebuild churn.

Cognaptus: Automate the Present, Incubate the Future