A personal health agent shouldn’t just chat about sleep; it should compute it, contextualize it, and coach you through changing it.

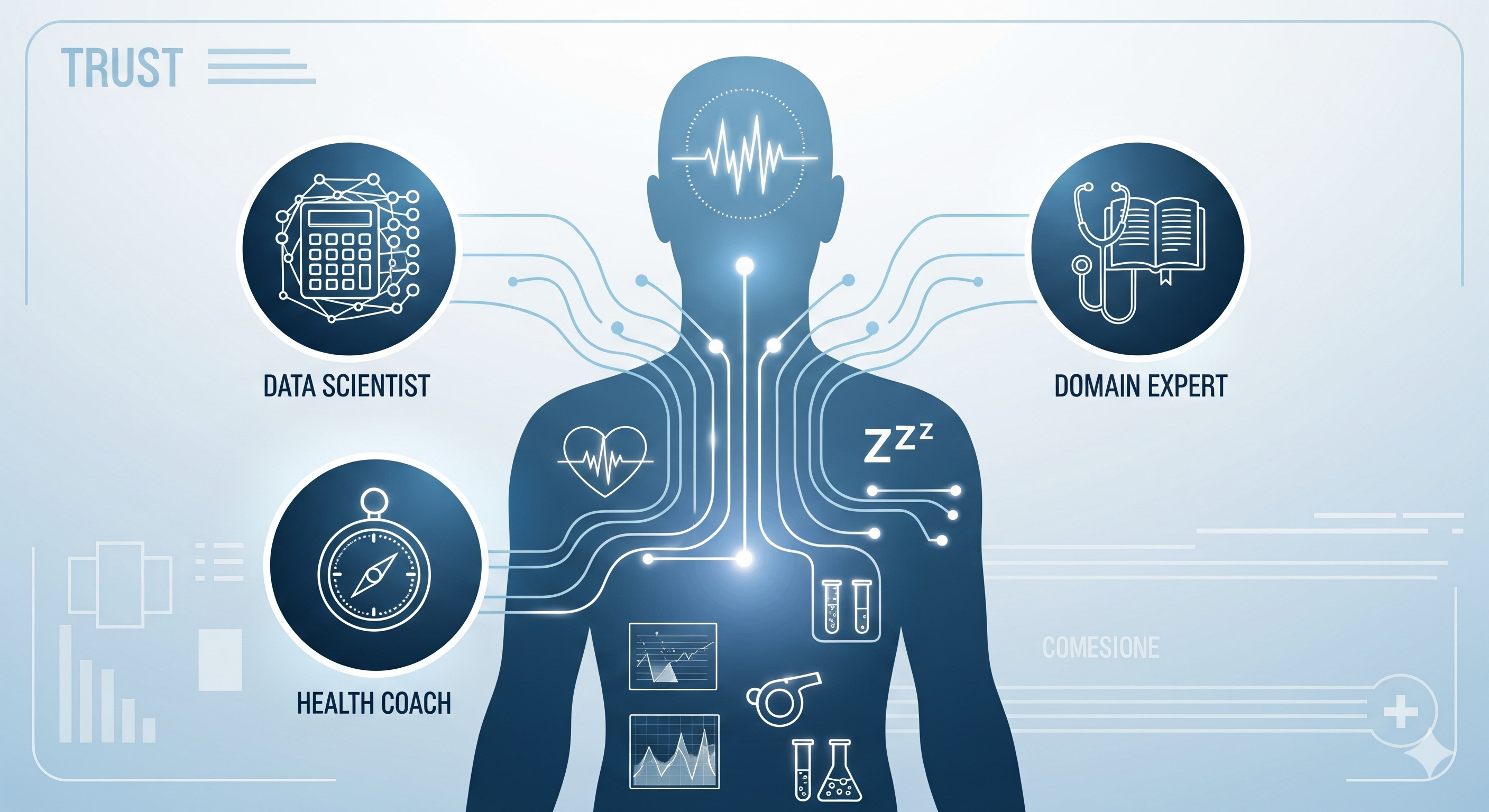

The paper we review today—The Anatomy of a Personal Health Agent (PHA)—is the most structured attempt I’ve seen to turn scattered “AI wellness tips” into a modular, evaluable system: three specialized sub‑agents (Data Science, Domain Expert, Health Coach) orchestrated to answer real consumer queries, grounded in multimodal data (wearables, surveys, labs). It reads like a playbook for product leaders who want evidence‑backed consumer health AI rather than vibe‑based advice.

Why this matters for operators

Most health chatbots fail for three reasons: (1) they hand‑wave the math on time‑series data, (2) they give generic medical boilerplate without your context, and (3) coaching collapses into pep talk. PHA proposes division of labor to fix each failure while keeping an orchestrator on top. For builders of AI‑powered wellness products, the architecture is not just cleaner—it’s measurably better across expert and end‑user studies.

The System in One Glance

| Role | Core job | Typical inputs | “North‑star” output |
|---|---|---|---|
| DS Agent | Turn open‑ended questions into statistical plans + code over personal and population time‑series | Daily summaries, activities, population percentiles | Numerically sound deltas, trends, and comparisons (e.g., “RHR down 4.2 bpm vs. your 3‑month baseline; likely linked to +18% steps.”) |
| DE Agent | Medical reasoning that blends personal context with vetted sources | Wearables + labs + history; literature; population stats | Accurate, personalized explanations with citations, cautions, and thresholds |
| HC Agent | Behavior change via structured conversation (MI‑style), goal‑setting, and follow‑through | DS + DE outputs; user goals and constraints | SMART plans, adherence nudges, progress reviews |
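
To make the DS Agent's north‑star output concrete, here is a minimal Python sketch of a baseline comparison. The function name `baseline_delta`, the 7‑day and 90‑day windows, and the sample series are assumptions for illustration; the paper's own analysis code is not shown in this review.

```python
from statistics import mean


def baseline_delta(daily_values, recent_days=7, baseline_days=90):
    """Return (recent mean - baseline mean) for a daily series.

    daily_values is ordered oldest to newest. The baseline is the
    `baseline_days` readings immediately before the recent window.
    Both window sizes are illustrative assumptions.
    """
    if len(daily_values) < recent_days + baseline_days:
        raise ValueError("not enough history for this comparison")
    recent = daily_values[-recent_days:]
    baseline = daily_values[-(recent_days + baseline_days):-recent_days]
    return mean(recent) - mean(baseline)


# Hypothetical resting heart rate: 90 days at 62 bpm, then 7 days at 58 bpm.
rhr = [62.0] * 90 + [58.0] * 7
delta = baseline_delta(rhr)
print(f"RHR change vs. baseline: {delta:+.1f} bpm")  # -4.0 bpm
```

Raising an error on short histories, rather than returning a number anyway, is one simple way to keep the comparison honest when a user has only just started wearing a device.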

Evidence that the split works

- DS Agent treats analysis as a two‑stage pipeline—plan → code—improving both planning quality and unit‑test pass rate versus a strong base model. Translation: fewer “charting by hallucination” moments, more reproducible numerics.

- DE Agent scores higher on specialist MCQs, better top‑k differential diagnosis, and—crucially—more trusted personalization when age/sex/comorbidities matter. That’s the difference between “Google with empathy” and risk‑aware guidance.

- HC Agent wins on coaching style and actionability, though it’s weaker on long‑horizon progress tracking—a realistic gap we’d address with calendar‑ and sensor‑linked adherence loops.

- Integrated PHA outperforms parallel or naïve multi‑agent variants in expert side‑by‑sides on overall quality and usefulness—evidence that orchestration (decomposition, routing, and synthesis) is not optional glue but a first‑class capability.
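
The plan → code split credited to the DS Agent can be sketched as follows. This is an illustration under assumptions, not the paper's implementation: the `Step` structure, the operation names, and `run_plan` are hypothetical. The idea it shows is that the plan is plain data that can be reviewed and tested before any number reaches the user.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Step:
    """One step of an analysis plan: an operation name plus arguments."""
    op: str
    args: dict


# Stage 1: the plan. In an agent this would be model-generated; here it is
# hand-written. It says WHAT to compute and performs no computation.
PLAN = [
    Step("drop_missing", {}),
    Step("last_n", {"n": 7}),
    Step("mean", {}),
]

# Stage 2: the code. Each operation is a small function that can be
# unit-tested on its own.
OPS = {
    "drop_missing": lambda xs, **_: [x for x in xs if x is not None],
    "last_n": lambda xs, n, **_: xs[-n:],
    "mean": lambda xs, **_: mean(xs),
}


def run_plan(plan, data):
    """Apply each planned step in order, rejecting unknown operations."""
    result = data
    for step in plan:
        if step.op not in OPS:
            raise ValueError(f"unknown operation: {step.op}")
        result = OPS[step.op](result, **step.args)
    return result


# Hypothetical nightly sleep hours, with two missing nights.
sleep_hours = [7.0, None, 6.5, 7.5, 8.0, None, 6.0, 7.0, 7.5, 6.5]
weekly_mean = run_plan(PLAN, sleep_hours)
print(f"Mean of last 7 recorded nights: {weekly_mean:.2f} h")  # 7.00 h
```

Because an unknown operation raises instead of being improvised, a malformed plan fails loudly at execution time rather than producing a plausible‑looking chart.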

What’s genuinely new

- User‑centered taxonomy → agent design. The team starts from real consumer queries (general knowledge, personal data insights, wellness advice, and symptom assessment), then maps capabilities to agent roles, avoiding the all‑too‑common “we built a tool, now find a use.”

- Real‑world data backbone. Evaluation leans on a large wearables + labs study (WEAR‑ME), with IRB oversight and explicit consent, letting agents reason across daily Fitbit signals, questionnaires, and blood biomarkers rather than toy CSVs. That’s a bar most product teams still don’t clear.

- Benchmarks that reflect the job. The DS Agent is graded on plans and code execution; the DE Agent on MCQs, DDx lists, personalization, and multimodal summaries reviewed by clinicians; the HC Agent on end‑user satisfaction and expert fidelity. It’s notable how often industry ships a coach without proving it can count or cite.

Product takeaways (build track)

- Make math explicit. Separate what to compute from how to compute (plan → code). Cache schemas, validate data sufficiency, and unit‑test transforms before charting. This alone lifts your reliability.

- Context is a requirement, not a feature. Strengthen retrieval for labs, meds, demographics, and environment; force every recommendation through a contraindication gate.

- Coach last, not first. Coaching that leads with cheerleading erodes trust. Orchestrate: DS → DE → HC, then surface only three actionable, time‑bound commitments.

- Progress is the missing KPI. The paper’s own limitation (progress tracking) is where product UX can shine: week‑over‑week reviews, relapse‑aware goal scaling, and “if‑this‑then‑that” plan edits tied to sensor data.
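
The takeaways above can be combined into one hedged sketch: check data sufficiency, compute, gate recommendations on contraindications, and only then coach, capped at three commitments. Everything named here (`MIN_DAYS`, `CONTRAINDICATIONS`, the candidate actions, and the three stage functions) is a hypothetical placeholder, and the gate rules are toy examples rather than the paper's logic.

```python
from statistics import mean

MIN_DAYS = 5          # assumed sufficiency threshold: recorded days per week
MAX_COMMITMENTS = 3   # surface at most three commitments

# Toy gate: action -> user conditions that block it (illustrative only).
CONTRAINDICATIONS = {
    "high-intensity intervals": {"uncontrolled hypertension"},
    "late-evening workout": {"insomnia"},
}


def ds_stage(steps_this_week):
    """Compute: mean daily steps, or None if too few days were recorded."""
    recorded = [s for s in steps_this_week if s is not None]
    if len(recorded) < MIN_DAYS:
        return None
    return mean(recorded)


def de_stage(candidate_actions, user_conditions):
    """Context: drop any action blocked by one of the user's conditions."""
    return [
        action for action in candidate_actions
        if not (CONTRAINDICATIONS.get(action, set()) & user_conditions)
    ]


def hc_stage(safe_actions):
    """Coach: keep the first few actions and attach a review date."""
    return [f"{a}, reviewed in 7 days" for a in safe_actions[:MAX_COMMITMENTS]]


def respond(steps_this_week, candidate_actions, user_conditions):
    """Orchestrate DS -> DE -> HC and return the number plus the plan."""
    avg_steps = ds_stage(steps_this_week)
    if avg_steps is None:
        ask = f"record at least {MIN_DAYS} days so trends can be computed"
        return {"avg_steps": None, "commitments": [ask]}
    safe = de_stage(candidate_actions, user_conditions)
    return {"avg_steps": avg_steps, "commitments": hc_stage(safe)}


result = respond(
    steps_this_week=[4200, 5100, None, 3900, 6100, 4800, 5000],
    candidate_actions=[
        "10-minute walk after lunch",
        "late-evening workout",
        "fixed wake time",
        "stretch before bed",
        "high-intensity intervals",
    ],
    user_conditions={"insomnia"},
)
print(result["avg_steps"])
for commitment in result["commitments"]:
    print("-", commitment)
```

Keeping each stage as a separate function is what makes the handoffs auditable: the gate can be tested with a list of conditions and actions without running any coaching logic at all.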

Strategic lens for incumbents vs. upstarts

- EHR vendors / payers can embed PHA as a member‑facing layer that triages lifestyle interventions before expensive care pathways. The DE Agent’s emphasis on safety and citations is regulator‑friendly.

- Wearable OEMs gain defensibility by merging population percentiles + personal trends into proactive coaching. Think “closed‑loop” sleep/cardio programs with real adherence outcomes.

- Startups should resist building “one big agent.” Specialize stacks per role, then orchestrate. It’s easier to pass audits and run A/B tests when competencies are separately testable.

What I still want to see

- Costed orchestration. Token, latency, and memory budgets per role; graceful degradation when edge connectivity is poor.

- Safety under distribution shift. How do agents behave when wearables drop days, labs arrive late, or comorbidity priors change?

- Causal coaching. Can HC estimate counterfactual adherence effects (e.g., bedtime shift vs. caffeine cut) using DS‑estimated uplift models?

- Human‑loop design debt. Clear escalation patterns to clinicians; red‑flag libraries that are locality‑aware; and post‑hoc audit summaries a nurse can scan in two minutes.
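
As one way to picture the first wish, costed orchestration, here is a small Python sketch of per‑role budgets with graceful degradation. The cost figures, the `RoleBudget` type, and the rule that coaching is the first role to be skipped are all assumptions made for this example; the paper reports no such numbers in this review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoleBudget:
    """Assumed per-call cost of one agent role (numbers are made up)."""
    name: str
    tokens: int
    latency_ms: int
    required: bool  # required roles always run, even over budget


ROLES = [
    RoleBudget("data_science", tokens=3000, latency_ms=1200, required=True),
    RoleBudget("domain_expert", tokens=4000, latency_ms=1500, required=True),
    RoleBudget("health_coach", tokens=2000, latency_ms=800, required=False),
]


def select_roles(roles, max_tokens, max_latency_ms):
    """Pick roles in order, skipping optional ones that would exceed a budget.

    Latency is summed because the roles are assumed to run sequentially.
    """
    chosen, tokens, latency = [], 0, 0
    for role in roles:
        fits = (tokens + role.tokens <= max_tokens
                and latency + role.latency_ms <= max_latency_ms)
        if role.required or fits:
            chosen.append(role.name)
            tokens += role.tokens
            latency += role.latency_ms
    return chosen, tokens, latency


full = select_roles(ROLES, max_tokens=10000, max_latency_ms=4000)
degraded = select_roles(ROLES, max_tokens=8000, max_latency_ms=4000)
print(full)      # all three roles fit: 9000 tokens, 3500 ms
print(degraded)  # coaching is skipped: 7000 tokens, 2700 ms
```

Under the tighter budget the user still gets a computed, context‑checked answer and simply loses the coaching layer for that turn, which is one reading of what "graceful" could mean here.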

Bottom line

PHA is a serious blueprint: modular roles, real multimodal data, and evaluation that looks like the job. For builders, the lesson is simple—separate compute, context, and coaching, then make the handoffs auditable. That’s how consumer health AI graduates from vibes to vitals.

Cognaptus: Automate the Present, Incubate the Future.