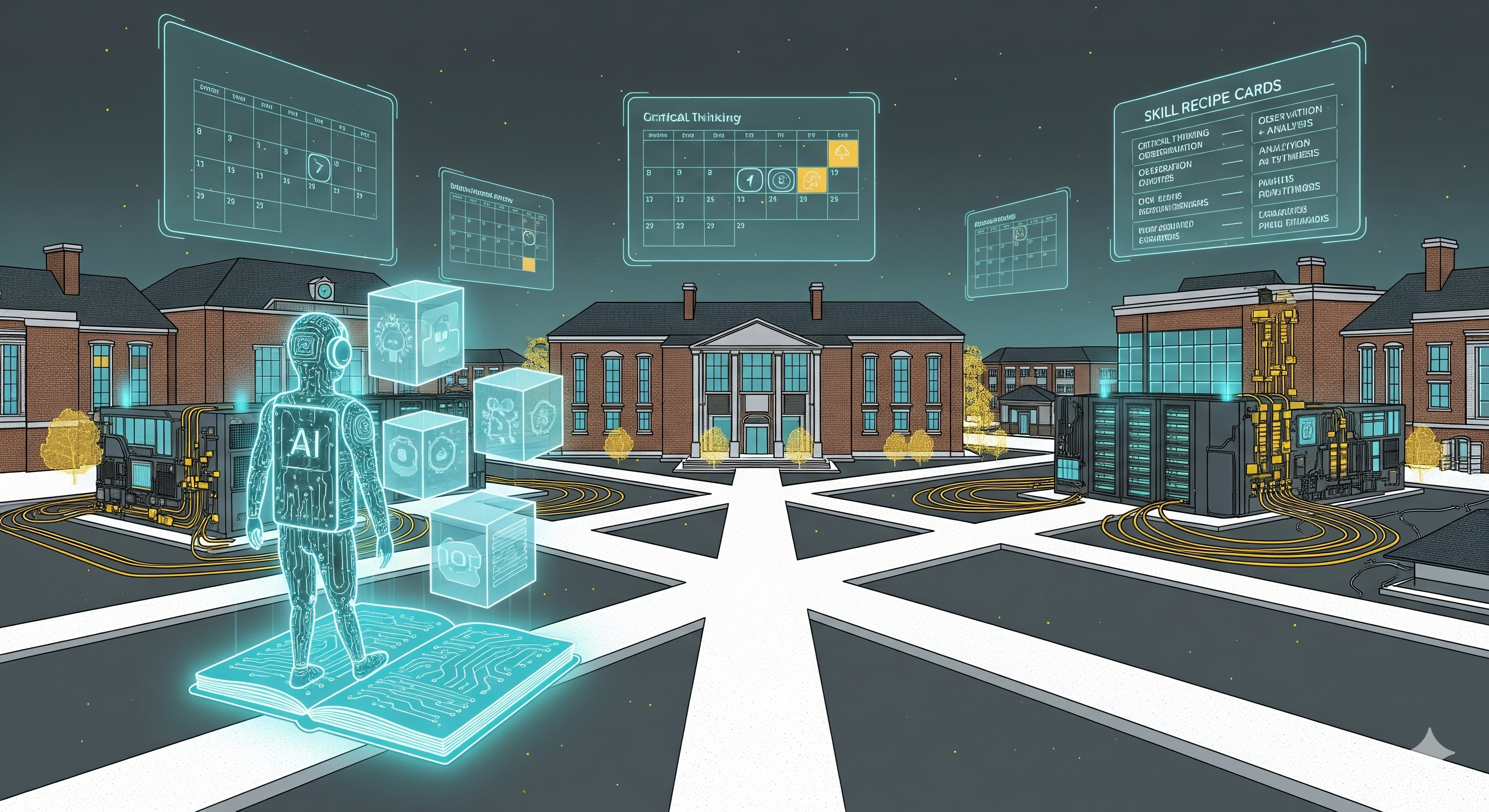

Large models are passing tests, but they’re not yet passing life. A new paper proposes Experience‑driven Lifelong Learning (ELL) and introduces StuLife, a collegiate “life sim” that forces agents to remember, reuse, and self‑start across weeks of interdependent tasks. The punchline: today’s best models stumble, not because they’re too small, but because they don’t live with their own memories, skills, and goals.

Why this matters now

Enterprise buyers don’t want parlor tricks; they want agents that schedule, follow through, and improve. The current stack—stateless calls, long prompts—fakes continuity. ELL reframes the problem: build agents that accumulate experience, organize it as memory + skills, and act proactively when the clock or context demands it. This aligns with what we’ve seen in real deployments: token context ≠ memory; chain‑of‑thought ≠ skill; cron jobs ≠ initiative.

The ELL blueprint (decoded for builders)

ELL is a loop: interact → distill → refine → validate. Concretely, you need two first‑class artifacts:

- Memory (episodic + semantic):
  - Trajectory memory (what actually happened)
  - Declarative knowledge (facts, rules, requirements)
  - Structural knowledge (relations, prerequisites, graphs)
- Skills (procedural + meta):
  - Procedures (how to do X end‑to‑end)
  - Meta‑knowledge (how to choose/compose skills, plan, learn)
  - Heuristics (rules‑of‑thumb under uncertainty)

On top, run CRUD‑style upkeep for knowledge: Add new memories/skills, Update when evidence changes, Delete stale entries, Combine near‑duplicates. This keeps the agent’s brain tidy enough to be useful.
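To make the four operations concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration rather than the paper's implementation: the `KnowledgeStore` and `Entry` names, the in-memory dict, and the use of string similarity (with a 0.9 threshold) to decide what counts as a near-duplicate.

```python
from dataclasses import dataclass, field
from difflib import SequenceMatcher


@dataclass
class Entry:
    key: str
    text: str
    sources: list[str] = field(default_factory=list)  # evidence trail


class KnowledgeStore:
    """Toy in-memory store (hypothetical); a real one would persist and index."""

    def __init__(self) -> None:
        self.entries: dict[str, Entry] = {}

    def add(self, key: str, text: str, source: str) -> None:
        self.entries[key] = Entry(key, text, [source])

    def update(self, key: str, text: str, source: str) -> None:
        # Overwrite the text but keep a record of where the change came from.
        entry = self.entries[key]
        entry.text = text
        entry.sources.append(source)

    def delete(self, key: str) -> None:
        self.entries.pop(key, None)

    def combine(self, threshold: float = 0.9) -> int:
        """Merge near-duplicate entries; return how many were merged away."""
        merged = 0
        keys = list(self.entries)
        for i, a in enumerate(keys):
            for b in keys[i + 1:]:
                if a not in self.entries or b not in self.entries:
                    continue  # one side was already merged away
                ratio = SequenceMatcher(
                    None,
                    self.entries[a].text.lower(),
                    self.entries[b].text.lower(),
                ).ratio()
                if ratio >= threshold:
                    # Keep the earlier entry and fold in the other's evidence.
                    self.entries[a].sources.extend(self.entries[b].sources)
                    del self.entries[b]
                    merged += 1
        return merged
```

String similarity is the crudest possible duplicate detector; embedding distance or an LLM judge would be a natural swap once the store grows, but the interface stays the same.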

From prompt‑fitting to knowledge upkeep

A practical rewrite of the ELL loop for product teams:

- Interaction: every task writes a trajectory (inputs, tools, outputs, timestamps, rationales).
- Abstraction: compress trajectories into:
  - time‑indexed facts (retrieval‑ready),
  - graph edges (who/what depends on what),
  - promotable skills (recipes with preconditions, steps, post‑conditions).
- Refinement: schedule a background janitor that prunes, merges, and re‑scores memories/skills by freshness and utility.
- Validation: track whether prior memories/skills actually improve outcomes on new tasks; demote or retrain the ones that don’t.
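The refinement step is the easiest to hand-wave, so here is one way the pruning and re-scoring could look. This is a sketch under stated assumptions: the `MemoryItem` fields, the 14-day half-life, the smoothed success rate used as "utility", and the 0.1 cutoff are all hypothetical choices, not values from the paper.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class MemoryItem:
    text: str
    last_used: datetime
    uses: int    # times the item was retrieved
    helped: int  # times a retrieval preceded a successful task


def score(item: MemoryItem, now: datetime, half_life_days: float = 14.0) -> float:
    """Freshness (exponential decay) times utility (smoothed success rate)."""
    age_days = (now - item.last_used).total_seconds() / 86400
    freshness = 0.5 ** (age_days / half_life_days)
    utility = (item.helped + 1) / (item.uses + 2)  # Laplace smoothing
    return freshness * utility


def janitor(items: list[MemoryItem], now: datetime, keep_above: float = 0.1) -> list[MemoryItem]:
    """Drop items scoring below the cutoff; return the rest, best first."""
    scored = [(score(item, now), item) for item in items]
    kept = [pair for pair in scored if pair[0] >= keep_above]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in kept]
```

Passing `now` explicitly keeps the job deterministic and easy to replay against historical logs, which matters when you later want to check whether a pruning policy would have thrown away something useful.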

StuLife: a stress test for real‑world agency

StuLife simulates a student’s term with 1,284 tasks across 10 scenarios in three modules:

| Module | Scenarios (examples) | #Tasks | #LTM‑heavy tasks | #Proactive tasks |
|---|---|---|---|---|
| In‑Class | Regulations, Core Courses (8 tracks) | 486 | 152 | 486 |
| Daily Campus | Map/explore, course selection, planning, advisor outreach, library use, clubs | 637 | 242 | 142 |
| Exams | Midterm (in‑class logistics) & Final (online) | 160 | 160 | 0 |
| Total | | 1,284 | 554 | 628 |

Two design choices make it brutal (and realistic): interdependence (early choices constrain later options) and time (agents must show up for scheduled events). This flips the agent from reactive Q&A to prospective memory + initiative.
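The time constraint is worth seeing in code, because it is the part stateless stacks skip entirely. The sketch below is an illustration only: `Event`, `due_events`, and `tick` are hypothetical names, and the one-hour lookahead is an arbitrary choice. It shows the smallest loop that lets an agent notice an upcoming commitment without being prompted.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Event:
    title: str
    start: datetime
    handled: bool = False


def due_events(calendar: list[Event], now: datetime,
               lookahead: timedelta = timedelta(hours=1)) -> list[Event]:
    """Unhandled events that start within the lookahead window, soonest first."""
    return sorted(
        (e for e in calendar if not e.handled and now <= e.start <= now + lookahead),
        key=lambda e: e.start,
    )


def tick(calendar: list[Event], now: datetime) -> list[str]:
    """One watcher pass: draft an action per due event and mark it handled."""
    drafts = []
    for event in due_events(calendar, now):
        drafts.append(f"Prepare for '{event.title}' at {event.start:%H:%M}")
        event.handled = True  # avoid drafting the same reminder twice
    return drafts
```

In practice `tick` would be called by a scheduler and the drafts handed to the agent as self-generated tasks; the point is that the trigger comes from the clock, not from a user message.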

The uncomfortable results

Even frontier models falter when memory and initiative are required. Under a stateless baseline (no persistent memory unless the agent explicitly saves and retrieves), top systems barely reach success rates in the low double digits. A composite StuGPA (weighted by exams, attendance, daily life) makes the pain visible. The why is instructive: models don’t routinely check calendars, surface prior commitments, or recall last week’s facts unless scaffolded.

Takeaway: Scale helps, structure matters more. Without memory, skills, and self‑starting loops, you’re shipping a strong autocomplete, not an agent.

Build sheet: turning ELL into product wins

Below is a mapping you can implement this week—no retraining required.

| ELL Principle | What to ship | Minimal design | Upgrade path |
|---|---|---|---|
| From Context → Memory | Append‑only Episodic Log + Facts DB | JSONL per task; store Q→A, tools, results; extract facts nightly | Vector/RAG over typed memories + TTL/decay + confidence |
| From Imitation → Skills | Skill recipes with preconditions & post‑checks | YAML recipes (e.g., “Register a course”, “Book a room”) | Learned options library with success‑weighted routing |
| From Passive → Proactive | Agenda watcher + prospective triggers | Cron that queries calendar and drafts next actions hourly | Event‑driven orchestrator with affordance detection & priority cues |
| Knowledge CRUD | Janitor job | Dedup near‑dupes; mark stale; merge variants | Policy‑driven consolidation + periodic distillation (internalization) |
| Validation | Forward/Backward transfer KPIs | Track deltas vs. no‑memory baseline | Promotion/demotion of skills based on causal lifts |
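As an illustration of the "skill recipe" row, here is a minimal sketch of a recipe with preconditions, steps, and post-checks. It is written as a Python dataclass rather than YAML so it runs without extra dependencies; the `Skill` class, the state dictionary layout, and the course codes are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

State = dict  # hypothetical shared state passed between steps


@dataclass
class Skill:
    name: str
    preconditions: list[Callable[[State], bool]]
    steps: list[Callable[[State], None]]
    post_checks: list[Callable[[State], bool]]

    def run(self, state: State) -> bool:
        """Run the steps only if every precondition holds; report post-check result."""
        if not all(check(state) for check in self.preconditions):
            return False  # refuse to start what cannot succeed
        for step in self.steps:
            step(state)
        return all(check(state) for check in self.post_checks)


def enroll(state: State) -> None:
    state["enrolled"].append(state["course"])


register_course = Skill(
    name="Register a course",
    preconditions=[
        lambda s: s["course"] in s["catalog"],
        # catalog maps each course to its prerequisite list
        lambda s: set(s["catalog"][s["course"]]) <= set(s["completed"]),
    ],
    steps=[enroll],
    post_checks=[lambda s: s["course"] in s["enrolled"]],
)
```

The boolean returned by `run` is what the validation row needs: log it per skill and per task, and promotion or demotion becomes a query over that log rather than a judgment call.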

KPIs that actually move the needle

- LTRR (long‑term retention rate): success on tasks that require week‑old info.

- PIS (proactive initiative score): success when the agent must self‑initiate on time.

- AvgTurns per success: interaction efficiency, not just accuracy.

- StuGPA‑style composite: exams (outcomes), attendance (discipline), campus life (responsibility).
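The first three KPIs reduce to simple ratios over task logs. The sketch below assumes each logged task carries two flags (whether it needed long-term memory, whether it needed self-initiation) and reads "AvgTurns per success" as the mean number of turns over successful tasks; the `TaskResult` fields and these readings are assumptions, so check them against the benchmark's own definitions before comparing numbers.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    success: bool
    turns: int
    needs_long_term_memory: bool = False
    needs_initiative: bool = False


def rate(results) -> float:
    """Success rate over the given results; 0.0 if there are none."""
    results = list(results)
    return sum(r.success for r in results) / len(results) if results else 0.0


def kpis(results: list[TaskResult]) -> dict[str, float]:
    wins = [r for r in results if r.success]
    return {
        # success on tasks that depend on older information
        "LTRR": rate(r for r in results if r.needs_long_term_memory),
        # success on tasks the agent had to start on its own
        "PIS": rate(r for r in results if r.needs_initiative),
        # assumed reading: mean turns across successful tasks only
        "AvgTurns": sum(r.turns for r in wins) / len(wins) if wins else float("nan"),
    }
```

Run the same function over a no-memory baseline and over the memory-enabled agent; the difference between the two dictionaries is the number worth reporting.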

What this changes for Cognaptus clients

- Customer Ops: Replace “always‑on chat” with prospective agents that nudge renewals, follow safety SOPs on schedule, and remember prior exceptions.

- Finance Ops: Skillize reconciliations; persist exception patterns; surface them at period‑close without being asked.

- IT & Data: Treat memory as a first‑class service (schema + governance), not a prompt appendix.

The deeper bet: internalization over time

Not every skill should stay explicit. As usage hardens, distill frequent recipes into low‑latency policies (few‑shot reducers, small adapters). Keep them explainable and swappable. That’s how agents get faster and more trustworthy.

Cognaptus: Automate the Present, Incubate the Future