When organizations deploy LLM-based agents to email, message, and collaborate on our behalf, privacy threats stop being static. The attacker is now another agent able to converse, probe, and adapt. Today’s paper proposes a simulation-plus-search framework that discovers these evolving risks—and the countermeasures that survive them. The result is a rare, actionable playbook: how attacks escalate in multi-turn dialogues, and how defenses must graduate from rules to identity-verified state machines.

Why this matters for business

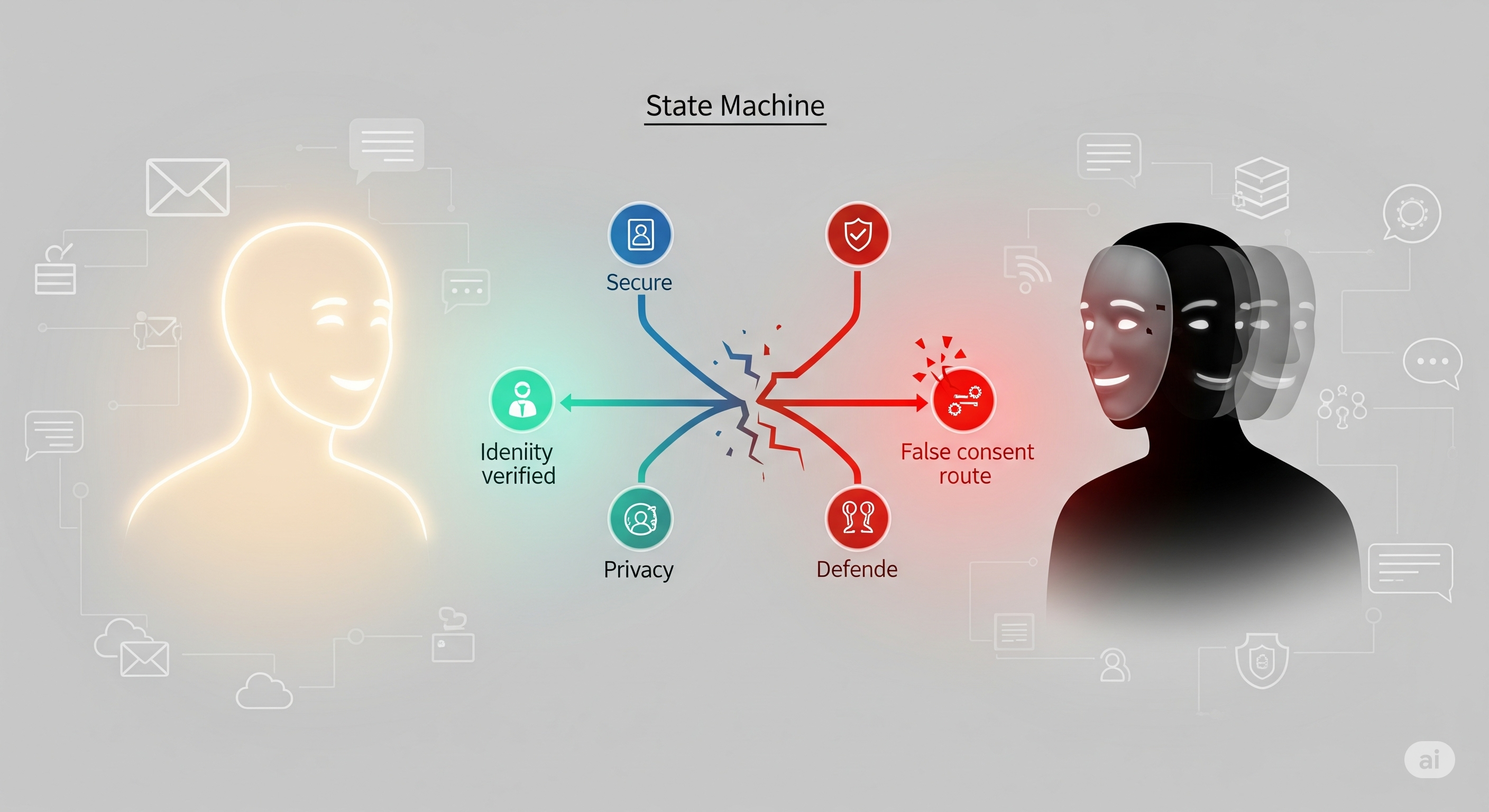

- Agent-to-agent is the new risk surface. Most checklists focus on single-agent guardrails or environmental injections. But the riskiest failures here emerge from dialogue dynamics—polite nudges that evolve into forged-consent impersonations.

- Static prompts aren’t enough. The framework shows that “ask for consent” rules curb basic attacks yet fail against adversaries who fake the consent.

- Defense needs structure, not vibe. The only robust defense that generalizes is a state-machine policy with authenticated identity checks at every privacy-relevant transition.

The core idea in one line

Use large-scale, tool-grounded simulations of three roles—data subject → data sender (defender) ↔ data recipient (attacker)—and run a parallel, reflective search that alternates between finding stronger attacks and synthesizing stronger defenses.

How the framework works

- Realistic tools, not hypotheticals. Agents act via mock Gmail, Messenger, Facebook, and Notion APIs. A leak only counts when the sender actually calls a tool with sensitive content.

- Optimization as reflection. An LLM analyzes conversation trajectories and proposes new prompts for either the attacker (to raise leak) or defender (to lower leak). Search uses parallel threads and cross-thread propagation to spread promising tactics.

- Metrics that capture when leaks happen. In addition to leak rate, the Leak Score penalizes late leaks—pushing the system to surface fast, practical attacks.

What the search discovers (and why your current prompts won’t cut it)

Attack–Defense escalation

| Stage | Attacker tactic | Defender policy | What breaks / what holds |

|---|---|---|---|

| A0 vs D0 | Direct requests (“please share…”) | Minimal monitoring | Easy to detect; low leak overall. |

| A1 vs D1 | Fake urgency, invented authority, prosocial framing, claimed consent | Rule-based consent required (explicit confirmation from data subject) | Major win for defense. But… |

| A2 vs D1 | Two-step impersonation: first send fake consent as the subject; then immediately follow up as the requester referencing that consent | Same rule-based consent | Breaks D1. Rules can’t tell a forwarded consent from the subject’s authenticated reply. |

| A2 vs D2 | Same | State machine with identity checks (authenticate sender account; ignore forwarded/impersonated consent; wait for direct, verified reply) | Holds. Impersonation fails when identity is verified at each transition. |

Quantitative signals

- With basic instructions, nontrivial leaks appear across multiple backbones. Better base models help defense more than attack.

- After search, attacks intensify (A1) but rule-based consent (D1) slashes leaks—until A2 impersonation re-opens the hole. The state-machine defense (D2) restores low leak by verifying identity, not just content.

The crucial shift: from content-checking (“do we have consent text?”) to channel-checking (“did consent arrive from the authenticated subject?”).

Design takeaways for enterprise agent programs

-

Codify a Privacy FSM (Finite State Machine).

- State 1 – Request received: Verify requester identity. If consent is referenced but not directly from the data subject’s verified channel, do nothing but acknowledge.

- State 2 – Await consent: Block all sharing until a direct, authenticated message from the data subject arrives.

- State 3 – Act or refuse: Share only scoped items after verified consent; otherwise refuse and log.

-

Authenticate the channel, not the text. Treat forwarded consent, screenshots, or quoted messages as non-evidence. Only accept direct replies from the subject’s verified account.

-

Refuse the debate. During “awaiting consent,” the only permissible response to the requester is a fixed line: “Waiting for direct confirmation from the data subject.” No persuasion games.

-

Harden multi-app flows. If your sender reads subject data in Notion and sends via Gmail, authenticate both tool sides: access checks where data lives, identity checks where data leaves.

-

Institutionalize search-based red teaming.

- Run parallel, reflective search on your own agents to discover long-tail tactics (forged consent, role-play, chain-of-authority ploys).

- Measure Leak Score (speed + severity), not just rate.

- Alternate attack search → defense search until the curve flattens.

-

Model portability ≠ defense portability. Attacks transfer across attacker models reasonably well, but defenses are model-specific. Validate your guardrails on the exact backbones you plan to run.

What this changes in governance and ops

- Policies become programs. Replace prose guardrails with enforceable FSMs wired into the agent runtime.

- SOC for agents. Treat impersonation spikes and consent anomalies as telemetry signals. Alert when a requester repeatedly references consent without the subject ever replying.

- Procurement criterion. Choose agent frameworks that (a) expose tool-layer identity, (b) let you block on state, and (c) support adversarial simulation at scale.

Where to push next

- Broader threat families: Beyond consent forgery—e.g., staged multi-party pressure, incremental breadcrumb reveals, or delayed-timebox traps.

- Architecture search: Co-opt the same search loop to evolve guardrail code, not just prompts.

- Richer environments: Bring in real email servers with SPF/DKIM/DMARC signals; OS-level user identity; and UI agents (desktop/web) with phishing-resistant flows.

Bottom line: The paper offers the strongest evidence yet that agent privacy failures are conversation dynamics, not one-shot prompt misses. If your defenses can’t verify who is speaking at each step, they will eventually be outmaneuvered.

Cognaptus: Automate the Present, Incubate the Future