Org Charts for Robots: What AgentArch Really Tells Us About Enterprise AI

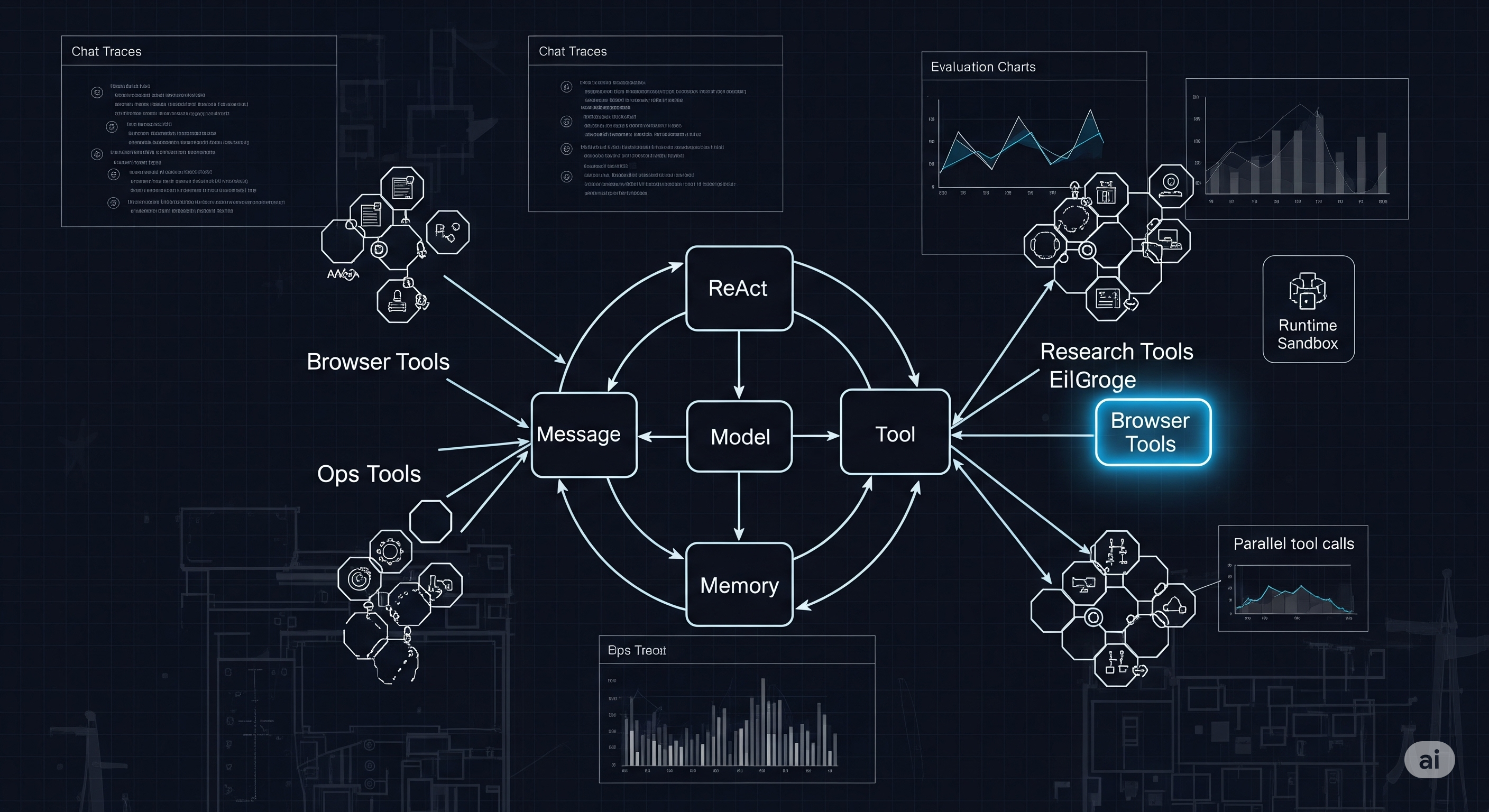

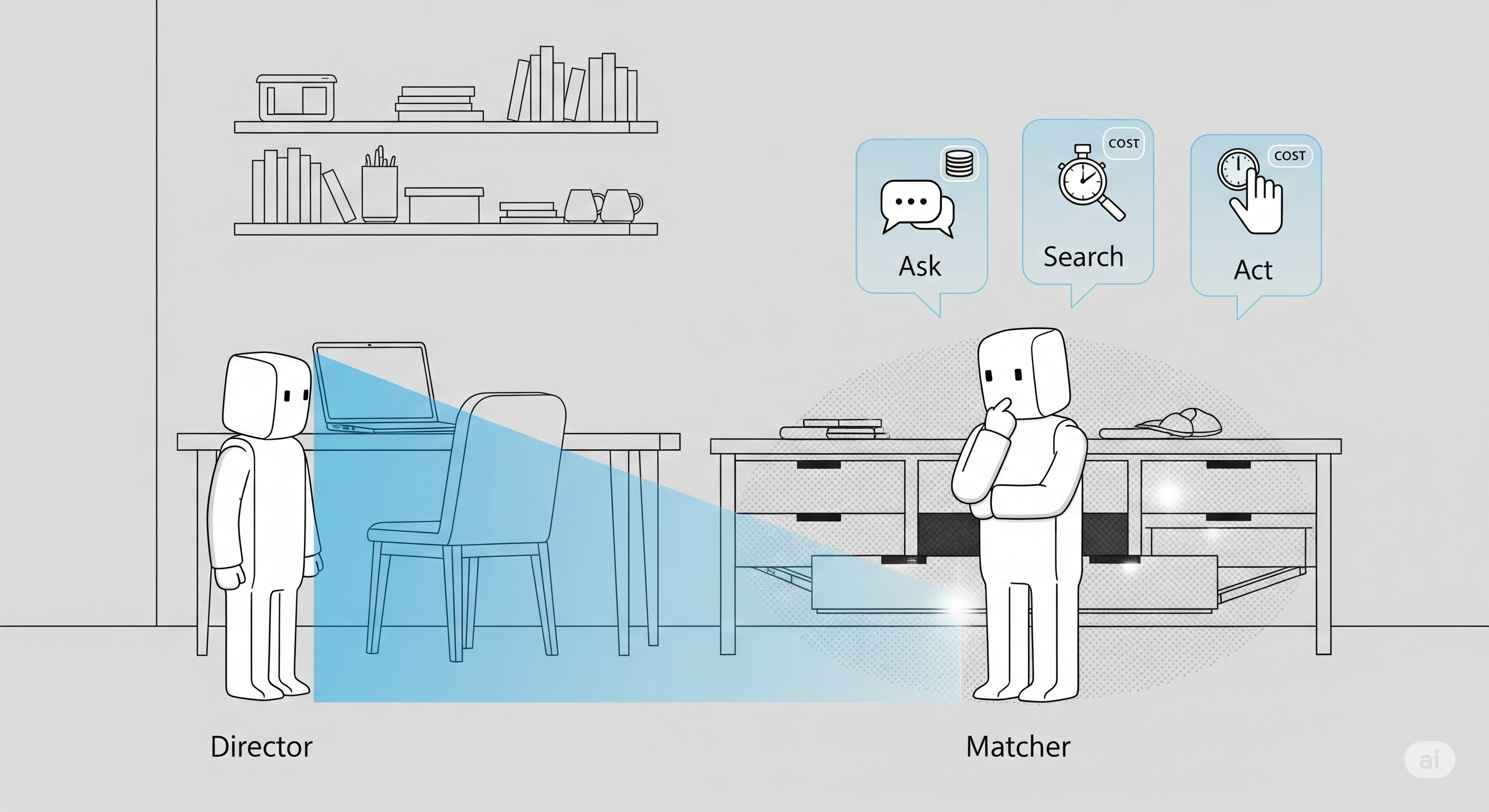

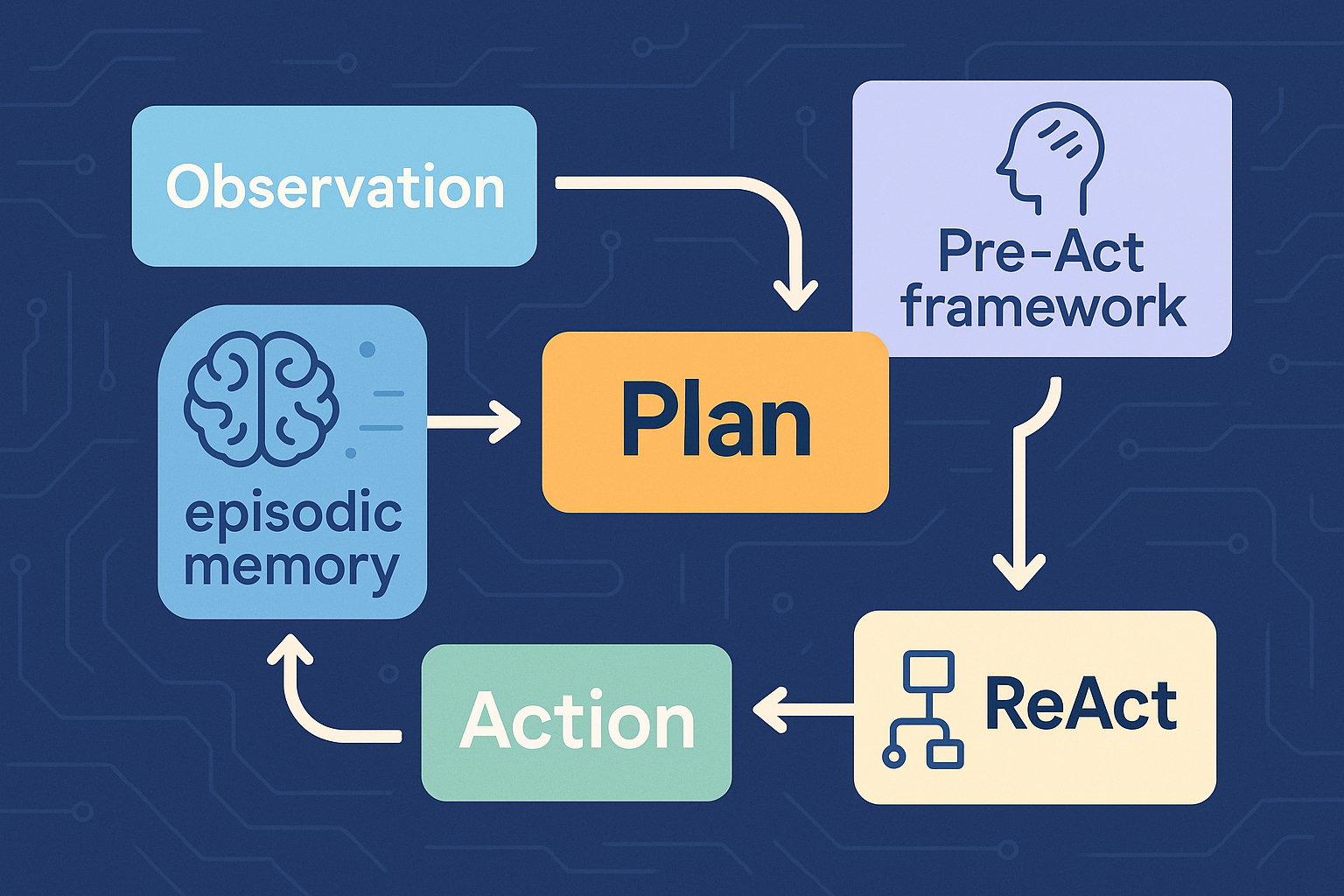

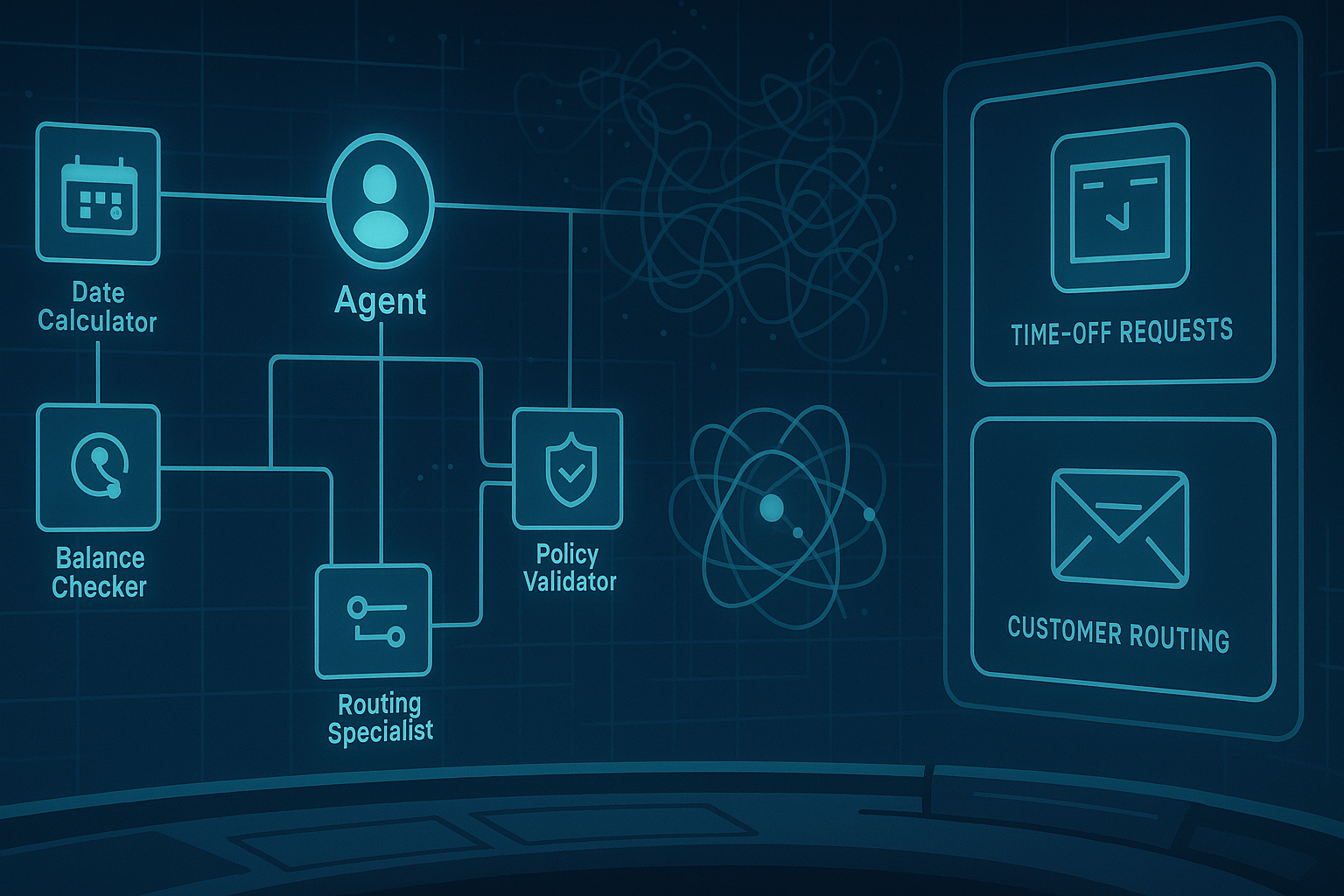

If you’ve ever tried turning a clever chatbot into a reliable employee, you already know the pain: great demos, shaky delivery. AgentArch, a new enterprise-focused benchmark from ServiceNow, is the first study I’ve seen that tests combinations of agent design choices (single- vs. multi-agent, ReAct vs. function calling, summary vs. complete memory, and optional “thinking tools”) across two realistic workflows: a simple PTO process and a gnarly customer-request router. The result is a cold shower for one-size-fits-all playbooks, and a practical map for building systems that actually ship. ...