Graphs, Gains, and Guile: How FinKario Outruns Financial LLMs

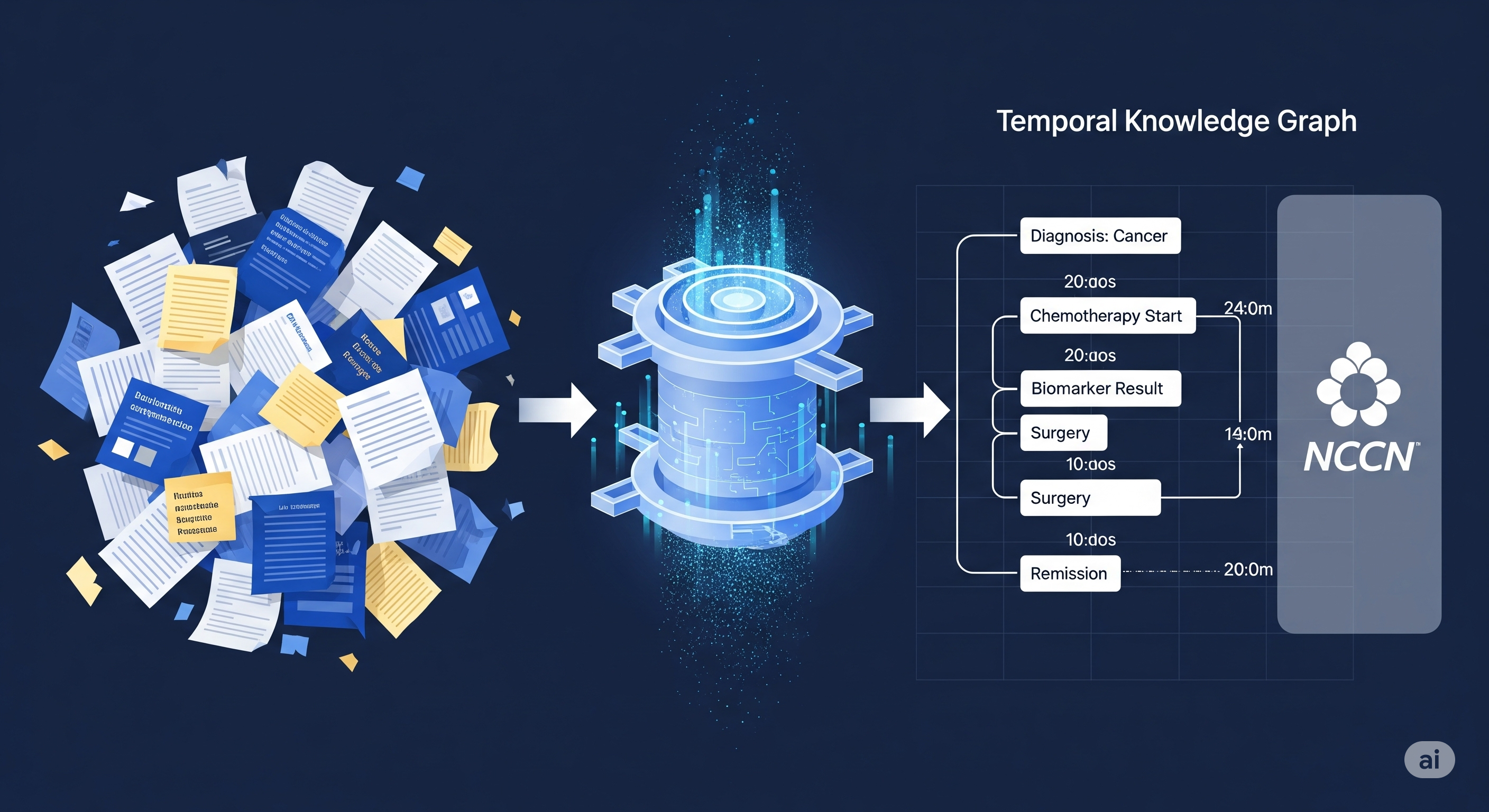

In the world of financial AI, where speed meets complexity, most systems are either too slow to adapt or too brittle to interpret the nuanced messiness of real-world finance. Enter FinKario, a new system that combines event-enhanced financial knowledge graphs with a graph-aware retrieval strategy and outperforms both specialized financial LLMs and institutional strategies in real-world backtests.

The Retail Investor’s Dilemma

While retail traders drown in information overload, professional research reports contain rich insights, but they’re long, unstructured, and hard to parse. Most LLM-based tools don’t fully exploit these reports. They either extract static attributes (e.g., stock ticker, sector, valuation) or respond to isolated queries without contextual awareness. ...