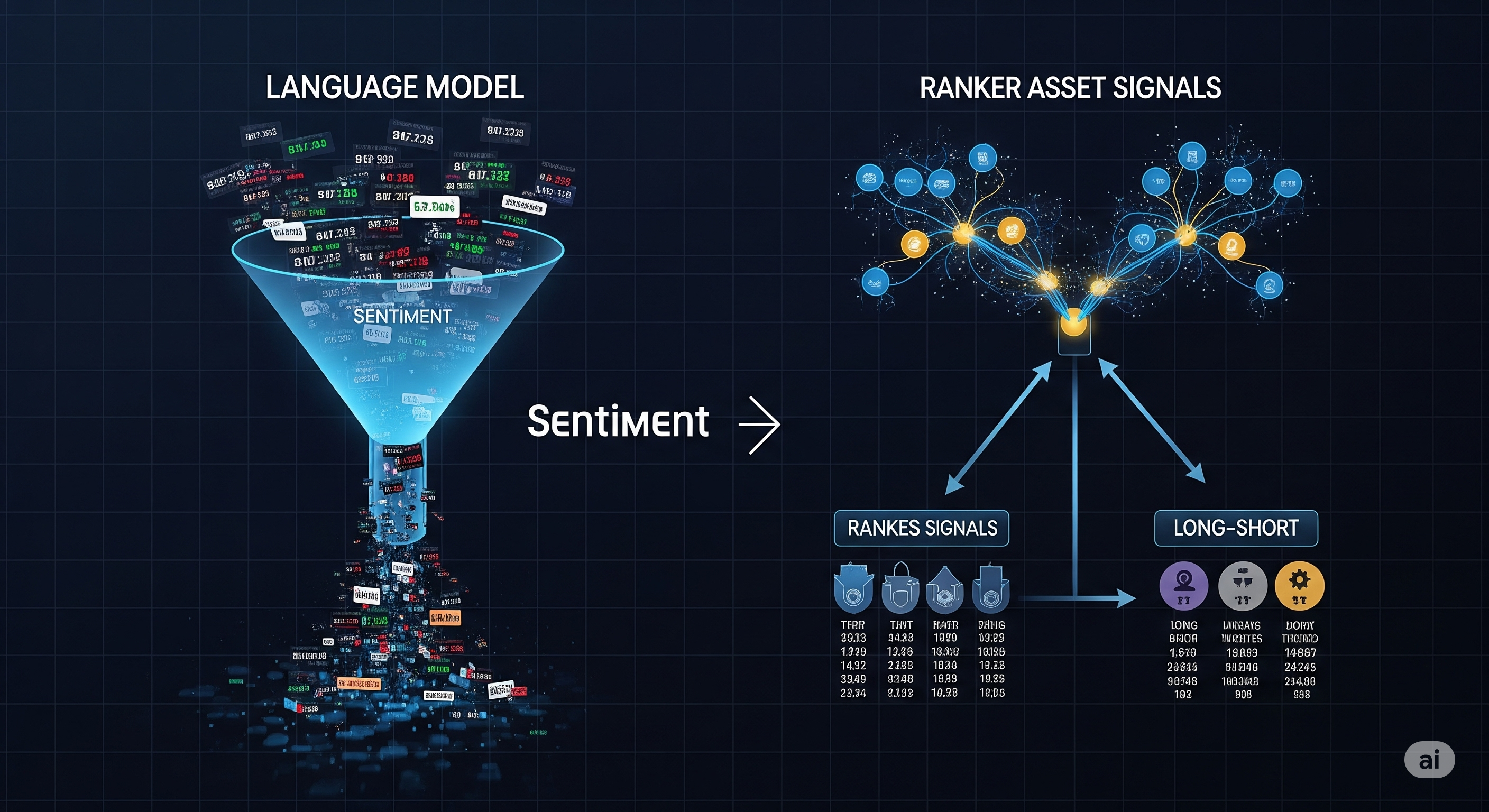

The Sentiment Edge: How FinDPO Trains LLMs to Think Like Traders

Financial markets don’t reward the loudest opinions. They reward the most timely, well-calibrated ones. FinDPO, a new framework by researchers from Imperial College London, takes this lesson seriously. It proposes a bold shift in how we train language models to read market sentiment. Rather than relying on traditional supervised fine-tuning (SFT), FinDPO uses Direct Preference Optimization (DPO) to align a large language model with how a human trader might weigh sentiment signals in context. And the results are not just academic: they translate into real money. ...