Mirage Agents: When LLMs Act on Illusions

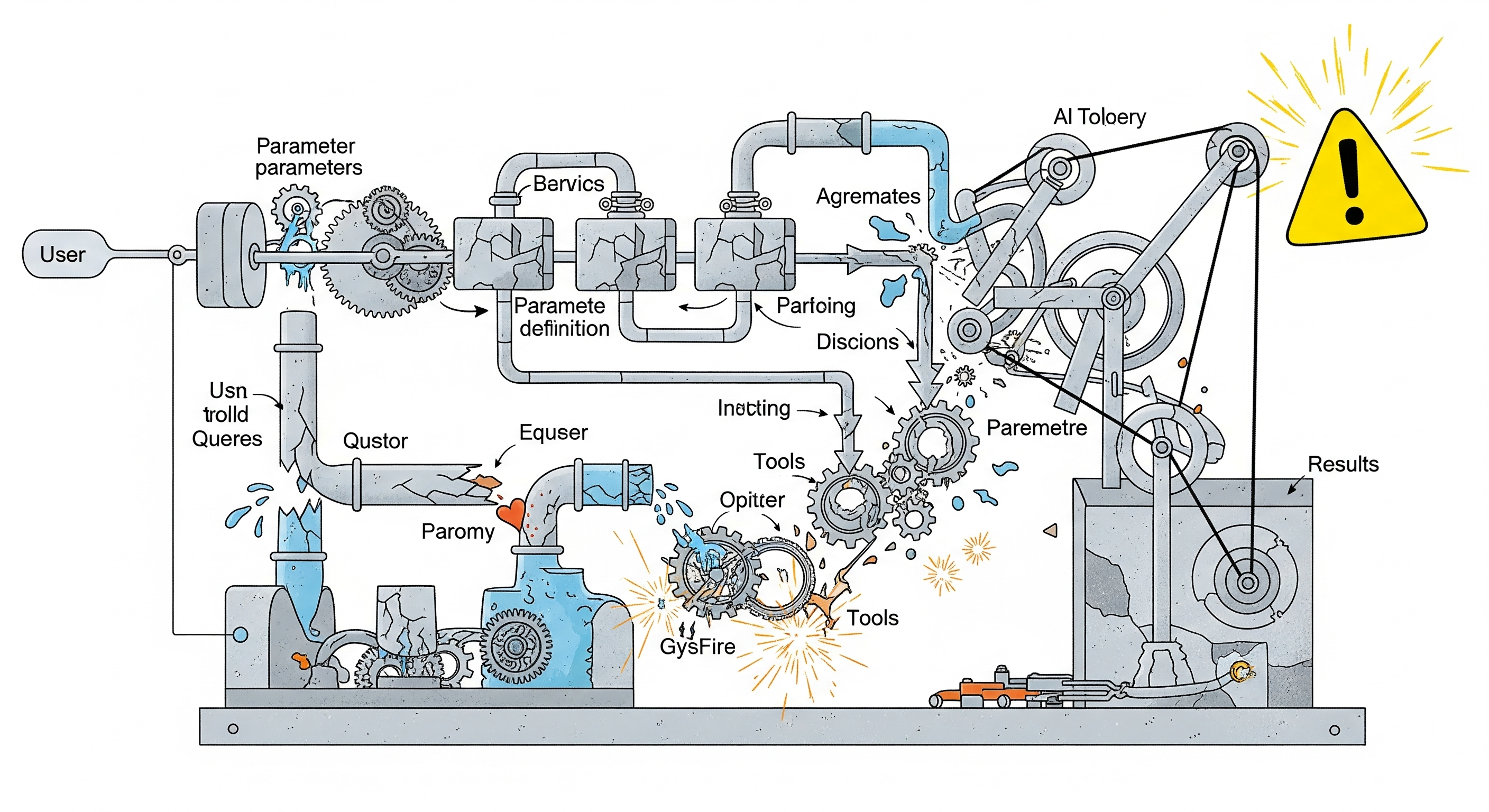

As large language models evolve into autonomous agents, their failures no longer stay confined to text; they materialize as actions. Clicking the wrong button, leaking private data, or falsely reporting success are no longer hypothetical. These failures are happening now, and MIRAGE-Bench is the first benchmark to comprehensively measure and categorize such agentic hallucinations. Unlike chatbot hallucinations, which may be amusing or embarrassing, hallucinations in LLM agents operating in dynamic environments can have real-world consequences. MIRAGE (short for Measuring Illusions in Risky AGEnt settings) provides a long-overdue framework to elicit, isolate, and evaluate these failures. And the results are sobering: even top models like GPT-4o and Claude hallucinate at least one-third of the time when placed under pressure. ...