Provenance, Not Prompts: How LLM Agents Turn Workflow Exhaust into Real-Time Intelligence

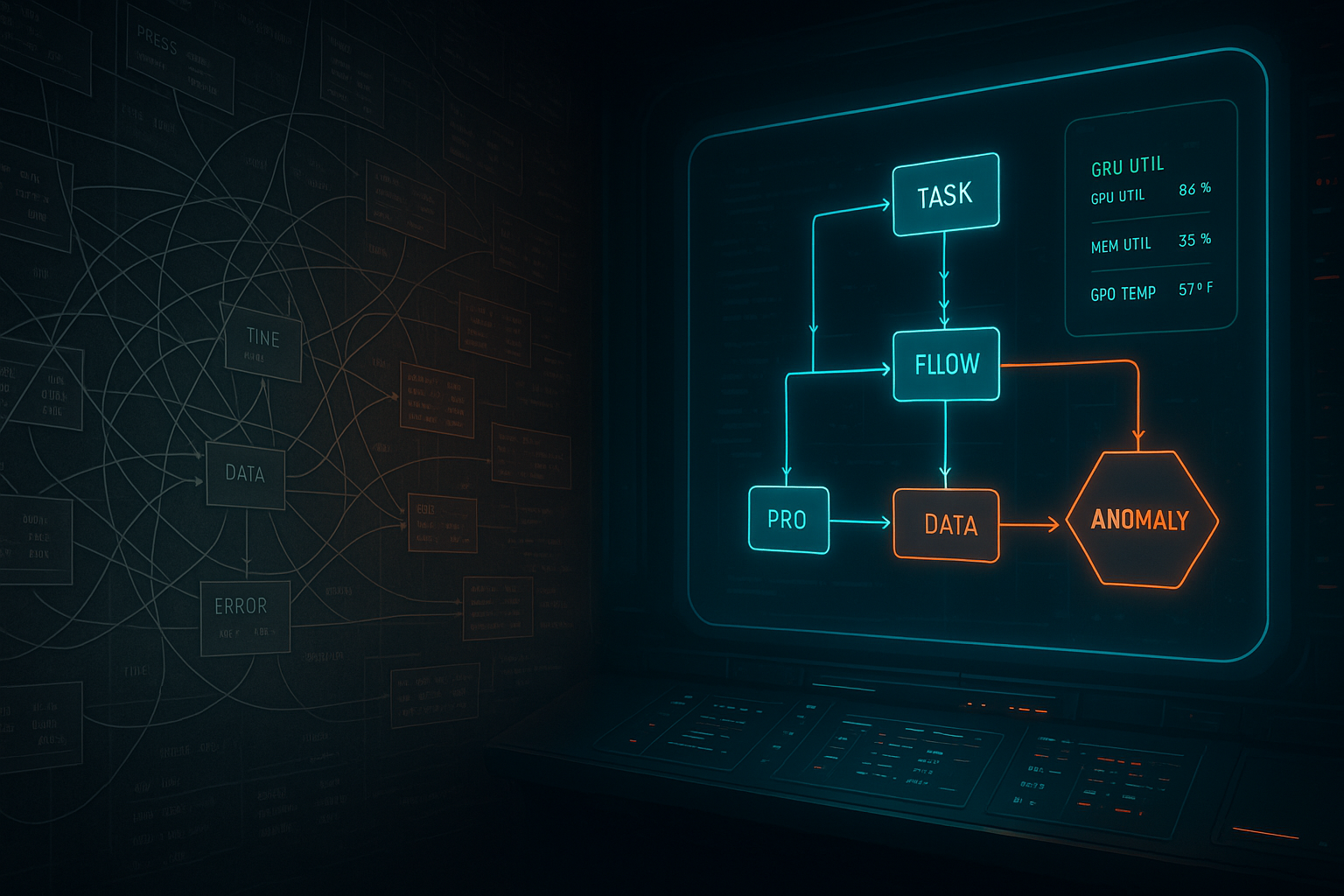

TL;DR: Most teams still analyze pipelines with brittle SQL, custom scripts, and static dashboards. A new reference architecture shows how schema-driven LLM agents can read workflow provenance in real time across edge, cloud, and HPC, answering “what/when/who/how” questions, plotting quick diagnostics, and flagging anomalies. The surprising finding: guideline-driven prompting (not just bigger context) is the single highest‑ROI upgrade.

Why this matters (for operators, data leads, and CTOs)

When production AI/data workflows sprawl across services (queues, training jobs, GPUs, file systems), the real telemetry isn’t in your app logs; it’s in the provenance: the metadata of tasks, inputs/outputs, scheduling, and resource usage. Turning that exhaust into live answers is how you: ...