Mirror, Signal, Manoeuvre: Why Privileged Self‑Access (Not Vibes) Defines AI Introspection

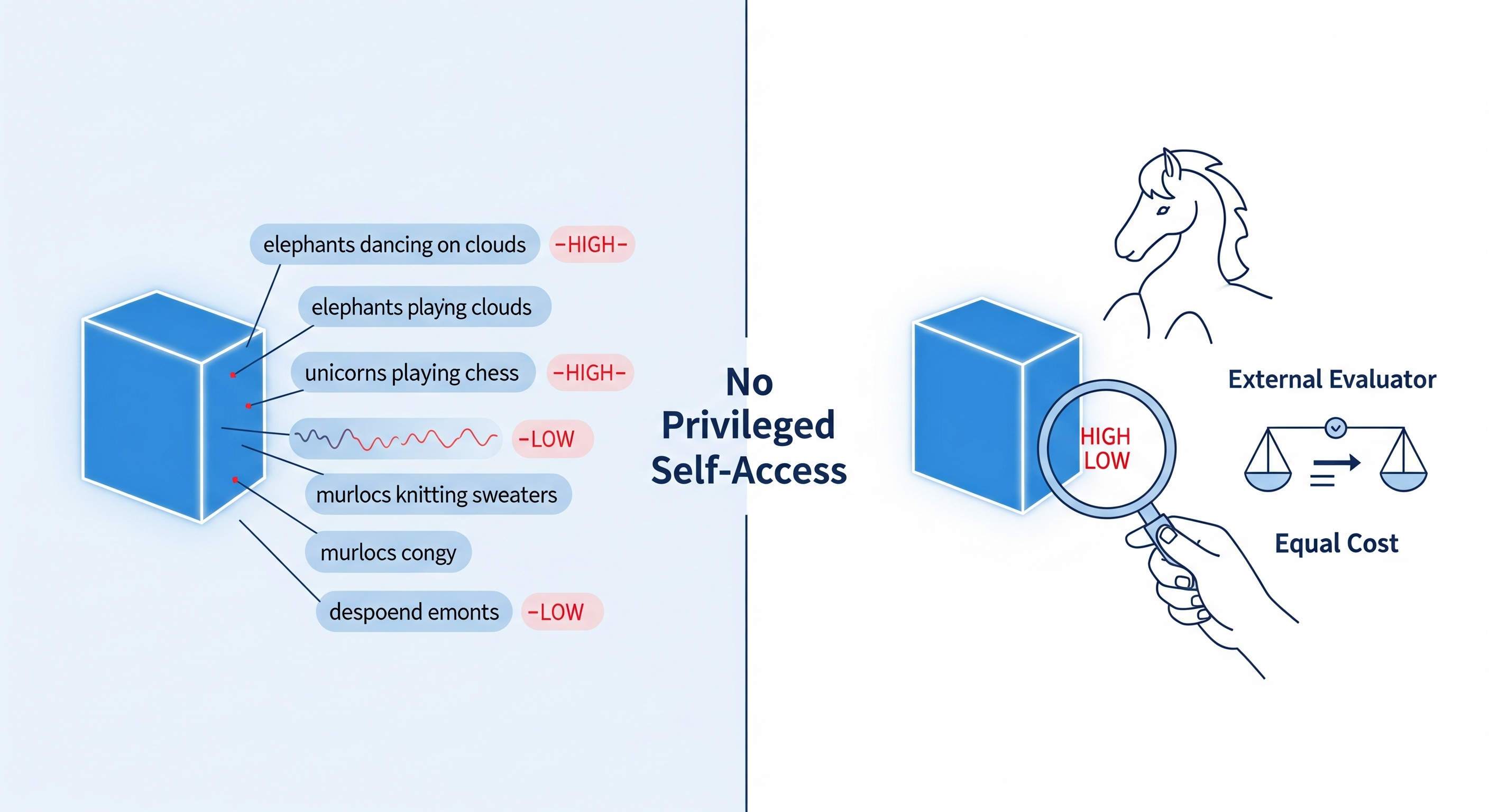

TL;DR Most demos of “LLM introspection” are really vibe checks on outputs, not evidence of privileged access to internal state. If a third party with the same budget can predict the model's state as well as the model “looking inward” can, that is not introspection; it is ordinary evaluation. Two quick experiments show that models' self‑reports of their sampling temperature flip with trivial prompt changes and offer no edge over cross‑model prediction. The bar for introspection should be higher, and business users should demand it. ...
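The "same‑budget third party" criterion can be made concrete as a paired comparison on identical items. What follows is a minimal sketch, not the experiments' actual code. It assumes that each item has a ground‑truth label (for example, the temperature bucket actually used), the model's self‑report, and a prediction from a different model given the same inputs. The names `compare_access` and `AccessComparison` are hypothetical. The exact one‑sided McNemar (sign) test is our own choice of statistic and is not necessarily the one behind the results summarised above.

```python
from dataclasses import dataclass
from math import comb
from typing import Sequence


@dataclass(frozen=True)
class AccessComparison:
    self_accuracy: float         # fraction of items the model got right about itself
    third_party_accuracy: float  # fraction an outside predictor got right on the same items
    self_only_wins: int          # items only the self-report got right
    third_only_wins: int         # items only the outside predictor got right
    p_value: float               # one-sided exact McNemar p-value for "self beats outsider"


def compare_access(truth: Sequence[str],
                   self_reports: Sequence[str],
                   third_party: Sequence[str]) -> AccessComparison:
    """Hypothetical helper: does looking inward beat an outside predictor with the same inputs?"""
    n = len(truth)
    if n == 0 or len(self_reports) != n or len(third_party) != n:
        raise ValueError("need three non-empty sequences of equal length")

    self_ok = [s == t for s, t in zip(self_reports, truth)]
    other_ok = [o == t for o, t in zip(third_party, truth)]

    # In a paired design only the discordant items (one right, one wrong) are informative.
    b = sum(s and not o for s, o in zip(self_ok, other_ok))
    c = sum(o and not s for s, o in zip(self_ok, other_ok))

    # Exact one-sided McNemar test: under H0 (no privileged edge) each discordant
    # item is a fair coin flip, so p = P(X >= b) with X ~ Binomial(b + c, 0.5).
    m = b + c
    p = 1.0 if m == 0 else sum(comb(m, k) for k in range(b, m + 1)) / 2 ** m

    return AccessComparison(sum(self_ok) / n, sum(other_ok) / n, b, c, p)
```

On toy labels such as `compare_access(["low", "high", "low", "high"], ["low", "low", "low", "high"], ["low", "high", "high", "high"])`, both predictors score 0.75 and split the discordant items one to one. That is exactly the "no edge" outcome the TL;DR describes. Privileged access would instead show up as `self_only_wins` well above `third_only_wins` together with a small `p_value`.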