Noise-Canceling Finance: How the Information Bottleneck Tames Overfitting in Asset Pricing

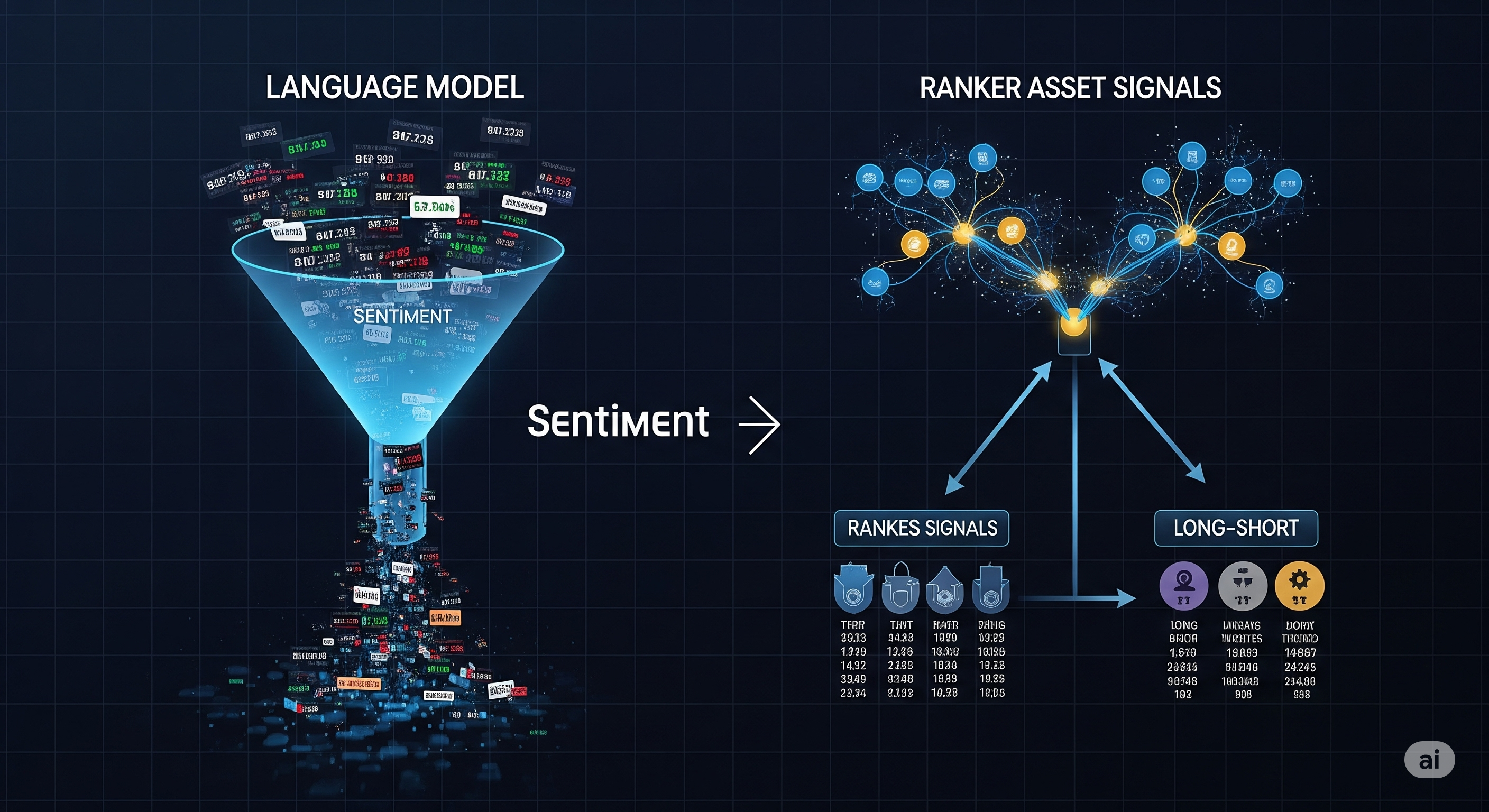

Deep learning has transformed many areas of finance, but in asset pricing its power is often undercut by a familiar enemy: noise. Financial datasets are notoriously riddled with weak signals and spurious patterns that can mislead even the most sophisticated models. The result? Overfitting, poor out-of-sample generalization, and, ultimately, bad bets. A recent paper by Che Sun, *An Information Bottleneck Asset Pricing Model*, proposes an elegant fix drawn from information theory: it integrates information bottleneck (IB) regularization into an autoencoder-based asset pricing framework. The goal is simple yet profound. Compress away the noise, and preserve only what matters for predicting asset returns. ...
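To make "compress away the noise" concrete, here is a minimal sketch of what an IB-regularized training objective can look like. It assumes a *variational* information bottleneck in the style of Alemi et al.'s deep VIB, where the encoder emits a Gaussian latent code (mean `mu`, log-variance `log_var`) and compression is enforced by a KL penalty toward a standard normal prior. This is an illustrative stand-in, not necessarily the formulation used in Che Sun's paper; the function names and the weight `beta_ib` are hypothetical.

```python
import math
from typing import Sequence


def gaussian_kl_to_standard_normal(mu: Sequence[float], log_var: Sequence[float]) -> float:
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions.

    In a variational IB this term upper-bounds how much information the latent
    code keeps about the inputs, so penalizing it "squeezes out" noise.
    """
    if len(mu) != len(log_var):
        raise ValueError("mu and log_var must have the same length")
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def ib_loss(
    returns: Sequence[float],
    predicted: Sequence[float],
    mu: Sequence[float],
    log_var: Sequence[float],
    beta_ib: float = 1e-3,  # hypothetical trade-off weight between fit and compression
) -> float:
    """Hypothetical IB-regularized objective: return-prediction MSE + beta_ib * compression."""
    if len(returns) != len(predicted) or not returns:
        raise ValueError("returns and predicted must be non-empty and equally long")
    # Fit term: how well the model explains realized returns.
    mse = sum((r - p) ** 2 for r, p in zip(returns, predicted)) / len(returns)
    # Compression term: penalizes latent codes that carry more information than needed.
    return mse + beta_ib * gaussian_kl_to_standard_normal(mu, log_var)


if __name__ == "__main__":
    realized = [0.012, -0.004, 0.007]
    fitted = [0.010, -0.002, 0.006]
    print(ib_loss(realized, fitted, mu=[0.3, -0.1], log_var=[-0.2, 0.1], beta_ib=0.01))
```

Raising `beta_ib` pushes the latent code toward the uninformative prior (more compression, less capacity to memorize noise), while lowering it lets the model fit returns more closely at the risk of overfitting. That tension is exactly the trade-off the IB framework is meant to manage.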