Doctor, Interrupted: How Multi-Agent AI Revives the Lost Art of Pre‑Consultation

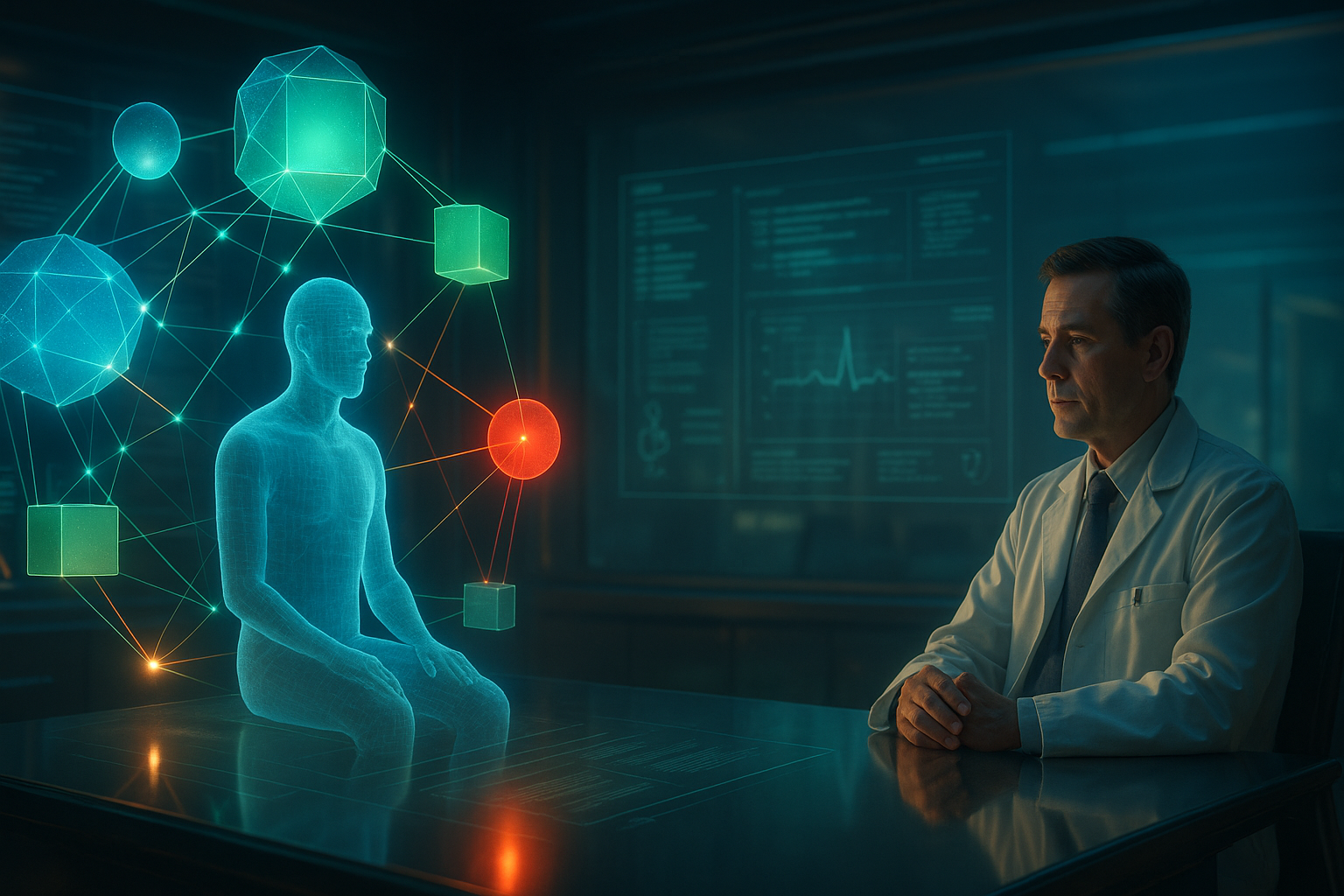

Opening: Why this matters now

The global shortage of physicians is no longer a future concern; it’s a statistical certainty. In countries representing half the world’s population, primary care consultations last five minutes or less. In China, it’s often under 4.3 minutes. A consultation this brief can barely fit a polite greeting, let alone a clinical investigation. Yet every wasted second compounds diagnostic risk, burnout, and cost.

Enter pre‑consultation: the increasingly vital buffer that collects patient data before the doctor ever walks in. But even AI‑based pre‑consultation systems, those cheerful symptom checkers and chatbots, remain fundamentally passive. They wait for patients to volunteer information, and when they don’t, the machine simply shrugs in silence. ...