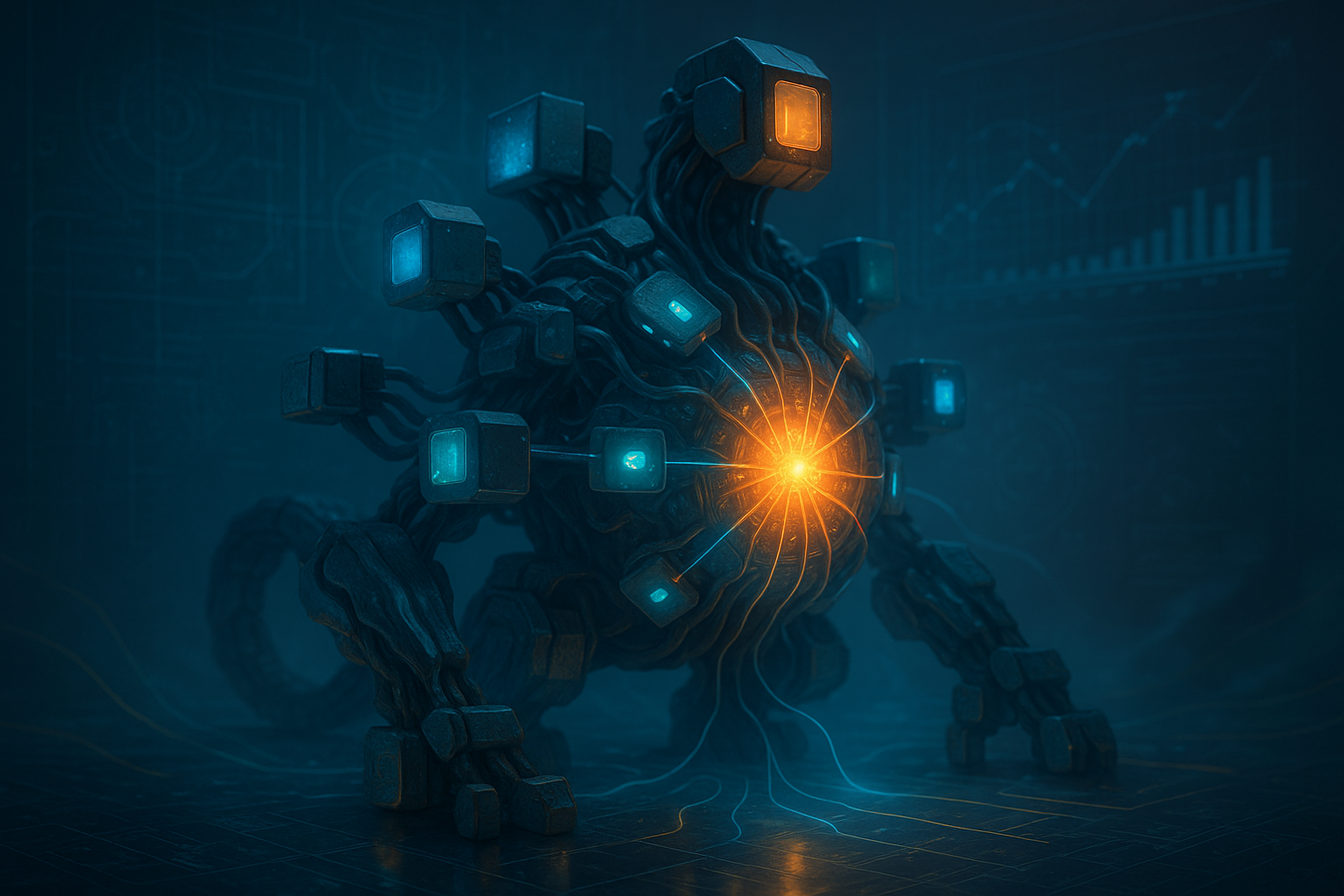

Blueprints of Agency: Compositional Machines and the New Architecture of Intelligence

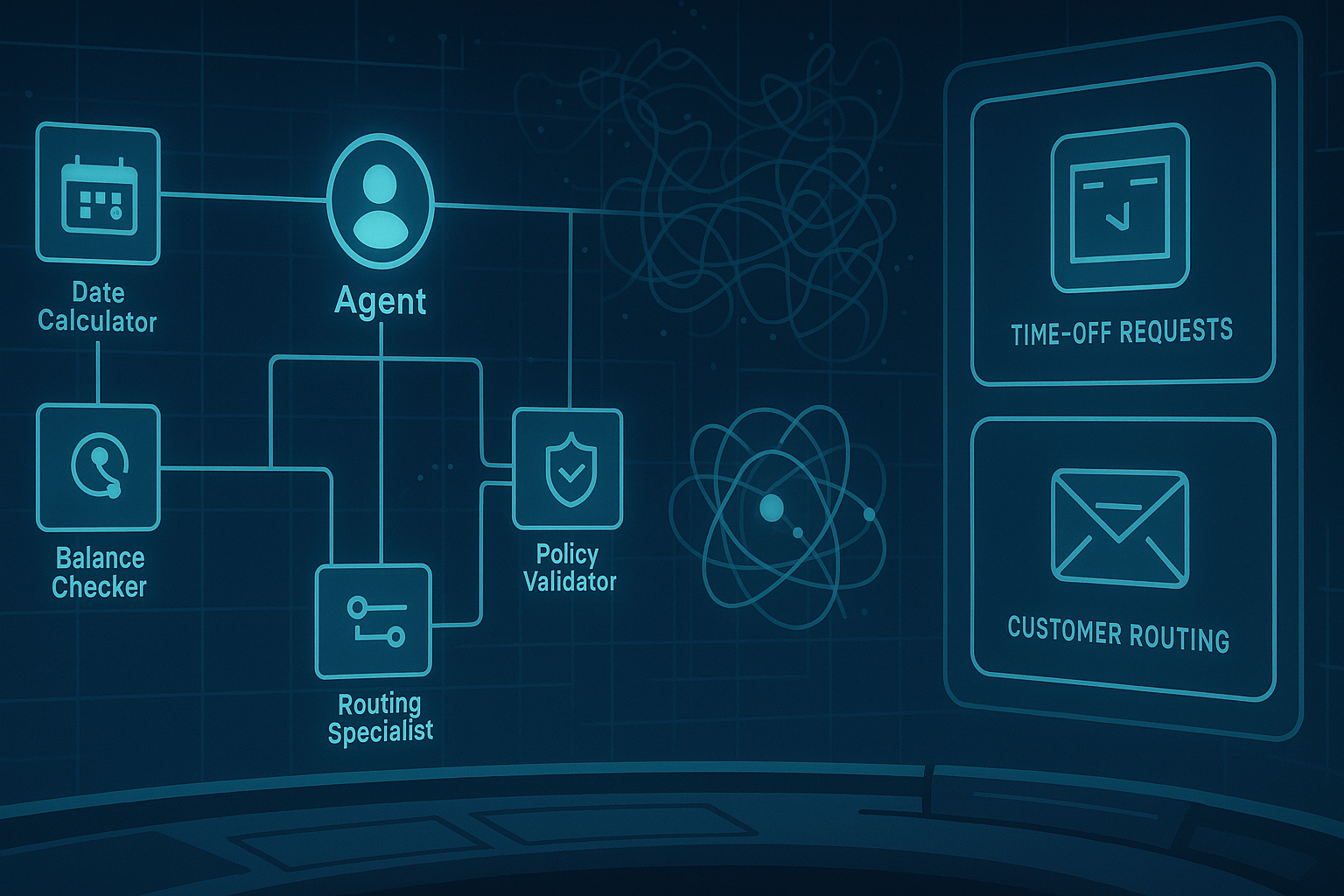

When the term “agentic AI” is used today, it often conjures images of individual, autonomous systems making plans, taking actions, and learning from feedback loops. But what if intelligence, like biology, scales not by perfecting one organism but by building composable ecosystems of specialized agents that interact, synchronize, and co‑evolve? That’s the thesis behind Agentic Design of Compositional Machines, a sprawling, 75‑page manifesto that reframes AI architecture as a modular society of minds, not a monolithic brain. Drawing inspiration from software engineering, systems biology, and embodied cognition, the paper argues that the next generation of LLM‑based agents will need to evolve toward compositionality, where reasoning, perception, and action emerge not from larger models but from better‑coordinated parts. ...