Opening — Why this matters now

The AI industry has spent the past two years selling a seductive idea: that large language models are on the cusp of becoming autonomous agents. They’ll plan, act, revise, and optimize—no human micro‑management required. But a recent study puts a heavy dent in this narrative. By stripping away tool use and code execution, the paper asks a simple and profoundly uncomfortable question: Can LLMs actually plan? Spoiler: not really.

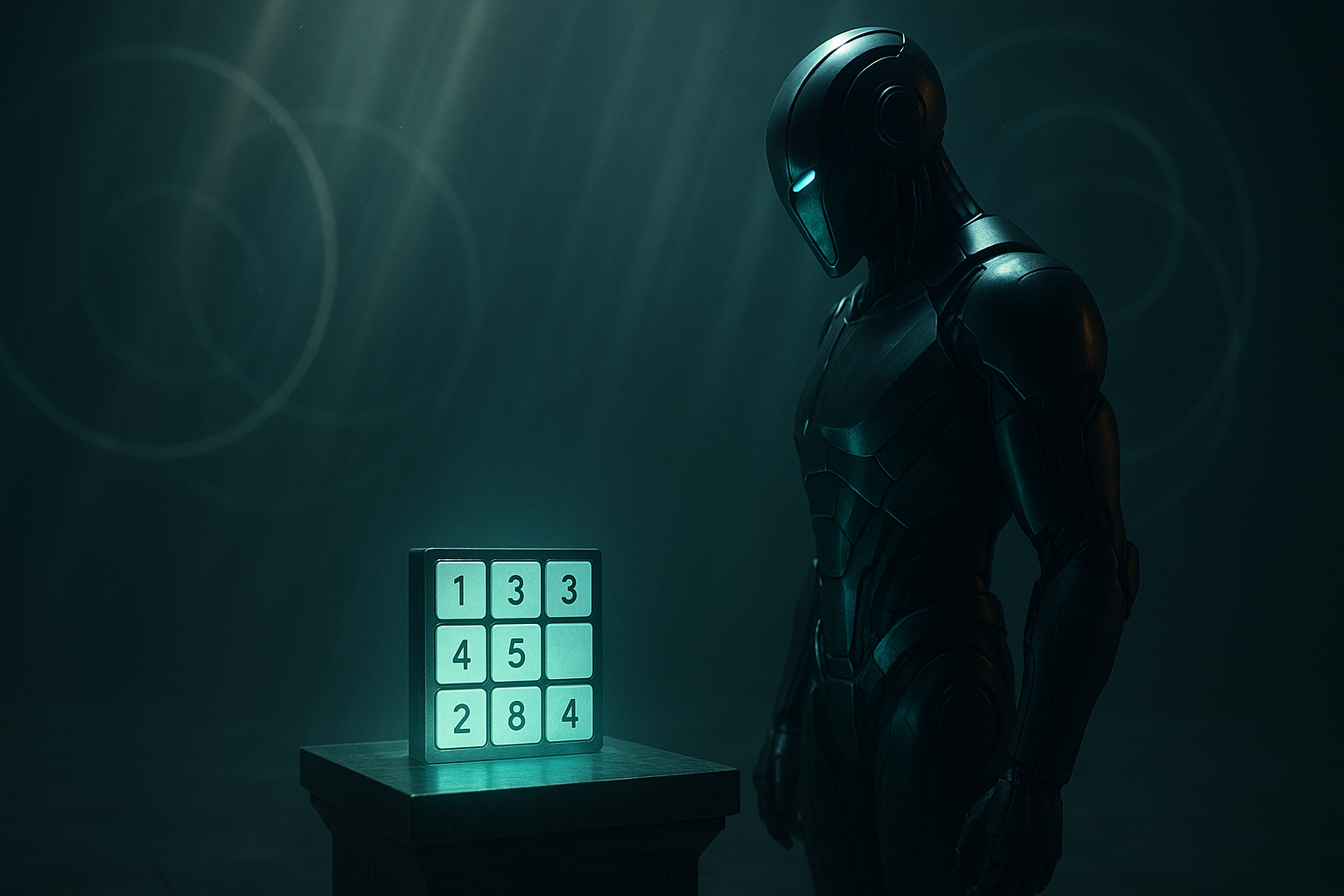

The authors deploy a classic toy problem—the 8‑puzzle—to probe whether models can track state, follow rules, and move toward a goal. It’s humble, yes. But also merciless. If you can’t solve a deterministic sliding‑tile puzzle, your dreams of orchestrating real‑world logistics or managing financial trades look… aspirational.

This article distills what the paper reveals about the limits of “innate planning” in LLMs, and what businesses should take away from it.

Background — Context and prior art

Modern benchmarks overwhelmingly measure flash‑card intelligence: math, coding, knowledge recall. These tests reward correctness, not coherence. They rarely stress whether a model can maintain an internal world state and act across time.

Past work has tried to patch this gap with:

- Chain‑of‑Thought prompting (more words = more wisdom, allegedly)

- Algorithm‑of‑Thought (pseudo‑search in natural language)

- Tree‑of‑Thought (structured branching via text)

- Toolformer/ReAct (delegate hard tasks to external code or web queries)

But these all blur the line between the model’s reasoning and the crutch supporting it. What’s the model, and what’s the tool? This paper avoids that ambiguity entirely: no code interpreters, no search algorithms, no “just call Python.”

Just the model. A grid. And some tiles.

Analysis — What the paper actually does

The authors evaluate four LLMs (GPT‑5‑Thinking, Gemini‑2.5‑Pro, GPT‑5‑Mini, Llama‑3.1‑8B) across three prompting regimes:

- Zero‑Shot: plain instructions

- Chain‑of‑Thought: step‑by‑step examples

- Algorithm‑of‑Thought: guided pseudo‑search

Then they intensify the experiment using tiered corrective feedback:

- Repeat (just try again)

- Specific (point out the error)

- Suggestive (error + optimal solution length)

Finally, they introduce an “external move validator” that handles all move legality. The models only need to pick the best move from a list. The authors effectively eliminate state‑tracking errors and leave only the planning layer exposed.

And still—no model solves a single puzzle.

Even when spoon‑fed valid moves, models loop, oscillate, or wander aimlessly.
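To make the validator setup concrete, here is a minimal Python sketch of what such a component might look like. The paper's actual implementation is not shown in this article, so everything here is an assumption: the board encoding (a 9-tuple in row-major order with 0 as the blank), the move names (the direction the blank slides), and the hypothetical helpers `legal_moves` and `apply_move`. The optional `previous` filter is likewise one possible reading of "avoid instant backtracking", not a confirmed detail.

```python
from typing import Dict, List, Optional, Tuple

State = Tuple[int, ...]  # 9 entries, row-major, 0 marks the blank (assumed encoding)

# Index offset applied to the blank for each direction it can slide.
OFFSETS: Dict[str, int] = {"up": -3, "down": 3, "left": -1, "right": 1}
REVERSE: Dict[str, str] = {"up": "down", "down": "up", "left": "right", "right": "left"}


def legal_moves(state: State, previous: Optional[str] = None) -> List[str]:
    """List directions the blank may slide, optionally skipping the undo of `previous`."""
    row, col = divmod(state.index(0), 3)
    moves = []
    if row > 0:
        moves.append("up")
    if row < 2:
        moves.append("down")
    if col > 0:
        moves.append("left")
    if col < 2:
        moves.append("right")
    if previous is not None:
        # Assumption: filtering the reverse move is how backtracking is discouraged.
        moves = [m for m in moves if m != REVERSE[previous]]
    return moves


def apply_move(state: State, move: str) -> State:
    """Return the board after sliding the blank; raise if the move is illegal."""
    if move not in legal_moves(state):
        raise ValueError(f"illegal move {move!r} for state {state}")
    blank = state.index(0)
    target = blank + OFFSETS[move]
    board = list(state)
    board[blank], board[target] = board[target], board[blank]
    return tuple(board)
```

With legality handled outside the model like this, any remaining failure cannot be blamed on a mis-tracked board. It has to come from the choice of move.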

Two systemic failures appear across all conditions (page 7 analysis):

- Brittle internal state representations → hallucinated board states, invalid moves.

- Weak heuristic planning → looping behaviors, aimless step inflation, or moves that increase distance to the goal.

The models can describe planning. They cannot perform it.
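One way to picture "moves that increase distance to the goal" is the Manhattan distance, a standard 8-puzzle heuristic. The sketch below is illustrative only: the article does not say which distance measure the paper uses, and the goal layout, the board encoding, and the hypothetical helpers `manhattan` and `regressive_steps` are all assumptions.

```python
from typing import List, Sequence, Tuple

State = Tuple[int, ...]  # row-major 3x3 board, 0 is the blank (assumed encoding)
GOAL: State = (1, 2, 3, 4, 5, 6, 7, 8, 0)  # assumed goal layout


def manhattan(state: State, goal: State = GOAL) -> int:
    """Sum of row and column offsets of every tile from its goal cell."""
    total = 0
    for index, tile in enumerate(state):
        if tile == 0:
            continue  # the blank does not count toward the distance
        goal_index = goal.index(tile)
        total += abs(index // 3 - goal_index // 3) + abs(index % 3 - goal_index % 3)
    return total


def regressive_steps(trajectory: Sequence[State], goal: State = GOAL) -> List[int]:
    """Indices i where the step from trajectory[i] to trajectory[i + 1] raised the distance."""
    distances = [manhattan(state, goal) for state in trajectory]
    return [i for i in range(len(distances) - 1) if distances[i + 1] > distances[i]]
```

A single rising step is not proof of bad planning, since optimal solutions sometimes move a tile away from its target first. A trajectory dominated by such steps is a stronger signal.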

Findings — Results with visualization

1. Baseline performance is bleak

Success rates (Fig. 4, paper):

- GPT‑5‑Thinking (AoT): 30%

- All others: effectively 0–8%

Llama‑3.1‑8B solves nothing. Gemini solves one. GPT‑5‑Mini performs worse with CoT/AoT than Zero‑Shot—a sign that “thinking out loud” can actually derail weaker models.

2. Feedback helps… at a cost

Suggestive feedback pushes GPT‑5‑Thinking to 68% success. But each solved puzzle costs, on average (Table 1):

- 24 minutes,

- 75,284 tokens,

- Nearly 2 attempts,

- ~49 moves (optimal ≈ 21)

A model “solves” the puzzle, but on a time budget that would embarrass even a 1980s pocket computer.
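For a sense of scale, the optimal length of an 8-puzzle instance can be found by plain breadth-first search, and the reported averages of roughly 49 moves against an optimum of about 21 work out to about 2.3 times the shortest path. The sketch below assumes a goal layout and board encoding that the article does not specify, and `optimal_length` and `move_overhead` are hypothetical helper names, not the paper's code.

```python
from collections import deque
from typing import Iterator, Optional, Tuple

State = Tuple[int, ...]  # row-major 3x3 board, 0 is the blank (assumed encoding)
GOAL: State = (1, 2, 3, 4, 5, 6, 7, 8, 0)  # assumed goal layout


def neighbors(state: State) -> Iterator[State]:
    """Yield every board reachable by sliding the blank one cell."""
    blank = state.index(0)
    row, col = divmod(blank, 3)
    for d_row, d_col in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        new_row, new_col = row + d_row, col + d_col
        if 0 <= new_row < 3 and 0 <= new_col < 3:
            target = new_row * 3 + new_col
            board = list(state)
            board[blank], board[target] = board[target], board[blank]
            yield tuple(board)


def optimal_length(start: State, goal: State = GOAL) -> Optional[int]:
    """Length of a shortest solution found by BFS, or None if the goal is unreachable."""
    if start == goal:
        return 0
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        state, depth = frontier.popleft()
        for nxt in neighbors(state):
            if nxt == goal:
                return depth + 1
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, depth + 1))
    return None


def move_overhead(actual_moves: float, optimal_moves: float) -> float:
    """Ratio of moves taken to moves needed."""
    return actual_moves / optimal_moves
```

Calling `move_overhead(49, 21)` with the article's rounded figures gives about 2.33. The state space is small enough that exhaustive search of this kind is cheap on ordinary hardware.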

3. External move validator exposes pure planning failure

Models are given:

- the current state

- a list of all legal moves

- the previous move (to avoid instant backtracking)

And yet:

- GPT‑5‑Thinking loops 100% of the time

- Gemini: 92% loops

- Llama: 86% loops

- GPT‑5‑Mini: 68% early termination (hits 50‑move cap)
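The outcome categories above can be sketched as a small evaluation harness. This is a guess at the shape of such a loop, not the paper's code: it assumes a loop means revisiting a previously seen board, assumes the same board encoding and goal layout as a typical 8-puzzle setup, and uses a hypothetical `choose` callable as a stand-in for the model.

```python
from typing import Callable, List, Optional, Tuple

State = Tuple[int, ...]  # row-major 3x3 board, 0 is the blank (assumed encoding)
GOAL: State = (1, 2, 3, 4, 5, 6, 7, 8, 0)  # assumed goal layout
OFFSETS = {"up": -3, "down": 3, "left": -1, "right": 1}

# Hypothetical stand-in for the model: (state, legal moves, previous move) -> chosen move.
Chooser = Callable[[State, List[str], Optional[str]], str]


def legal_moves(state: State) -> List[str]:
    """Directions in which the blank can slide without leaving the grid."""
    row, col = divmod(state.index(0), 3)
    checks = [("up", row > 0), ("down", row < 2), ("left", col > 0), ("right", col < 2)]
    return [name for name, allowed in checks if allowed]


def slide(state: State, move: str) -> State:
    """Apply a move already known to be legal."""
    blank = state.index(0)
    target = blank + OFFSETS[move]
    board = list(state)
    board[blank], board[target] = board[target], board[blank]
    return tuple(board)


def run_episode(start: State, choose: Chooser, max_moves: int = 50) -> Tuple[str, int]:
    """Return (outcome, moves used); outcome is 'solved', 'loop', 'invalid', or 'cap'."""
    if start == GOAL:
        return "solved", 0
    state, previous = start, None
    seen = {start}
    for step in range(1, max_moves + 1):
        options = legal_moves(state)
        move = choose(state, options, previous)
        if move not in options:
            return "invalid", step  # the chooser ignored the offered list
        state = slide(state, move)
        if state == GOAL:
            return "solved", step
        if state in seen:
            return "loop", step  # assumed definition: a board was revisited
        seen.add(state)
        previous = move
    return "cap", max_moves
```

A chooser that always takes the first offered move, for example `lambda state, options, previous: options[0]`, is enough to trigger the loop outcome on most boards, which makes it a handy baseline when comparing against a model.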

Summary Table

| Capability Tested | With No Tools | With Feedback | With Valid-Move Assistance |
|---|---|---|---|
| State Tracking | ❌ fails often | ⚠️ improves with cost | ✔️ offloaded |
| Planning | ❌ fails | ❌ still weak | ❌ catastrophic |
| Success Rate | low | mid (best 68%) | 0% |

The visual metaphor

This paper is essentially a heat‑map of failure: the closer models get to “acting like agents,” the more unstable they become.

Implications — Why this matters for businesses and AI governance

1. “Agentic AI” is still a marketing phrase, not an operational capability.

If LLMs cannot reliably solve a trivial 8‑puzzle, we should be skeptical of claims that they can autonomously manage logistics, workflows, customer journeys, or financial transactions.

2. Stateful reasoning remains the unsolved frontier.

Models excel at text generation, summarization, and pattern recognition. But anything requiring memory + strategy + constraint adherence collapses quickly.

3. Tool use is doing most of the heavy lifting.

When models appear brilliant, it’s often because:

- Python executes a search

- an API provides structure

- a retrieval system maintains context

Without these, the illusion cracks.

4. Governance and safety frameworks must treat LLMs as stateless advisers, not autonomous actors.

The paper’s most alarming finding is not failure but false confidence. Models frequently output a sequence that appears to solve the puzzle even though its intermediate moves contain undetected illegal operations.

This is the governance nightmare scenario:

The system believes it succeeded, produces a confident output, and hides its own mistakes.

In financial trading, supply‑chain optimization, or robotics, that is unacceptable.
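The practical defense is to never take a claimed solution at face value and instead replay it step by step. The sketch below shows one way to do that for the 8-puzzle. The board encoding, goal layout, move names, and the hypothetical `verify_solution` helper are assumptions for illustration, not something taken from the paper.

```python
from typing import Sequence, Tuple

State = Tuple[int, ...]  # row-major 3x3 board, 0 is the blank (assumed encoding)
GOAL: State = (1, 2, 3, 4, 5, 6, 7, 8, 0)  # assumed goal layout
# Row and column change of the blank for each move name (assumed naming).
DELTAS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def verify_solution(
    start: State, moves: Sequence[str], goal: State = GOAL
) -> Tuple[bool, str]:
    """Replay `moves` from `start`; report the first illegal step or a wrong end state."""
    state = start
    for step, move in enumerate(moves):
        if move not in DELTAS:
            return False, f"step {step}: unknown move {move!r}"
        row, col = divmod(state.index(0), 3)
        d_row, d_col = DELTAS[move]
        new_row, new_col = row + d_row, col + d_col
        if not (0 <= new_row < 3 and 0 <= new_col < 3):
            return False, f"step {step}: illegal move {move!r}"
        blank, target = row * 3 + col, new_row * 3 + new_col
        board = list(state)
        board[blank], board[target] = board[target], board[blank]
        state = tuple(board)
    if state != goal:
        return False, "final state is not the goal"
    return True, "ok"
```

The check is a few lines of deterministic code, which is the point: the model's own account of whether it succeeded is not needed, and should not be trusted, when an independent replay is this cheap.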

5. Path forward: explicit state, modular reasoning, verifiable planning

The future likely requires:

- externalized memory or symbolic representations

- explicit state machines or structured search modules

- verifiable reasoning steps that can be audited

- agent architectures that separate “language skill” from “planning skill”

LLMs as monolithic reasoners may have peaked in this dimension.

Conclusion — The 3×3 lesson

This paper delivers a clarifying truth: LLMs are powerful linguistic engines but unreliable planners. Even the best models struggle with a tiny environment that demands uninterrupted state maintenance and goal‑directed behavior.

For enterprises building AI agents, the message is blunt:

If your system needs planning, don’t rely on LLMs to improvise it.

Treat them as components—not controllers. Wrap them in verifiable structures. And never confuse eloquence with competence.

Cognaptus: Automate the Present, Incubate the Future.