Opening — Why This Matters Now

The age of AI-powered learning assistants has arrived, but most of them still behave like overeager interns—confident, quick, and occasionally catastrophically wrong. The weakest link isn’t the models; it’s the structure (or lack thereof) behind their reasoning. Lecture notes fed directly into an LLM produce multiple-choice questions with the usual suspects: hallucinations, trivial distractors, and the unmistakable scent of “I made this up.”

The Drexel–Boston College team behind the paper Rate-Distortion Guided Knowledge Graph Construction from Lecture Notes Using Gromov–Wasserstein Optimal Transport proposes something more radical: treat knowledge graph (KG) construction as a compression problem. Not metaphorically, but mathematically. Instead of relying on ad hoc heuristics or overstuffed rule-based pipelines, the authors apply information theory to derive a KG that is as compact as possible while still faithfully reflecting the lecture content.

AI in education doesn’t just need more data. It needs better structure. And this paper offers a principled path.

Background — The Trouble With Extracted Knowledge

Educational KGs promise wonders—better explainability, improved factual grounding, and more controllable question generation. Unfortunately, the current ecosystem vacillates between two extremes:

- Rule-based extraction: brittle, narrow, and allergic to generalization.

- LLM-based extraction: creative, yes, but prone to semantic drift and redundant nodes.

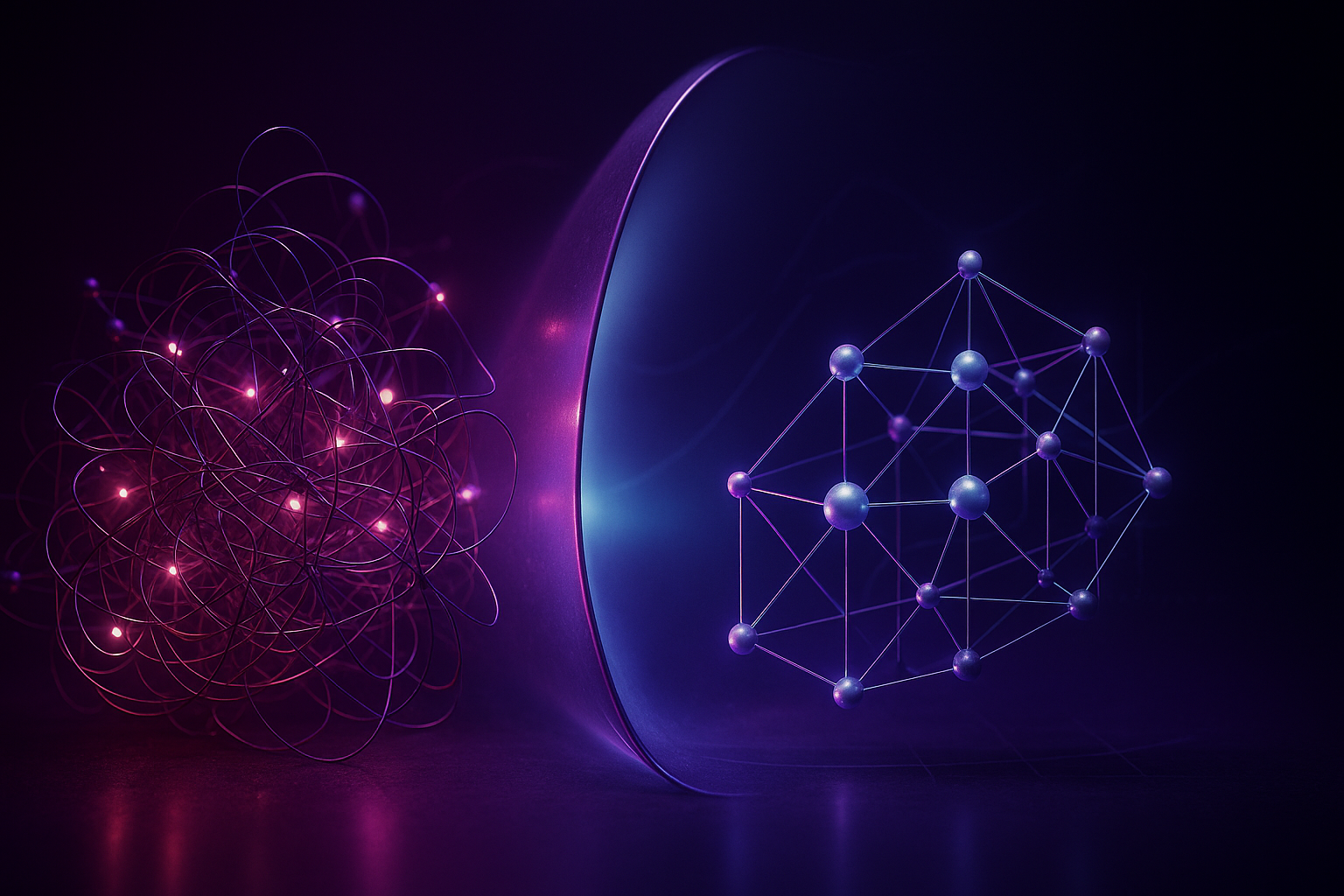

Both suffer from the same design flaw: no mechanism to balance completeness against complexity. The result is either bloated graphs that encode every adjective ever written or skeletal graphs that omit half the course.

Enter rate–distortion (RD) theory, the backbone of lossy compression. If we can compress images and speech while preserving human-perceived fidelity, why not compress knowledge?

Analysis — What the Paper Actually Does

The authors model both lecture notes and candidate KGs as metric–measure spaces—structured objects with distances capturing semantic and relational geometry. The core workflow looks like this:

1. Lecture notes → metric space (Z, d_Z, μ_Z)

Lectures are parsed into atomic units (page 5 of the paper). These segments are embedded via sentence encoders and assigned three types of distances:

- chronological (distance in lecture order),

- logical (document hierarchy: section/subsection similarity),

- semantic (cosine-based embedding distance).

These are fused into a distance matrix representing the conceptual landscape of the lecture.
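As a rough illustration of the fusion step, the sketch below blends the three distances with a weighted sum. The paper's actual fusion rule and weights are not given here, so the equal weights, the flat `sections` labels standing in for the document hierarchy, and the function names are all assumptions.

```python
import math

def cosine_distance(u, v):
    """1 - cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)

def fused_lecture_distances(embeddings, sections, weights=(1 / 3, 1 / 3, 1 / 3)):
    """Fuse chronological, logical, and semantic distances into one matrix.

    embeddings: one vector per segment, in lecture order.
    sections:   one section label per segment (hypothetical stand-in for the hierarchy).
    weights:    (chronological, logical, semantic) mixing weights, assumed values.
    """
    n = len(embeddings)
    w_chrono, w_logic, w_sem = weights
    dist = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            chrono = abs(i - j) / max(n - 1, 1)  # normalized gap in lecture order
            logic = 0.0 if sections[i] == sections[j] else 1.0
            sem = cosine_distance(embeddings[i], embeddings[j])
            dist[i][j] = w_chrono * chrono + w_logic * logic + w_sem * sem
    return dist

# Toy input: two near-identical segments in one section, one distinct segment in another.
embeddings = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
sections = ["intro", "intro", "methods"]
D = fused_lecture_distances(embeddings, sections)
```

In this toy input, the first two segments end up close together because they share a section and an embedding, while the third is far from both on all three components.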

2. Initial KG → metric space (V, d_V, μ_V)

A first-pass KG is extracted using an LLM under a fixed ontology (isA, partOf, prerequisiteOf, etc.) (page 6). Graph nodes include canonical labels, definitions, aliases, and provenance.

Distances between KG nodes combine:

- topological distance (normalized shortest paths),

- semantic distance (embedding similarity).
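A minimal sketch of how such a node distance could be assembled is shown below. It treats the KG as undirected, normalizes hop counts by the longest shortest path, and blends them with a precomputed semantic distance. The blend weight `alpha`, the undirected view, and the handling of unreachable pairs are assumptions made for illustration, not details taken from the paper.

```python
from collections import deque

def shortest_path_lengths(adjacency, source):
    """Breadth-first search hop counts from source over an undirected graph."""
    hops = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            if neighbor not in hops:
                hops[neighbor] = hops[node] + 1
                queue.append(neighbor)
    return hops

def kg_distances(adjacency, semantic, alpha=0.5):
    """Blend normalized hop distance with a given semantic distance.

    adjacency: dict node -> set of neighbors (undirected view of the KG).
    semantic:  dict (u, v) with u < v -> semantic distance in [0, 1], assumed precomputed.
    alpha:     weight on the topological term (assumed value).
    """
    nodes = sorted(adjacency)
    hops = {u: shortest_path_lengths(adjacency, u) for u in nodes}
    longest = max(max(h.values()) for h in hops.values()) or 1
    dist = {}
    for u in nodes:
        for v in nodes:
            # Unreachable pairs get the maximum normalized distance of 1.0.
            topo = hops[u].get(v, longest) / longest
            sem = 0.0 if u == v else semantic[tuple(sorted((u, v)))]
            dist[(u, v)] = alpha * topo + (1 - alpha) * sem
    return dist

# Hypothetical three-node chain: graph - tree - binary tree.
adjacency = {"graph": {"tree"}, "tree": {"graph", "binary tree"}, "binary tree": {"tree"}}
semantic = {
    ("binary tree", "graph"): 0.8,
    ("binary tree", "tree"): 0.2,
    ("graph", "tree"): 0.4,
}
dist = kg_distances(adjacency, semantic)
```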

3. Align lecture and KG via Fused Gromov–Wasserstein (FGW) distance

This is the geometric core of the paper: align two different metric spaces by minimizing both relational mismatch and semantic mismatch (Figure 3). The coupling matrix π effectively answers: which lecture fragments correspond to which KG concepts—and with what confidence?
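To make the two mismatch terms concrete, the sketch below evaluates the standard FGW objective for a fixed coupling: a feature term weighted by `1 - alpha` plus a squared structural term weighted by `alpha`. It does not solve for the optimal π (optimal transport libraries such as POT provide solvers for that), and the toy matrices and the choice `alpha=0.5` are assumptions for illustration.

```python
def fgw_cost(coupling, feature_cost, dist_lecture, dist_kg, alpha=0.5):
    """Evaluate the fused Gromov-Wasserstein objective for a given coupling.

    coupling:     n x m matrix pi, mass sent from lecture segment i to KG node j.
    feature_cost: n x m semantic mismatch between segment i and node j.
    dist_lecture: n x n distances among lecture segments.
    dist_kg:      m x m distances among KG nodes.
    alpha:        trade-off between structural and feature terms (assumed value).
    """
    n, m = len(coupling), len(coupling[0])
    feature_term = sum(
        feature_cost[i][j] * coupling[i][j] for i in range(n) for j in range(m)
    )
    structure_term = 0.0
    for i in range(n):
        for j in range(m):
            if coupling[i][j] == 0.0:
                continue  # zero mass contributes nothing
            for k in range(n):
                for l in range(m):
                    gap = dist_lecture[i][k] - dist_kg[j][l]
                    structure_term += gap * gap * coupling[i][j] * coupling[k][l]
    return (1 - alpha) * feature_term + alpha * structure_term

# Toy case: two segments and two nodes with matching geometry.
dist_lecture = [[0.0, 1.0], [1.0, 0.0]]
dist_kg = [[0.0, 1.0], [1.0, 0.0]]
feature_cost = [[0.0, 1.0], [1.0, 0.0]]

matched = [[0.5, 0.0], [0.0, 0.5]]   # segment i -> node i
swapped = [[0.0, 0.5], [0.5, 0.0]]   # segment i -> the other node
cost_matched = fgw_cost(matched, feature_cost, dist_lecture, dist_kg)
cost_swapped = fgw_cost(swapped, feature_cost, dist_lecture, dist_kg)
```

Here the matched coupling costs nothing, while the swapped coupling preserves the geometry but pays the full feature mismatch. That illustrates why the fused objective needs both terms.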

4. Define and minimize L = R + βD

Where:

- R ≈ complexity of the KG (number of nodes + 0.5 × number of edges),

- D = FGW distortion between lecture and KG,

- β tunes how much distortion matters.

This yields a rate–distortion curve, the same elbow-shaped frontier we know from compression theory (Figure 7). The sweet spot, the knee, is where adding further complexity stops buying a meaningful reduction in distortion.
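The objective above can be sketched in a few lines. The three candidate graphs and their distortion values below are invented purely to show how β shifts the preferred graph size. Only the form L = R + βD and the rate formula (nodes + 0.5 edges) come from the article.

```python
def rate(num_nodes, num_edges):
    """KG complexity: nodes plus half the edge count."""
    return num_nodes + 0.5 * num_edges

def objective(num_nodes, num_edges, distortion, beta):
    """Rate-distortion objective L = R + beta * D."""
    return rate(num_nodes, num_edges) + beta * distortion

# Hypothetical candidates: (nodes, edges, FGW distortion).
candidates = {
    "small": (5, 4, 0.90),
    "medium": (12, 14, 0.40),
    "large": (30, 45, 0.35),
}

def best_candidate(beta):
    """Name of the candidate KG with the lowest objective at this beta."""
    return min(candidates, key=lambda name: objective(*candidates[name], beta))

choices = {beta: best_candidate(beta) for beta in (1, 50, 1000)}
```

With these made-up numbers, a small β favors the smallest graph, a moderate β selects the medium graph, and only a very large β justifies the largest one. Sweeping β in this way is what traces out the curve.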

5. Refine the KG using five operators (page 7):

- Add missing concepts.

- Merge redundant ones.

- Split overloaded ones.

- Remove under-supported nodes.

- Rewire relationships based on coupling evidence.

Each edit is accepted only if it reduces the overall objective L.
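The acceptance rule can be sketched as a greedy loop: propose edits, score each resulting graph, and keep an edit only when the objective strictly drops. The toy below models a KG as a set of concept names and implements only simplified Add and Remove edits. The `required` concept set, the distortion proxy, and β = 10 are illustrative assumptions, not the paper's operators or scoring.

```python
def refine(graph, propose_edits, objective, max_rounds=10):
    """Greedily apply edits, keeping each one only if it lowers the objective."""
    best = objective(graph)
    for _ in range(max_rounds):
        improved = False
        for edit in propose_edits(graph):
            candidate = edit(graph)
            score = objective(candidate)
            if score < best:  # accept only strict improvements
                graph, best, improved = candidate, score, True
        if not improved:
            break  # no edit helps any more
    return graph, best

# Toy setup (all hypothetical): the lecture "requires" three concepts.
required = {"stack", "queue", "heap"}
vocabulary = required | {"misc"}
beta = 10.0

def toy_objective(graph):
    distortion = len(required - graph) / len(required)  # share of missing concepts
    return len(graph) + beta * distortion               # rate = node count here

def toy_edits(graph):
    edits = []
    for concept in sorted(vocabulary):
        if concept in graph:
            edits.append(lambda g, c=concept: g - {c})  # Remove
        else:
            edits.append(lambda g, c=concept: g | {c})  # Add
    return edits

start = frozenset({"stack", "misc"})
refined, score = refine(start, toy_edits, toy_objective)
```

In this toy run the loop adds the two missing concepts and drops the unsupported `misc` node, then stops once no single edit lowers the objective.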

The process is visually summarized in Figure 4 of the paper.

Findings — What the System Actually Achieves

1. Major coverage improvements

Figure 8 shows that refined KGs cover substantially more lecture content, with an average +0.304 increase in alignment.

This suggests the optimized KG tracks what is actually being taught, rather than vaguely orbiting around key terms.

2. Clear rate–distortion knees

The RD curves across lectures show the expected pattern:

- large distortion drops early on,

- diminishing returns thereafter,

- an identifiable elbow where complexity begins to cost more than it benefits.

On average, the knee captures:

- ≈50% reduction in distortion, with

- only ≈30% of maximum complexity.

This is the “Goldilocks KG”—not too big, not too small.
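One common way to locate a knee on such a curve is to normalize both axes and pick the point farthest from the straight line joining the endpoints. The sketch below uses that heuristic. Whether the paper uses this or another knee criterion is not stated here, and the sample curve is invented for illustration.

```python
def find_knee(points):
    """Index of the knee on a rate-distortion curve.

    points: list of (rate, distortion) pairs sorted by increasing rate.
    Uses the max-distance-to-chord heuristic on axes scaled to [0, 1].
    """
    rates = [r for r, _ in points]
    dists = [d for _, d in points]
    r_span = (max(rates) - min(rates)) or 1.0
    d_span = (max(dists) - min(dists)) or 1.0
    norm = [((r - min(rates)) / r_span, (d - min(dists)) / d_span) for r, d in points]
    (x0, y0), (x1, y1) = norm[0], norm[-1]

    def gap(point):
        # Proportional to the perpendicular distance from the endpoint chord.
        x, y = point
        return abs((y1 - y0) * (x - x0) - (x1 - x0) * (y - y0))

    return max(range(len(points)), key=lambda i: gap(norm[i]))

# Hypothetical curve: distortion falls quickly, then flattens.
curve = [(5, 1.00), (10, 0.60), (15, 0.45), (20, 0.40), (30, 0.37), (50, 0.35)]
knee_index = find_knee(curve)
knee_rate, knee_distortion = curve[knee_index]
```

On this invented curve the heuristic picks the point at rate 15, which is 30% of the maximum rate with distortion a little over half gone.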

3. Better multiple-choice questions—consistently

Across eight lectures and 400 evaluated MCQs, KG-based generation outperforms raw notes on most of the 15 quality criteria (Table I). A few highlights:

| Criterion | Improvement (Δ) |
|---|---|
| No answer hints | +1.805 |
| Hard to guess | +1.210 |
| No duplicates | +1.190 |
| Option balance | +1.110 |
| Plausible distractors | +1.095 |

These are precisely the areas where structure—not raw text—matters. A well-organized KG helps the LLM build questions that are challenging, balanced, and free of giveaways.

A few criteria dipped slightly (e.g., hallucinations, unambiguous options), likely because KGs remove textual redundancy the LLM might rely on for grounding.

Visualization — The Core Frameworks

Rate–Distortion Conceptual Map

| Rate (Complexity) | Distortion (Loss) | Interpretation |
|---|---|---|
| Low | High | KG too small → missing concepts |
| Medium | Low | Optimal zone → knee point |
| High | Very Low | Overfitting → unnecessary detail |

KG Refinement Operators

| Operator | When Triggered | Effect |
|---|---|---|
| Add | Under-covered lecture segments | Expands coverage |
| Merge | Redundant or highly similar nodes | Reduces complexity |
| Split | Nodes with high semantic entropy | Increases fidelity |
| Remove | Nodes with little coupling mass | Simplifies graph |
| Rewire | Structural mismatch detected | Corrects conceptual flow |
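As one example of how a trigger in the table might be checked, the sketch below flags Remove candidates by summing each KG node's column of the coupling matrix and comparing it to a threshold. Reading "coupling mass" as a column sum, the 0.05 threshold, and the toy coupling are assumptions. The paper may define the trigger differently.

```python
def low_mass_nodes(coupling, node_names, threshold=0.05):
    """Return KG nodes whose total incoming coupling mass is below threshold.

    coupling:   rows are lecture segments, columns are KG nodes.
    node_names: one name per column.
    threshold:  hypothetical cutoff for "little coupling mass".
    """
    mass = [sum(row[j] for row in coupling) for j in range(len(node_names))]
    return [name for name, m in zip(node_names, mass) if m < threshold]

# Toy coupling: three lecture segments, three KG nodes.
coupling = [
    [0.30, 0.03, 0.00],
    [0.05, 0.30, 0.01],
    [0.00, 0.30, 0.01],
]
node_names = ["stack", "queue", "misc"]
remove_candidates = low_mass_nodes(coupling, node_names)
```

A flagged node would still need to pass the acceptance test on the overall objective before being dropped.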

Implications — Why This Matters for AI and Business

1. Structured compression beats blind generation

The rate–distortion framing unlocks a more disciplined workflow for any AI system that converts unstructured content into knowledge:

- corporate training systems,

- compliance knowledge bases,

- technical documentation indexing,

- even AI agents that need to extract operational schemas.

2. Optimal transport is becoming an AI engineering primitive

The paper showcases FGW as a practical tool, not just theory, and suggests future agentic systems may routinely align heterogeneous knowledge sources using optimal transport (OT).

3. Pedagogical transparency is not optional

For onboarding, education, and enterprise learning, hallucinations are not charming—they’re liabilities. KGs tuned via RD give LLMs a safer backbone.

4. A new benchmark for AI-powered courseware

Tools like Cognaptus, which already focus on structured business automation, can leverage the same principle:

- use KGs as compressed knowledge substrates,

- optimize for minimal distortion under resource budgets,

- deploy LLMs downstream for generation tasks with guardrails.

In enterprise contexts, this means creating “optimal complexity” knowledge structures that human teams can trust and maintain.

Conclusion — Toward Information-Theoretic AI Education

This paper doesn’t just tweak knowledge graphs—it reframes them. By treating KG construction as a compression problem and aligning lecture materials using optimal transport, the authors establish a rigorous, elegant mechanism to balance conciseness and fidelity.

The result is a cleaner KG and a question-generation pipeline that scores higher on most of the evaluated quality criteria.

The lesson extends far beyond classrooms: when knowledge systems grow without guardrails, we get bloat, fragility, and confusion. Rate–distortion thinking offers a cure.

Cognaptus: Automate the Present, Incubate the Future.