Why This Matters Now

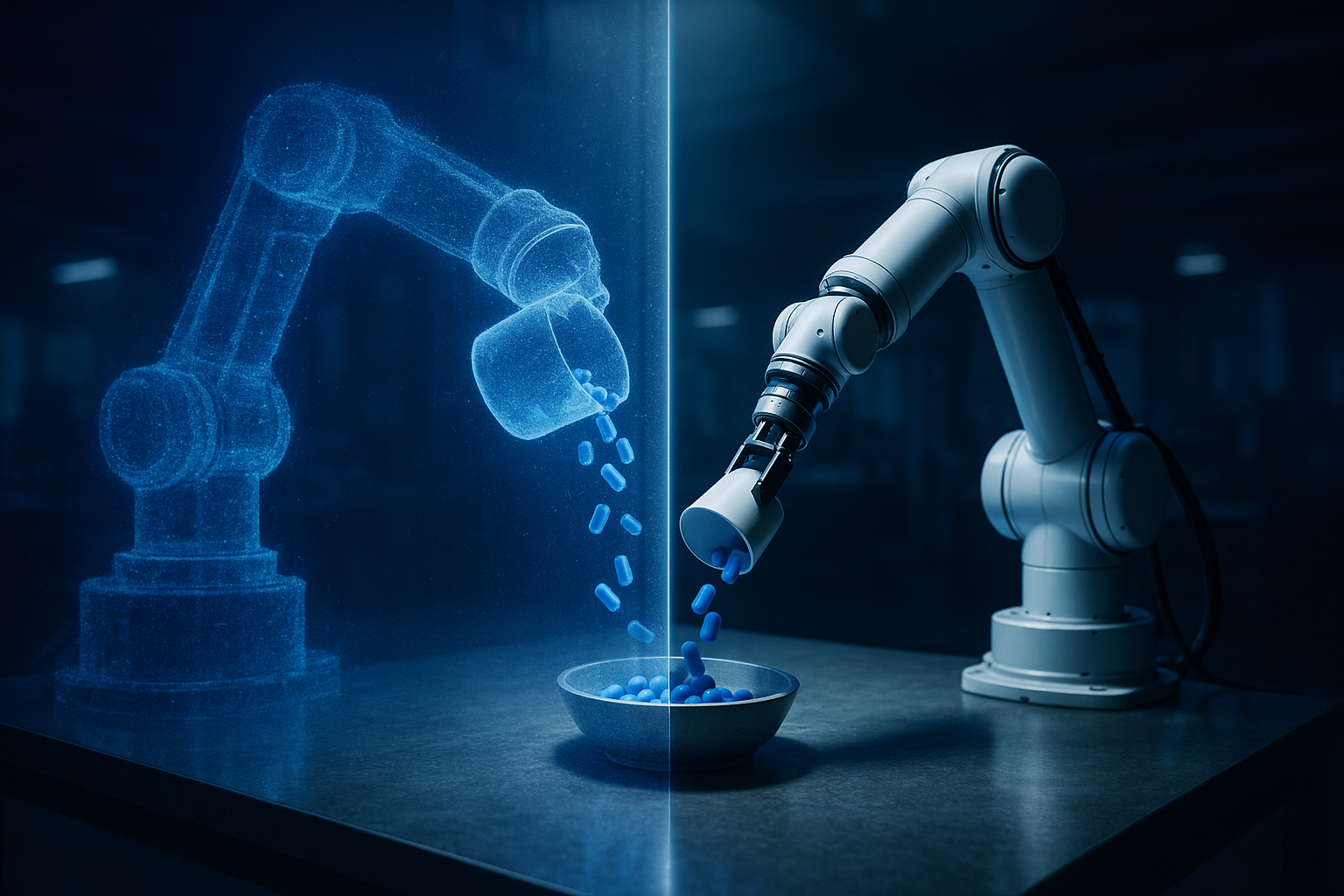

The robotics world has spent years fantasizing about a shortcut: take a generative video model, tell it “pour the tomatoes into the plate,” and somehow—magically—the robot just… does it. But there’s a quiet problem no one likes to admit. Videos are visually plausible, not physically correct. A model can show a tomato pour; it cannot guarantee the tomato isn’t clipping through the pan in frame six.

PhysWorld, the research paper at the center of today’s analysis, tackles this gap with admirable ambition: replace the magic with mechanics. If generative video gives you a dream, PhysWorld tries to turn that dream into a simulation solid enough for a robot to wake up in and actually practice the task.

Background — The Old Promise, The Old Pain

For years, two camps argued over how to make robots learn from video:

- Camp A: Use massive real-world datasets to map video frames to actions.

- Camp B: Skip the datasets—just imitate the pixel motions using optical flow, tracks, or poses.

Both approaches suffer from the same foundational problem: videos are a poor proxy for physics. They don’t contain mass, friction, gravity, object thickness, or the inconvenient real-world requirement that two solids should not occupy the same space.

This is where PhysWorld breaks with tradition. It treats video not as an instruction manual, but as a visual sketch from which a physical world model must be reconstructed, grounded, debugged, and used for reinforcement learning.

Analysis — What PhysWorld Actually Does

PhysWorld unfolds in three ambitious steps:

1. Generate a Task Video

Given a single RGB-D image and a language prompt, a video generator (e.g., Veo3) creates a short demonstration. The system is surprisingly pragmatic here: it doesn’t pretend generative video is reliable. It simply uses the video as a starting point.

2. Reconstruct a Physical World From That Video

This is the technical heart of the paper and its central claim: a physically usable scene can be recovered from a generated video.

PhysWorld:

- Constructs a metric-aligned 4D point cloud from video frames.

- Uses image-to-3D generators to create complete object meshes.

- Recovers background surfaces using inpainting and geometry assumptions.

- Aligns the entire scene to gravity.

- Performs collision optimization so objects aren’t floating, fused, or subtly inside each other.
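The gravity-alignment and collision steps in the list above are easy to state and easy to get subtly wrong. What follows is a minimal sketch under strong simplifying assumptions, not PhysWorld’s procedure. It assumes the support surface (table or floor) is a single plane whose points are already segmented and that objects are rigid point sets. It also reduces “collision optimization” to translating each object along gravity until its lowest point touches the surface. The helper names `fit_plane`, `rotation_between` and `gravity_align` are hypothetical.

```python
import numpy as np


def fit_plane(points: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Least-squares plane through an (N, 3) point set: returns (centroid, unit normal)."""
    centroid = points.mean(axis=0)
    # The right-singular vector with the smallest singular value is the plane normal.
    _, _, vt = np.linalg.svd(points - centroid)
    return centroid, vt[-1]


def rotation_between(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """3x3 rotation matrix that maps direction a onto direction b (Rodrigues' formula)."""
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b)
    v = np.cross(a, b)
    c = float(np.dot(a, b))
    if np.isclose(c, -1.0):
        # Opposite vectors: rotate 180 degrees about any axis perpendicular to a.
        axis = np.cross(a, [1.0, 0.0, 0.0])
        if np.linalg.norm(axis) < 1e-6:
            axis = np.cross(a, [0.0, 1.0, 0.0])
        axis = axis / np.linalg.norm(axis)
        return 2.0 * np.outer(axis, axis) - np.eye(3)
    vx = np.array([[0.0, -v[2], v[1]],
                   [v[2], 0.0, -v[0]],
                   [-v[1], v[0], 0.0]])
    return np.eye(3) + vx + vx @ vx / (1.0 + c)


def gravity_align(support, objects, up_hint=(0.0, 0.0, 1.0)):
    """Rotate the scene so the support plane is horizontal at z = 0, then settle each object onto it."""
    centroid, normal = fit_plane(support)
    # SVD gives the normal up to sign; pick the sign that agrees with the caller's rough "up".
    if np.dot(normal, up_hint) < 0:
        normal = -normal
    R = rotation_between(normal, np.array([0.0, 0.0, 1.0]))

    def to_world(p):
        # Row-vector convention: move the plane centroid to the origin, then rotate.
        return (p - centroid) @ R.T

    aligned_support = to_world(support)
    aligned_objects = []
    for obj in objects:
        o = to_world(obj)
        # Crude stand-in for collision optimization: translate along gravity so the lowest
        # point rests exactly on z = 0 (neither floating above nor sunk into the surface).
        o[:, 2] -= o[:, 2].min()
        aligned_objects.append(o)
    return aligned_support, aligned_objects


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # A tilted "table": points on the plane z = 0.2 x + 0.1.
    xy = rng.uniform(-1.0, 1.0, size=(200, 2))
    table = np.column_stack([xy, 0.2 * xy[:, 0] + 0.1])
    # A small box floating well above the table.
    box = rng.uniform(-0.1, 0.1, size=(50, 3)) + np.array([0.0, 0.0, 0.5])
    support, (box_aligned,) = gravity_align(table, [box])
    print("max |z| on table:", np.abs(support[:, 2]).max())  # floating-point noise only
    print("box lowest z:", box_aligned[:, 2].min())
```

A real collision optimizer would also resolve object-object interpenetration and work with full meshes rather than sampled points. This sketch only handles the “floating” and “sunk into the table” cases.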

The end result is a digital twin built from the input image and the generated video, complete enough to run in a physics simulator.

3. Learn Robot Actions with Residual RL

Rather than blindly imitating the video, the robot learns to track object poses under physical constraints. PhysWorld uses residual reinforcement learning: start from a baseline (grasp selection plus motion planning), then learn small corrective adjustments on top of it.

This keeps the robot from drifting into chaos while still giving it flexibility to overcome planning failures.
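The residual idea is compact enough to show directly. The following is a generic residual-RL sketch, not code from the paper. Here `base_action` stands in for the planner’s output, and `residual` for the learned policy’s raw output in [-1, 1]. `residual_scale` (a hypothetical 0.05 here) caps how far the policy may move away from the plan in each action dimension.

```python
import numpy as np


def compose_action(base_action, residual, residual_scale=0.05, low=-1.0, high=1.0):
    """Residual-RL action: the planner's action plus a small, bounded learned correction.

    base_action    -- action from the baseline (e.g. grasp selection + motion planner)
    residual       -- raw policy output, treated as lying in [-1, 1]
    residual_scale -- hypothetical cap on the per-dimension deviation from the plan
    low, high      -- normalized action limits of the robot
    """
    base = np.asarray(base_action, dtype=float)
    correction = residual_scale * np.clip(np.asarray(residual, dtype=float), -1.0, 1.0)
    return np.clip(base + correction, low, high)


if __name__ == "__main__":
    base = np.array([0.30, -0.10, 0.25])  # e.g. a normalized end-effector target from the planner
    # With a zero residual the agent reproduces the planner exactly.
    print(compose_action(base, np.zeros(3)))
    # Even an extreme residual moves each dimension by at most residual_scale.
    print(compose_action(base, np.array([1.0, -1.0, 0.5])))
```

The bound is what delivers the “no drifting into chaos” property. An untrained policy reproduces the planner, and even a badly trained one can only perturb it by `residual_scale` per dimension.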

Findings — What the Data Tells Us

The study evaluates PhysWorld across 10 real-world manipulation tasks. Three major insights emerge.

1. Video Generation Quality Matters

Usable demonstration rate across models:

| Model | Usable Video Rate |
|---|---|
| Veo3 | 70% |
| Tesseract | 36% |
| CogVideoX-5B | 4% |
| Cosmos-2B | 2% |

If your video generator hallucinates hands, distorts objects, or lets geometry shift between frames, the physical reconstruction falls apart. Higher-quality video models dramatically improve downstream success.
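To see why this spread matters in practice, here is a back-of-the-envelope calculation that does not appear in the paper. It asks how many generations each model would need for a 95% chance of at least one usable demonstration. The simplifying assumption is that each generation is usable independently, at the rates in the table above.

```python
import math

# Usable-video rates from the table above.
USABLE_RATE = {"Veo3": 0.70, "Tesseract": 0.36, "CogVideoX-5B": 0.04, "Cosmos-2B": 0.02}


def generations_needed(p: float, confidence: float = 0.95) -> int:
    """Smallest k such that P(at least one usable video in k tries) >= confidence.

    Assumes each generation is usable independently with probability p.
    Solves 1 - (1 - p)**k >= confidence, i.e. k >= log(1 - confidence) / log(1 - p).
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    if p == 1.0:
        return 1
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p))


for model, p in USABLE_RATE.items():
    print(f"{model:>13}: {generations_needed(p)} generations")
```

Under that assumption the answer is 3 generations for Veo3, 7 for Tesseract, 74 for CogVideoX-5B and 149 for Cosmos-2B. Each generation is an expensive video-model call, so the gap is a cost difference, not just a quality difference.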

2. Physical World Modeling Improves Robustness

Success rate across 10 tasks (illustrative summary):

| Method | Avg Success Rate |
|---|---|
| PhysWorld | 82% |
| RIGVid | 67% |
| Gen2Act | 58% |
| AVDC | 44% |

This is the central finding: physics feedback reduces cascading failure modes—especially bad grasps, inaccurate motions, and dynamics mismatches.

3. Object-Centric Learning Beats Embodiment Imitation

Robots don’t need to copy human or hallucinated hand motions. They need to move the object.

| Task | Embodiment-Centric | Object-Centric |
|---|---|---|
| Put book in shelf | 30% | 90% |
| Put shoe in box | 10% | 80% |

Generated hand motions are unreliable; object motion is stable. This is a welcome shift in robotic learning paradigms.
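Object-centric learning can be made concrete as a reward that scores only the object’s pose against the reference trajectory recovered from the video. This is an illustrative sketch, not the paper’s reward. The exponential shaping, the `pos_scale` (meters) and `rot_scale` (radians) values, and the (w, x, y, z) quaternion order are all assumptions.

```python
import numpy as np


def quat_angle(q1, q2) -> float:
    """Geodesic angle in radians between the rotations encoded by two quaternions."""
    q1 = np.asarray(q1, dtype=float) / np.linalg.norm(q1)
    q2 = np.asarray(q2, dtype=float) / np.linalg.norm(q2)
    # abs() because q and -q encode the same rotation; min() guards against rounding above 1.
    return 2.0 * float(np.arccos(min(abs(float(np.dot(q1, q2))), 1.0)))


def object_tracking_reward(obj_pos, obj_quat, ref_pos, ref_quat,
                           pos_scale=0.05, rot_scale=0.5) -> float:
    """Object-centric reward in (0, 1]: equals 1 when the simulated object exactly matches
    the reference pose for this timestep. The robot's own pose never enters the reward."""
    pos_err = float(np.linalg.norm(np.asarray(obj_pos, dtype=float) - np.asarray(ref_pos, dtype=float)))
    rot_err = quat_angle(obj_quat, ref_quat)
    return float(np.exp(-pos_err / pos_scale - rot_err / rot_scale))


if __name__ == "__main__":
    # Hypothetical reference pose for one timestep of the reconstructed object trajectory.
    ref_pos, ref_quat = [0.4, 0.0, 0.1], [1.0, 0.0, 0.0, 0.0]
    print(object_tracking_reward([0.4, 0.0, 0.1], ref_quat, ref_pos, ref_quat))   # perfect tracking
    print(object_tracking_reward([0.45, 0.0, 0.1], ref_quat, ref_pos, ref_quat))  # 5 cm off
```

Because no term mentions the gripper or a hand, the policy is free to use whatever grasp works for the robot. An embodiment-centric reward would take exactly that freedom away.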

Implications — Why This Matters for Businesses

PhysWorld isn’t just a robotics paper. It signals three deeper trends:

1. Generative AI Will Be Paired With Physical Simulators by Default

Business leaders hoping to leverage robot learning pipelines must understand: video alone is insufficient. Physical grounding will become a standard requirement.

2. Data Collection Costs Will Fall Dramatically

If robots can learn from generated demonstrations instead of human teleoperation, one of the costliest parts of many robotics workflows, collecting task-specific demonstrations, could become far cheaper.

3. RL Pipelines Will Become Modular Layers, Not End-to-End Systems

Residual RL demonstrates a mature philosophy: let classical planning do the obvious steps, and let RL correct for physics.

This aligns well with enterprise adoption: reliability first, learning second.

Conclusion — The Quiet Maturity of Robot Learning

PhysWorld is not flashy. It does not promise robots that “just learn from YouTube.” Instead, it adds the missing scaffolding: physics, reconstruction, optimization, and restrained RL.

It is the kind of work that makes automation boringly reliable—which is perhaps the highest compliment in robotics.

Cognaptus: Automate the Present, Incubate the Future.