Opening — Why this matters now

Educational AI is racing ahead, but one of its most persistent blind spots is strangely analog: diagrams. Students rely on visuals—triangles, prisms, angles, proportions—yet most AI systems still operate as if the world is text-only. When LLMs do attempt diagram generation, the experience often feels like asking a cat to do architectural drafting.

This gap matters. If AI is going to scale from chatbots to true instructional assistants, it must see, interpret, and evaluate diagrams with mathematical discipline—not vibes.

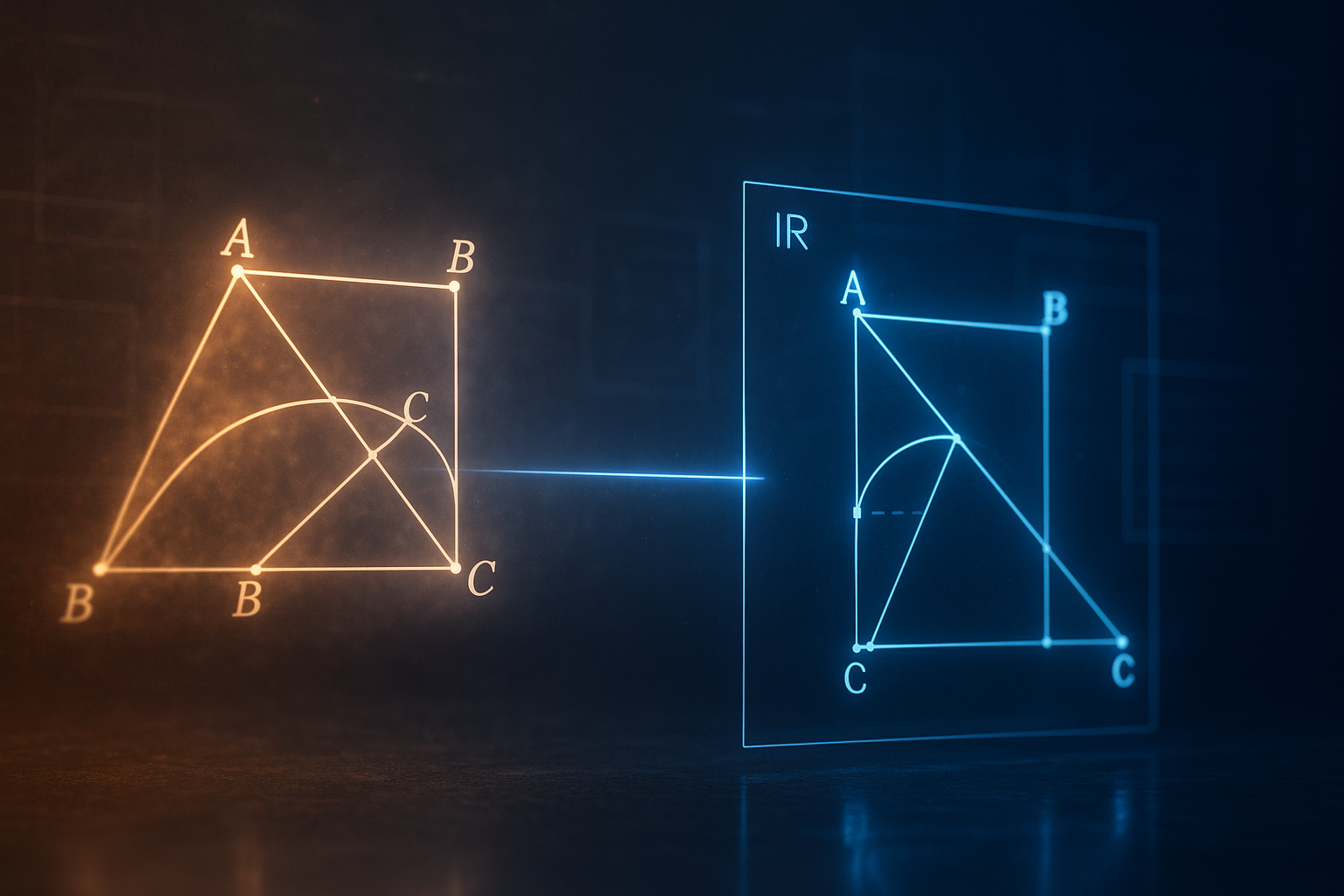

A new paper, DiagramIR: An Automatic Pipeline for Educational Math Diagram Evaluation, offers a sober and surprisingly elegant solution. Instead of pushing multimodal reasoning harder, the authors turn the problem inside-out: translate the diagram's TikZ source back into structured geometry, then judge that structure symbolically.

Quiet fix. Large impact.

Background — Context and prior art

Early attempts at evaluating AI‑generated math diagrams relied on:

- Human raters, who are accurate but slow and expensive.

- LLM‑as‑a‑Judge, which works for text tasks but collapses when geometry, scale, spatial layout, or proportions enter the equation.

- Multimodal LLMs, which remain unreliable on visual math, as benchmarks such as MathVista and MathVerse show.

The core issue is simple: math diagrams are structured objects; LLMs treat them as noisy pixel soups.

DiagramIR’s insight is to break this stalemate with an approach borrowed from compilers and neural machine translation: back‑translation into an intermediate representation (IR).

Analysis — What the paper actually does

The pipeline evaluates the mathematical and spatial correctness of diagrams produced from TikZ code. Rather than judging the rendered image, it:

- Parses TikZ → IR using an LLM.

- Runs deterministic geometric checks on the IR.

- Outputs pass/fail decisions on criteria like closed shapes, angle-label consistency, proportions, framing, readability, and overlaps.
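A minimal Python sketch of that three-step shape follows. It is an illustration under stated assumptions, not DiagramIR's code: the paper uses an LLM for the TikZ → IR step, and here a tiny regex parser (the hypothetical `parse_tikz_to_ir`) stands in for it, understanding only simple `\draw ... ;` paths and `\node at (x,y) {text};` labels. The IR layout, the check names, and the frame bounds are all invented for illustration.

```python
import re
from dataclasses import dataclass, field

# Hypothetical IR for illustration only (not DiagramIR's real schema).
@dataclass
class Path:
    vertices: list[tuple[float, float]]
    closed: bool

@dataclass
class Label:
    text: str
    at: tuple[float, float]

@dataclass
class DiagramIR:
    paths: list[Path] = field(default_factory=list)
    labels: list[Label] = field(default_factory=list)

# Matches a TikZ coordinate such as (2,-0.5).
COORD = r"\(\s*(-?[\d.]+)\s*,\s*(-?[\d.]+)\s*\)"

def parse_tikz_to_ir(tikz: str) -> DiagramIR:
    """Step 1 stand-in: the paper uses an LLM here; this regex handles only
    simple draw paths and positioned node labels."""
    ir = DiagramIR()
    for stmt in (s.strip() for s in tikz.split(";")):
        if stmt.startswith(r"\draw"):
            pts = [(float(x), float(y)) for x, y in re.findall(COORD, stmt)]
            ir.paths.append(Path(pts, closed=stmt.endswith("cycle")))
        elif stmt.startswith(r"\node"):
            m = re.search(COORD + r"\s*\{([^}]*)\}", stmt)
            if m:
                ir.labels.append(Label(m.group(3), (float(m.group(1)), float(m.group(2)))))
    return ir

def check_closed_shapes(ir: DiagramIR) -> bool:
    # A path is closed if it ends with "-- cycle" or returns to its first vertex.
    return all(p.closed or (len(p.vertices) > 2 and p.vertices[0] == p.vertices[-1])
               for p in ir.paths)

def check_framing(ir: DiagramIR, frame=(-1.0, -1.0, 10.0, 10.0)) -> bool:
    # Every vertex and label anchor must lie inside an assumed canvas frame.
    xmin, ymin, xmax, ymax = frame
    points = [v for p in ir.paths for v in p.vertices] + [lab.at for lab in ir.labels]
    return all(xmin <= x <= xmax and ymin <= y <= ymax for x, y in points)

def evaluate(tikz: str) -> dict[str, bool]:
    ir = parse_tikz_to_ir(tikz)   # step 1: parse TikZ into IR
    return {                      # steps 2-3: deterministic checks, pass/fail out
        "closed_shapes": check_closed_shapes(ir),
        "framing": check_framing(ir),
    }

print(evaluate(r"\draw (0,0) -- (4,0) -- (0,3) -- cycle; \node at (2,-0.5) {4};"))
# {'closed_shapes': True, 'framing': True}
```

Swapping the regex for an LLM call would change only `parse_tikz_to_ir`; the checks downstream stay identical, which is the point of the split.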

This decoupling has several advantages:

- Parsing (semantic extraction) uses the LLM.

- Verification (geometry checking) avoids LLMs entirely.

- Errors become explicit and auditable.

- Even small models can match frontier models used as judges, at a fraction of the cost.

In short: use the LLM as a compiler front‑end, not a judge.

Why a structured IR works

The IR functions as a low‑entropy pivot language:

- Shapes become ordered lists of vertices.

- Labels become nodes.

- Angles become arcs.

- Scaling, transformations, and bounding boxes become numeric constraints.
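One hypothetical way to encode those mappings as Python dataclasses is sketched below. The class names (`Polygon`, `TextNode`, `AngleArc`, `Diagram`) are illustrative assumptions, not the paper's schema. Note how a TikZ-style scale becomes plain arithmetic on coordinates, and the bounding box becomes a set of numbers that later checks can compare against.

```python
from dataclasses import dataclass

Point = tuple[float, float]

# Hypothetical IR classes, one per mapping in the list above.
@dataclass
class Polygon:       # a shape: ordered vertices
    vertices: list[Point]

@dataclass
class TextNode:      # a label: text anchored at a position
    text: str
    at: Point

@dataclass
class AngleArc:      # an angle: an arc at `vertex` between rays to `start` and `end`
    vertex: Point
    start: Point
    end: Point
    label: str

@dataclass
class Diagram:
    polygons: list[Polygon]
    nodes: list[TextNode]
    arcs: list[AngleArc]

    def scaled(self, k: float) -> "Diagram":
        """Apply a uniform scale numerically, so checks see final coordinates."""
        def s(p: Point) -> Point:
            return (p[0] * k, p[1] * k)
        return Diagram(
            [Polygon([s(v) for v in poly.vertices]) for poly in self.polygons],
            [TextNode(n.text, s(n.at)) for n in self.nodes],
            [AngleArc(s(a.vertex), s(a.start), s(a.end), a.label) for a in self.arcs],
        )

    def bounding_box(self) -> tuple[float, float, float, float]:
        """(xmin, ymin, xmax, ymax) over polygon vertices and label anchors."""
        pts = [v for poly in self.polygons for v in poly.vertices] + [n.at for n in self.nodes]
        xs = [x for x, _ in pts]
        ys = [y for _, y in pts]
        return (min(xs), min(ys), max(xs), max(ys))

tri = Diagram([Polygon([(0, 0), (4, 0), (0, 3)])],
              [TextNode("5", (2.2, 1.7))],
              [AngleArc((0, 0), (4, 0), (0, 3), "90°")])
print(tri.scaled(2).bounding_box())  # (0, 0, 8, 6)
```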

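Once diagrams are expressed in IR, the checks become deterministic. The checks themselves cannot hallucinate; they are just geometry. Whatever error remains comes from the parsing step, and it surfaces in the IR, where it can be inspected.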
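To show what "deterministic" can mean in practice, the sketch below implements three of the criteria the pipeline reports (angle-label consistency, overlaps, proportions) as pure functions over coordinates. The function names, the 1° angle tolerance, and the 5% proportion tolerance are assumptions for illustration; the paper's actual rules and thresholds may differ.

```python
import math

Point = tuple[float, float]
Box = tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)

def angle_deg(vertex: Point, a: Point, b: Point) -> float:
    """Angle at `vertex` between the rays toward `a` and `b`, in degrees."""
    ax, ay = a[0] - vertex[0], a[1] - vertex[1]
    bx, by = b[0] - vertex[0], b[1] - vertex[1]
    norm = math.hypot(ax, ay) * math.hypot(bx, by)
    if norm == 0:
        raise ValueError("degenerate angle: a ray has zero length")
    cos_t = (ax * bx + ay * by) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))  # clamp float noise

def angle_label_consistent(vertex: Point, a: Point, b: Point,
                           labeled_deg: float, tol: float = 1.0) -> bool:
    """Pass if the drawn angle matches its label within `tol` degrees (assumed tolerance)."""
    return abs(angle_deg(vertex, a, b) - labeled_deg) <= tol

def boxes_overlap(p: Box, q: Box) -> bool:
    """Axis-aligned boxes overlap if they intersect on both axes; touching edges do not count."""
    return p[0] < q[2] and q[0] < p[2] and p[1] < q[3] and q[1] < p[3]

def no_label_overlaps(boxes: list[Box]) -> bool:
    """Pass if no two label bounding boxes overlap (pairwise comparison)."""
    return not any(boxes_overlap(boxes[i], boxes[j])
                   for i in range(len(boxes)) for j in range(i + 1, len(boxes)))

def proportions_consistent(drawn: list[float], labeled: list[float],
                           rel_tol: float = 0.05) -> bool:
    """Pass if drawn lengths are one common multiple of the (positive) labeled lengths."""
    ratios = [d / lab for d, lab in zip(drawn, labeled)]
    return max(ratios) - min(ratios) <= rel_tol * min(ratios)

# A 3-4-5 right triangle drawn at half the labeled size, with a right-angle mark.
print(angle_label_consistent((0, 0), (4, 0), (0, 3), 90))  # True
print(proportions_consistent([4, 3, 5], [8, 6, 10]))       # True
print(proportions_consistent([4, 3, 5], [3, 4, 5]))        # False (labels swapped)
```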

Findings — Results with visualization

The headline result: Back‑translation beats LLM‑as‑a‑Judge across all model sizes.

Comparison of agreement with human ratings

| Model | Back‑translation κ | LLM‑Judge κ (code+image) | Cost and notes |
|---|---|---|---|
| GPT‑4.1 | 0.562 | 0.399 | Back‑translation costs ~2× as much as LLM‑Judge, but agrees better with humans |
| GPT‑5 | 0.555 | 0.498 | Back‑translation still better |
| GPT‑4.1 Mini | 0.483 | 0.388 | ~10× cheaper than GPT‑5, with agreement comparable to GPT‑5 as LLM‑Judge (0.498) |
| GPT‑5 Mini | 0.527 | 0.465 | Back‑translation beats GPT‑5 as LLM‑Judge (0.498) |
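The κ values measure chance-corrected agreement between an evaluator's pass/fail verdicts and the human ratings: 0 means no better than chance, 1 means perfect agreement. The post does not say which κ variant is used; assuming Cohen's κ over binary labels, κ = (p_o − p_e) / (1 − p_e) can be computed as below. The verdict lists are made-up data, not the paper's.

```python
def cohens_kappa(a: list[bool], b: list[bool]) -> float:
    """Cohen's kappa for two raters giving pass/fail verdicts on the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n   # observed agreement
    pa, pb = sum(a) / n, sum(b) / n                # each rater's pass rate
    p_e = pa * pb + (1 - pa) * (1 - pb)           # agreement expected by chance
    if p_e == 1:
        return float("nan")  # undefined: both raters give one identical constant verdict
    return (p_o - p_e) / (1 - p_e)

# Made-up verdicts on 8 diagrams (not data from the paper).
human = [True, True, True, False, False, True, False, True]
pipeline = [True, True, False, False, False, True, True, True]
print(round(cohens_kappa(human, pipeline), 3))  # 0.467
```

Here the raters agree on 6 of 8 items (75%), but because both pass 5 of 8 diagrams, about 53% agreement is expected by chance, so κ lands well below the raw agreement rate. scikit-learn's `sklearn.metrics.cohen_kappa_score` computes the same statistic.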

Interpretation

- The IR pipeline gives small models superpowers. They no longer need to “understand” an image—only translate code into structured geometry.

- LLM‑as‑a‑Judge fails especially on spatial criteria like framing and overlaps.

- Rule‑based checks are ruthlessly consistent; the LLM only handles translation.

This is a glimpse of a future where domain‑specific evaluation pipelines outperform general multimodal reasoning.

Implications — Why this matters for business and AI practitioners

The DiagramIR approach generalizes far beyond math education:

1. Evaluation by structure, not opinion

Most AI outputs have a latent structure—schemas, rules, workflows, layouts. Back‑translation allows you to:

- Convert messy outputs into structured IRs

- Run deterministic checks

- Remove subjective LLM judgments

In AI governance and enterprise QA, this pattern is gold.
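A hypothetical sketch of the same pattern outside diagrams: an AI-generated workflow is assumed to have already been parsed into a dict-based IR, and a small rule runner records a named, explained verdict for every check, which is what makes the result auditable. The workflow format, the rule names, and `run_rules` are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class CheckResult:
    rule: str
    passed: bool
    detail: str  # human-readable reason, kept for auditing

# A rule takes the IR and returns (passed, explanation).
Rule = Callable[[dict], tuple[bool, str]]

def run_rules(ir: dict, rules: dict[str, Rule]) -> list[CheckResult]:
    """Apply every named rule to the same IR and record each verdict."""
    return [CheckResult(name, *rule(ir)) for name, rule in rules.items()]

# Hypothetical rules over a workflow IR: {"steps": {step_name: [dependencies]}}.
def deps_exist(ir: dict) -> tuple[bool, str]:
    missing = [(s, d) for s, deps in ir["steps"].items() for d in deps if d not in ir["steps"]]
    return (not missing, f"missing dependencies: {missing}" if missing else "all dependencies defined")

def has_entry_point(ir: dict) -> tuple[bool, str]:
    roots = [s for s, deps in ir["steps"].items() if not deps]
    return (bool(roots), f"entry points: {roots}")

# Suppose an LLM produced a workflow and a parser turned it into this dict.
workflow = {"steps": {"fetch": [], "clean": ["fetch"], "report": ["clean", "chart"]}}
for result in run_rules(workflow, {"deps_exist": deps_exist, "has_entry_point": has_entry_point}):
    print(result)  # deps_exist fails and names the undefined "chart" step
```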

2. Small models suddenly become cost‑efficient workhorses

With DiagramIR:

- GPT‑4.1 Mini with back‑translation agrees with human raters about as well as GPT‑5 used as an LLM judge (κ 0.483 vs 0.498).

- Inference cost drops by roughly an order of magnitude.

For any AI company looking to deploy at scale (hello, EdTech margins), this is the difference between “interesting R&D” and “sustainable product.”

3. Hybrid symbolic–neural pipelines are making a comeback

LLMs are powerful, but brittle. Symbolic checks are rigid, but reliable.

Together, they produce scalable assurance—exactly what enterprise AI deployments need.

This echoes a broader industry shift: agentic systems that delegate perception to LLMs and verification to symbolic engines.

Conclusion

DiagramIR is not flashy (no 10B‑parameter model, no photorealistic visual reasoning), but it’s strategically important. It shows that many of AI’s reliability problems can be solved by structuring the evaluation problem, not by enlarging the model.

Sometimes, the winning move is simply to draw the lines cleanly.

Cognaptus: Automate the Present, Incubate the Future.