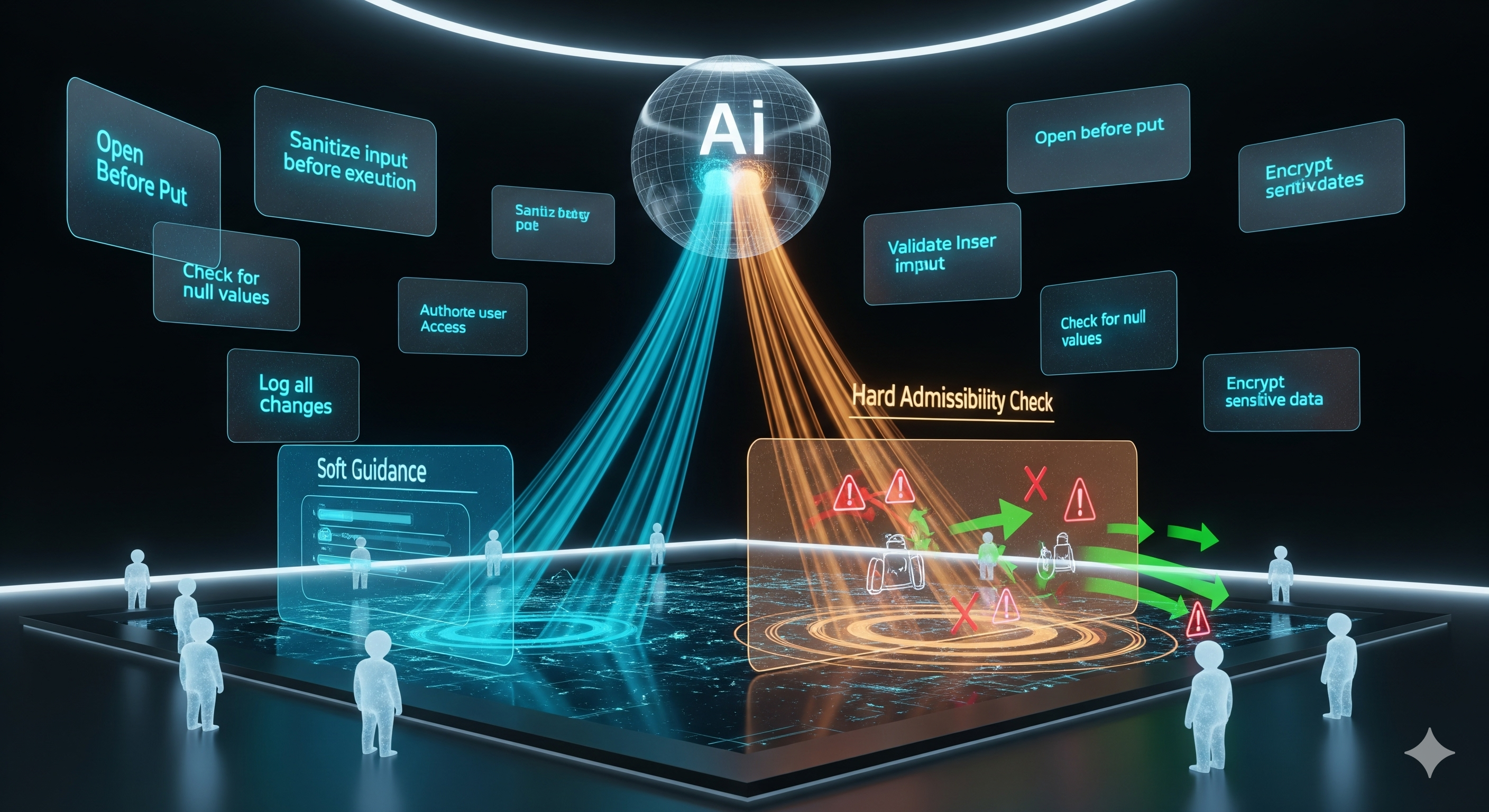

Enterprise buyers love what agents can do—and fear what they might do. Meta‑Policy Reflexion (MPR) proposes a middle path: keep your base model frozen, but bolt on a reusable, structured memory of “what we learned last time” and a hard admissibility check that blocks invalid actions at the last mile. In plain English: teach the agent house rules once, then make sure it obeys them, everywhere, without re‑training.

The big idea in one slide (text version)

- What it adds: a compact, predicate‑like Meta‑Policy Memory (MPM) distilled from past reflections (e.g., “Never pour liquid on a powered device; unplug first.”)

- How it’s used:

  - Soft guidance: inject relevant rules into the prompt to bias decoding toward safe/valid actions.

  - Hard Admissibility Check (HAC): a post‑generation filter that rejects actions violating domain constraints.

- Why it matters: higher safety and transfer across tasks without weight updates or RL pipelines.
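To make the two usage modes concrete, here is a minimal sketch of one agent step. It is not the paper's implementation: the `Rule` class, `build_prompt`, `act`, the retry count, and the `"noop"` fallback are all hypothetical names chosen for illustration, and the `violates` check is a toy string test standing in for a real validator. `generate` is any callable that maps a prompt to an action string, so a frozen LLM can be swapped in.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Rule:
    text: str                         # natural-language rule used for soft guidance
    violates: Callable[[str], bool]   # hard check: True if the action breaks the rule


def build_prompt(task: str, rules: List[Rule]) -> str:
    """Soft guidance: prepend the rule texts to the task prompt."""
    checklist = "\n".join(f"- {r.text}" for r in rules)
    return f"Rules:\n{checklist}\n\nTask: {task}\nNext action:"


def act(task: str, rules: List[Rule], generate: Callable[[str], str],
        max_retries: int = 2, fallback: str = "noop") -> str:
    """Generate an action, veto it if any rule is violated, retry, then fall back."""
    prompt = build_prompt(task, rules)
    for _ in range(max_retries + 1):
        action = generate(prompt)
        broken = [r for r in rules if r.violates(action)]
        if not broken:
            return action  # passed the hard admissibility check
        # Feed the violated rules back so the next attempt is more constrained.
        reasons = "; ".join(r.text for r in broken)
        prompt += f"\nRejected: {action!r} violates: {reasons}\nNext action:"
    return fallback  # safe no-op when every attempt was vetoed


# Toy rule (assumption): any action starting with "pour" counts as a violation.
rules = [Rule("Never pour liquid on a powered device; unplug first.",
              lambda a: a.startswith("pour"))]
```

The model weights are never touched in this sketch. The memory only changes the prompt, and the veto only changes which generated action is allowed through.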

Why MPR is different (and useful) for business

Most reflective agents (ReAct/Reflexion) generate free‑form notes that die with the episode. MPR keeps the takeaways, converts them into short, checkable rules, and applies them in future contexts. That unlocks three concrete wins for enterprise deployments:

- Safety by construction. HAC acts like a circuit breaker: even if the LLM drifts, invalid API calls, out‑of‑scope file ops, or non‑compliant steps are blocked.

- Opex, not Capex. No fine‑tuning loops. Your agent improves via memory updates (cheap tokens) instead of parameter updates (GPU time + MLOps overhead).

- Transfer that actually transfers. The same rule (“sanitize SQL before execution”) can help across CRM, ERP, and analytics bots.

Quick scoreboard

| Approach | Improves with experience | Cross‑task transfer | Safety guarantees | Infra cost |
|---|---|---|---|---|
| Reflexion (free‑form notes) | Per episode | Limited (notes aren’t reused) | Soft only (prompt bias) | Low |
| RL / fine‑tuning | Yes (policy learning) | Good if tasks align | Indirect (via learned behavior) | High |
| MPR (this work) | Yes (rule consolidation) | Strong (predicate rules are portable) | Soft + Hard (HAC blocks invalids) | Low (no weight updates) |

What the paper shows (and how to interpret it)

Using a text‑based AlfWorld setup and a frozen LLM policy, the authors:

- Maintain an MPM that accrues predicate‑style rules from failed trajectories.

- Use the MPM at inference time for soft guidance and apply HAC to veto invalid actions.

- Report faster training‑set convergence versus Reflexion and better single‑shot test accuracy when transferring the learned memory; adding HAC delivers the best robustness.

Interpretation for buyers: AlfWorld is a clean testbed that rewards structural rules (“open before put”; “pick up before carry”). Real stacks (APIs, privacy walls, flaky data) are messier. Yet the architectural bet is sound: encode what you’d put in a SOC 2 runbook as machine‑readable rules and enforce them at the action boundary.

An implementation blueprint you can ship this quarter

- Schema your memory. Treat each rule as `{predicate(args), scope, preconditions, postconditions, confidence, provenance}`. Store in a tiny vector DB or SQLite; latency matters more than recall.

- Add a reflection hook. On failures, call a small LLM to propose rules; de‑dup, collapse synonyms, and cap by domain. Human‑in‑the‑loop for anything that will become a hard constraint.

- Retrieve narrowly. At step t, fetch only rules whose scopes match the current tool, resource, tenant, or PII tier.

- Prompt for soft guidance. Inject a compact checklist (3–7 rules). Avoid long essays; you want bias, not overwhelm.

- HAC at the edge. Wrap every tool call with validators (JSON schema, role‑based resource ACLs, rate limits, regex for secrets, org‑specific policy). If rejected, either: (a) regenerate with stricter memory, or (b) fall back to a safe no‑op plus apology.

- Audit and roll‑forward. Log `(state, action, rules_applied, HAC_result, outcome)`; promote rules that repeatedly help; retire brittle ones.
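The first few blueprint steps (schema, narrow retrieval, compact checklist) can be sketched with nothing but the standard library. This is one possible layout under stated assumptions, not a reference design: the table name, the helper names (`open_memory`, `add_rule`, `retrieve`, `checklist`), and the flat `"tool:sql"` style scope string are hypothetical, and a real deployment would likely split scope into tool, resource, tenant, and PII tier.

```python
import json
import sqlite3


def open_memory(path=":memory:"):
    """Create the rule store; columns mirror the rule fields listed above."""
    db = sqlite3.connect(path)
    db.execute("""
        CREATE TABLE IF NOT EXISTS rules (
            predicate      TEXT NOT NULL,
            scope          TEXT NOT NULL,
            preconditions  TEXT NOT NULL,   -- JSON list
            postconditions TEXT NOT NULL,   -- JSON list
            confidence     REAL NOT NULL,
            provenance     TEXT NOT NULL,
            UNIQUE (predicate, scope)
        )""")
    return db


def add_rule(db, predicate, scope, pre, post, confidence, provenance):
    # INSERT OR IGNORE plus the UNIQUE constraint gives a crude de-dup:
    # a second rule with the same predicate and scope is silently dropped.
    db.execute(
        "INSERT OR IGNORE INTO rules VALUES (?, ?, ?, ?, ?, ?)",
        (predicate, scope, json.dumps(pre), json.dumps(post), confidence, provenance),
    )
    db.commit()


def retrieve(db, scope, k=5):
    """Narrow retrieval: only rules whose scope matches, highest confidence first."""
    rows = db.execute(
        "SELECT predicate FROM rules WHERE scope = ? "
        "ORDER BY confidence DESC, predicate LIMIT ?",
        (scope, k),
    ).fetchall()
    return [row[0] for row in rows]


def checklist(predicates):
    """Render the retrieved rules as a short bullet list for prompt injection."""
    return "\n".join(f"- {p}" for p in predicates)
```

Exact‑match retrieval on scope keeps lookups fast and predictable, which fits the "latency matters more than recall" advice. A vector index could replace `retrieve` later without changing the callers.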

HAC starter pack (examples you can copy)

- Data ops: deny `SELECT *` on PHI tables; enforce `LIMIT` unless `purpose=analytics_approved`.

- File ops: forbid writes outside `/workspace/${tenant}/`; require `dry_run=true` unless change ticket present.

- Network: block external POSTs unless domain is in `allowlist`; throttle by API tier.

- GenAI: prevent tool calls that include raw secrets; strip tokens through a secret scanner.
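Two of the starter rules above (file ops and network) are easy to express as plain validators. The sketch below is illustrative only: the function names and the `(allowed, reason)` return shape are assumptions, the path check is purely lexical (it does not resolve symlinks), and throttling, SQL inspection, and secret scanning are left out because they need real parsers or scanners.

```python
import posixpath
from urllib.parse import urlparse


def check_file_write(path, tenant, dry_run, change_ticket=None):
    """Allow writes only under /workspace/<tenant>/, and only as a dry run
    unless a change ticket is supplied. Returns (allowed, reason)."""
    root = f"/workspace/{tenant}/"
    # normpath collapses "..", so "/workspace/a/../b/x" is judged as "/workspace/b/x".
    resolved = posixpath.normpath(path)
    if not resolved.startswith(root):
        return False, "write outside tenant workspace"
    if not dry_run and not change_ticket:
        return False, "dry_run=true required without a change ticket"
    return True, "ok"


def check_external_post(url, allowlist):
    """Allow a POST only if the URL's host is in the allowlist. Returns (allowed, reason)."""
    host = urlparse(url).hostname or ""
    if host not in allowlist:
        return False, f"domain not in allowlist: {host or '<none>'}"
    return True, "ok"
```

Returning a reason alongside the verdict lets the caller log why an action was rejected and feed that reason back into a regeneration attempt.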

Where it breaks (and how to harden it)

- Rule explosion & conflicts. Mitigation: deduplicate via canonical predicates; score by utility; require provenance; run a nightly consistency check.

- Over‑constraining the agent. Symptom: higher “HAC‑rejected” rates, falling task completion. Fix: soften offending rules to warnings, or narrow scope.

- Domain drift. Rotate rules with data drift monitors (new tools, API versions, schema migrations) and re‑validate high‑impact constraints.
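The dedup and consistency mitigations can start very small. The sketch below assumes a hypothetical rule shape (a dict with `id`, `predicate`, `scope`, `mode`, and `confidence`, where `mode` is either `"require"` or `"forbid"`) and a deliberately naive canonical form that only normalizes case and whitespace. It is a pairwise check, far simpler than a solver, and would miss conflicts that only emerge from combinations of rules.

```python
import re
from itertools import combinations


def canonical(predicate):
    """Normalize case and whitespace so trivially different spellings collide."""
    return re.sub(r"\s+", "", predicate).lower()


def dedupe(rules):
    """Keep the highest-confidence rule per (canonical predicate, scope, mode)."""
    best = {}
    for r in rules:
        key = (canonical(r["predicate"]), r["scope"], r["mode"])
        if key not in best or r["confidence"] > best[key]["confidence"]:
            best[key] = r
    return list(best.values())


def find_conflicts(rules):
    """Flag pairs that require and forbid the same predicate in the same scope."""
    conflicts = []
    for a, b in combinations(rules, 2):
        same_target = (canonical(a["predicate"]) == canonical(b["predicate"])
                       and a["scope"] == b["scope"])
        if same_target and {a["mode"], b["mode"]} == {"require", "forbid"}:
            conflicts.append((a["id"], b["id"]))
    return conflicts
```

Running `dedupe` before `find_conflicts` in a nightly job keeps the conflict report short enough for a human to review.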

For Cognaptus clients: what changes in your stack

- Governance: Security and Ops become first‑class co‑authors of the agent’s rulebook. Promotion to “hard” status requires sign‑off.

- Metrics: Track `success_rate`, `HAC_reject_rate`, `rule_utilization@k`, and MTTR for failures.

- ROI story: Fewer production rollbacks, fewer accidental escalations, and cheaper iteration versus RL‑heavy approaches. The payback is in risk‑adjusted velocity.
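Two of these metrics fall straight out of the audit log. The sketch below assumes each log record is a dict with the `outcome`, `HAC_result`, and `rules_applied` fields from the audit tuple, and that the string values `"success"` and `"rejected"` mark the relevant cases; those values are illustrative, not prescribed. `rule_utilization@k` and MTTR are left out because their exact definitions are not given here, though the per‑rule hit counts are a natural input for the former.

```python
from collections import Counter


def summarize(log):
    """Compute success_rate, HAC_reject_rate, and per-rule hit counts from audit records."""
    n = len(log)
    if n == 0:
        return {"success_rate": 0.0, "HAC_reject_rate": 0.0, "rule_hits": Counter()}
    successes = sum(1 for r in log if r["outcome"] == "success")
    rejects = sum(1 for r in log if r["HAC_result"] == "rejected")
    # Count how often each rule was recorded as applied across all steps.
    hits = Counter(rule for r in log for rule in r["rules_applied"])
    return {
        "success_rate": successes / n,
        "HAC_reject_rate": rejects / n,
        "rule_hits": hits,
    }
```

A rising `HAC_reject_rate` alongside a falling `success_rate` is the over‑constraint symptom to watch for, and `rule_hits` shows which rules are candidates for promotion or retirement.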

What we still don’t know (and should test next)

- Multi‑agent spillovers. Can several agents share a graph‑structured MPM without deadlocks or brittle priority rules?

- Multimodal grounding. Pair symbolic rules with embeddings for screenshots/logos to harden UI agents against layout drift.

- Automatic rule hygiene. Lightweight SAT‑style checks to catch contradictory constraints before they reach HAC.

Bottom line: MPR is less about clever prompting and more about operationalizing institutional knowledge. If your agent already follows a runbook in wiki pages, move that runbook into machine‑readable rules and enforce them at the action boundary. You’ll get safer behavior today and cheaper improvements tomorrow.

Cognaptus: Automate the Present, Incubate the Future