TL;DR

A new synthetic benchmark (INABHYD) tests inductive and abductive reasoning under Occam’s Razor. LLMs handle toy cases but falter as ontologies deepen or when multiple hypotheses are needed. Even when models “explain” observations, they often pick needlessly complex or trivial hypotheses—precisely the opposite of what scientific discovery and root-cause analysis require.

The Big Idea

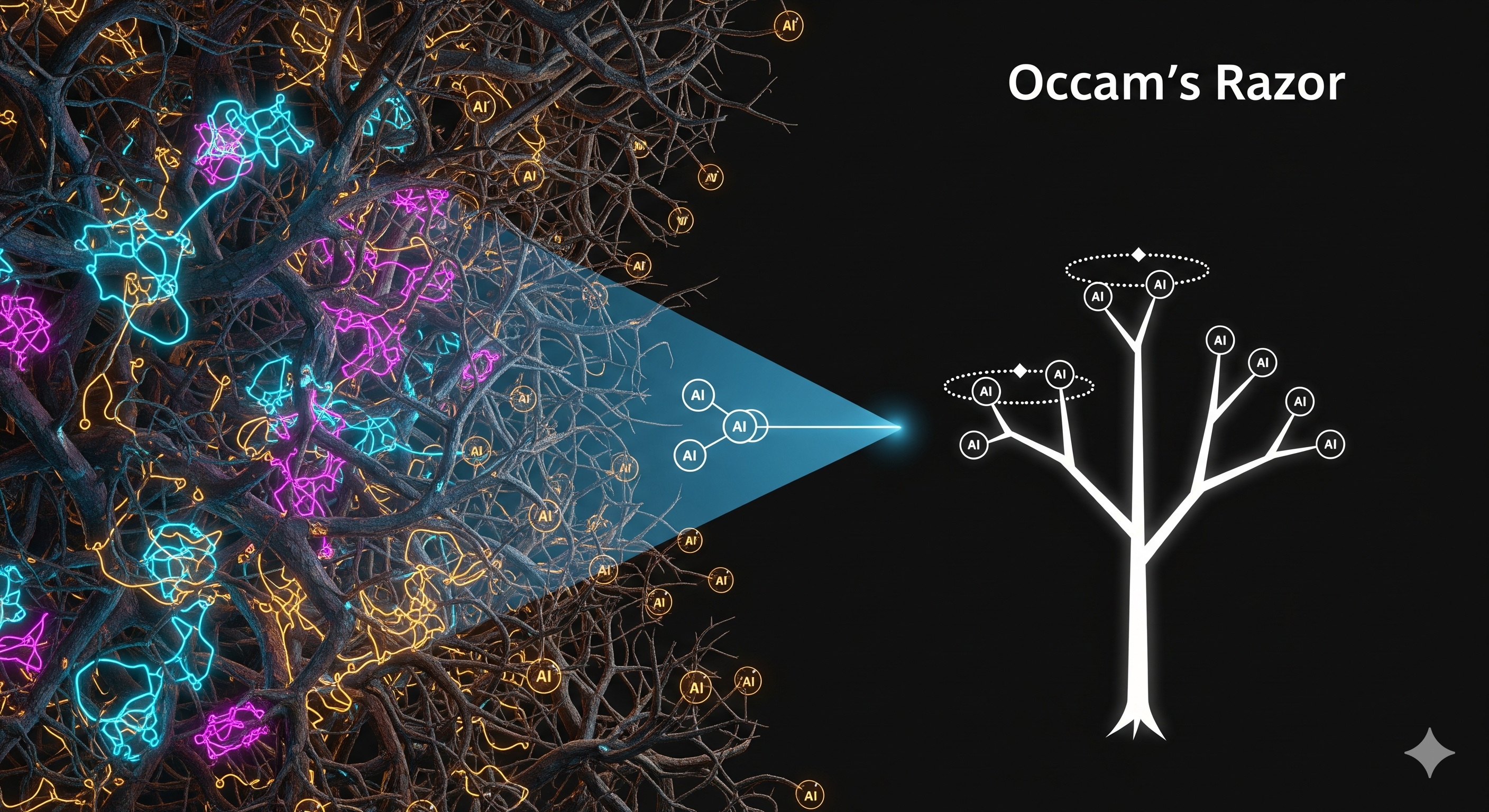

Most reasoning work on LLMs obsesses over deduction (step-by-step proofs). But the real world demands induction (generalize rules) and abduction (best explanation). The paper introduces INABHYD, a programmable benchmark that builds fictional ontology trees (concepts, properties, subtype links) and hides some axioms. The model sees an incomplete world + observations, and must propose hypotheses that both explain all observations and do so parsimoniously (Occam’s Razor). The authors score:

- Weak accuracy – do hypotheses explain observations?

- Strong accuracy – do they match ground truth axioms exactly?

- Quality – a parsimony metric rewarding fewer, broadly-explanatory hypotheses and penalizing redundancies.

Why this matters: In product settings—fraud triage, medical pre-diagnosis, SRE incident analysis—we don’t just want any explanation; we want the simplest, reusable rule that scales across cases. That is the difference between anecdote-fitting and insight.

What They Actually Built

- Synthetic worlds: First‑order‑logic ontology trees with tunable height (complexity). Nodes have members and properties; edges are subtype relations. Some axioms are hidden (the target hypotheses). Observations are generated so that a concise explanation exists.

- Three sub‑tasks: (a) infer a property rule (e.g., All X are cute), (b) infer a membership (e.g., Amy is an X), (c) infer a subtype (e.g., All X are Y).

- Models tested: GPT‑4o, Llama‑3‑70B, Gemma‑3‑27B, DeepSeek‑V3, and DeepSeek‑R1‑Distill‑Llama‑70B (RLVR‑style).

Results in Plain English

When the world is shallow (height‑1) and there’s only one hidden axiom to find, models do fine. Complexify the world or require multiple hypotheses, and performance drops sharply—especially on strong accuracy and quality. In‑context examples help a bit (more when in‑distribution); RLVR‑trained/distilled models help more, largely by verifying their own guesses post‑hoc. Still, even the best models often miss the simplest explanation.

One‑Screen Summary

| Scenario | What changes | What we see |

|---|---|---|

| Single hypothesis, shallow ontology | Low complexity | High weak & strong accuracy across models |

| Multiple hypotheses | Coupled search space | Weak ok, but quality & strong collapse—models overfit with extra or trivial rules |

| Deeper ontologies (height 3–4) | Exponential axioms | Navigation failure: wrong directions, redundant rules |

| 8‑shot in‑distribution demos | CoT + proofs as examples | Small lift in strong & quality; models learn to climb the tree better |

| RLVR‑style model (R1‑Distill) | Verifiable‑reward training | ~10–20% gain; explicit self‑verification prunes some bad hypotheses—but not all |

Interpretation: Today’s LLMs are explainers of convenience: they can rationalize observations but don’t reliably pick the most general, minimal hypothesis set. That’s a ceiling for discovery workflows.

Where Models Go Wrong (Actionable Failure Modes)

- Wrong direction: Swap subtype arrows (e.g., infer All mammals are cats).

- Ontology myopia: Propose both a general rule and a redundant subtype rule (needless duplication).

- Trivial echo: Reuse observations as hypotheses (explains nothing beyond itself).

- Hallucinated entities: Import concepts/properties seen in demonstrations but absent in the test world.

- Type confusion: Mix up members (Amy) and concepts (Wumpus).

Design note: These errors scream for structure‑aware decoding and proof‑checking during generation rather than only after.

Why Occam’s Razor as a Metric Is a Big Deal

Most “reasoning” leaderboards reward getting some explanation. This paper’s quality metric aligns with how scientists and analysts actually work: prefer the shortest theory that explains the most. A model picking five niche rules where one general rule suffices is a maintenance nightmare in production: more rules to audit, update, and misapply. By measuring parsimony, INABHYD shifts incentives from justifying to generalizing.

What Builders Can Do Now (Practical Playbook)

- Structure the search space: Constrain decoding to ontology‑consistent forms; prefer higher‑level rules before leaf‑level memoranda.

- Train for parsimony: Use verifiable rewards that explicitly score fewer, higher‑coverage rules (an Occam‑aware RLVR).

- Self‑verification loops: After proposing hypotheses, require the model to re‑derive all observations and count hypothesis reuse; reject low‑coverage proposals.

- Curriculum prompting: Provide in‑distribution exemplars that illustrate merging two specific rules into one general rule.

- Typed memory: Keep a lightweight symbol table for concepts vs. members, cutting type‑confusion errors.

Implications for Business Workflows

- Diagnostics (SRE, fraud, healthcare): Favor systems that surface one generalized root cause vs. several case‑specific reasons; measure parsimony explicitly.

- Policy & governance: When LLMs generate “policies,” test for redundancy and coverage; fewer, broader rules are easier to govern.

- Agent architectures: Put a Verifier‑Agent in the loop that scores minimality and rejects explanations that don’t compress the observation set.

A Fair Critique

The worlds are first‑order logic (FOL) and synthetic. That’s a feature (clean control) and a limitation (real data is messier). But as a unit test for induction/abduction under parsimony, INABHYD is the clearest we’ve seen—and it exposes the exact cracks that show up later in production rule systems. Future work should push to higher‑order logic, richer natural language, and end‑to‑end Occam‑aware training.

Bottom Line

LLMs can guess an explanation, not reliably the simplest one. If your product depends on finding general rules—from pricing heuristics to incident RCA—you must optimize for parsimony, not just plausibility.

Cognaptus: Automate the Present, Incubate the Future