TL;DR: L‑MARS replaces single‑pass RAG with a judge‑in‑the‑loop multi‑agent workflow that iteratively searches, checks sufficiency (jurisdiction, date, authority), and only then answers. On the 200‑question LegalSearchQA benchmark of current‑year questions, it reports accuracy of 0.96 to 0.98 versus 0.86 to 0.89 for pure LLMs, at the cost of latency (roughly 14 to 56 seconds per answer instead of under 4). For regulated industries, the architecture, not just the model, does the heavy lifting.

What’s actually new here

Most legal QA failures aren’t from weak language skills—they’re from missing or outdated authority. L‑MARS tackles this with three design commitments:

- Agentic, targeted search across heterogeneous sources (web via Serper, local RAG, CourtListener case law) rather than one‑shot retrieval.

- A Judge Agent with a stop rule that refuses to answer until evidence is sufficient (authority, jurisdiction, temporal validity, contradiction scan).

- Dual operating modes: a low‑latency Simple Mode and a more accurate Multi‑Turn Mode with query decomposition and sufficiency loops.

This is less a model trick and more an operational blueprint for high‑stakes QA.

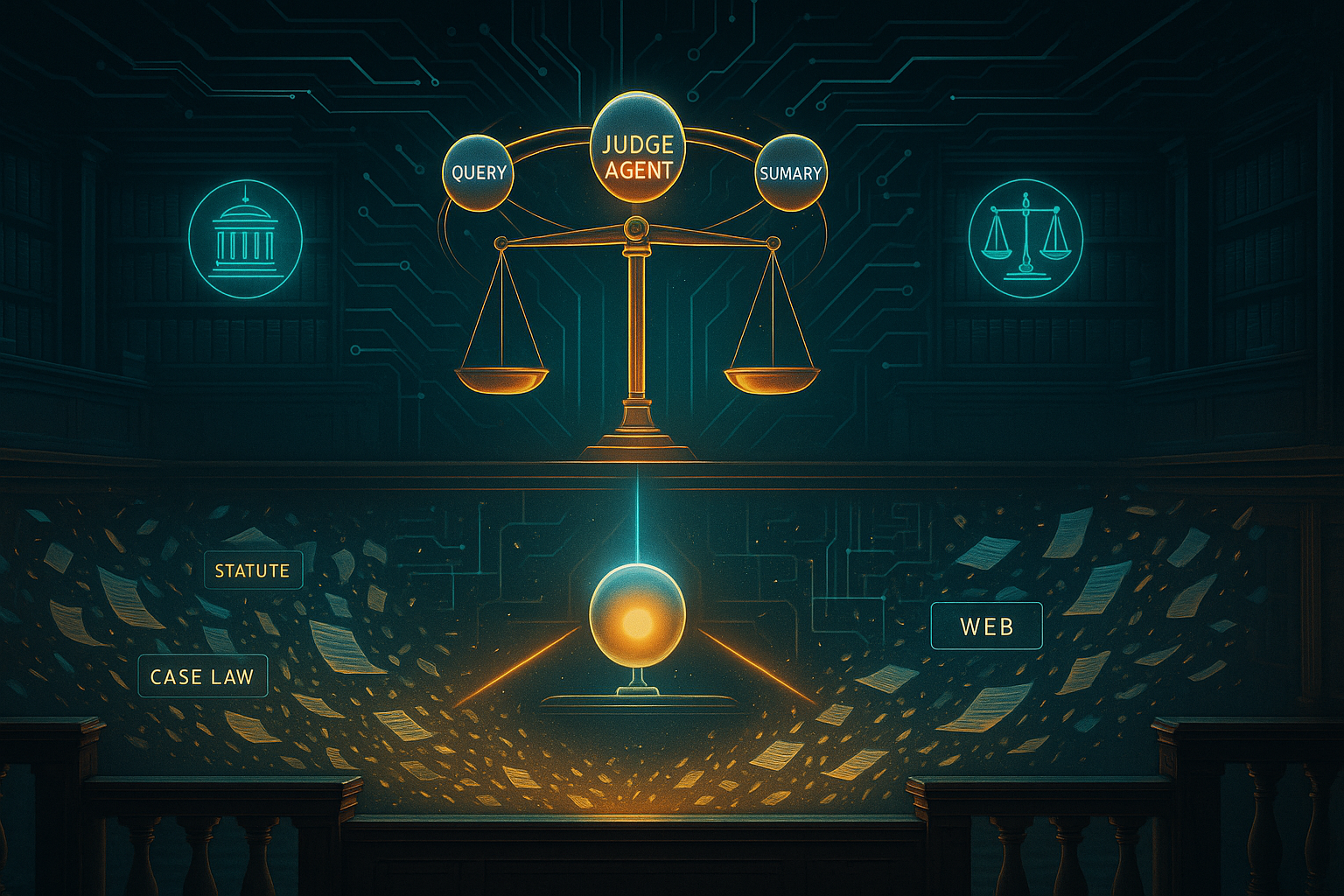

The workflow at a glance

| Role/Step | What it does | Failure it prevents |
|---|---|---|
| Query Agent | Parses the user prompt into structure (issue type, entities, time window, jurisdiction); generates search intents. | Ambiguous scope, wrong jurisdiction. |
| Search Agent | Runs agentic search: quick snippet triage → deep content extraction (top‑k), plus local BM25 and CourtListener case retrieval. | Shallow snippets, missing primary law. |
| Judge Agent | Deterministic (T=0) checklist: authority quality (gov/court/edu), date recency, jurisdiction match, contradiction scan; decides SUFFICIENT/INSUFFICIENT and proposes refinements. | Answering on flimsy, outdated, or off‑jurisdiction sources. |
| Summary Agent | Synthesizes the final answer after sufficiency is met; cites evidence. | Hallucinated conclusions, weak grounding. |
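To make the Judge row concrete, here is a minimal rule-based sketch of the four checks. It is a stand-in for illustration only: the Judge described above is an LLM run at T=0, and the `Source` fields, the `stance` label, the trusted-domain lists, and the 365-day recency cutoff are all assumptions of mine, not details from L‑MARS.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple
from urllib.parse import urlparse


@dataclass
class Source:
    url: str
    published: Optional[date]      # None when the page carries no date
    jurisdiction: Optional[str]    # e.g. "US"; None when unknown
    stance: str                    # hypothetical label: "supports" or "contradicts"


# Assumption: authority is approximated by domain suffix or a known host.
TRUSTED_SUFFIXES = (".gov", ".edu")
TRUSTED_HOSTS = ("courtlistener.com",)


def is_authoritative(url: str) -> bool:
    host = urlparse(url).hostname or ""
    if host.endswith(TRUSTED_SUFFIXES):
        return True
    return any(host == h or host.endswith("." + h) for h in TRUSTED_HOSTS)


def judge(sources: List[Source], jurisdiction: str, today: date,
          max_age_days: int = 365) -> Tuple[str, List[str]]:
    """Return (verdict, reasons); reasons is empty when evidence is sufficient."""
    reasons: List[str] = []
    good = [s for s in sources if is_authoritative(s.url)]
    if not good:
        reasons.append("no authoritative source")
    if not any(s.published is not None
               and (today - s.published).days <= max_age_days for s in good):
        reasons.append("no recent authoritative source")
    if not any(s.jurisdiction == jurisdiction for s in good):
        reasons.append("no authoritative source for jurisdiction")
    if len({s.stance for s in good}) > 1:
        reasons.append("authoritative sources contradict each other")
    return ("SUFFICIENT" if not reasons else "INSUFFICIENT", reasons)
```

The returned reasons double as refinement hints: each one names what the next search round should go looking for.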

Operating modes

| Dimension | Simple Mode | Multi‑Turn Mode |
|---|---|---|
| Turn structure | Single pass | Iterative search → judge → refine loops |
| Latency | Low | Higher |
| Recall & grounding | Good | Best (keeps searching until sufficient) |
| When to use | Triage, FAQs | High‑stakes questions, changing regs |
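The difference between the two modes is easiest to see as control flow. The sketch below assumes four caller-supplied functions (`search`, `judge`, `refine`, `summarize`) and an iteration cap; those names, and the choice to skip the judge entirely in Simple Mode, are my assumptions rather than the L‑MARS interface.

```python
from typing import Callable, List, Tuple


def answer(question: str,
           search: Callable[[str], List[str]],
           judge: Callable[[str, List[str]], bool],
           refine: Callable[[str, List[str]], List[str]],
           summarize: Callable[[str, List[str]], str],
           mode: str = "simple",
           max_iters: int = 3) -> Tuple[str, int]:
    """Return (answer, number of search turns used)."""
    if max_iters < 1:
        raise ValueError("max_iters must be at least 1")
    queries = [question]
    evidence: List[str] = []

    if mode == "simple":
        # Single pass: search once, then summarize.
        for q in queries:
            evidence.extend(search(q))
        return summarize(question, evidence), 1

    # Multi-turn: search -> judge -> refine until sufficient or capped.
    for turn in range(1, max_iters + 1):
        for q in queries:
            evidence.extend(search(q))
        if judge(question, evidence):
            break
        queries = refine(question, evidence)
    return summarize(question, evidence), turn
```

Returning the turn count alongside the answer makes it cheap to monitor how often the loop hits its cap in production.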

Evidence sources that matter

- Web (Serper): fast exploration via titles/snippets, then enhanced extraction of the best pages (snippet‑anchored windows to keep citations tight).

- Local RAG (BM25): rolling index of your private policy memos, playbooks, or annotated statutes.

- CourtListener API: primary case law for authoritative grounding when blogs and secondary summaries aren’t enough.

Design choice I love: the snippet‑anchored extraction window that pulls just enough surrounding context to cite precisely, without bloating tokens.
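A snippet‑anchored window can be sketched in a few lines. This version assumes the search snippet appears verbatim in the fetched page text and uses a fixed character radius; both are simplifications of mine, and a real extractor would also need whitespace normalization and some tolerance for truncated snippets.

```python
from typing import Optional


def snippet_window(page_text: str, snippet: str, radius: int = 200) -> Optional[str]:
    """Return the snippet plus up to `radius` characters of context on each side.

    Returns None when the snippet is not found, so the caller can fall back
    instead of citing an unrelated passage.
    """
    idx = page_text.find(snippet)
    if idx == -1:
        return None
    start = max(0, idx - radius)
    end = min(len(page_text), idx + len(snippet) + radius)
    return page_text[start:end]
```

Returning `None` on a miss is deliberate: a window that does not actually contain the anchor is worse than no window, because the citation would point at the wrong text.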

Do the numbers justify the complexity?

Reported results on LegalSearchQA (200 up‑to‑date questions):

| Method | Accuracy | Uncertainty (U‑Score ↓) | LLM‑as‑Judge | Avg. Latency (s) |
|---|---|---|---|---|
| GPT‑4o (pure) | 0.89 | 0.55 | Moderate | 1.69 |
| Claude‑4‑Sonnet (pure) | 0.88 | 0.62 | Moderate | 3.84 |
| Gemini‑2.5‑Flash (pure) | 0.86 | 0.58 | Moderate | 1.87 |
| L‑MARS (Simple) | 0.96 | 0.42 | High | 13.62 |
| L‑MARS (Multi‑Turn) | 0.98 | 0.39 | High | 55.67 |

Interpreting U‑Score: it penalizes hedging (“may”), temporal vagueness (no dates), poor citation authority, weak jurisdiction specificity, and indecision. Lower is better, so the multi‑turn loop isn’t just more correct—it’s also more decisive with better citations.

Trade‑off: Multi‑turn mode is roughly 14–33× slower than the pure LLM baselines (55.67 s versus 1.69–3.84 s). That’s acceptable for counsel‑facing tools and batch memo generation; for chat triage, start Simple and escalate when needed.
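The slowdown factors follow directly from the latency column of the results table. The short calculation below just divides each L‑MARS mode's average latency by each baseline's; the dictionary names are mine.

```python
# Average latencies in seconds, copied from the results table.
BASELINES = {"GPT-4o": 1.69, "Claude-4-Sonnet": 3.84, "Gemini-2.5-Flash": 1.87}
LMARS = {"Simple": 13.62, "Multi-Turn": 55.67}


def slowdowns(mode: str) -> dict:
    """Slowdown of an L-MARS mode relative to each pure-LLM baseline."""
    return {name: round(LMARS[mode] / base, 1) for name, base in BASELINES.items()}


print(slowdowns("Simple"))      # {'GPT-4o': 8.1, 'Claude-4-Sonnet': 3.5, 'Gemini-2.5-Flash': 7.3}
print(slowdowns("Multi-Turn"))  # {'GPT-4o': 32.9, 'Claude-4-Sonnet': 14.5, 'Gemini-2.5-Flash': 29.8}
```

Simple Mode costs about 4 to 8 times baseline latency, which is why it works as the default tier with Multi‑Turn held back for escalations.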

Why this matters beyond law

If your domain changes weekly (tax, benefits, cloud pricing, compliance, HR policy), static fine‑tunes drift and single‑pass RAG misses edge cases. A judge‑gated search loop generalizes:

- Healthcare ops: coverage criteria and prior auth rules change frequently; judge‑gated “sufficiency” avoids outdated guidance.

- FinServ compliance: product disclosure rules and sanctions lists; the judge enforces jurisdiction & date checks before answer.

- Procurement & InfoSec: policy exceptions and vendor clauses; contradiction scans catch conflicting sources.

Enterprise implementation notes (what to copy, what to adapt)

Copy verbatim

- Sufficiency checklists (authority, date, jurisdiction, contradictions) with a temperature‑0 Judge.

- Two‑tier search (fast snippets → deep extraction) to keep latency predictable.

- Snippet‑anchored windows to improve citation precision.

Adapt to your stack

- Replace Serper with your search infra; swap BM25 for your vector store but keep a BM25 or keyword fallback for legalese and exact cites.

- Add data‑residency lanes (EU/US) so the Judge can reject sources on compliance grounds.

- Log judge rationales and stopping decisions for audit trails.
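For the audit‑trail point, one workable shape is a JSON line per Judge decision. The record layout below is hypothetical (field names, the `question_id` key, and the injectable clock are my choices); the only parts taken from the workflow are the SUFFICIENT/INSUFFICIENT verdict and the idea of storing the rationale with it.

```python
import json
from datetime import datetime, timezone
from typing import List, Optional

VERDICTS = ("SUFFICIENT", "INSUFFICIENT")


def judge_log_record(question_id: str, iteration: int, verdict: str,
                     rationale: str, source_urls: List[str],
                     now: Optional[datetime] = None) -> str:
    """Serialize one Judge decision as a single JSON line for an append-only log."""
    if verdict not in VERDICTS:
        raise ValueError(f"unknown verdict: {verdict!r}")
    now = now or datetime.now(timezone.utc)
    record = {
        "timestamp": now.isoformat(),
        "question_id": question_id,
        "iteration": iteration,
        "verdict": verdict,
        "rationale": rationale,
        "source_urls": source_urls,
    }
    # sort_keys keeps lines stable, which makes diffs and audits easier.
    return json.dumps(record, sort_keys=True)
```

Logging every iteration, not just the final one, is what lets a reviewer reconstruct why the loop stopped when it did.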

Pitfalls

- Over‑conservative judges can loop too long. Use iteration caps and leniency ramps (be stricter in early loops, looser later) to stop on “good enough.”

- Snippet drift: ensure your deep‑extraction window really surrounds the snippet anchor, not just a fuzzy match.
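The iteration cap and leniency ramp from the first pitfall can be expressed as a threshold that relaxes linearly across loops. Note the assumptions: the Judge described here emits a binary verdict, so the numeric sufficiency `score`, the 0.9 starting bar, and the 0.6 floor are all hypothetical values chosen for illustration.

```python
def required_score(iteration: int, max_iters: int,
                   start: float = 0.9, floor: float = 0.6) -> float:
    """Sufficiency bar for a given loop: strict at first, relaxing toward `floor`."""
    if max_iters < 1 or not 1 <= iteration <= max_iters:
        raise ValueError("iteration must be in 1..max_iters")
    if max_iters == 1:
        return start
    frac = (iteration - 1) / (max_iters - 1)   # 0.0 on the first loop, 1.0 on the last
    return start - frac * (start - floor)


def should_stop(score: float, iteration: int, max_iters: int) -> bool:
    """Stop when the evidence clears the current bar or the cap is reached."""
    return iteration >= max_iters or score >= required_score(iteration, max_iters)
```

With a cap of 4, evidence scoring 0.75 keeps the loop going on the first pass but is accepted by the third, which is the "good enough" behavior the ramp is meant to produce.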

Build‑vs‑Buy quick guide

| If this is you… | Do this |
|---|---|
| You already have a legal content license & a case‑law API | Plug L‑MARS‑style Judge + agentic search into your stack; start with Simple Mode in prod, enable Multi‑Turn for escalations. |
| You’re RAG‑first with vector search only | Add a keyword/BM25 lane and snippet‑anchored extraction; train your Judge to down‑rank forums for primary‑law queries. |
| You operate in multiple jurisdictions | Encode jurisdiction in the Query Agent schema; blocklist irrelevant TLDs and prefer gov/edu/court domains. |
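For the multi‑jurisdiction row, a rough sketch of what "encode jurisdiction in the schema" and "blocklist TLDs, prefer gov/edu" could look like. The `LegalQuery` fields mirror the Query Agent description (issue type, entities, time window, jurisdiction), but the class itself, the blocklist contents, and the suffix-based preference rule are hypothetical and far cruder than a production filter would need to be.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple
from urllib.parse import urlparse


@dataclass(frozen=True)
class LegalQuery:
    issue_type: str
    entities: Tuple[str, ...]
    time_window: Optional[str]
    jurisdiction: str            # e.g. "US"


PREFERRED_SUFFIXES = (".gov", ".edu")
# Hypothetical blocklist: TLDs treated as off-jurisdiction for a given query.
BLOCKED_TLDS = {"US": (".co.uk", ".ca", ".au")}


def _host(url: str) -> str:
    return urlparse(url).hostname or ""


def rank_urls(query: LegalQuery, urls: List[str]) -> List[str]:
    """Drop off-jurisdiction TLDs, then list preferred domains first."""
    blocked = BLOCKED_TLDS.get(query.jurisdiction, ())
    kept = [u for u in urls if not _host(u).endswith(blocked)]
    # sorted() is stable, so the original order survives within each group.
    return sorted(kept, key=lambda u: not _host(u).endswith(PREFERRED_SUFFIXES))
```

Keeping jurisdiction as a typed field rather than free text in the prompt means the Search and Judge steps can both read it without re-parsing the question.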

What I’m still watching

- Trajectory‑aware judges that consider prior iterations (to avoid refetching the same near‑duplicates).

- Cross‑jurisdiction scaling (EU + US + APAC) without slowing to a crawl.

- Latency budgets: dynamic switching between modes based on risk/urgency.

Bottom line

L‑MARS shows that architecture beats vibes: a judge‑gated, agentic‑search loop can systematically reduce hallucinations where it matters. If you answer questions where being “roughly right” is legally wrong, this blueprint deserves a pilot.

Cognaptus: Automate the Present, Incubate the Future