The pitch: a unified plug—and a tougher test

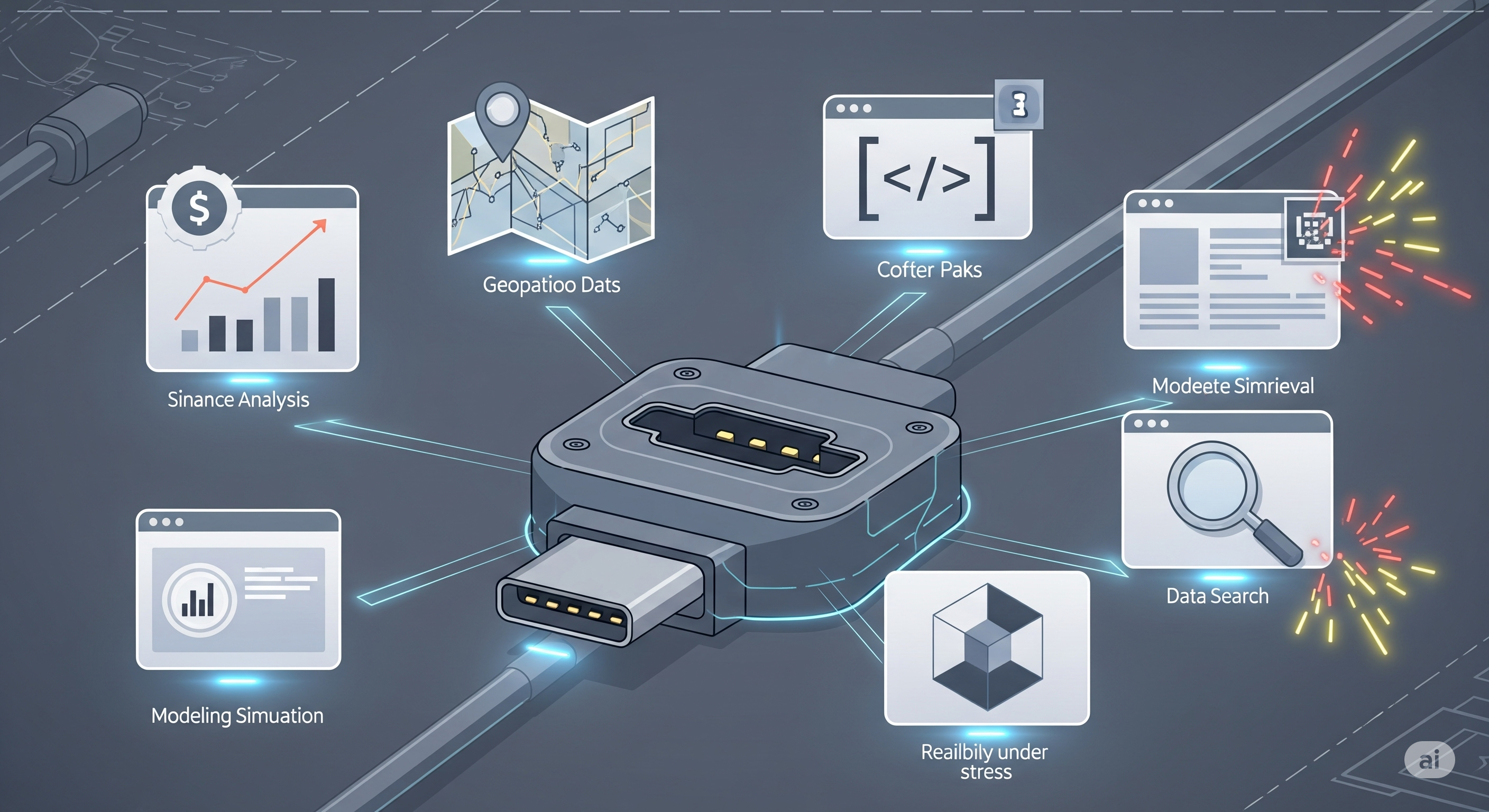

The Model Context Protocol (MCP) is often described as the “USB‑C of AI tools”: one standardized way for agents to talk to external services (maps, finance data, browsers, repos, etc.). MCP‑Universe, a new benchmark from Salesforce AI Research, finally stress‑tests that idea with real MCP servers rather than toy mocks. It scores agents on execution outcomes, not multiple‑choice guesswork, which is exactly the evidence enterprises need before they trust automation.

Why this benchmark is different

Most agent benchmarks check whether a model can say the right thing. MCP‑Universe checks whether it can actually do the right thing across six domains (navigation, repo ops, finance, 3D, browser automation, web search) wired to 10+ genuine MCP servers. Three things make this consequential:

- Execution‑based scoring – Tasks pass only if the tool calls produce the correct end state or verifiable output.

- Long‑horizon pressure – Multi‑step tasks blow up context windows fast, making summarization, memory, and state management first‑class problems.

- Unknown‑tool realism – Agents must infer APIs they haven’t memorized, read capability schemas, and adapt on the fly, just as in real deployments.
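To make execution‑based scoring concrete, here is a minimal Python sketch, assuming a harness that can read the end state back from the MCP server once the run finishes. The `Task` and `grade` names and the issue‑tracker state shape are hypothetical illustrations, not MCP‑Universe's actual evaluator API.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict

# Hypothetical end-state snapshot read back from the real MCP server after
# the agent finishes (here: the issues in a repository).
State = Dict[str, Any]


@dataclass
class Task:
    task_id: str
    instruction: str
    # Success predicate over the observed end state.
    success: Callable[[State], bool]


def grade(task: Task, end_state: State) -> bool:
    """Pass only if the observed end state satisfies the task predicate.

    The agent's final message is never consulted, so a run that claims
    "done" but leaves the wrong state still fails.
    """
    return task.success(end_state)


task = Task(
    task_id="repo-001",
    instruction="Open an issue titled 'Fix login bug' with the label 'bug'.",
    success=lambda s: any(
        issue["title"] == "Fix login bug" and "bug" in issue["labels"]
        for issue in s.get("issues", [])
    ),
)

correct = {"issues": [{"title": "Fix login bug", "labels": ["bug"]}]}
missing_label = {"issues": [{"title": "Fix login bug", "labels": []}]}
print(grade(task, correct))        # True
print(grade(task, missing_label))  # False
```

The predicate is the whole contract: anything the agent says along the way is irrelevant unless the state it leaves behind satisfies it.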

The uncomfortable results (and why they matter)

Headline frontier models that top chat benchmarks stumble here. Even strong closed‑source models and well‑known enterprise agents trail a carefully prompted ReAct baseline more often than many expect. Translation for operators: chat prowess ≠ reliable tool use. If your roadmap assumes “just add an agent,” MCP‑Universe is the cold shower.

What actually breaks

Below are the recurring failure modes we saw echoed across domains—and concrete mitigations we recommend for production.

| Failure mode | What it looks like in MCP tasks | Why it happens | Ship‑ready mitigation |
|---|---|---|---|
| Instruction drift over steps | Early sub‑goals satisfied, later steps ignored or overwritten | Context bloat; weak step‑state discipline | Enforce step contracts; auto‑append state ledgers; restrict message length with rolling summaries & structured memory |
| Tool misuse / wrong arguments | Calling `search` instead of `issue.list` in repo ops; malformed query bodies | Shallow schema reading; hallucinated fields | Pre‑flight tool linting; schema‑aware planners; penalize invalid calls; tool‑specific unit tests in eval harness |
| Ground‑truth mismatch | Finance tasks graded wrong because prices moved | Evaluators compare to stale snapshots | Use dynamic evaluators (query real‑time truth) + cache the exact sources for audit |
| Context runaway | Each tool result gets pasted back verbatim; input tokens explode | Naïve transcript accumulation | Response distillation between steps; enforce extract‑only retention; retrieval‑augmented state instead of transcript replay |
| Exploration paralysis | The agent keeps “learning the tool” instead of finishing | Uncertainty handling missing | Phase‑gated plans (explore → exploit); hard cap exploration steps; temperature decay; action‑value heuristics |
| Framework illusions | Fancy IDE‑like agents underperform simple ReAct | Orchestration overhead; prompt bloat | Keep it lean: small, typed tools; minimal prompt boilerplate; metric‑driven ablations before adding layers |
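The “Exploration paralysis” mitigation can be sketched as a small gate, assuming the planner labels each proposed call as either exploratory (listing tools, reading schemas, probing) or task‑advancing. `PhaseGate` and `temperature` are hypothetical helpers, and the cap and decay values are illustrative defaults, not tuned numbers.

```python
from dataclasses import dataclass


@dataclass
class PhaseGate:
    """Hypothetical explore -> exploit gate with a hard exploration cap."""

    max_explore_steps: int = 3
    explore_steps: int = 0
    phase: str = "explore"

    def allow(self, kind: str) -> bool:
        """Decide whether a proposed call of this kind may run.

        kind is "explore" (list tools, read schemas, dry-run probes)
        or "act" (a call that advances the task).
        """
        if kind == "act":
            # The first task-advancing call closes the exploration phase.
            self.phase = "exploit"
            return True
        if self.phase == "explore" and self.explore_steps < self.max_explore_steps:
            self.explore_steps += 1
            return True
        # Budget spent or already exploiting: force the planner to act.
        self.phase = "exploit"
        return False


def temperature(step: int, t0: float = 0.8, decay: float = 0.7, floor: float = 0.1) -> float:
    """Exponential temperature decay so later steps sample more greedily."""
    return max(floor, t0 * decay**step)


gate = PhaseGate(max_explore_steps=2)
print([gate.allow("explore") for _ in range(3)])  # [True, True, False]
print(gate.phase)                                 # exploit
```

When `allow` returns `False`, the harness would feed back a short message such as "exploration budget spent; choose an action" rather than silently dropping the call.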

What businesses should change this quarter

1) Instrument agents like you instrument microservices. Log every tool call with its arguments, latency, and validator result, and tie each pass or fail to a task ID. Ship with an operability dashboard (p50/p95 step counts, invalid‑call rate, token burn per task, evaluator disputes).

2) Move from transcript‑centric to state‑centric planning. Treat intermediate results as structured state (typed memory objects) rather than ever‑longer messages. Summarize aggressively; persist only fields needed for the next decision.

3) Curate a minimal, high‑leverage tool slate. Performance drops as tool menus sprawl. Start with 4–6 critical MCP servers; write tool primers (one‑page how‑to + examples) that agents can read at step 0.

4) Adopt execution‑based CI for agents. Fork MCP‑Universe tasks into your repo with your data. Run them on every model/prompt change. Fail the build on regression in success rate, invalid‑call rate, or token‑per‑pass.

5) Add schema‑aware planners and pre‑flight checks. Don’t let the agent hit production tools blindly. Pre‑validate arguments against the MCP tool’s JSON schema; block calls that won’t parse; nudge the planner with auto‑generated examples.
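Recommendation 1 above can start as small as a per‑call log record plus an aggregation pass. This is a minimal sketch: the `ToolCallLog` fields and the `dashboard` metrics are hypothetical choices that mirror the list in the text, and percentiles use the simple nearest‑rank method.

```python
import math
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class ToolCallLog:
    task_id: str
    tool: str
    args: Dict[str, Any]
    latency_ms: float
    valid: bool  # did the call pass schema / pre-flight validation?
    tokens: int  # prompt + completion tokens spent on this step


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile (no interpolation)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def dashboard(logs: List[ToolCallLog], passed: Dict[str, bool]) -> Dict[str, float]:
    """Aggregate operability metrics; passed maps task_id -> validator verdict."""
    steps: Dict[str, int] = {}
    tokens: Dict[str, int] = {}
    for log in logs:
        steps[log.task_id] = steps.get(log.task_id, 0) + 1
        tokens[log.task_id] = tokens.get(log.task_id, 0) + log.tokens
    step_counts = list(steps.values())
    return {
        "p50_steps": percentile(step_counts, 50),
        "p95_steps": percentile(step_counts, 95),
        "p95_latency_ms": percentile([log.latency_ms for log in logs], 95),
        "invalid_call_rate": sum(not log.valid for log in logs) / len(logs),
        "tokens_per_task": sum(tokens.values()) / len(tokens),
        "success_rate": sum(passed[t] for t in steps) / len(steps),
    }


# Illustrative log entries for two hypothetical tasks, "A" and "B".
logs = [
    ToolCallLog("A", "search", {"q": "x"}, 120.0, True, 500),
    ToolCallLog("A", "issue.list", {}, 80.0, True, 300),
    ToolCallLog("B", "search", {}, 90.0, False, 400),
    ToolCallLog("B", "search", {"q": "y"}, 110.0, True, 400),
    ToolCallLog("B", "issue.list", {}, 70.0, True, 200),
    ToolCallLog("B", "issue.create", {"title": "t"}, 150.0, True, 600),
]
metrics = dashboard(logs, passed={"A": True, "B": False})
print(metrics)
```

In practice the log records would be emitted by the harness around every MCP call and shipped to whatever metrics store already backs your service dashboards.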
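Recommendation 2 above, sketched under assumptions: a hypothetical repo‑ops task whose memory is a typed object, a `distill_issue_list` step that keeps only the fields the next decision needs from a bulky tool result, and a prompt rendered from that state instead of the replayed transcript. All names and the tool‑result shape are illustrative.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class RepoTaskState:
    """Hypothetical typed memory for a repo-ops task."""

    goal: str
    open_issue_ids: List[int] = field(default_factory=list)
    target_issue: Optional[int] = None
    completed_steps: List[str] = field(default_factory=list)


def distill_issue_list(state: RepoTaskState, raw: List[Dict[str, Any]]) -> RepoTaskState:
    """Extract-only retention: keep issue numbers, drop bodies and comments."""
    state.open_issue_ids = [i["number"] for i in raw if i.get("state") == "open"]
    state.completed_steps.append("issue.list")
    return state


def render_prompt(state: RepoTaskState) -> str:
    """Build the next prompt from compact state, not from the transcript."""
    return (
        f"Goal: {state.goal}\n"
        f"Completed: {', '.join(state.completed_steps) or 'none'}\n"
        f"Open issues: {state.open_issue_ids}\n"
        f"Target issue: {state.target_issue}"
    )


# A bulky, hypothetical tool result: most of it is irrelevant to the next step.
raw_result = [
    {"number": 1, "state": "open", "body": "x" * 5000, "comments": ["..."] * 40},
    {"number": 2, "state": "closed", "body": "resolved"},
    {"number": 7, "state": "open", "body": "z" * 3000},
]
state = distill_issue_list(RepoTaskState(goal="Label stale issues"), raw_result)
print(render_prompt(state))
```

The prompt stays a few lines long no matter how large the raw result was; if a later step needs an issue body, it can fetch it on demand instead of carrying it forward.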
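For recommendation 4 above, the build‑breaking step can be a plain comparison of a candidate run's metrics against a stored baseline. A minimal sketch, assuming each eval run produces a small metrics dictionary; the metric names, the 5% relative tolerance, and the numbers are hypothetical placeholders, not MCP‑Universe results.

```python
from typing import Dict, List

# For each gated metric: True if higher is better, False if lower is better.
GATED_METRICS = {
    "success_rate": True,
    "invalid_call_rate": False,
    "tokens_per_pass": False,
}


def regressions(baseline: Dict[str, float], candidate: Dict[str, float],
                rel_tol: float = 0.05) -> List[str]:
    """Return the metrics that got worse by more than rel_tol of the baseline."""
    failed = []
    for name, higher_is_better in GATED_METRICS.items():
        base, cand = baseline[name], candidate[name]
        worse_by = (base - cand) if higher_is_better else (cand - base)
        if worse_by > rel_tol * abs(base):
            failed.append(name)
    return failed


def ci_exit_code(baseline: Dict[str, float], candidate: Dict[str, float]) -> int:
    """0 if the candidate passes the gate, 1 if any gated metric regressed."""
    failed = regressions(baseline, candidate)
    for name in failed:
        print(f"REGRESSION {name}: {baseline[name]} -> {candidate[name]}")
    return 1 if failed else 0


# Placeholder numbers for illustration only.
baseline = {"success_rate": 0.42, "invalid_call_rate": 0.08, "tokens_per_pass": 31000}
candidate = {"success_rate": 0.44, "invalid_call_rate": 0.12, "tokens_per_pass": 30500}
print(ci_exit_code(baseline, candidate))
```

In a CI job, `sys.exit(ci_exit_code(baseline, candidate))` turns any flagged regression into a failed build; here the higher success rate does not mask the jump in invalid calls.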
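Recommendation 5 in miniature: MCP servers advertise each tool's arguments as a JSON Schema (the tool's `inputSchema`), so a harness can check proposed arguments before any call leaves the agent. The sketch below is a hypothetical, deliberately tiny validator covering only `required`, per‑property `type` and `enum`, and `additionalProperties: false`; a production harness would more likely use a full JSON Schema validator such as the `jsonschema` package. The `issue.list` schema is made up for illustration.

```python
from typing import Any, Dict, List

# JSON Schema type name -> accepted Python types.
_JSON_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def preflight(args: Dict[str, Any], schema: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the call may proceed."""
    problems = []
    props = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            problems.append(f"missing required argument '{name}'")
    for name, value in args.items():
        if name not in props:
            if schema.get("additionalProperties") is False:
                problems.append(f"unknown argument '{name}' (hallucinated field?)")
            continue
        spec = props[name]
        expected = spec.get("type")
        py_type = _JSON_TYPES.get(expected)
        # bool subclasses int in Python, so only accept it where "boolean" is declared.
        is_stray_bool = isinstance(value, bool) and expected != "boolean"
        if py_type is not None and (not isinstance(value, py_type) or is_stray_bool):
            problems.append(f"'{name}' should be {expected}, got {type(value).__name__}")
        elif "enum" in spec and value not in spec["enum"]:
            problems.append(f"'{name}' must be one of {spec['enum']}")
    return problems


# Hypothetical inputSchema for a repo tool called "issue.list".
ISSUE_LIST_SCHEMA = {
    "type": "object",
    "properties": {
        "repo": {"type": "string"},
        "state": {"type": "string", "enum": ["open", "closed", "all"]},
        "limit": {"type": "integer"},
    },
    "required": ["repo"],
    "additionalProperties": False,
}

print(preflight({"repo": "acme/app", "state": "open"}, ISSUE_LIST_SCHEMA))  # []
for problem in preflight({"state": "opened", "limit": "10", "author": "bob"}, ISSUE_LIST_SCHEMA):
    print(problem)
```

A non‑empty result blocks the call; feeding those messages back to the planner is one way to implement an "arg repair only" retry without triggering a full re‑plan.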

A pragmatic eval stack you can copy

- Evaluator mix: (a) format compliance, (b) static content checks for time‑invariant tasks, (c) dynamic evaluators that fetch real‑time ground truth (finance, weather, search).

- Task design: Multi‑step, outcome‑verifiable, with explicit success predicates per domain.

- Metrics: success rate, steps‑to‑success, invalid‑call %, token‑per‑success, evaluator disagreements.

- Guardrails: max exploration iterations; per‑tool cooldowns; auto‑retry with arg repair only (no full re‑plan) to avoid loops.
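Evaluator (c), together with the “cache the exact sources for audit” idea, can be sketched as below. Assumptions: the live data source is injected as a plain function (a hypothetical `fetch_truth`), answers are numeric, and a 1% relative tolerance absorbs small drift between the agent's read and the grader's read; all of these are illustrative choices, not a prescribed design.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class DynamicEvaluator:
    """Grades numeric answers against ground truth fetched at grading time."""

    fetch_truth: Callable[[str], float]  # hypothetical live lookup, e.g. a quote API
    rel_tol: float = 0.01                # tolerated relative drift
    audit_log: List[Dict[str, Any]] = field(default_factory=list)

    def grade(self, task_id: str, key: str, answer: float) -> bool:
        truth = self.fetch_truth(key)
        passed = abs(answer - truth) <= self.rel_tol * abs(truth)
        # Record exactly what was compared, so evaluator disputes can be audited.
        self.audit_log.append({
            "task_id": task_id,
            "key": key,
            "truth": truth,
            "answer": answer,
            "passed": passed,
            "checked_at": time.time(),
        })
        return passed


# Stand-in for a live source; in production this would query real-time data.
fake_live_prices = {"XYZ": 200.0}
evaluator = DynamicEvaluator(fetch_truth=fake_live_prices.__getitem__)
print(evaluator.grade("fin-001", "XYZ", 199.0))  # True: within 1% of 200.0
print(evaluator.grade("fin-002", "XYZ", 190.0))  # False: 5% off
```

Because the truth is fetched when grading rather than frozen at authoring time, a moved price no longer turns a correct answer into a failure, and the audit log preserves what the grader actually saw.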

What’s next for MCP—and for you

MCP‑Universe doesn’t kill the dream of agentic automation; it hardens it. The path forward looks like this: smaller, typed toolkits; stateful planners that compress ruthlessly; schema‑literate reasoning; and eval‑as‑CI. If your 2025 roadmap includes autonomous ops (research, QA, repo hygiene, browser RPA, finance queries), run MCP‑Universe‑style tests now and measure before you scale.

Cognaptus: Automate the Present, Incubate the Future.