What if an LLM could learn not by reading more, but by thinking harder? That’s the radical premise behind Self-Questioning Language Models (SQLM), a framework that transforms large language models from passive learners into active generators of their own training data. No curated datasets. No labeled answers. Just a prompt — and a model that gets smarter by challenging itself.

From Self-Play in Robotics to Reasoning in Language

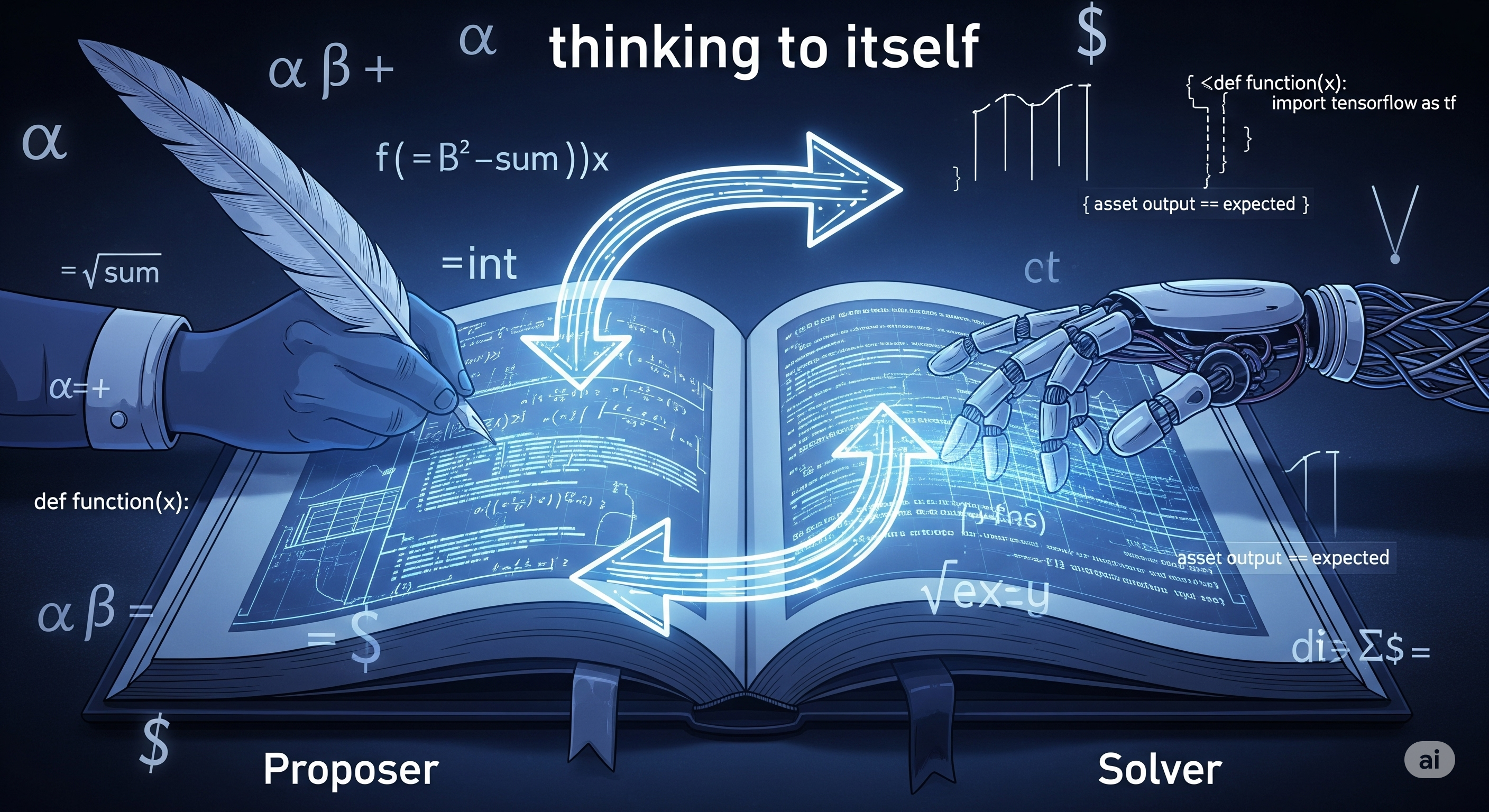

The inspiration for SQLM comes from asymmetric self-play, a technique used in robotics where one agent proposes tasks and another learns to solve them. Here, that paradigm is adapted to LLMs:

- A proposer model generates questions in a given domain (e.g., algebra, coding).

- A solver model attempts to answer them.

Both are trained via reinforcement learning. The twist? They’re the same model, taking turns in different roles.
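The turn-taking above can be sketched as a single function. This is a minimal illustration, not the authors’ training code: `generate`, the `[PROPOSER]` and `[SOLVER]` role tags, and `toy_model` are hypothetical names, and the reinforcement learning update that would follow each round is omitted.

```python
from typing import Callable, List, Tuple

# Hypothetical interface: any function mapping a prompt string to a completion.
Generate = Callable[[str], str]


def self_play_round(
    generate: Generate, topic_prompt: str, num_samples: int = 4
) -> Tuple[str, List[str]]:
    """One round: the same model proposes a question, then answers it."""
    # Proposer role: turn the topic prompt into a concrete question.
    question = generate(f"[PROPOSER] {topic_prompt}")
    # Solver role: sample several answers to that question.
    answers = [generate(f"[SOLVER] {question}") for _ in range(num_samples)]
    return question, answers


def toy_model(prompt: str) -> str:
    """Stand-in for an LLM so the sketch runs without any model weights."""
    if prompt.startswith("[PROPOSER]"):
        return "What is 12 * 11?"
    return "132"


question, answers = self_play_round(
    toy_model, "Generate a three-digit arithmetic problem.", num_samples=3
)
```

Passing one `generate` function for both roles mirrors the point that proposer and solver share the same weights; only the prompt changes.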

No Labels? No Problem — Just Vote or Test

Without labeled answers, SQLM relies on proxy rewards to measure performance:

- For math problems, the solver generates multiple answers, and the majority answer is treated as correct.

- For coding problems, correctness is verified by passing unit tests.

The proposer gets rewarded for generating problems that are neither trivial nor impossible — that is, ones where some answers are correct, but not all. This encourages a natural curriculum: as the solver gets better, the proposer has to up its game.
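For the math case, the voting rewards described above can be sketched as follows. The function names are illustrative, answers are assumed to be already normalized to strings, the list is assumed to be non-empty, and breaking ties in favor of the first answer seen is an assumption rather than something the source specifies.

```python
from collections import Counter
from typing import List


def majority_answer(answers: List[str]) -> str:
    """Most frequent answer; ties go to the answer seen first (an assumption)."""
    return Counter(answers).most_common(1)[0][0]


def solver_rewards(answers: List[str]) -> List[int]:
    """Reward 1 for each sampled answer that matches the majority, else 0."""
    target = majority_answer(answers)
    return [int(a == target) for a in answers]


def proposer_reward(answers: List[str]) -> int:
    """Reward 1 only if some, but not all, answers match the majority."""
    matches = sum(solver_rewards(answers))
    return int(0 < matches < len(answers))


# Four sampled answers to "123 * 456": three agree, one does not.
samples = ["56088", "56088", "56098", "56088"]
print(solver_rewards(samples))          # [1, 1, 0, 1]
print(proposer_reward(samples))         # 1: the solver disagreed with itself
print(proposer_reward(["7", "7", "7"]))  # 0: unanimous, so likely too easy
```

A unanimous vote earns the proposer nothing, which is what pushes it toward harder questions as the solver improves.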

Reward Design Cheat Sheet

| Domain | Solver Reward | Proposer Reward |
|---|---|---|
| Arithmetic | 1 if answer = majority vote | 1 if 0 < #matching answers < total |
| Coding | Fraction of tests passed | 1 if some but not all tests are passed |
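The coding row can be sketched in the same spirit. This is a simplified illustration under stated assumptions: the `TestCase` format, the function names, and the `buggy_abs` example are hypothetical, the test list is assumed to be non-empty, and a real system would run untrusted generated code in a sandbox rather than calling it directly.

```python
from typing import Any, Callable, Sequence, Tuple

# Hypothetical test-case format: (positional arguments, expected return value).
TestCase = Tuple[Tuple[Any, ...], Any]


def count_passed(candidate: Callable[..., Any], tests: Sequence[TestCase]) -> int:
    """Run the candidate on each test; an exception counts as a failure."""
    passed = 0
    for args, expected in tests:
        try:
            if candidate(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crashing solution simply fails this test
    return passed


def code_solver_reward(candidate: Callable[..., Any], tests: Sequence[TestCase]) -> float:
    """Fraction of unit tests the candidate solution passes."""
    return count_passed(candidate, tests) / len(tests)


def code_proposer_reward(candidate: Callable[..., Any], tests: Sequence[TestCase]) -> int:
    """Reward 1 only if some, but not all, tests pass."""
    passed = count_passed(candidate, tests)
    return int(0 < passed < len(tests))


def buggy_abs(x: int) -> int:
    return x  # wrong for negative inputs


tests = [((3,), 3), ((-2,), 2), ((0,), 0)]
partial = code_solver_reward(buggy_abs, tests)   # 2 of 3 tests pass
useful = code_proposer_reward(buggy_abs, tests)  # 1: partially solved
```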

Learning by Challenging Itself

With just a single prompt like “Generate a three-digit arithmetic problem,” SQLM improves markedly over its base model on several tasks:

| Task | Base Accuracy | + SQLM Accuracy |
|---|---|---|
| Multiplication (Qwen-3B) | 79.1% | 94.8% |
| Algebra (OMEGA) | 44.0% | 60.0% |
| Coding (Codeforces) | 32.0% | 39.1% |

Crucially, this is achieved with zero curated training data — just self-play and a prompt.

A Curriculum Emerges — Naturally

One striking observation is how SQLM’s training loop produces an emergent curriculum. Early on, proposers generate simple problems (e.g., addition). As the solver improves, problems become more complex — involving nested expressions or algorithmic reasoning. This mirrors how humans learn: not by jumping straight to expert problems, but by gradually increasing difficulty.

A PCA of the generated problems adds a related finding: problems generated incrementally (one per step) are more diverse and effective than those pre-generated in batches.

The Generator-Verifier Gap: A Crucial Insight

SQLM hinges on a clever recognition: in some tasks, verifying an answer is just as hard as producing it (e.g., math); in others, verification is easier (e.g., coding with unit tests). This “generator-verifier gap” guides how SQLM designs its feedback loops. Where verification is easy, rely on it. Where it’s hard, use internal signals like self-consistency.

This insight isn’t just technical — it’s strategic. Future training systems should tailor their learning loops to match the cost of verification in each domain.
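One way to picture this rule is a scoring function that prefers an external check when one is cheap and falls back to self-consistency otherwise. The sketch below is an illustration only: `score_samples` and the `verifier` parameter are hypothetical names, and the sample list is assumed to be non-empty.

```python
from collections import Counter
from typing import Callable, List, Optional


def score_samples(
    samples: List[str], verifier: Optional[Callable[[str], bool]] = None
) -> List[int]:
    """Score solver samples with an external check if one exists,
    otherwise by agreement with the majority answer."""
    if verifier is not None:
        # Cheap verification available (e.g. unit tests): trust that signal.
        return [int(verifier(s)) for s in samples]
    # No cheap verifier (e.g. free-form math): fall back to self-consistency.
    majority = Counter(samples).most_common(1)[0][0]
    return [int(s == majority) for s in samples]


samples = ["41", "41", "42"]
print(score_samples(samples, verifier=lambda s: s == "42"))  # [0, 0, 1]
print(score_samples(samples))                                # [1, 1, 0]
```

The two printed lines disagree on purpose: the fallback rewards whichever answer is most common, whether or not it is right, so it is only as reliable as the solver’s own consensus.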

Limitations and the Road Ahead

SQLM isn’t magic. Prompt design still requires manual effort, especially for formatting or task scope. And where the reward comes from majority voting rather than an external check, the model can reinforce beliefs that are consistent but incorrect.

Yet the vision is compelling: self-improving models that don’t just learn from human input, but from themselves. Imagine:

- A tutoring model that gets better by writing its own practice exams.

- A coding assistant that invents novel challenges to test its logic.

- An LLM researcher that runs mental experiments before trying real ones.

In SQLM, we glimpse a future where language models aren’t just tools — they’re co-thinkers.

Cognaptus: Automate the Present, Incubate the Future