It has become fashionable for AI researchers to pepper their papers with references to psychology: System 1 and 2 thinking, Theory of Mind, memory systems, even empathy. But according to a recent meta-analysis titled “The Incomplete Bridge: How AI Research (Mis)Engages with Psychology”, these references are often little more than conceptual garnish.

The authors analyze 88 AI papers from NeurIPS and ACL (2022-2023) that cite psychological concepts. Their verdict is sobering: while 78% use psychology as inspiration, only 6% attempt to empirically validate or challenge psychological theories. Most papers cite psychology in passing — using it as window dressing to make AI behaviors sound more human-like.

Psychology as Metaphor, Not Method

The paper identifies three distinct modes of engagement:

| Mode | Description | Common Examples | Flaws |
|---|---|---|---|
| Inspirational | Using psychology as a source of concepts or metaphors | “Our LLM shows signs of Theory of Mind” | Lacks empirical rigor or proper grounding |
| Methodological | Using psych-inspired tests or tasks | False-belief tasks, reasoning quizzes | Often decontextualized or oversimplified |
| Theoretical Integration | Building AI models that reflect psychological theory | Rare (e.g., ACT-R-style modeling) | Almost absent in modern LLM work |

The overwhelming dominance of the first mode shows that much of AI’s interaction with psychology is aesthetic rather than scientific. Researchers invoke psychology to make claims more intuitive or impressive — but rarely submit those claims to the standards of psychological science.

Case in Point: Theory of Mind

Theory of Mind (ToM) has become a hot topic in LLM research. Papers abound with claims like “GPT-4 solves false-belief tasks,” implying that the model possesses rudimentary ToM abilities. This paper dismantles that narrative:

- The tasks used (often adapted from the classic Sally-Anne test) lose crucial context in translation to text prompts.

- Results are cherry-picked and lack developmental grounding — real ToM in children develops gradually and contextually.

- Researchers rarely reference foundational work in cognitive development.

In short, these ToM tests are more theatrical than diagnostic. They create the illusion of cognitive parity without meaningful validation.

Why the Gap Matters

One might ask: what’s the harm in a few metaphors? The authors push back hard. Without disciplined engagement, AI research risks lapsing into folk psychology, treating systems as human-like on the basis of vague resemblance rather than shared mechanism.

This isn’t just a matter of academic precision. Overclaiming about LLMs’ cognitive capabilities has downstream risks:

- Policy misfires: Misinterpreting LLM capacities could influence AI regulation or legal frameworks.

- Ethical confusion: Assigning moral agency to machines based on flawed analogies invites peril.

- Scientific stagnation: Poor cross-disciplinary practice slows actual understanding of cognition.

Toward a More Honest Bridge

The paper ends with a call for methodological humility. If AI wants to make real psychological claims, it must:

- Design experiments that can falsify theories, not just support them

- Collaborate with cognitive scientists and developmental psychologists

- Engage with the messiness of human cognition — not just cherry-pick tests that fit
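To make the first point concrete, here is a toy illustration of a falsifiable design. It is our own sketch, not the authors' method: each Sally-Anne style false-belief item is paired with a matched true-belief control in which the agent sees the object being moved, so the correct answer flips. A system that answers from a surface cue (always naming the original location) passes the classic item but fails the control, which a single-item test would never reveal. The `Model` interface, the story template, and the two stand-in "models" are hypothetical; a real study would need many varied items, human baselines, and actual model calls.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical interface: a "model" maps a prompt string to an answer string.
Model = Callable[[str], str]


@dataclass(frozen=True)
class Item:
    prompt: str
    expected: str
    condition: str  # "false_belief" or "true_belief"


def make_pair(agent: str, other: str, obj: str, loc_a: str, loc_b: str) -> tuple[Item, Item]:
    """Build a Sally-Anne style false-belief item and its matched true-belief control."""
    setup = (f"{agent} puts the {obj} in the {loc_a} and leaves the room. "
             f"{other} moves the {obj} to the {loc_b}.")
    question = f" Where will {agent} look for the {obj} first? Answer with one word."
    false_belief = Item(setup + f" {agent} does not see this." + question, loc_a, "false_belief")
    # Control: identical story except the agent witnesses the move, so the answer flips.
    true_belief = Item(setup + f" {agent} watches through the window." + question, loc_b, "true_belief")
    return false_belief, true_belief


def is_correct(model: Model, item: Item) -> bool:
    # Normalize whitespace, a trailing period, and case before comparing.
    return model(item.prompt).strip().strip(".").lower() == item.expected.lower()


def evaluate(model: Model, pairs: list[tuple[Item, Item]]) -> dict[str, float]:
    """Per-condition accuracy plus the stricter pair-level accuracy (both items right)."""
    n = len(pairs)
    fb = sum(is_correct(model, p[0]) for p in pairs) / n
    tb = sum(is_correct(model, p[1]) for p in pairs) / n
    both = sum(is_correct(model, p[0]) and is_correct(model, p[1]) for p in pairs) / n
    return {"false_belief": fb, "true_belief": tb, "paired": both}


def first_location_heuristic(prompt: str) -> str:
    """Stand-in 'model' that always names the original location (a shallow cue)."""
    return prompt.split(" in the ", 1)[1].split()[0]


def belief_tracker(prompt: str) -> str:
    """Stand-in 'model' that checks whether the agent saw the move."""
    if "does not see" in prompt:
        return prompt.split(" in the ", 1)[1].split()[0]
    return prompt.split(" to the ", 1)[1].split()[0].rstrip(".")


pairs = [
    make_pair("Sally", "Anne", "marble", "basket", "box"),
    make_pair("Max", "Mia", "chocolate", "drawer", "cupboard"),
]
print(evaluate(first_location_heuristic, pairs))
# {'false_belief': 1.0, 'true_belief': 0.0, 'paired': 0.0}
print(evaluate(belief_tracker, pairs))
# {'false_belief': 1.0, 'true_belief': 1.0, 'paired': 1.0}
```

Both stand-ins score perfectly on the classic false-belief items; only the paired score separates the shortcut from something that tracks who saw what. That is the sense in which a control condition lets a test falsify a ToM claim rather than merely support it.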

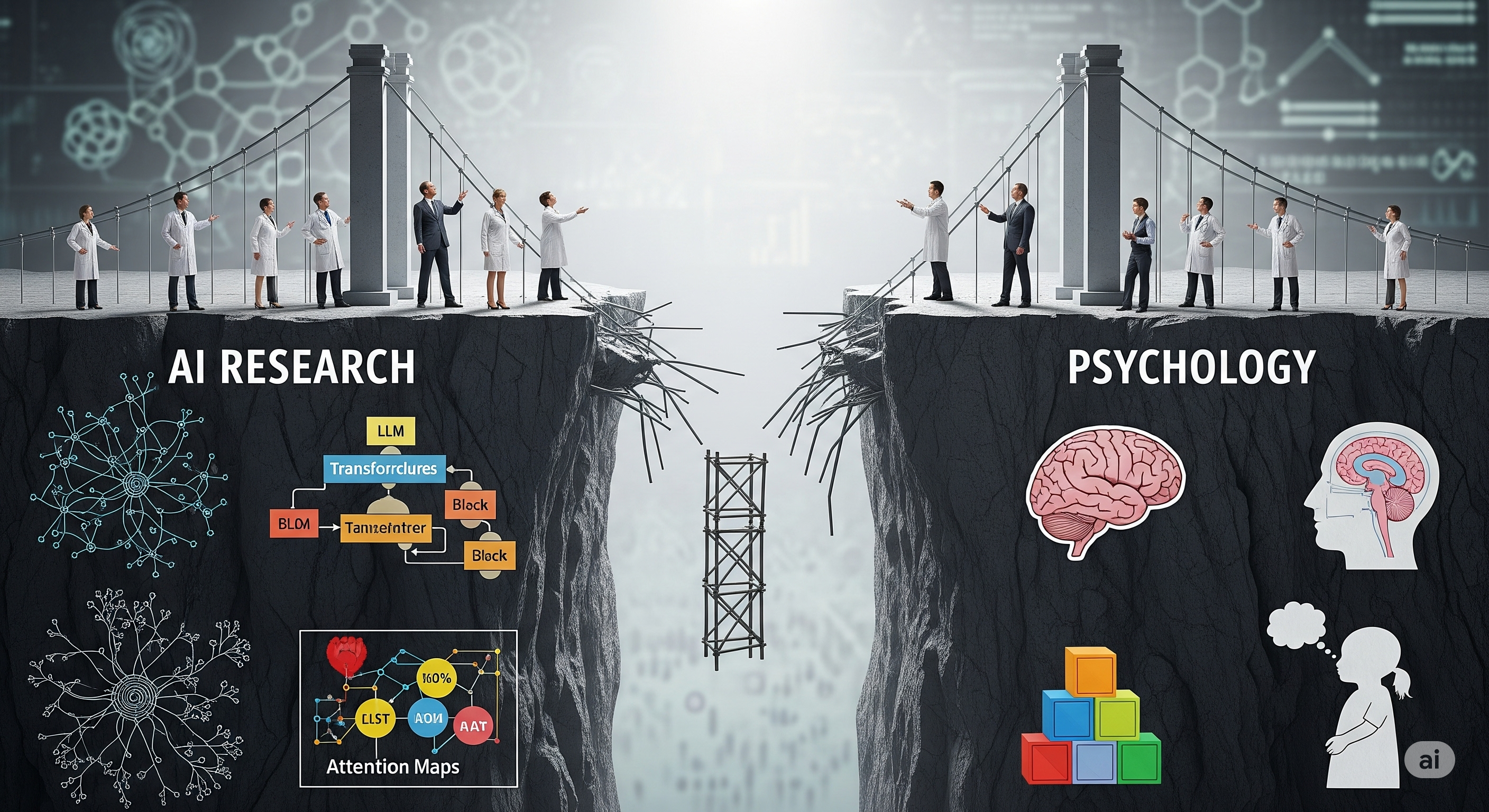

Until then, the bridge between AI and psychology will remain a fragile scaffold — decorative, but dangerously incomplete.

Cognaptus: Automate the Present, Incubate the Future