Social simulations are entering their uncanny valley. Fueled by generative agents powered by Large Language Models (LLMs), recent frameworks such as Generative Agents (with its Smallville sandbox), AgentSociety, and SocioVerse simulate anywhere from dozens to thousands of lifelike agents forming friendships, spreading rumors, and planning parties. But do these simulations reflect real social processes, or do they merely replay the statistical shadows of the internet?

When Simulacra Speak Fluently

LLMs have demonstrated striking abilities to mimic human behavior. GPT-4 has passed Theory-of-Mind (ToM) tests at levels comparable to six- to seven-year-old children. In narrative contexts, it can detect sarcasm, interpret indirect requests, and generate empathetic replies. But none of this arises from embodied cognition or real-world goals; it emerges from next-token prediction trained on massive corpora.

The illusion of understanding is powerful. Well-crafted persona prompts can make an LLM agent seem introspective or conflicted. Yet beneath the surface, there is no enduring self and no consistent memory state unless one is explicitly engineered. The model doesn't know anything; it samples each next token from a probability distribution. This distinction becomes critical when LLMs are deployed to simulate entire societies.
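
To make "samples from a probability distribution" concrete, here is a minimal sketch of temperature-scaled softmax sampling over a toy vocabulary. The vocabulary and logit values are invented for illustration; real models do this over tens of thousands of tokens, and production decoders add refinements such as top-k or nucleus sampling that are omitted here.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores (logits) into probabilities.

    Lower temperature sharpens the distribution toward the top-scoring token;
    higher temperature flattens it.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab, logits, temperature=1.0, rng=random):
    """Draw one token at random, weighted by its softmax probability."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Hypothetical toy vocabulary and scores for an agent's next word.
vocab = ["agree", "disagree", "unsure"]
logits = [2.0, 1.0, 0.5]

print([round(p, 3) for p in softmax(logits, temperature=1.0)])
print([round(p, 3) for p in softmax(logits, temperature=0.3)])
print(sample_next_token(vocab, logits, temperature=0.7, rng=random.Random(0)))
```

Lowering the temperature concentrates probability mass on the single most likely continuation, which is one plausible mechanical contributor to the "average persona" convergence discussed later in this post.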

From Smallville to Society: What’s Changing?

A new wave of simulation platforms is stretching the scale and ambition of LLM-based multi-agent systems:

| Platform | Scale | Unique Features |
|---|---|---|
| Generative Agents | 25 agents | Rich memory stream, planning via reflection loops |
| AgentSociety | 10,000+ agents | Social networks, economic roles, empirical validation |
| GenSim | 10,000+ agents | Memory management, anomaly detection, dynamic scheduling |
| SocioVerse | Population-scale | Demographically conditioned agents via real-world user data |
| SALLMA | Modular | Layered architecture with APIs and simulation playback tools |
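
The "memory stream" in the Generative Agents row is the clearest example of memory that has to be engineered around the model. That paper describes ranking stored memories by a combination of recency, importance, and relevance. The sketch below follows that idea in simplified form. The decay factor of 0.995 per simulated hour and the equal weights follow the published description as we understand it, but treat them as assumptions. The hand-written importance scores and 2-D embeddings are hypothetical stand-ins for the LLM ratings and text embeddings a real system would use.

```python
import math
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    importance: float          # 1-10; a real system would ask the LLM to rate this
    hours_since_access: float  # simulated hours since the memory was last retrieved
    embedding: list            # toy vector; a real system would embed the text


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def min_max(values):
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # all equal: this component cannot discriminate
    return [(v - lo) / (hi - lo) for v in values]


def retrieve(memories, query_embedding, k=2, decay=0.995, weights=(1.0, 1.0, 1.0)):
    """Return the top-k memories by a weighted sum of normalized scores."""
    recency = min_max([decay ** m.hours_since_access for m in memories])
    importance = min_max([m.importance for m in memories])
    relevance = min_max([cosine(m.embedding, query_embedding) for m in memories])
    w_rec, w_imp, w_rel = weights
    scores = [w_rec * r + w_imp * i + w_rel * v
              for r, i, v in zip(recency, importance, relevance)]
    ranked = sorted(zip(scores, memories), key=lambda pair: pair[0], reverse=True)
    return [m for _, m in ranked[:k]]


memories = [
    Memory("Heard a neighbor is planning a party", 8, 2, [1.0, 0.0]),
    Memory("Ate breakfast", 2, 1, [0.0, 1.0]),
    Memory("Chatted with a friend about the party guest list", 5, 30, [0.7, 0.7]),
]
query = [1.0, 0.1]  # hypothetical embedding of "What do I know about the party?"

for m in retrieve(memories, query, k=2):
    print(m.text)
```

Only the retrieved memories would be placed in the agent's prompt, which is how these systems give a stateless model something resembling continuity.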

While these systems show that LLMs can support vast and expressive agent behaviors, they also inherit deep flaws:

- Convergence toward an “average persona” suppresses diversity

- Cognitive biases like anchoring and framing are replicated, not corrected

- Hallucinations inject plausible but false beliefs into agent reasoning

- Temporal drift, as hosted models are updated over time, reduces reproducibility across runs
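
One way to check for convergence toward an "average persona" is to measure how spread out the agents' answers are and compare that with a reference population. A minimal sketch, assuming categorical answers and using normalized Shannon entropy as the diversity score; the categories and answer counts are invented for illustration, and other measures (for example, variance on a numeric scale) would serve just as well.

```python
import math
from collections import Counter


def normalized_entropy(responses, categories):
    """Shannon entropy of the answer distribution divided by its maximum, log(k).

    1.0 means answers are spread evenly across all k categories;
    0.0 means every agent gave the same answer. Requires k >= 2.
    """
    counts = Counter(responses)
    n = len(responses)
    h = sum(-(c / n) * math.log(c / n) for c in counts.values())
    return h / math.log(len(categories))


categories = ["support", "oppose", "undecided"]
# Hypothetical answers from 10 simulated agents with different persona prompts.
simulated = ["support"] * 8 + ["undecided"] * 2
# Hypothetical answers from a survey sample of the same size.
survey = ["support"] * 4 + ["oppose"] * 3 + ["undecided"] * 3

print(round(normalized_entropy(simulated, categories), 3))
print(round(normalized_entropy(survey, categories), 3))
```

A simulated population whose score sits well below the reference is a warning sign that persona prompts are not producing the diversity they were meant to encode.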

These aren’t mere technical bugs. They’re epistemic liabilities that strike at the credibility of simulation-based research.

Black Boxes, Average People, and the Illusion of Science

At first glance, LLMs seem like the ultimate social modelers — capable of simulating anyone from a cynical cab driver to a grieving parent. But their fluency masks a dangerous convergence: in many simulations, agents become overly normative, reflecting the statistical center of their training data. This undermines efforts to model subcultures, minority opinions, or unpredictable behaviors that are central to real social dynamics.

Worse, modelers often cannot explain why an LLM agent chose a certain action. Unlike classical agent-based models (ABMs), where decision rules are explicit, LLMs are functionally opaque. This creates a risk of automation bias: simulation designers defer to the authority of LLM outputs and forget that those outputs are shaped by unknown training biases.
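
For contrast, here is what an explicit decision rule looks like in a classical ABM. This is a minimal sketch of a threshold adoption model in the spirit of Granovetter's threshold models, not a model from the paper; the agent names, thresholds, and network are invented. Every state change comes with a reason that can be read straight off the rule.

```python
from dataclasses import dataclass, field


@dataclass
class ThresholdAgent:
    """Adopts a behavior once the share of adopting neighbors reaches its threshold."""
    name: str
    threshold: float
    adopted: bool = False
    neighbors: list = field(default_factory=list)

    def decide(self, agents):
        """Return (new_state, explanation) using only the explicit rule."""
        if self.adopted:
            return True, "already adopted"
        if not self.neighbors:
            return False, "no neighbors"
        share = sum(agents[n].adopted for n in self.neighbors) / len(self.neighbors)
        adopt = share >= self.threshold
        verb = "adopts" if adopt else "waits"
        return adopt, f"{verb} ({share:.2f} of neighbors adopted, threshold {self.threshold:.2f})"


def step(agents):
    """Synchronous update: all decisions use the same snapshot, then states change."""
    decisions = {name: agent.decide(agents) for name, agent in agents.items()}
    log = []
    for name, (state, reason) in decisions.items():
        agents[name].adopted = state
        log.append(f"{name}: {reason}")
    return log


# Hypothetical three-agent network with one initial adopter.
agents = {
    "A": ThresholdAgent("A", 0.0, adopted=True),
    "B": ThresholdAgent("B", 0.5, neighbors=["A", "C"]),
    "C": ThresholdAgent("C", 0.6, neighbors=["A", "B"]),
}
for line in step(agents):
    print(line)
```

The audit log is the point: an LLM-driven agent can produce a plausible-sounding justification, but nothing guarantees it reflects the computation that actually produced the action.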

When Are LLMs Actually Useful?

LLMs shine in interactive contexts:

- Educational games where students role-play with responsive NPCs

- Training simulators for emergency response or diplomacy

- Narrative-rich worlds with improv storytelling and dynamic events

In these settings, believability and engagement matter more than scientific rigor, so the lack of an inner psychology is less of a problem: the goal is experience, not explanation.

But in scientific or policy simulations — modeling vaccine hesitancy, political polarization, or economic shocks — LLMs’ limitations become critical. Their outputs may sound reasonable yet rest on hallucinated logic. Their behavioral patterns may align with training-set majorities, not real-world demographics.
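
A basic safeguard is to compare simulated behavior against observed distributions for each demographic group before trusting aggregate results. The sketch below uses total variation distance per group. The age groups, counts, and the 0.15 tolerance are invented placeholders, not real survey data or a recommended threshold.

```python
def to_distribution(counts):
    """Convert a dict of counts into a dict of probabilities."""
    total = sum(counts.values())
    return {k: v / total for k, v in counts.items()}


def total_variation(p, q):
    """Total variation distance between two categorical distributions.

    0.0 means identical distributions; 1.0 means no overlap at all.
    """
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def calibration_report(simulated, observed, tolerance=0.15):
    """Per group: (rounded distance, whether it exceeds the tolerance)."""
    report = {}
    for group in observed:
        d = total_variation(to_distribution(simulated[group]),
                            to_distribution(observed[group]))
        report[group] = (round(d, 3), d > tolerance)
    return report


# Hypothetical vaccine-intention counts per age group.
simulated_counts = {"18-29": {"accept": 45, "hesitant": 5},
                    "65+": {"accept": 47, "hesitant": 3}}
survey_counts = {"18-29": {"accept": 30, "hesitant": 20},
                 "65+": {"accept": 42, "hesitant": 8}}

print(calibration_report(simulated_counts, survey_counts))
```

A per-group check matters because a simulation can match the overall average while badly misrepresenting a subgroup, which is exactly the failure mode of agents that drift toward training-set majorities.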

Toward Hybrid Simulations: The Best of Both Worlds?

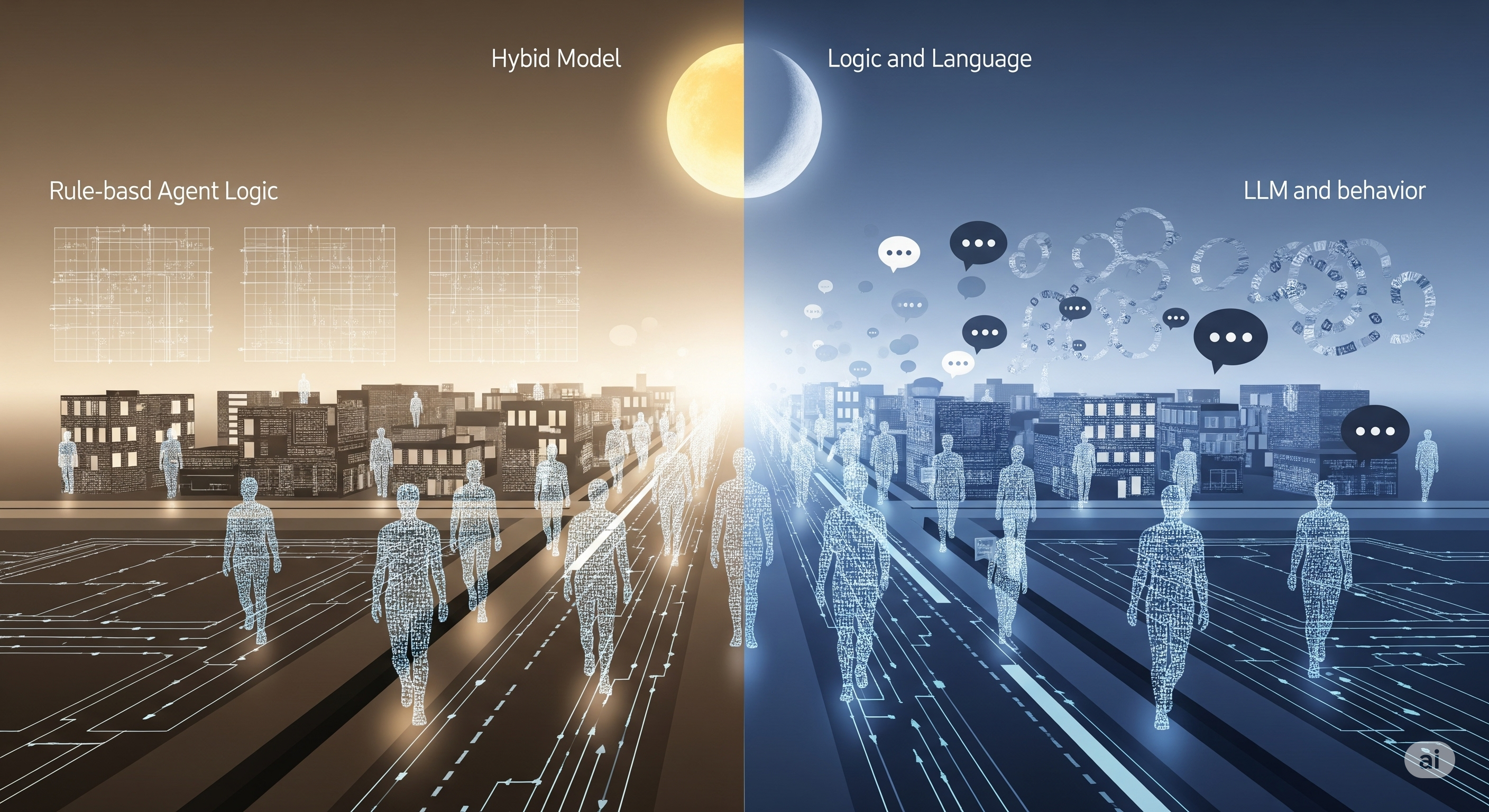

The paper makes a compelling case for LLM-augmented ABMs, not LLM-only simulations. In this view:

- Rule-based agents maintain transparency, reproducibility, and alignment with theory.

- LLMs can enrich agents with natural language reasoning, dynamic emotion expression, or context-sensitive reactions.

This hybrid approach is already in motion. Platforms like NetLogo and GAMA are integrating LLM modules via Python APIs. LLMs can handle decision subroutines, policy interpretation, or persona dialogue — while leaving control logic to the deterministic core of the simulation.
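
The division of labor described above can be sketched in plain Python. This does not use NetLogo's or GAMA's actual APIs; the `llm` argument is a hypothetical hook standing in for any model call, and the hunger rule with its 0.7 and 0.3 values is invented for illustration.

```python
from typing import Callable, Optional

# Hypothetical LLM hook: takes a prompt, returns generated text.
# A real client would be wrapped to fit this signature.
LLMFn = Callable[[str], str]


class HybridAgent:
    """Rule-based agent whose decisions are deterministic; an optional LLM only voices them."""

    def __init__(self, name: str, hunger: float = 0.0, llm: Optional[LLMFn] = None):
        self.name = name
        self.hunger = hunger
        self.llm = llm

    def choose_action(self) -> str:
        # Deterministic core: an explicit, auditable rule (threshold is illustrative).
        return "eat" if self.hunger > 0.7 else "work"

    def update_state(self, action: str) -> None:
        # State transitions are rule-based too, so trajectories are reproducible.
        if action == "eat":
            self.hunger = 0.0
        else:
            self.hunger = min(1.0, self.hunger + 0.3)

    def speak(self, action: str) -> str:
        # The LLM is confined to rendering the already-chosen action as dialogue.
        fallback = f"{self.name} is going to {action}."
        if self.llm is None:
            return fallback
        prompt = f"You are {self.name}. In one short sentence, say that you are about to {action}."
        try:
            return self.llm(prompt)
        except Exception:
            # If the model call fails, the simulation continues with template text.
            return fallback

    def step(self):
        action = self.choose_action()
        line = self.speak(action)
        self.update_state(action)
        return action, line


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call, for demonstration only.
    return "(LLM) " + prompt.split("about to ")[-1]


agent = HybridAgent("Ana", hunger=0.5, llm=fake_llm)
for _ in range(3):
    print(agent.step())
```

Because only the rule-based core decides what happens, swapping, removing, or losing the LLM changes the wording of the dialogue but not the simulated trajectory, which keeps the scientific part of the run reproducible.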

In large-scale simulations, even small language models (SLMs) may be preferable, offering efficiency and stability without the massive compute overhead of GPT-tier models.

Final Thoughts: Simulating Wisely

Using LLMs to simulate society is like building a city out of mirrors: you’ll see familiar shapes and human-like behaviors, but they reflect data distributions, not lived experience. That doesn’t mean these systems are useless. But it means we need to be careful about what questions we ask of them — and what answers we trust.

Cognaptus: Automate the Present, Incubate the Future.