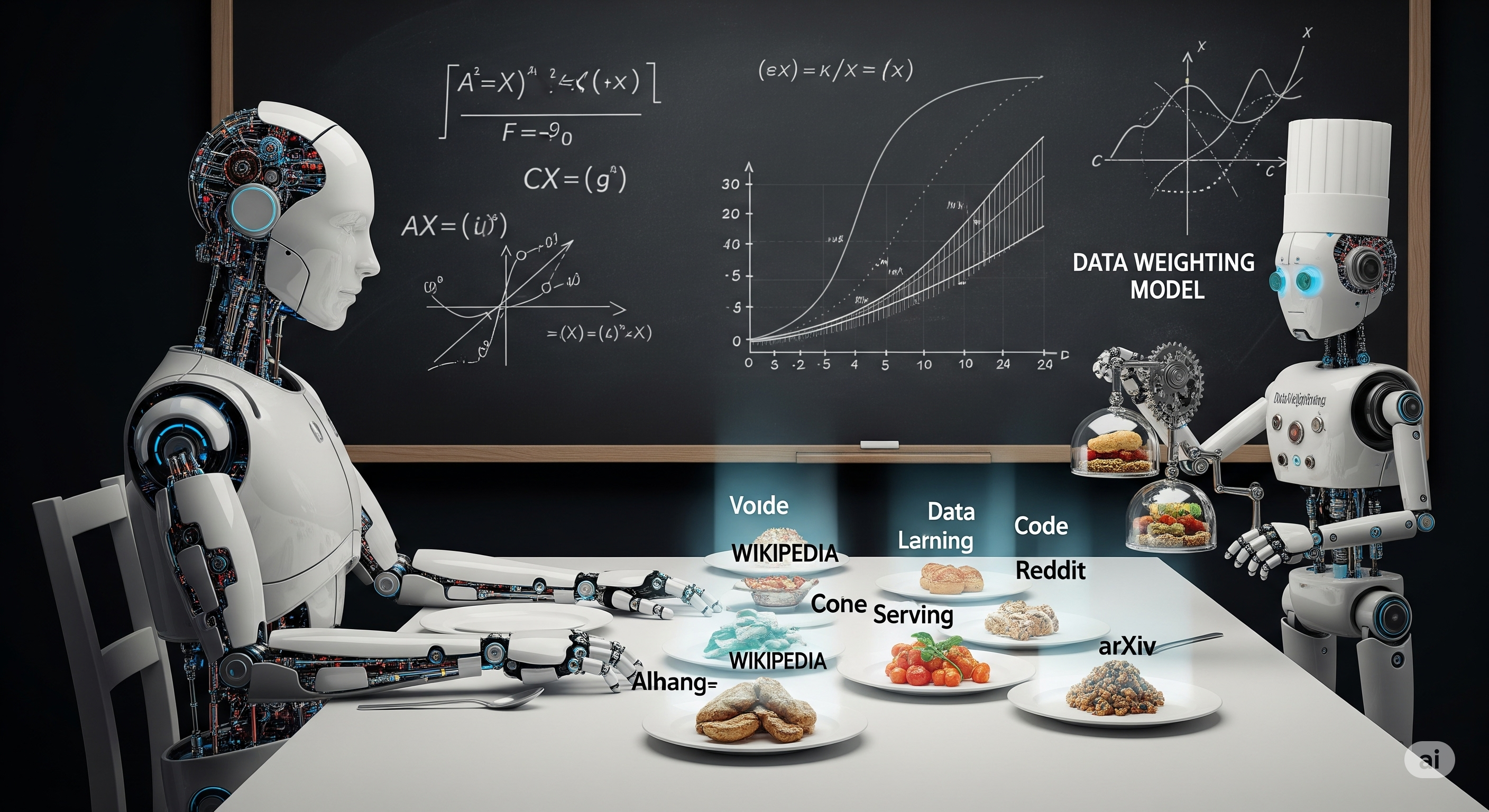

Most large language models (LLMs) are trained as if every piece of data is equally nutritious. But just as elite athletes optimize not just what they eat but when and how they eat it, a new paper proposes that LLMs can perform better if we learn to dynamically adjust their data “diet” during training.

The Static Selection Problem

Traditional data selection for LLMs is front-loaded and fixed: you decide what data to keep before training, often using reference datasets (e.g., Wikipedia) or reference models (e.g., GPT-3.5) to prune the lowest-quality examples. While effective in reducing cost, this approach ignores a key insight: an LLM’s preference for certain types of data evolves over time.

Even state-of-the-art selection techniques evaluate samples in isolation. They miss interactions among samples within a batch, and they cannot adapt to the LLM's changing needs across training stages. When every retained sample is treated as equally important for the whole run, downstream generalization can plateau.

Enter Dynamic Bi-Level Optimization

The authors propose a clever alternative: a Data Weighting Model (DWM) that learns to assign different weights to training samples within each batch. Rather than deciding what to throw out, this model decides how much each sample should matter at any given point in training.
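To make the weighting idea concrete, here is a minimal sketch of per-batch reweighting. It assumes the DWM emits one raw score per sample and that the scores are normalized with a softmax over the batch. The linear scorer, the feature vectors, and the mean-1 rescaling are illustrative assumptions, not the paper's architecture.

```python
import math
from typing import List, Sequence


def score_sample(dwm_params: Sequence[float], features: Sequence[float]) -> float:
    """Hypothetical DWM: a linear scorer over per-sample features."""
    return sum(p * f for p, f in zip(dwm_params, features))


def batch_weights(scores: Sequence[float]) -> List[float]:
    """Softmax over the batch, rescaled so the weights average to 1.

    Equal scores give every sample weight 1.0, which recovers the usual
    unweighted mean loss.
    """
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    n = len(scores)
    return [n * e / total for e in exps]


def weighted_batch_loss(losses: Sequence[float], weights: Sequence[float]) -> float:
    """Mean of per-sample losses, each scaled by its weight."""
    return sum(w * loss for w, loss in zip(weights, losses)) / len(losses)


# Usage: three samples with hypothetical 2-d features and per-sample LM losses.
dwm_params = [1.0, -0.5]
features = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]
losses = [2.1, 3.4, 2.7]
weights = batch_weights([score_sample(dwm_params, f) for f in features])
print(weights, weighted_batch_loss(losses, weights))
```

Normalizing within the batch means a sample's weight depends on the other samples it appears with. This is one simple way to let weights reflect the batch-level interactions the static methods ignore.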

The architecture relies on bi-level optimization, a technique borrowed from meta-learning:

- Inner loop (lower level): The LLM is updated using weighted training data.

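- Outer loop (upper level): The DWM is optimized based on the LLM's performance on a held-out validation set (e.g., LAMBADA). The validation loss of the updated LLM is differentiated with respect to the sample weights. The chain rule then carries that gradient back through the LLM's weighted update and into the DWM's parameters.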
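The chain-rule step in the outer loop can be illustrated on a toy problem. This sketch makes several simplifying assumptions: a one-parameter linear model with squared error, a single SGD step as the inner loop, and gradients taken directly with respect to the per-sample weights. In the paper's setting the model is an LLM, and these gradients would continue on into the DWM's own parameters.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y) for a toy model y_hat = theta * x


def loss(theta: float, s: Sample) -> float:
    x, y = s
    return (theta * x - y) ** 2


def grad(theta: float, s: Sample) -> float:
    """d loss / d theta for one sample."""
    x, y = s
    return 2.0 * x * (theta * x - y)


def inner_step(theta: float, batch: Sequence[Sample],
               weights: Sequence[float], lr: float) -> float:
    """Lower level: one SGD step on the weighted training loss."""
    g = sum(w * grad(theta, s) for w, s in zip(weights, batch)) / len(batch)
    return theta - lr * g


def val_loss(theta: float, val: Sequence[Sample]) -> float:
    return sum(loss(theta, s) for s in val) / len(val)


def hypergradient(theta: float, batch: Sequence[Sample], weights: Sequence[float],
                  val: Sequence[Sample], lr: float) -> List[float]:
    """Upper level: d val_loss(theta') / d w_i via the chain rule.

    dL_val/dw_i = (dL_val/dtheta') * (dtheta'/dw_i),
    where dtheta'/dw_i = -lr * grad(theta, batch[i]) / len(batch).
    """
    theta_new = inner_step(theta, batch, weights, lr)
    dval_dtheta = sum(grad(theta_new, s) for s in val) / len(val)
    return [dval_dtheta * (-lr * grad(theta, s) / len(batch)) for s in batch]


# Usage: the clean sample agrees with validation (y = 2x); the noisy one does not.
batch = [(1.0, 2.0), (1.0, -2.0)]
val = [(1.0, 2.0)]
h = hypergradient(theta=0.0, batch=batch, weights=[1.0, 1.0], val=val, lr=0.1)
print(h)  # negative for the clean sample, positive for the noisy one
```

A descent step on the weights (`w_i -= eta * h_i`) would therefore up-weight the clean sample and down-weight the noisy one. This is the signal the outer loop uses to shape the weighting.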

Training alternates across five stages. In each stage, the DWM is first updated. It is then frozen while the LLM trains on the batches it weights, and the cycle repeats in the next stage.
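The stage-wise alternation can be written as a simple control loop. This is a minimal sketch that assumes the two phases are supplied as callables. The names `update_dwm`, `train_llm_step`, and `steps_per_stage` are hypothetical. A real run would replace the logging stubs with the outer-loop DWM update and ordinary weighted LLM training.

```python
from typing import Callable, List


def alternating_schedule(
    num_stages: int,
    steps_per_stage: int,
    update_dwm: Callable[[int], None],
    train_llm_step: Callable[[int, int], None],
) -> None:
    """Alternate DWM updates with LLM training, one cycle per stage."""
    for stage in range(num_stages):
        # 1) Upper level: refresh the weighting model against validation data.
        update_dwm(stage)
        # 2) Lower level: update_dwm is not called again until the next stage,
        #    so the DWM stays fixed while the LLM trains on reweighted batches.
        for step in range(steps_per_stage):
            train_llm_step(stage, step)


# Usage with logging stubs in place of real training code.
log: List[str] = []
alternating_schedule(
    num_stages=5,
    steps_per_stage=2,
    update_dwm=lambda stage: log.append(f"dwm@{stage}"),
    train_llm_step=lambda stage, step: log.append(f"llm@{stage}.{step}"),
)
print(log[:3])  # ['dwm@0', 'llm@0.0', 'llm@0.1']
```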

Empirical Results That Matter

Training on 30B tokens of SlimPajama data with a 370M LLaMA-style model, the authors show:

| Setup | Avg. Zero-Shot Acc. | Avg. Two-Shot Acc. |
|---|---|---|
| Random data only | 44.0% | 45.1% |
| + DWM (dynamic weights) | 45.0% | 46.4% |
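The headline gains are easier to compare in absolute and relative terms. The short calculation below uses only the numbers in the table. The relative figures are derived here and are not reported by the authors.

```python
# Average accuracies (%) from the table above.
baseline = {"zero_shot": 44.0, "two_shot": 45.1}
with_dwm = {"zero_shot": 45.0, "two_shot": 46.4}

for setting in baseline:
    abs_gain = with_dwm[setting] - baseline[setting]  # percentage points
    rel_gain = 100.0 * abs_gain / baseline[setting]   # percent of baseline
    print(f"{setting}: +{abs_gain:.1f} pts ({rel_gain:.1f}% relative)")
# zero_shot: +1.0 pts (2.3% relative)
# two_shot: +1.3 pts (2.9% relative)
```

The two-shot gain of +1.3 points appears to be the figure reported for the "Random" row in the comparison across selection methods.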

Performance gains are more pronounced in later training stages and on few-shot reasoning tasks like BoolQ and SciQA. Moreover, DWM improves data utilization even when applied on top of existing selection methods (e.g., DSIR, QuRating).

| Base Selection Method | Model Size | Gain with DWM | Improved? |
|---|---|---|---|
| Random | 370M | +1.3 pts | ✅ |
| QuRating | 1.3B | +0.7 pts | ✅ |
| DSIR | 370M | +1.1 pts | ✅ |

Why This Matters

This isn’t just another benchmark squeeze. The implications extend to any team training its own LLMs:

- Efficiency: Rather than filtering your dataset upfront and praying it works across stages, you can train a small weighting model once and reuse it.

- Transferability: A DWM trained alongside a 370M model can be reused to benefit a 1.3B model, adding only about 9% computational overhead.

- Adaptivity: Early in training, models prefer clean general data. Later, they crave expertise-rich and logic-heavy samples. DWM tracks this evolution.

Beyond the Paper: A Future of Adaptive LLM Training

This work aligns with a larger movement we’ve tracked at Cognaptus: making LLM training more feedback-driven and context-aware. From retrieval-augmented instruction tuning to agent-guided curriculum shaping, the key theme is this:

Don’t just pick the best data. Learn which data matters when.

With techniques like DWM, we inch closer to treating model training not as a one-shot filtering game, but as a strategic, stage-wise optimization—one that mirrors how humans learn: with changing needs, evolving context, and adaptive focus.

Cognaptus: Automate the Present, Incubate the Future.