Cognaptus Insights introduces Retrieval-Augmented Learning (RAL), an approach proposed by Zongyuan Li et al.¹ that lets large language models (LLMs) improve their decision-making autonomously, without updating model parameters through gradient updates or fine-tuning.

Understanding Retrieval-Augmented Learning (RAL)

RAL is designed for situations where fine-tuning large models like GPT-3.5 or GPT-4 is impractical. It leverages structured memory and dynamic prompt engineering, enabling models to autonomously refine their responses based on previous interactions and validations.

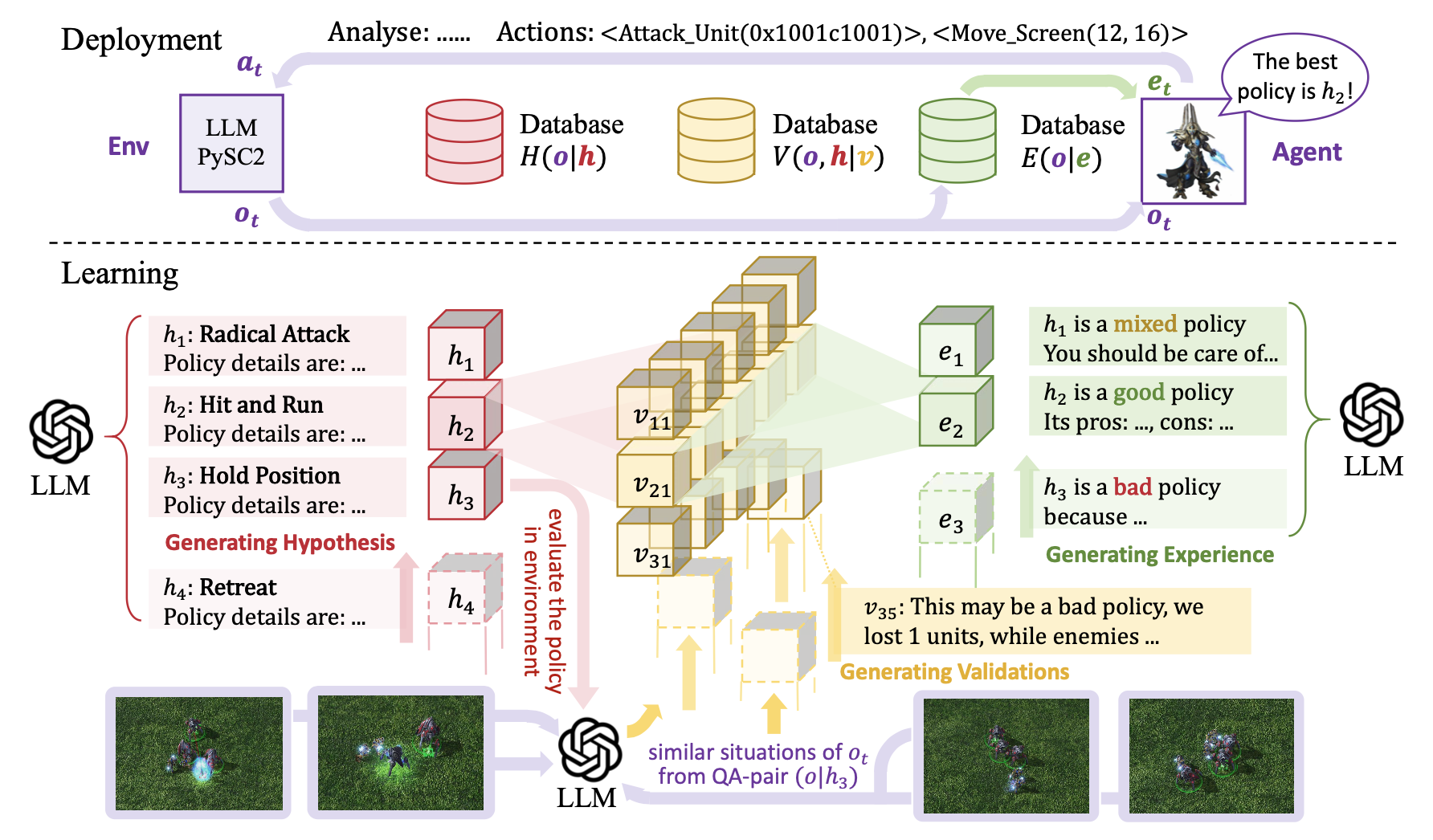

Unlike traditional fine-tuning or reinforcement learning approaches that require gradient updates, RAL achieves improvements by:

- Hypothesis generation: Creating and testing new strategies based on initial inputs.

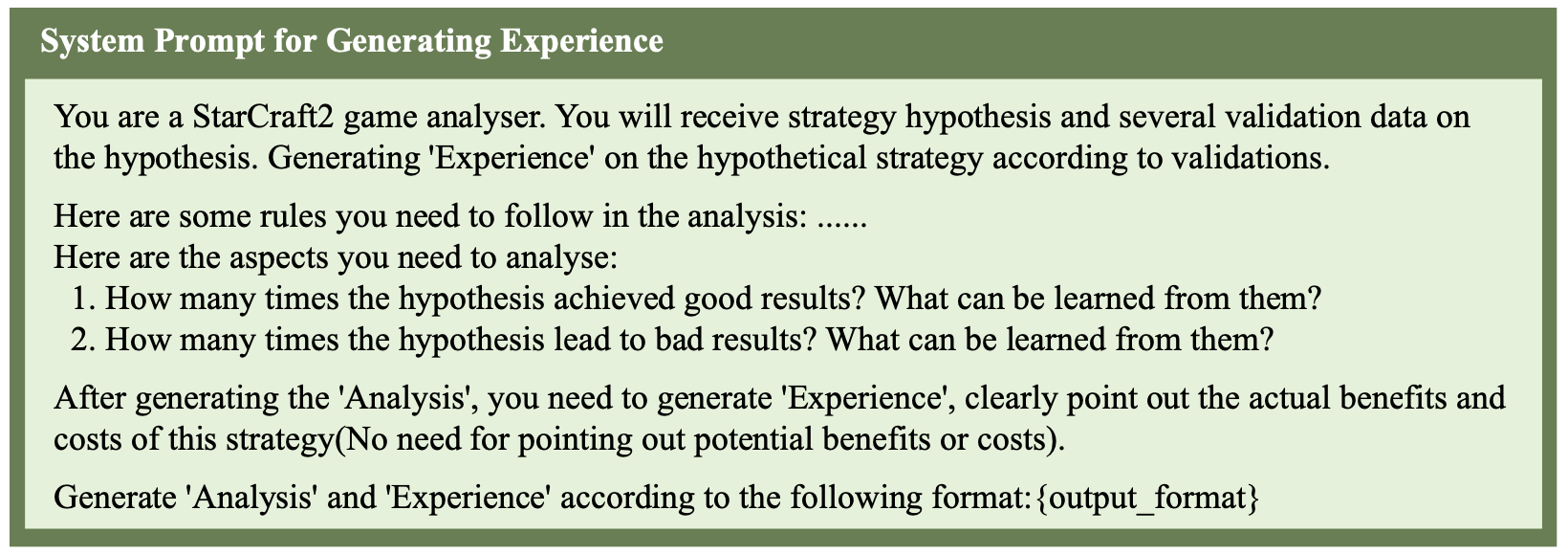

- Validation: Assessing the effectiveness of these strategies through repeated trials.

- Experience generalization: Extracting generalized knowledge from successful validations to inform future decisions.

The process is iterative: the LLM proposes strategies, evaluates their outcomes, and gradually forms experience that it reuses in later decisions.
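To make the cycle concrete, here is a minimal Python sketch of the hypothesize, validate, and generalize loop. It is not the authors' implementation: `propose`, `evaluate`, and `distill` are hypothetical callables standing in for LLM calls and environment feedback, and `trials_per_hypothesis` is an assumed parameter.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Memory:
    hypotheses: List[str] = field(default_factory=list)
    validations: List[Tuple[str, bool]] = field(default_factory=list)
    experiences: List[str] = field(default_factory=list)


def ral_loop(
    propose: Callable[[List[str]], str],  # hypothetical: LLM call that suggests a strategy
    evaluate: Callable[[str], bool],  # hypothetical: runs one trial, True on success
    distill: Callable[[List[Tuple[str, bool]]], Optional[str]],  # hypothetical: LLM call that summarizes a lesson
    steps: int,
    trials_per_hypothesis: int = 3,  # assumed number of repeated trials
) -> Memory:
    memory = Memory()
    for _ in range(steps):
        # 1. Hypothesis generation, conditioned on the experience gathered so far.
        hypothesis = propose(memory.experiences)
        memory.hypotheses.append(hypothesis)
        # 2. Validation: test the same hypothesis over repeated trials.
        results = [(hypothesis, evaluate(hypothesis)) for _ in range(trials_per_hypothesis)]
        memory.validations.extend(results)
        # 3. Experience generalization: store a lesson only if distill returns one.
        lesson = distill(results)
        if lesson is not None and lesson not in memory.experiences:
            memory.experiences.append(lesson)
    # No model weights change anywhere: all "learning" lives in this memory object.
    return memory
```

With real LLM calls, `propose` would typically receive the stored experiences as part of its prompt, which is how earlier lessons shape later hypotheses.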

Mechanisms of RAL in Detail

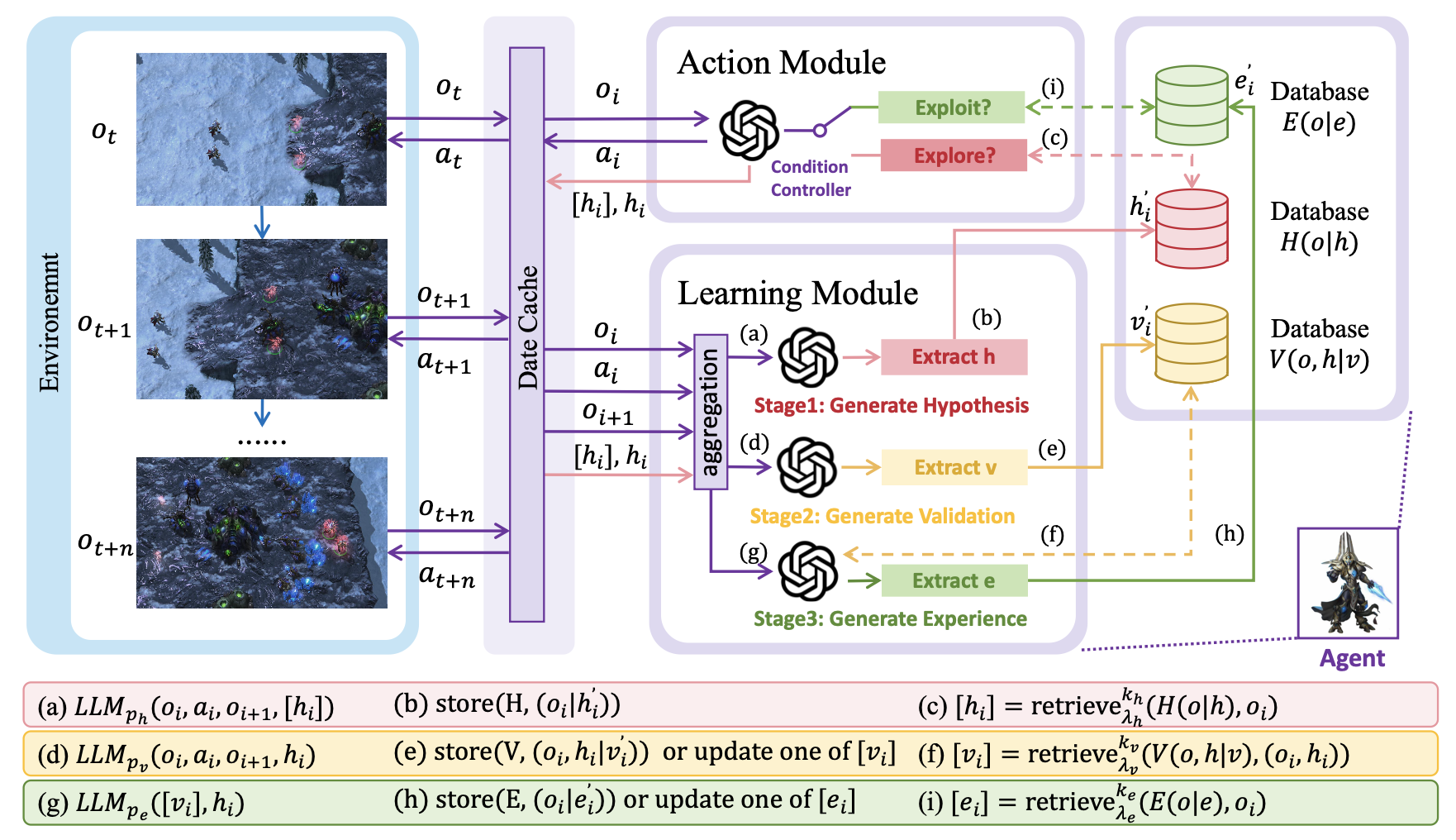

RAL systematically organizes knowledge into three structured databases:

| Database | Role | Example |
|---|---|---|
| H | Hypotheses about possible strategies | “Attack with small groups to minimize losses” |
| V | Validations that test hypotheses in similar contexts | “Small-group attacks succeeded 70% of the time against weaker defenses” |
| E | Experiences distilled from multiple validations | “Use small-group attacks only against weaker defenses” |
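One way to picture the three databases is as lists of entries retrieved by similarity to the current situation. The sketch below assumes a simple word-overlap (Jaccard) similarity for illustration; the `Entry` and `RALMemory` names and the `min_score` cutoff are hypothetical, and a production system would more likely use embedding search.

```python
from dataclasses import dataclass, field
from typing import List, Set


def tokens(text: str) -> Set[str]:
    return set(text.lower().split())


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity in [0, 1]; a stand-in for embedding similarity."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


@dataclass
class Entry:
    context: str  # situation in which the entry was recorded
    text: str  # the hypothesis, validation result, or distilled experience


@dataclass
class RALMemory:
    H: List[Entry] = field(default_factory=list)  # hypotheses
    V: List[Entry] = field(default_factory=list)  # validations
    E: List[Entry] = field(default_factory=list)  # experiences

    def retrieve(self, store: str, context: str, k: int = 3, min_score: float = 0.1) -> List[Entry]:
        """Return up to k entries from store "H", "V", or "E", most similar first."""
        if store not in ("H", "V", "E"):
            raise ValueError(f"unknown store: {store!r}")
        scored = [(jaccard(context, e.context), e) for e in getattr(self, store)]
        relevant = [(s, e) for s, e in scored if s >= min_score]
        relevant.sort(key=lambda pair: pair[0], reverse=True)
        return [e for _, e in relevant[:k]]


# Populate the stores with the examples from the table above.
memory = RALMemory()
memory.H.append(Entry("enemy has weak defenses", "Attack with small groups to minimize losses"))
memory.V.append(Entry("enemy has weak defenses", "Small-group attacks succeeded 70% of the time against weaker defenses"))
memory.E.append(Entry("enemy has weak defenses", "Use small-group attacks only against weaker defenses"))
hits = memory.retrieve("E", "enemy defenses look weak")
```

Keeping the three stores separate means the system can ask different questions of each, for example retrieving only distilled experiences when it wants to act and only hypotheses when it wants to explore.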

At each time step, the system retrieves relevant past hypotheses and experiences, then either explores a new strategy or applies learned insights to guide action:

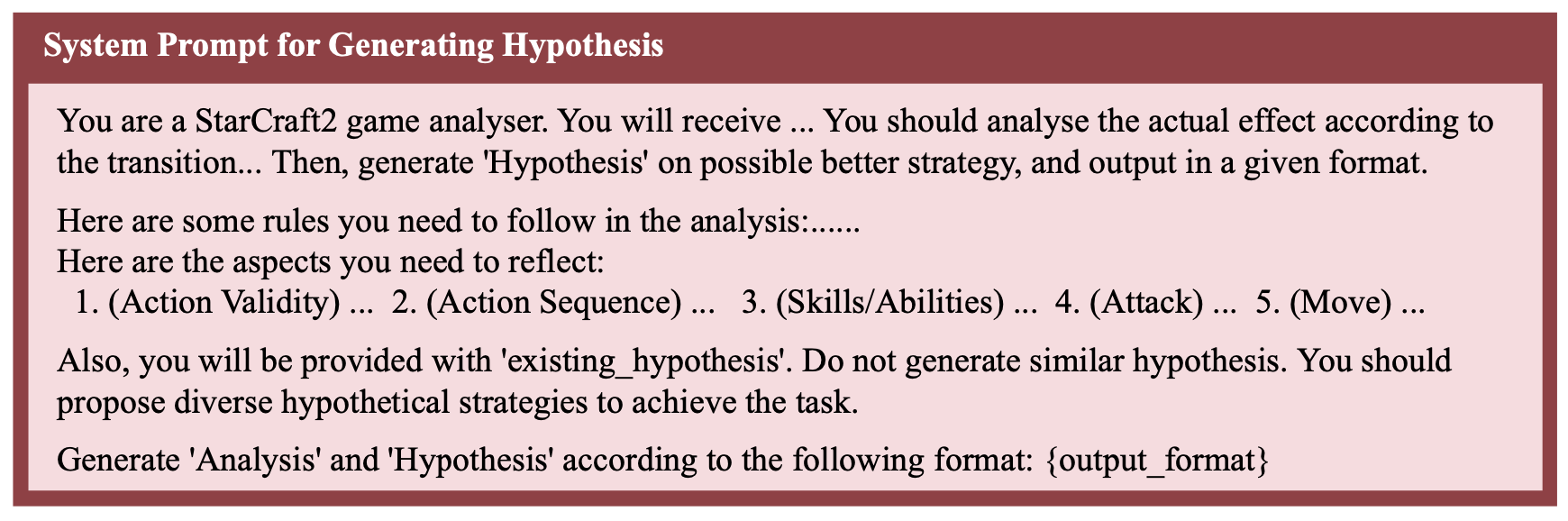

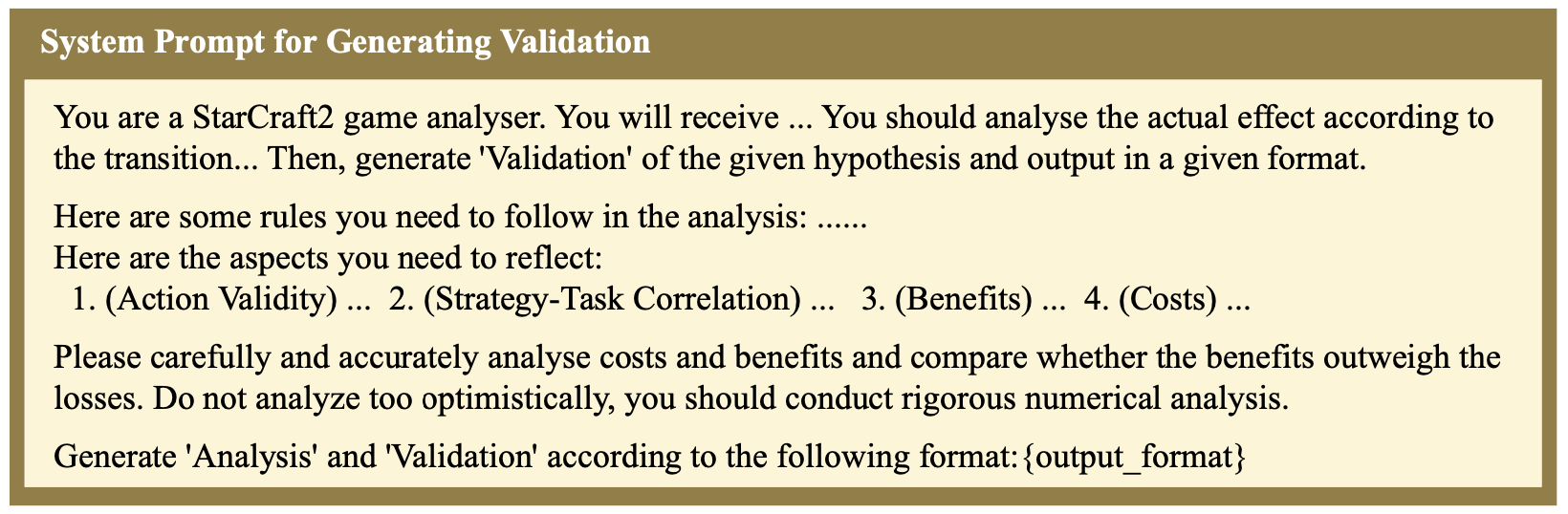
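The retrieve-then-decide step can be reduced to a small rule. In this hedged sketch, the relevance threshold and the `choose_mode` function are assumptions rather than the paper's exact criterion: if a retrieved experience is relevant enough, the model applies it; otherwise it explores a new hypothesis.

```python
from typing import List, Optional, Tuple


def choose_mode(
    scored_experiences: List[Tuple[float, str]],
    threshold: float = 0.5,  # assumed relevance cutoff
) -> Tuple[str, Optional[str]]:
    """Decide whether to apply a learned experience or explore a new hypothesis.

    scored_experiences holds (relevance, experience_text) pairs returned by retrieval.
    """
    if scored_experiences:
        best_score, best_lesson = max(scored_experiences, key=lambda pair: pair[0])
        if best_score >= threshold:
            return "apply", best_lesson  # exploit: inject the lesson into the action prompt
    return "explore", None  # no relevant experience: generate and test a new hypothesis


decision = choose_mode([(0.6, "Use small-group attacks only against weaker defenses"), (0.2, "Retreat at night")])
```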

These decisions are mediated through modular prompts, with a dedicated template for each stage: hypothesis generation, validation, and experience generalization.
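As an illustration, a hypothetical set of stage-specific prompts might look like the following. The wording, the placeholder names, and the `render` helper are assumptions for this sketch, not the prompts used by Li et al.

```python
# Hypothetical stage templates: illustrative wording, not the prompts from the paper.
TEMPLATES = {
    "hypothesize": (
        "Situation: {context}\n"
        "Relevant past experiences:\n{experiences}\n"
        "Propose one new strategy worth testing and state the outcome you expect."
    ),
    "validate": (
        "Strategy under test: {hypothesis}\n"
        "Outcomes observed over {n_trials} trials:\n{outcomes}\n"
        "Did the strategy achieve its goal? Answer SUCCESS or FAILURE with one sentence of reasoning."
    ),
    "generalize": (
        "Validated results:\n{validations}\n"
        "State one general rule, including the conditions under which it applies."
    ),
}


def render(stage: str, **fields: object) -> str:
    """Fill a stage template; raises KeyError if a placeholder is missing."""
    return TEMPLATES[stage].format(**fields)


prompt = render(
    "validate",
    hypothesis="Attack with small groups to minimize losses",
    n_trials=3,
    outcomes="win\nwin\nloss",
)
```

Keeping one template per stage makes each step independently testable and lets the retrieved hypotheses or experiences be slotted into the matching placeholder.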

Comparison to Traditional Methods

RAL differs substantially from other methods:

| Feature | RAL | Fine-Tuning | Reflection-based Prompting |
|---|---|---|---|
| Parameter Updates | No | Yes | No |
| Learning Speed | Fast, real-time improvement | Slow, training-intensive | Moderate, depends on reflection prompt |
| Generalization | Strong through distilled experiences | Moderate, depends on training data | Limited, based on reflection capability |
| Computational Cost | Low | High | Moderate |

Strengths and Limitations of RAL

One major strength of RAL is that it allows LLMs to become increasingly competent without undergoing computationally expensive retraining. Its structured retrieval mechanism makes it especially useful for edge cases and unseen scenarios, because lessons learned in similar contexts can be retrieved and reused. This makes RAL well suited to dynamic environments where human-in-the-loop supervision or frequent model updates are not feasible.

However, the RAL framework also has limitations. Its learning is bounded by what can be inferred from single-step transitions, making it less suitable for long-horizon planning or complex reasoning chains. Additionally, if the retrieval logic isn’t well managed, the system may rely too heavily on outdated or biased experience, leading to suboptimal behavior over time.
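One common way to reduce reliance on outdated experience, which the paper does not necessarily prescribe, is to decay an entry's retrieval score with age. The sketch assumes an exponential half-life decay, a default `half_life` of 50 steps, and a hypothetical `(text, similarity, created_at_step)` entry format.

```python
from typing import List, Tuple


def recency_weighted_score(similarity: float, age_steps: int, half_life: float = 50.0) -> float:
    """Scale similarity so an entry's weight halves every half_life steps (assumed decay rule)."""
    if age_steps < 0 or half_life <= 0:
        raise ValueError("age_steps must be >= 0 and half_life must be > 0")
    return similarity * 0.5 ** (age_steps / half_life)


def rank_by_recency(entries: List[Tuple[str, float, int]], now: int, half_life: float = 50.0) -> List[str]:
    """entries are hypothetical (text, similarity, created_at_step) tuples; returns texts, best first."""
    scored = [(recency_weighted_score(sim, now - created, half_life), text) for text, sim, created in entries]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored]


ranking = rank_by_recency(
    [("old but similar lesson", 0.9, 0), ("recent, less similar lesson", 0.6, 100)],
    now=100,
)
```

Here the older entry ranks below the recent one despite its higher raw similarity, because two half-lives cut its score from 0.9 to 0.225.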

RAL’s Model Generalization Performance

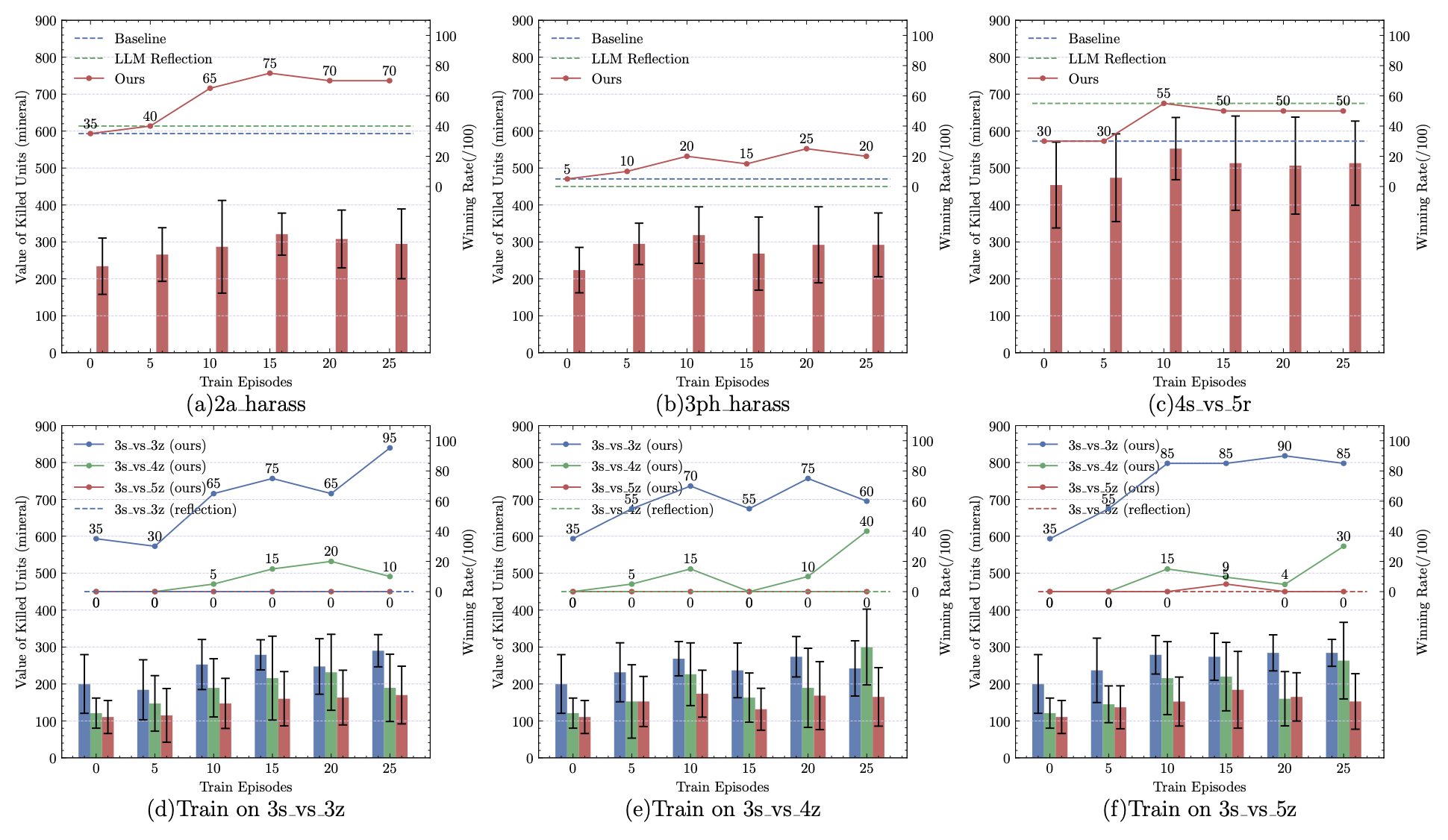

Interestingly, RAL’s benefits appear to transfer across models. Experiments show that while stronger models (like GPT-3.5 or GPT-4o) demonstrate more stable improvements, even smaller models can benefit from structured memory and experience re-use, though they are more prone to hallucination and brittle reasoning.

Detailed Business Use Cases of RAL

1. Enhanced Customer Support

For customer support, RAL enables a static model to continuously improve by learning from real-world interactions. The LLM can generate multiple alternative response styles for a query, then validate them by monitoring user feedback such as satisfaction ratings or issue resolution time. These results are distilled into actionable patterns that the bot retrieves later for similar questions. This setup removes the need for costly retraining while letting the assistant evolve with its customer base.
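The validation and distillation step for a support bot could be as simple as aggregating feedback per response style. This is a sketch under assumed inputs: the `(response_style, rating, resolved)` record format, the 1 to 5 rating scale, and the `min_samples` and `min_rating` thresholds are all hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def distill_support_lessons(
    feedback: Iterable[Tuple[str, int, bool]],  # (response_style, rating 1-5, resolved)
    query_type: str,
    min_samples: int = 20,  # assumed: validations required before generalizing
    min_rating: float = 4.0,  # assumed: average rating that counts as success
) -> List[str]:
    by_style: Dict[str, List[Tuple[int, bool]]] = defaultdict(list)
    for style, rating, resolved in feedback:
        by_style[style].append((rating, resolved))
    lessons = []
    for style, rows in by_style.items():
        if len(rows) < min_samples:
            continue  # not enough validations to generalize yet
        avg = mean(r for r, _ in rows)
        resolved_rate = sum(1 for _, ok in rows if ok) / len(rows)
        if avg >= min_rating:
            lessons.append(
                f"For {query_type} queries, prefer a {style} reply "
                f"(avg rating {avg:.1f}, resolved {resolved_rate:.0%})."
            )
    return lessons


feedback = [("step-by-step", 5, True)] * 20 + [("brief", 3, False)] * 20 + [("empathetic", 5, True)] * 5
lessons = distill_support_lessons(feedback, "password reset")
```

The `empathetic` style is skipped despite perfect ratings because five samples fall below the assumed `min_samples`, which guards against generalizing from too few validations.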

2. Dynamic Marketing Campaigns

In fast-moving markets, product copy and campaign messaging often require updates. RAL allows AI marketing tools to propose variant phrasings, track which ones lead to the most user engagement (click-throughs, conversions), and generate lessons from these observations. Over time, this results in self-adjusting tone and keyword preferences, enabling copywriters or AI tools to remain effective even as audience tastes shift.

3. Intelligent Corporate Knowledge Systems

Companies often struggle with static documentation and changing policy interpretations. RAL-based knowledge assistants can experiment with different explanations for policies, monitor whether employees find the outputs helpful, and retain only those which consistently reduce confusion or improve compliance. This creates a dynamic feedback loop between policy use and interpretation, all without altering the base model.

Implementing RAL Effectively in Firms

To integrate RAL successfully, organizations should first identify tasks that benefit from incremental improvement rather than static accuracy. Use cases like internal support, decision guidance, or user-facing content are ideal. From there, firms must structure data pipelines to collect strategy attempts, feedback outcomes, and extracted patterns, which serve as the H, V, and E components of RAL respectively. Finally, they should keep the retrieval mechanism relevant by curating memory entries regularly and defining clear validation criteria for success.
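Memory curation with explicit validation criteria might look like the sketch below. `ExperienceEntry` is a hypothetical record type, and the minimum trial count, success-rate threshold, and maximum age are placeholder values to tune per use case; the example entries are toy data.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ExperienceEntry:
    text: str
    successes: int
    trials: int
    last_validated: int  # time step of the most recent validation


def curate(
    entries: List[ExperienceEntry],
    now: int,
    min_trials: int = 10,  # assumed: enough evidence to trust the entry
    min_success_rate: float = 0.6,  # assumed validation criterion
    max_age: int = 500,  # assumed: steps since last validation before an entry is stale
) -> Tuple[List[ExperienceEntry], List[ExperienceEntry]]:
    """Split entries into (kept, dropped) according to the validation criteria."""
    kept, dropped = [], []
    for e in entries:
        rate = e.successes / e.trials if e.trials else 0.0
        stale = now - e.last_validated > max_age
        if e.trials >= min_trials and rate >= min_success_rate and not stale:
            kept.append(e)
        else:
            dropped.append(e)
    return kept, dropped


entries = [
    ExperienceEntry("Use small-group attacks only against weaker defenses", 7, 10, 900),
    ExperienceEntry("Always attack at full strength", 3, 10, 950),
    ExperienceEntry("Retreat when outnumbered", 9, 10, 100),
]
kept, dropped = curate(entries, now=1000)
```

Dropped entries need not be deleted outright; a firm could route them back into validation so that a stale but once-useful lesson gets re-tested.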

Conclusion

RAL offers businesses a powerful method to leverage existing LLMs with minimal additional cost, enabling real-time adaptability and improved decision-making without extensive retraining.

¹ Zongyuan Li, Pengfei Li, Runnan Qi, Yanan Ni, Lumin Jiang, Hui Wu, Xuebo Zhang, Kuihua Huang, Xian Guo. (2025). Retrieval Augmented Learning: A Retrial-based Large Language Model Self-Supervised Learning and Autonomous Knowledge Generation. arXiv:2505.01073. https://arxiv.org/abs/2505.01073