What if your business processes could think like your most experienced employee—recalling similar past cases, adapting on the fly, and explaining every decision? Welcome to the world of CBR-augmented LLMs: where Large Language Models meet Case-Based Reasoning to bring Business Process Automation (BPA) to a new cognitive level.

From Black Box to Playbook

Traditional LLM agents often act like black boxes: smart, fast, but hard to explain. Meanwhile, legacy automation tools follow strict, rule-based scripts that struggle when exceptions pop up.

Enter CBR (Case-Based Reasoning): a memory-inspired framework where past problem-solution pairs—cases—are stored, retrieved, and adapted to solve new problems. When integrated with LLMs, this creates an agent that not only understands language but also learns from experience.

Why Business Processes Are Case Goldmines

Business processes—from customer service to compliance workflows—are naturally case-driven:

- “This complaint looks like what we saw with Client X.”

- “Last time someone ordered this combo, we offered a custom bundle.”

By encoding these into a case library, a CBR-LLM agent can:

- Retrieve precedents when a similar situation arises

- Explain decisions by showing why this case applies

- Adapt solutions using LLM reasoning

- Learn over time by storing new cases with outcomes

How CBR-LLMs Work

A typical CBR-LLM-powered automation system includes:

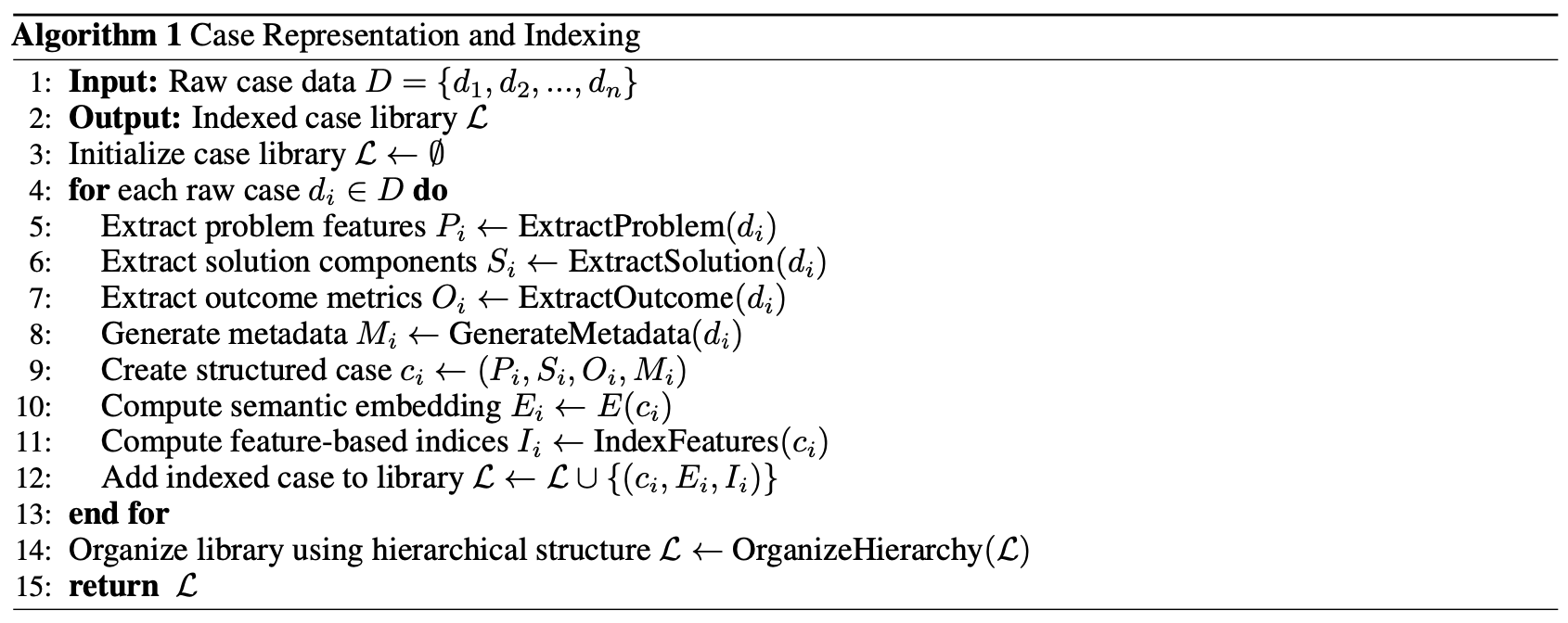

1. Case Representation

Each case consists of structured components:

| Case Component | Example 1 | Example 2 |
|---|---|---|
| Problem | Late delivery complaint from Region A | Unexpected charge on corporate invoice |
| Solution | Expedite next delivery + 10% refund | Issue credit note + email explanation |
| Outcome | Customer satisfaction score: 9.2/10 | Resolved with no escalation |
| Metadata | Timestamp, client tier, agent name | Case handled by team lead in Q4 |

These features can be defined by humans (for structured retrieval) or encoded by LLMs into semantic embeddings for flexible matching.
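A minimal sketch of how the components in the table might be held in code, assuming Python dataclasses. The `Case` class, its field names, and the structured `features` attached to each example are hypothetical choices for illustration, not a schema defined in the review paper.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Case:
    """One entry in a case library; field names are illustrative, not a standard schema."""
    problem: str     # free-text description of the situation
    solution: str    # what was done about it
    outcome: str     # observed result (score, escalation status, ...)
    features: dict[str, str] = field(default_factory=dict)   # human-defined fields for structured retrieval
    metadata: dict[str, str] = field(default_factory=dict)   # provenance: who, when, client tier, ...
    embedding: Optional[list[float]] = None                  # filled in later by an embedding model, if used


# The two examples from the table, with hypothetical structured features added.
library = [
    Case(
        problem="Late delivery complaint from Region A",
        solution="Expedite next delivery + 10% refund",
        outcome="Customer satisfaction score: 9.2/10",
        features={"region": "A", "complaint_type": "late_delivery"},
    ),
    Case(
        problem="Unexpected charge on corporate invoice",
        solution="Issue credit note + email explanation",
        outcome="Resolved with no escalation",
        features={"complaint_type": "billing", "account": "corporate"},
        metadata={"handled_by": "team lead", "quarter": "Q4"},
    ),
]
```

Keeping the human-defined `features` and the optional `embedding` side by side lets the same record serve both structured and semantic retrieval.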

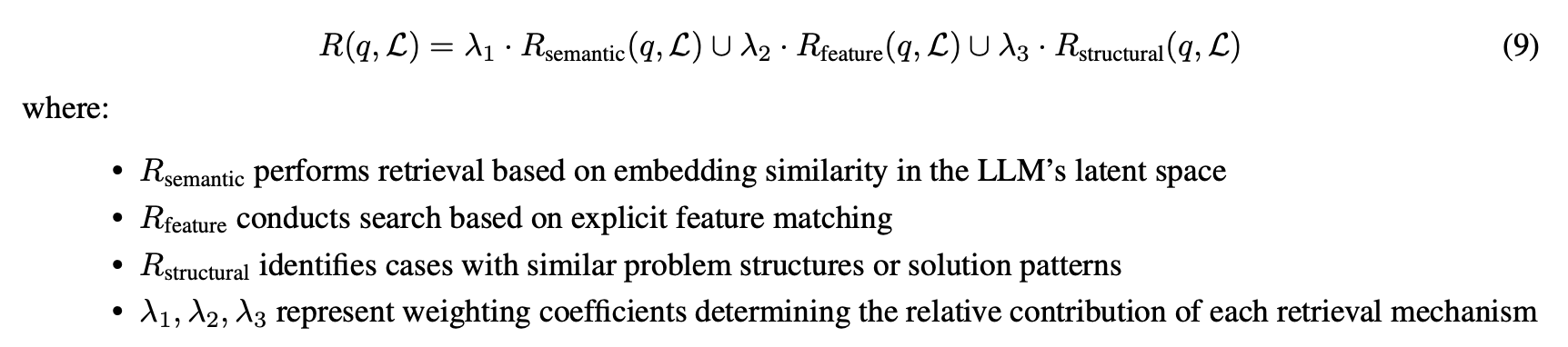

2. Hybrid Similarity Retrieval

The agent uses both:

- Feature-based similarity: comparing structured fields (e.g., region, complaint type)

- Semantic similarity: comparing LLM-generated embeddings of the problem description

The weights (λ₁, λ₂, λ₃) that combine these similarity terms into a single retrieval score can be tuned manually, learned through feedback loops, or adjusted dynamically depending on task sensitivity or model confidence.
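A toy sketch of hybrid retrieval as a weighted sum of the two similarity types listed above. It assumes feature similarity is the fraction of shared fields with matching values and semantic similarity is cosine similarity; the three-dimensional vectors are hand-made stand-ins for the output of a real embedding model, and the `weights` tuple plays the role of the λ weights for these two terms.

```python
import math


def feature_similarity(a: dict[str, str], b: dict[str, str]) -> float:
    """Fraction of shared structured fields whose values match (0.0 if none are shared)."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    return sum(a[k] == b[k] for k in shared) / len(shared)


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine similarity of two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0


def hybrid_score(query: dict, case: dict, weights=(0.5, 0.5)) -> float:
    """Weighted sum of feature-based and semantic similarity."""
    w_feat, w_sem = weights
    return (w_feat * feature_similarity(query["features"], case["features"])
            + w_sem * cosine_similarity(query["embedding"], case["embedding"]))


def retrieve(query: dict, library: list[dict], k: int = 1, weights=(0.5, 0.5)) -> list[dict]:
    """Return the k cases with the highest hybrid score."""
    return sorted(library, key=lambda c: hybrid_score(query, c, weights), reverse=True)[:k]


library = [
    {"id": "late-delivery-A", "features": {"region": "A", "complaint_type": "late_delivery"},
     "embedding": [0.9, 0.1, 0.0]},
    {"id": "invoice-charge", "features": {"region": "A", "complaint_type": "billing"},
     "embedding": [0.0, 0.2, 0.9]},
]
query = {"features": {"region": "B", "complaint_type": "late_delivery"},
         "embedding": [0.8, 0.3, 0.1]}

print([c["id"] for c in retrieve(query, library, k=2)])  # ['late-delivery-A', 'invoice-charge']
```

The new complaint comes from a different region, so the feature score alone is only 0.5, but the semantic term still ranks the late-delivery precedent first. Shifting weight toward `w_feat` makes retrieval stricter about exact field matches.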

3. LLM-Powered Adaptation

This is where CBR-LLMs shine. Instead of copying and pasting past solutions, the LLM uses retrieved cases as context to reason, modify, and generate new responses.

Example:

- Retrieved Case: “Customer in Region A complained about late shipment. Solution: expedite next delivery and give 10% discount.”

- New Problem: “Customer in Region B got their item one day late, but it’s a subscription service.”

- Adapted Response: “We’ll extend their subscription by a week and notify them with a personalized message.”

The LLM’s role includes:

- Selecting relevant solution fragments

- Transforming them based on context differences

- Composing new responses using domain tone and policy constraints
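One way to hand the retrieved cases to the LLM is to assemble them into a single adaptation prompt. The sketch below only builds the prompt text; the function name, the prompt wording, and the policy constraint in the usage example are hypothetical, and the actual model call is left to whichever LLM client you use.

```python
def build_adaptation_prompt(new_problem: str, retrieved: list[dict], constraints: list[str]) -> str:
    """Assemble a prompt asking an LLM to adapt precedents to a new problem."""
    lines = [
        "You are resolving a customer case. Use the precedents below as guidance,",
        "but adapt them to the differences in the new situation.",
        "",
        "Precedents:",
    ]
    for i, case in enumerate(retrieved, start=1):
        lines.append(f"{i}. Problem: {case['problem']}")
        lines.append(f"   Solution: {case['solution']}")
    lines += ["", "Policy constraints:"]
    lines += [f"- {c}" for c in constraints]
    lines += ["", f"New problem: {new_problem}",
              "Propose an adapted solution and state which precedent(s) you relied on."]
    return "\n".join(lines)


prompt = build_adaptation_prompt(
    new_problem="Customer in Region B got their item one day late, but it's a subscription service.",
    retrieved=[{"problem": "Customer in Region A complained about late shipment.",
                "solution": "Expedite next delivery and give 10% discount."}],
    constraints=["Compensation must suit the product type."],  # hypothetical policy rule
)
print(prompt)
```

Asking the model to name the precedent it used keeps the explanation trail that makes CBR outputs auditable.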

4. Case Retention and Learning

The system stores only valuable new cases. A new case is retained if it meets utility criteria:

- Novel: The case introduces a previously unseen combination of features.

- Effective: The solution achieved high outcome scores (e.g., satisfaction, task success).

- Generalizable: The case is relevant across multiple contexts, not just a one-off.

Formally, this is guided by a utility score that balances novelty, effectiveness, and applicability. Cases below threshold δ are not retained.
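The exact utility formula is not spelled out here, so the following is only a sketch under stated assumptions: utility is an equally weighted sum U = w_n·novelty + w_e·effectiveness + w_g·generality, novelty is one minus the best feature overlap with any stored case, all inputs lie in [0, 1], and δ = 0.6. The weights, threshold, and feature values are illustrative choices.

```python
def feature_overlap(a: dict[str, str], b: dict[str, str]) -> float:
    """Fraction of shared fields with equal values (0.0 when no fields are shared)."""
    shared = set(a) & set(b)
    return sum(a[k] == b[k] for k in shared) / len(shared) if shared else 0.0


def novelty(candidate: dict[str, str], library: list[dict[str, str]]) -> float:
    """1 minus the closest match in the library; an empty library makes everything novel."""
    return 1.0 - max((feature_overlap(candidate, c) for c in library), default=0.0)


def should_retain(nov: float, effectiveness: float, generality: float,
                  weights: tuple[float, float, float] = (1/3, 1/3, 1/3),
                  delta: float = 0.6) -> bool:
    """Keep the case only if its weighted utility reaches the threshold delta.

    All three inputs are assumed to be normalized to [0, 1].
    """
    w_n, w_e, w_g = weights
    utility = w_n * nov + w_e * effectiveness + w_g * generality
    return utility >= delta


stored = [{"region": "A", "complaint_type": "late_delivery", "product": "physical"}]
candidate = {"region": "B", "complaint_type": "late_delivery", "product": "subscription"}

nov = novelty(candidate, stored)  # 1 - 1/3, since only complaint_type matches
print(should_retain(nov, effectiveness=0.9, generality=0.5))  # True  (utility ≈ 0.69)
print(should_retain(0.0, effectiveness=0.3, generality=0.2))  # False (utility ≈ 0.17)
```

Raising δ keeps the library small and high-signal; lowering it retains more borderline cases at the cost of noisier retrieval.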

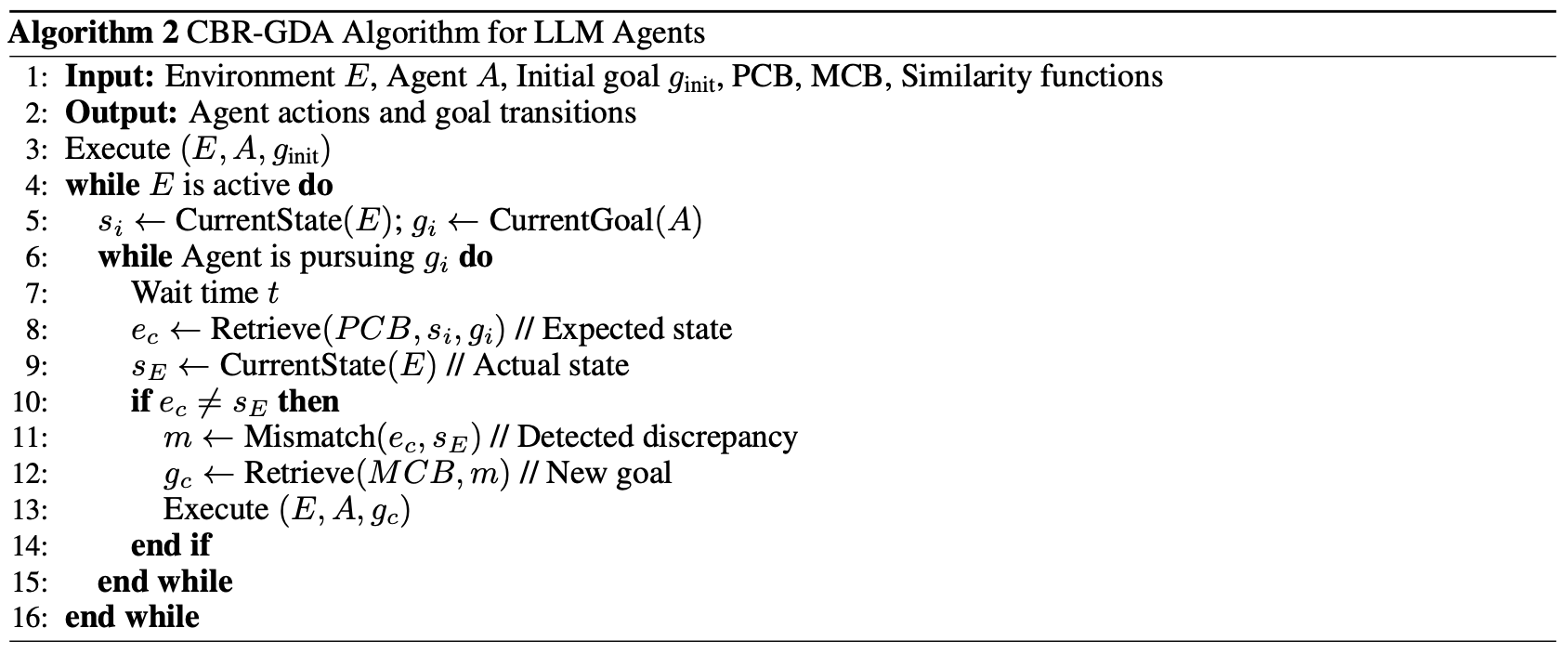

Cognitive Reasoning with Goal-Driven Autonomy (CBR-GDA)

CBR-LLMs grow even stronger when combined with Goal-Driven Autonomy (GDA), forming a hybrid that allows:

- Monitoring expectations vs. reality

- Explaining why outcomes differ from predictions

- Setting and reprioritizing goals on the fly

This enables agents to react to unexpected events, replan when disruptions occur, and keep operations aligned with business intent.
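GDA is usually described as a cycle: detect a discrepancy between expected and observed state, explain it, formulate a new goal, and reprioritize. The toy sketch below assumes expectations are numeric business metrics checked against a relative tolerance, and that explanations come from a small hand-written lookup keyed by metric name. The metric names, goals, and priorities are hypothetical. In a CBR-LLM system, the explanation step would instead retrieve similar past disruptions and could let the LLM adapt them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Goal:
    name: str
    priority: int  # higher means more urgent


# Hypothetical explanation cases: discrepant metric -> (explanation, goal to pursue).
EXPLANATION_CASES = {
    "on_time_rate": ("Carrier delays in the region", Goal("reroute_shipments", priority=9)),
}


def detect_discrepancies(expected: dict[str, float], observed: dict[str, float],
                         tolerance: float = 0.1) -> list[str]:
    """Metrics that are missing or deviate from expectation by more than `tolerance` (relative)."""
    return [k for k, exp in expected.items()
            if k not in observed or abs(observed[k] - exp) > tolerance * abs(exp)]


def gda_step(goals: list[Goal], expected: dict[str, float], observed: dict[str, float]):
    """One monitor -> explain -> formulate -> reprioritize cycle."""
    explanations = []
    for metric in detect_discrepancies(expected, observed):
        if metric in EXPLANATION_CASES:          # explain via a stored precedent
            explanation, new_goal = EXPLANATION_CASES[metric]
            explanations.append(explanation)
            if new_goal not in goals:            # formulate each goal only once
                goals = goals + [new_goal]
    # Goal management: the most urgent goal comes first.
    return sorted(goals, key=lambda g: g.priority, reverse=True), explanations


goals, why = gda_step(
    goals=[Goal("process_orders", priority=5)],
    expected={"on_time_rate": 0.95, "refund_rate": 0.02},
    observed={"on_time_rate": 0.70, "refund_rate": 0.021},
)
print([g.name for g in goals])  # ['reroute_shipments', 'process_orders']
print(why)                      # ['Carrier delays in the region']
```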

Business Benefits

- Explainability: See exactly which case and logic led to a decision

- Auditability: Structured history is ideal for compliance reviews

- Adaptability: Continuously improves from operational experience

- Resilience: Handles edge cases where rules fail

Comparative Analysis: CBR vs. CoT vs. Vanilla RAG

| Dimension | CBR-LLM | Chain-of-Thought (CoT) | Vanilla RAG |
|---|---|---|---|
| Knowledge Use | Experiential | Parametric | Reference-based |
| Reasoning Transparency | High | Medium | Low |
| Adaptation Capacity | High | Limited | Moderate |
| Domain Specificity | Explicit | Implicit | Reference-dependent |
| Learning Mechanism | Case acquisition | Parameter updates | Corpus expansion |
| Cognitive Capability | Rich | Limited | Moderate |
| Goal Reasoning | Dynamic | Static | Static |

Future-Proofing BPA

As business operations grow more complex, dynamic, and data-rich, CBR-LLMs offer a scalable, explainable way to:

- Apply precedents without retraining

- Adapt to exceptions and changing policies

- Grow smarter with every successful (or failed) action

By integrating memory, reasoning, and autonomy, CBR-LLMs redefine what smart automation means.

🧾 Reference:

Review of Case-Based Reasoning for LLM Agents: Theoretical Foundations, Architectural Components, and Cognitive Integration, Kostas Hatalis, Despina Christou, Vyshnavi Kondapalli. arXiv:2504.06943 [cs.AI]. Submitted on 9 Apr 2025.

💡 Cognaptus builds AI systems that combine memory, reasoning, and real-world adaptability. Ready to upgrade your automation IQ?