The CoRAG Deal: RAG Without the Privacy Plot Twist

The tension is growing: organizations want to co-train AI systems to improve performance, but data privacy concerns make collaboration difficult. Medical institutions, financial firms, and government agencies all sit on valuable question-answer (QA) data — but they can’t just upload it to a shared cloud to train a better model.

This is the real challenge holding back Retrieval-Augmented Generation (RAG) from becoming a truly collaborative AI strategy. Not the rise of large context windows. Not LLMs like Gemini 2.5. But the walls between data owners.

The Misconception: Multi-Party Model Training Requires Data Sharing

Many believe that to build a shared retriever-generator model, all participants must upload their labeled QA data. This assumption blocks collaboration in high-stakes industries where privacy is non-negotiable.

Why It’s a Reasonable Assumption

Traditional supervised learning models — including most RAG pipelines — rely on paired inputs and outputs to optimize. If no one shares their labels, how can the model learn jointly?

The Breakthrough: CoRAG

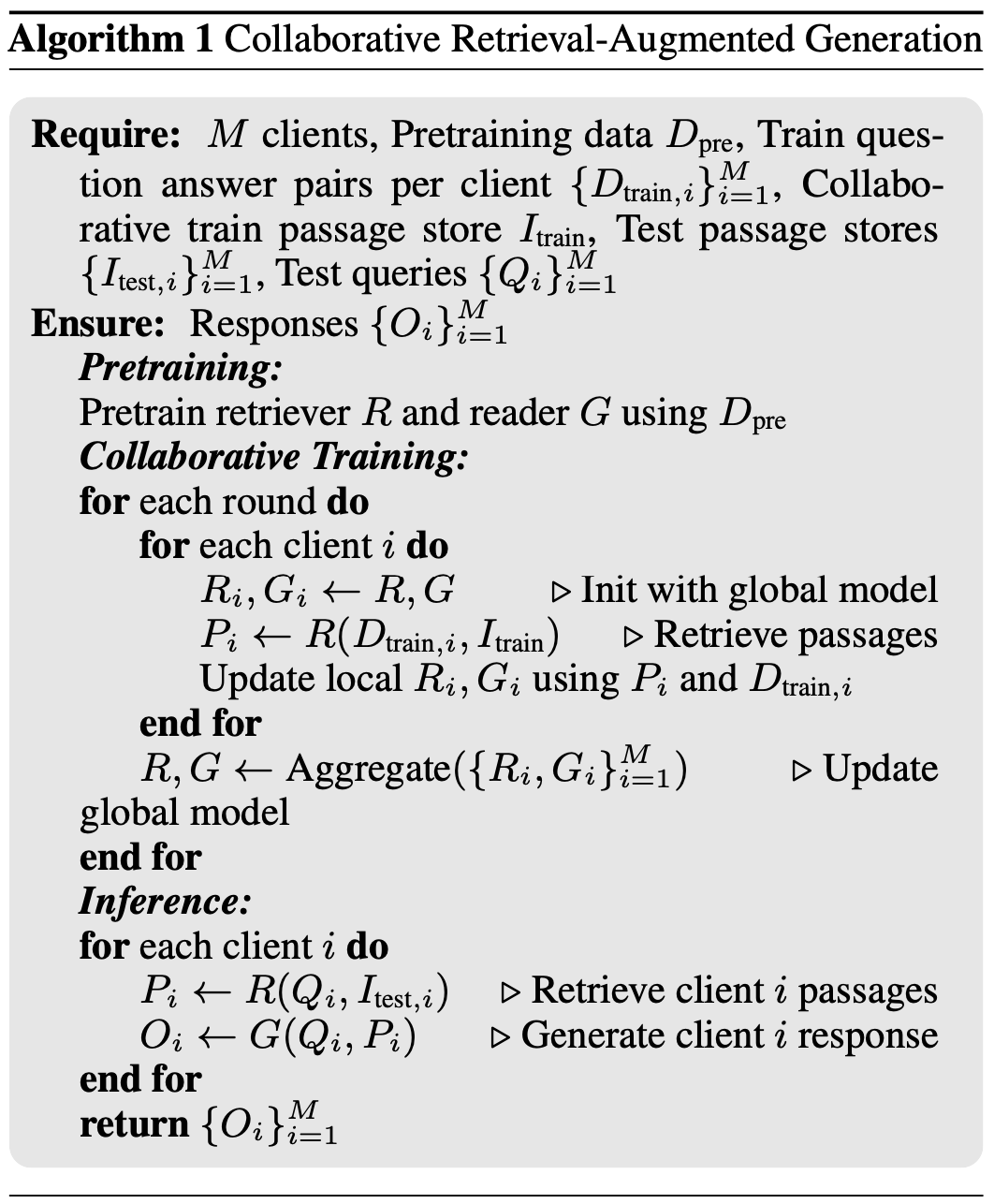

Enter CoRAG — short for Collaborative Retrieval-Augmented Generation (Aashiq Muhamed, Mona Diab, Virginia Smith, 2025). Instead of pooling labeled examples, CoRAG allows each client to:

- Share a passage/document collection (not labeled data)

- Train locally on their own private QA pairs

- Collaboratively update a shared retriever and generator model via federated-style learning

In CoRAG, the central insight is that retrievers trained across pooled documents, guided by private labels, can generalize better — without leaking sensitive data.

This diagram shows how CoRAG’s shared passage store and local label-based training interact in a federated loop.

Suggested placement: directly after the paragraph above for maximum clarity, as it visualizes the entire system architecture.

That said, CoRAG isn’t perfect. Potential drawbacks include:

- Risk of model leakage through update gradients

- Uneven contribution from clients with imbalanced data

- Dependency on high-quality, shareable passage sets

However, these limitations are manageable:

- Techniques like differential privacy or gradient masking can reduce leakage.

- Clients benefit from the model even with minimal input.

- The shared passage store doesn’t require sensitive labels — only textual knowledge.

The result: privacy-preserving performance gains that would otherwise be impossible under strict data isolation.

In benchmark experiments (CRAB dataset), CoRAG outperformed both local-only and naive federated training models. It even showed that mixing in “irrelevant” passages helped generalization, while “hard negatives” hurt — an insight only possible through large-scale collaboration.

From Teams to Codebases: Where RAG Still Shines

What CoRAG achieves for multi-party QA, another RAG adaptation accomplishes in a totally different arena: software testing. While CoRAG manages distributed privacy, this approach handles distributed context — namely, sprawling, structured codebases.

A recent paper — From Code Generation to Software Testing: AI Copilot with Context-Based RAG (Yuchen Wang, Shangxin Guo, Chee Wei Tan, 2025, arXiv:2504.01866) — shows how RAG can guide an LLM to:

- Detect bugs

- Propose code fixes

- Generate test cases

This is done by modeling the codebase as a graph of contextual nodes — functions, bug reports, test results — and retrieving the most relevant context for the LLM to act upon. It’s another form of smart filtering: not for privacy this time, but for scale and structure.

📊 Table: Impact of Context-Based RAG in Software Testing

| Metric | Baseline Model | With Context-Based RAG |

|---|---|---|

| Bug Detection Accuracy | — | +31.2% |

| Critical Test Coverage | — | +12.6% |

| Developer Acceptance Rate | — | +10.5% |

The connection is clear: whether split by data ownership or code complexity, RAG enables localized reasoning with shared intelligence.

RAG Is Evolving — Not Disappearing

Gemini 2.5 and other mega-context LLMs are powerful, but they don’t solve every problem. In settings where:

- Data is siloed

- Context is structured or relational

- Efficiency or explainability is critical

…RAG — and especially collaborative frameworks like CoRAG — offer a compelling alternative. Retrieval is no longer just a way to cut costs. It’s a way to align AI with real-world constraints.

Written by Cognaptus Insights — where enterprise intelligence meets real-world AI.

References

-

Aashiq Muhamed, Mona Diab, Virginia Smith. CoRAG: Collaborative Retrieval-Augmented Generation. arXiv:2504.01883 [cs.AI], 2025. https://doi.org/10.48550/arXiv.2504.01883

-

Yuchen Wang, Shangxin Guo, Chee Wei Tan. From Code Generation to Software Testing: AI Copilot with Context-Based RAG. arXiv:2504.01866 [cs.SE], accepted by IEEE Software. https://doi.org/10.1109/MS.2025.3549628, https://doi.org/10.48550/arXiv.2504.01866