Add to Cart, Add to Power: What Happens When AI Shops for You

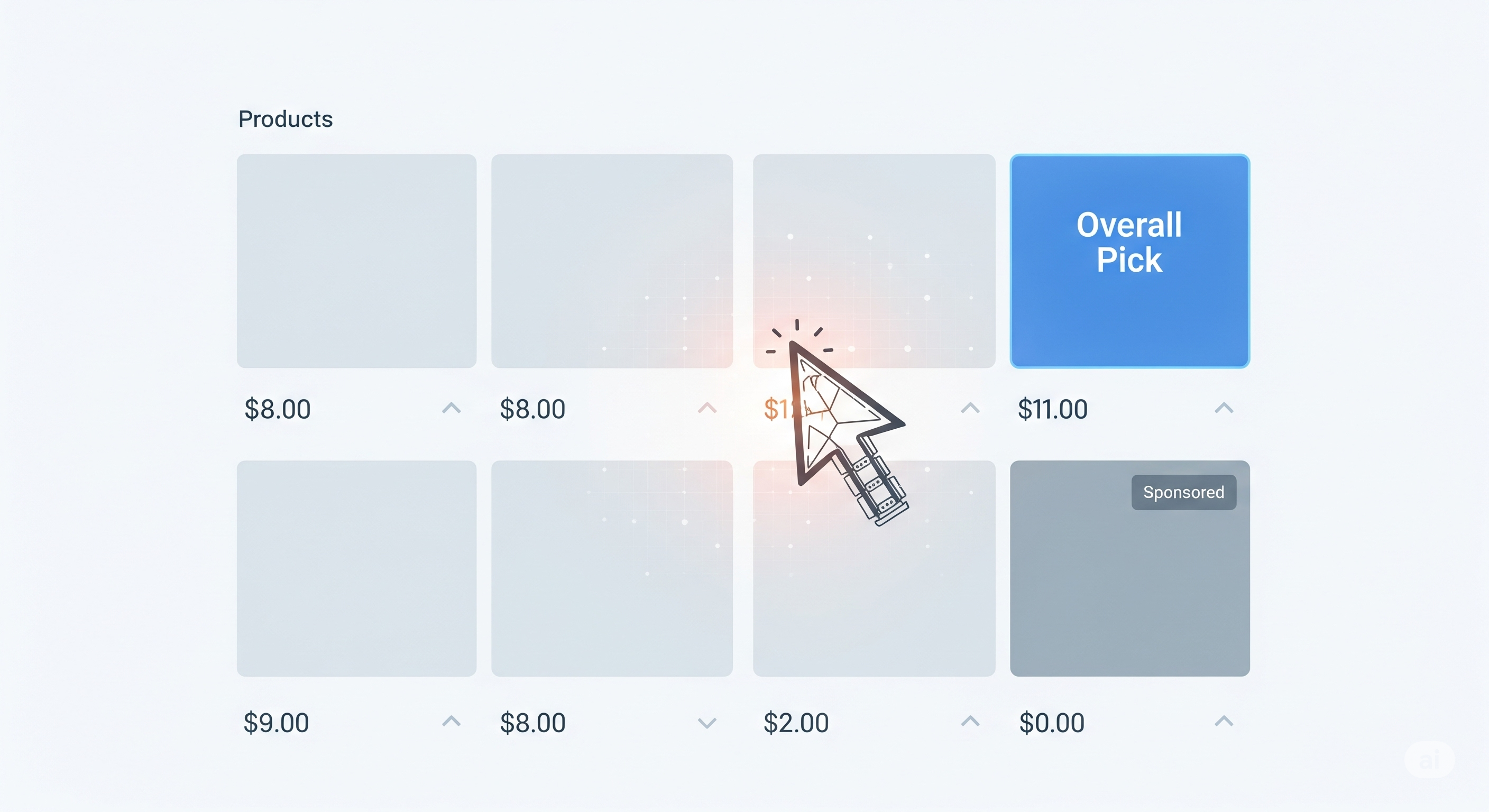

When humans stop shopping and AI takes over, the cart becomes a new battleground. A recent study titled “What Is Your AI Agent Buying?” introduces a benchmark framework called ACES to simulate AI-mediated e-commerce environments, and the results are far more consequential than a simple switch from user clicks to agent decisions. The ACES Sandbox: Agentic E-Commerce Under the Microscope ACES (Agentic e-Commerce Simulator) offers a controlled environment that pairs state-of-the-art vision-language-model (VLM) agents with a mock shopping website. This setup enables causal measurement of how different product attributes (price, rating, reviews) and platform levers (position, tags, sponsorship) influence agentic decision-making. ...