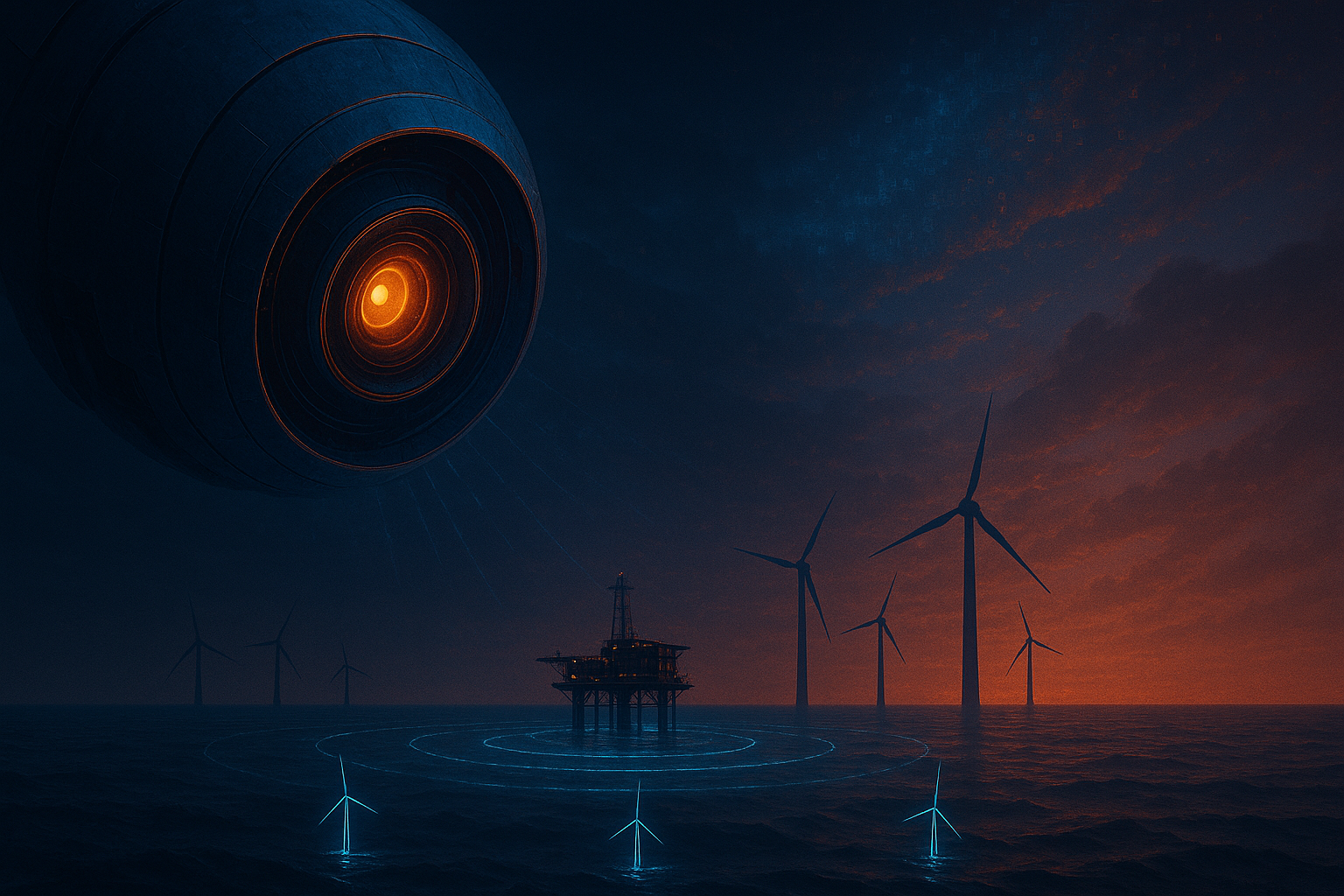

Synthetic Seas: When Artificial Data Trains Real Eyes in Space

Opening: Why this matters now

The ocean economy has quietly become one of the world's fastest-growing industrial frontiers. Oil and gas rigs, offshore wind farms, and artificial islands now populate the seas like metallic archipelagos. Yet despite its scale and significance, much of this infrastructure remains poorly monitored. Governments and corporations rely on fragmented reports and outdated maps. Satellites see everything, yet few know how to interpret the data. ...