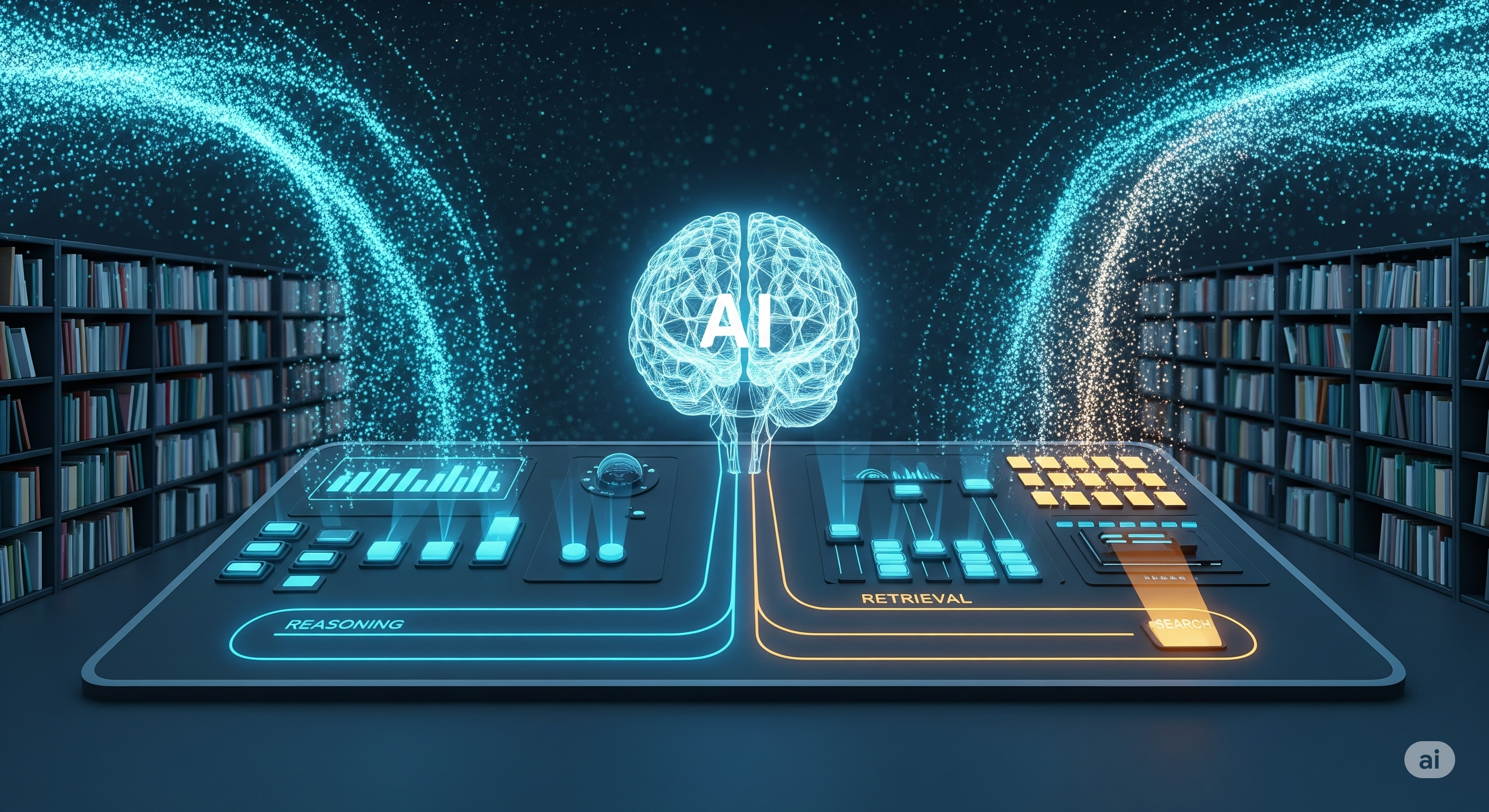

Search When It Hurts: How UR² Teaches Models to Retrieve Only When Needed

Most “smart” RAG stacks are compulsive googlers: they fetch first and think later. UR² (“Unified RAG and Reasoning”) flips that reflex. It trains a model to reason by default and retrieve only when necessary, using reinforcement learning (RL) to coordinate internal knowledge and external evidence.

Why this matters for builders: indiscriminate retrieval is the silent cost center of LLM systems, adding latency, inflating bills, and making answers brittle. UR² shows a way to make retrieval selective, structured, and rewarded, with reported accuracy gains on exam-style benchmarks (MMLU-Pro, MedQA), real-world QA (HotpotQA, Bamboogle, MuSiQue), and even math. ...