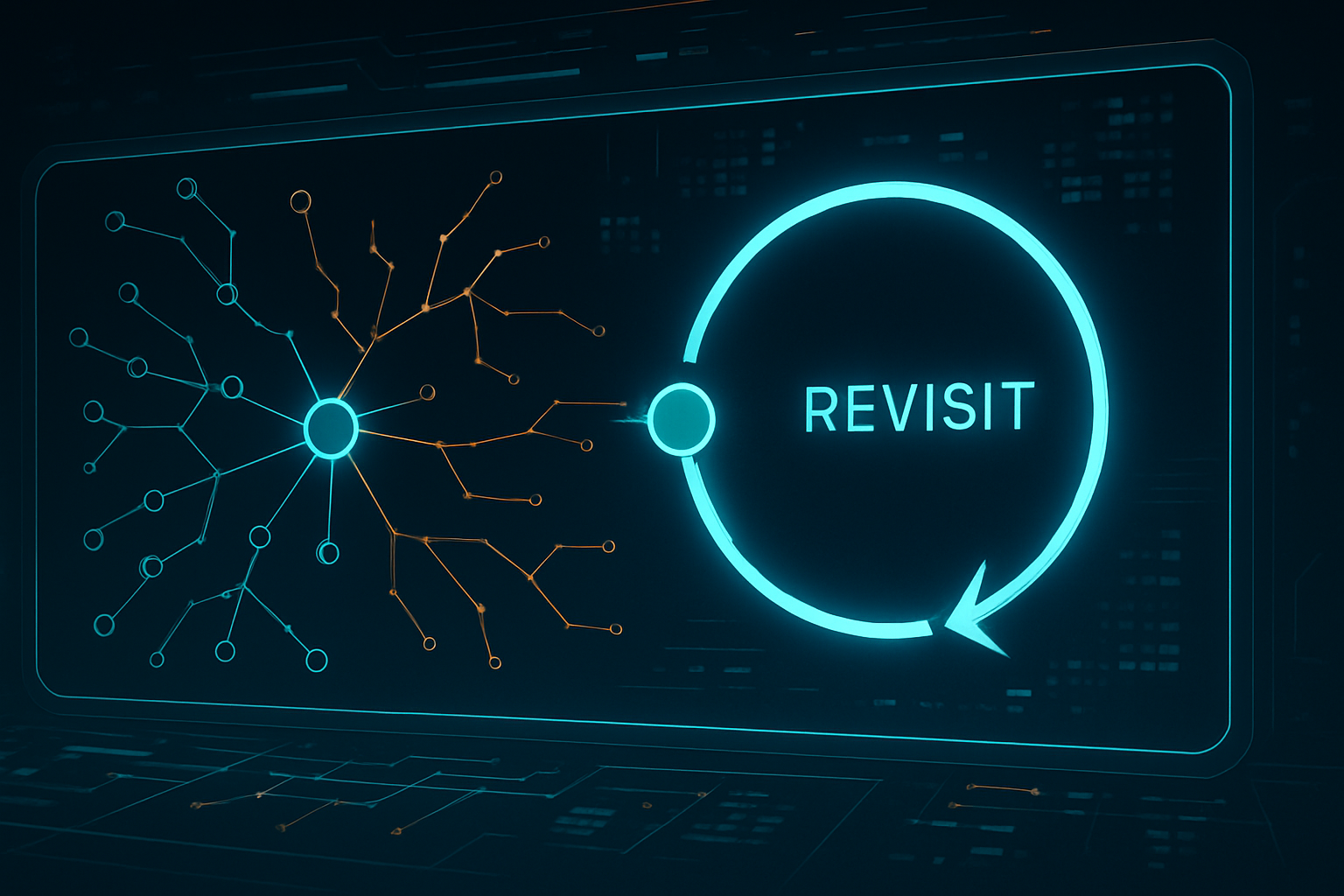

Backtrack to Breakthrough: Why Great AI Agents Revisit

TL;DR: Agentic performance isn’t just about doing more; it’s about going back. On GSM-Agent, a controllable, tool-using version of GSM8K, top models reach only ~65–68% accuracy, and the strongest predictor of success is a high revisit ratio: how often an agent deliberately returns to a previously explored topic with a refined query. That’s actionable for enterprise AI: build agents that (1) recognize incomplete evidence and (2) reopen earlier lines of inquiry, then (3) instrument and reward those revisits. ...
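To make "instrument revisits" concrete, here is a minimal Python sketch of one way a revisit ratio could be computed from an agent trace. It rests on assumptions that are not taken from GSM-Agent: each tool call has already been tagged with a topic label, a revisit is any step that returns to a previously seen topic after at least one step elsewhere, and the ratio is revisits divided by total steps. The function name `revisit_ratio` and the sample trace are hypothetical.

```python
from typing import Optional, Sequence


def revisit_ratio(topics: Sequence[str]) -> float:
    """Fraction of steps that return to an earlier topic after leaving it.

    Assumed definition for illustration only; the benchmark's exact
    formula may differ.
    """
    if not topics:
        return 0.0

    seen: set[str] = set()
    previous: Optional[str] = None
    revisits = 0

    for topic in topics:
        # Staying on the same topic is continued exploration, not a revisit.
        if topic in seen and topic != previous:
            revisits += 1
        seen.add(topic)
        previous = topic

    return revisits / len(topics)


# Hypothetical trace: one topic label per search or tool call.
trace = ["prices", "prices", "shipping", "prices", "tax", "shipping"]
ratio = revisit_ratio(trace)
print(f"{ratio:.2f}")  # 0.33 (2 revisits out of 6 steps)
```

Logging a number like this per episode is one way to see whether an agent ever reopens earlier lines of inquiry. How topics get assigned to queries is left open here and would need its own design.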