When LLMs Invent Languages: Efficiency, Secrecy, and the Limits of Natural Speech

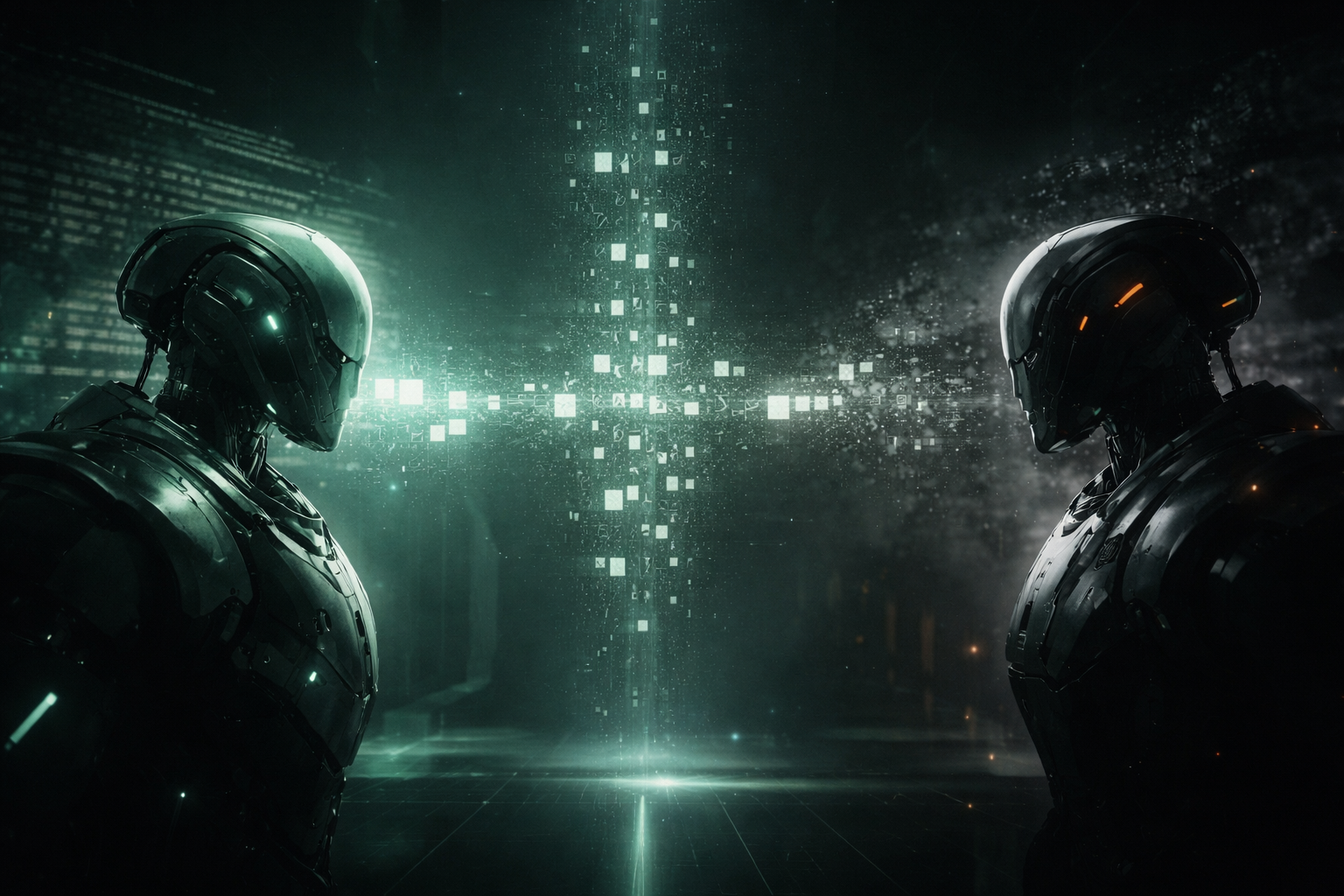

Opening: Why this matters now

Large language models are supposed to speak our language. Yet as they become more capable, something uncomfortable emerges: when pushed to cooperate efficiently, models often abandon natural language altogether. This paper shows that modern vision–language models (VLMs) can spontaneously invent task-specific communication protocols (compressed, opaque, and sometimes deliberately unreadable to outsiders) without any fine-tuning. Just prompts. ...