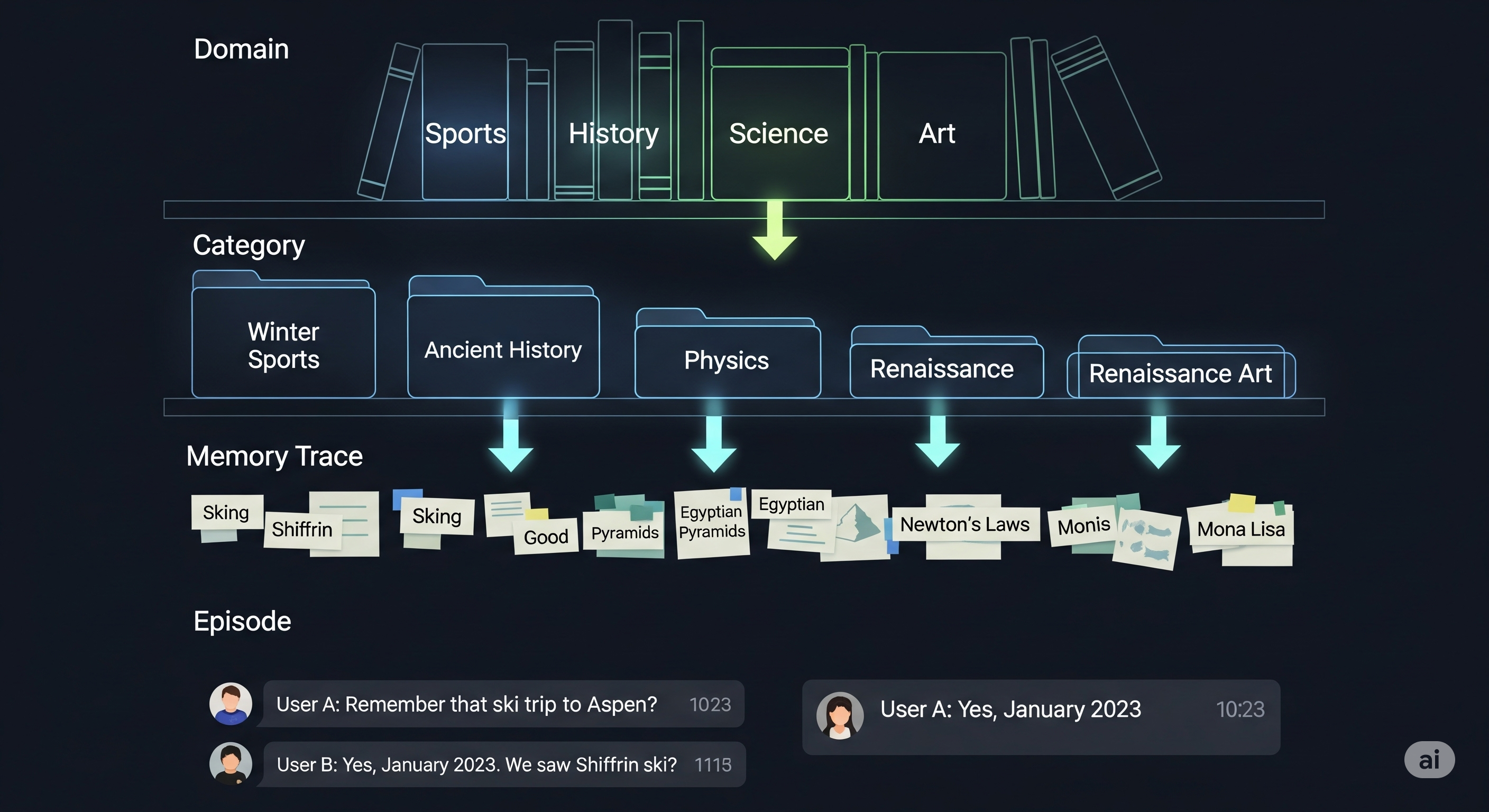

FadeMem: When AI Learns to Forget on Purpose

Opening: Why this matters now

The race to build smarter AI agents has mostly followed one instinct: remember more. Bigger context windows. Larger vector stores. Ever-growing retrieval pipelines. Yet as agents move from demos to long-running systems that handle days or weeks of interaction, this instinct is starting to crack. More memory does not automatically mean better reasoning. In practice, it often means clutter, contradictions, and degraded performance. Humans solved this problem long ago, not by remembering everything, but by forgetting strategically. ...