Layers of Thought: How Hierarchical Memory Supercharges LLM Agent Reasoning

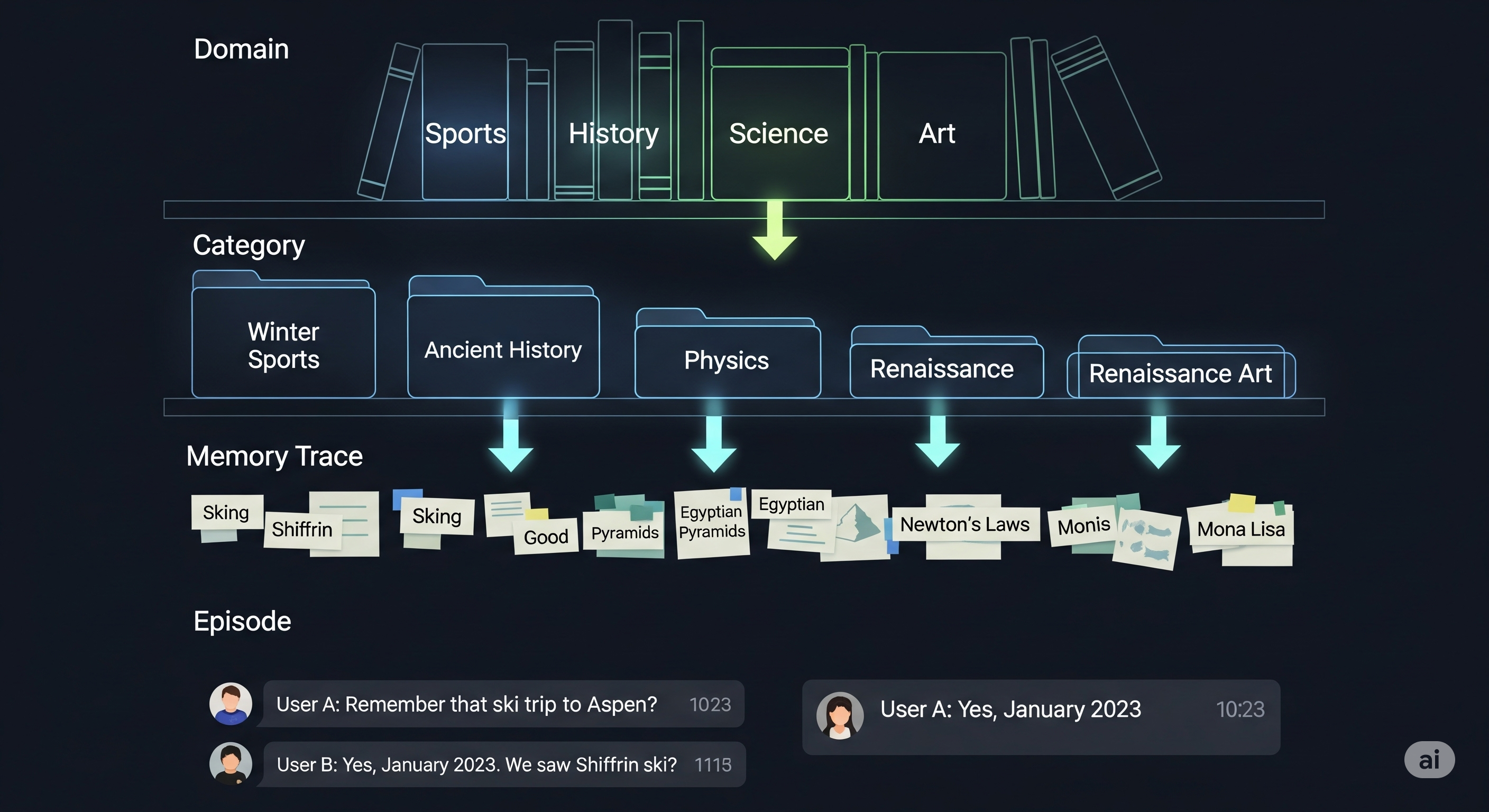

Most LLM agents today think in flat space. When you ask a long-term assistant a question, it either scrolls endlessly through past turns or searches an undifferentiated soup of semantic vectors for something relevant. This works, for now. But as tasks grow longer, more nuanced, and more personal, this flat memory model buckles under its own weight: every lookup has to sift through everything the agent has ever stored.

A new paper proposes an elegant alternative: H-MEM, or Hierarchical Memory. Instead of treating memory as one big pile, H-MEM organizes past knowledge into four semantically structured layers, from most general to most specific: Domain, Category, Memory Trace, and Episode. It’s the difference between a junk drawer and a filing cabinet. ...
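To make the filing-cabinet intuition concrete, here is a minimal Python sketch of layer-by-layer retrieval over a four-level tree, compared with a flat scan of every episode. It is not H-MEM's implementation. It assumes toy one-hot "embeddings", cosine similarity, and a greedy descent that keeps the top-k nodes per layer; `MemoryNode`, `encode`, `build`, `hierarchical_retrieve`, and `flat_retrieve` are all hypothetical names introduced for illustration.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field

LAYERS = ["domain", "category", "trace", "episode"]  # general -> specific
BRANCHING = 2  # hypothetical: every node has two children


@dataclass
class MemoryNode:
    """One node in a hypothetical four-layer memory tree."""
    layer: str
    text: str
    vector: list[float]  # toy embedding; a real agent would use a learned encoder
    children: list[MemoryNode] = field(default_factory=list)


def encode(path: tuple[int, ...]) -> list[float]:
    """Toy embedding: one one-hot block per layer along the path, zeros below it."""
    vec = [0.0] * (len(LAYERS) * BRANCHING)
    for level, idx in enumerate(path):
        vec[level * BRANCHING + idx] = 1.0
    return vec


def build(path: tuple[int, ...] = ()) -> list[MemoryNode]:
    """Recursively build a full tree: 2 domains x 2 categories x 2 traces x 2 episodes."""
    level = len(path)
    nodes = []
    for i in range(BRANCHING):
        child_path = path + (i,)
        kids = build(child_path) if level + 1 < len(LAYERS) else []
        nodes.append(MemoryNode(LAYERS[level], f"{LAYERS[level]} {child_path}",
                                encode(child_path), kids))
    return nodes


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hierarchical_retrieve(roots: list[MemoryNode], query: list[float], k: int = 1):
    """Descend one layer at a time, expanding only the top-k nodes at each level.

    Returns the best nodes at the bottom layer and the number of similarity scores computed.
    """
    frontier, comparisons = roots, 0
    while True:
        comparisons += len(frontier)
        best = sorted(frontier, key=lambda n: cosine(n.vector, query), reverse=True)[:k]
        children = [c for n in best for c in n.children]
        if not children:  # reached the episode layer
            return best, comparisons
        frontier = children


def leaves(nodes: list[MemoryNode]):
    """Yield every episode (leaf) in the tree."""
    for n in nodes:
        if n.children:
            yield from leaves(n.children)
        else:
            yield n


def flat_retrieve(episodes: list[MemoryNode], query: list[float], k: int = 1):
    """Baseline: score every stored episode against the query."""
    ranked = sorted(episodes, key=lambda n: cosine(n.vector, query), reverse=True)
    return ranked[:k], len(episodes)


roots = build()
query = encode((1, 0, 1, 1))
hits, cost = hierarchical_retrieve(roots, query)
flat_hits, flat_cost = flat_retrieve(list(leaves(roots)), query)
print(hits[0].text, cost)            # episode (1, 0, 1, 1) 8
print(flat_hits[0].text, flat_cost)  # episode (1, 0, 1, 1) 16
```

Both searches find the same episode, but the descent scores 8 nodes instead of 16. With branching factor b and four layers, top-1 descent scores about 4b nodes while a flat scan scores b^4, which is why the gap widens as memory grows. The trade-off in this sketch is that a wrong choice at an upper layer cannot be recovered; raising `k` widens the beam at the cost of more comparisons.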