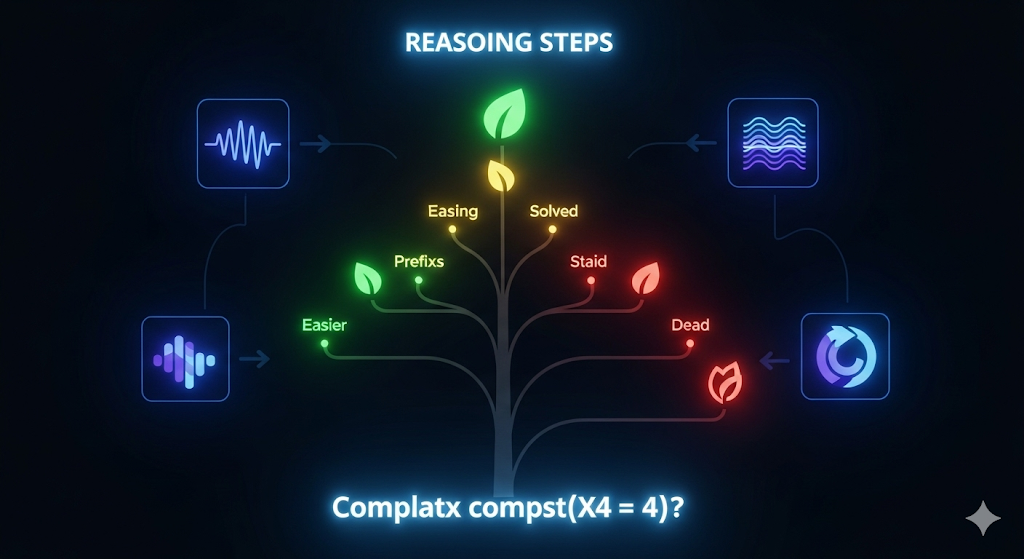

Branching Out of the Box: Tree‑OPO Turns MCTS Traces into Better RL for Reasoning

The punchline

Tree‑OPO takes something many labs already produce (MCTS rollouts from a stronger teacher) and treats them not just as answers but as a curriculum of prefixes. It then optimizes a student with GRPO-like updates, using staged, tree-aware advantages instead of a flat group mean. The result in math reasoning (GSM8K) is a modest but consistent bump over standard GRPO while keeping memory and complexity low.

Why this matters for practitioners: you can get more out of your expensive searches (or teacher traces) without training a value model or lugging around teacher logits during student training. ...
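To make the "flat group mean" versus "tree-aware" contrast concrete, here is a minimal sketch. The first function is the usual GRPO-style group normalization. The second is only an illustrative assumption, not the paper's staged advantage: it baselines each rollout against the rollouts that started from the same prefix. The names `flat_group_advantages`, `prefix_grouped_advantages`, and `prefix_ids` are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


def flat_group_advantages(rewards, eps=1e-8):
    """GRPO-style advantage: reward minus the group mean, scaled by the group std."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def prefix_grouped_advantages(rewards, prefix_ids):
    """Hypothetical tree-aware baseline (an assumption for illustration only):
    compare each rollout with the mean reward of rollouts sharing its prefix."""
    by_prefix = defaultdict(list)
    for r, p in zip(rewards, prefix_ids):
        by_prefix[p].append(r)
    baselines = {p: mean(rs) for p, rs in by_prefix.items()}
    return [r - baselines[p] for r, p in zip(rewards, prefix_ids)]


# Four student rollouts: two from the empty prefix, two from a deeper teacher prefix.
rewards = [1.0, 0.0, 1.0, 1.0]
prefix_ids = ["root", "root", "step2", "step2"]

flat = flat_group_advantages(rewards)
tree = prefix_grouped_advantages(rewards, prefix_ids)
print(flat)
print(tree)
```

With a flat mean, the rollouts continued from the deeper prefix receive positive advantage largely because they started closer to the answer. Under the per-prefix baseline they receive zero, and the learning signal concentrates on the harder, shallower prefix.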