When Diffusion Learns How to Open Drawers

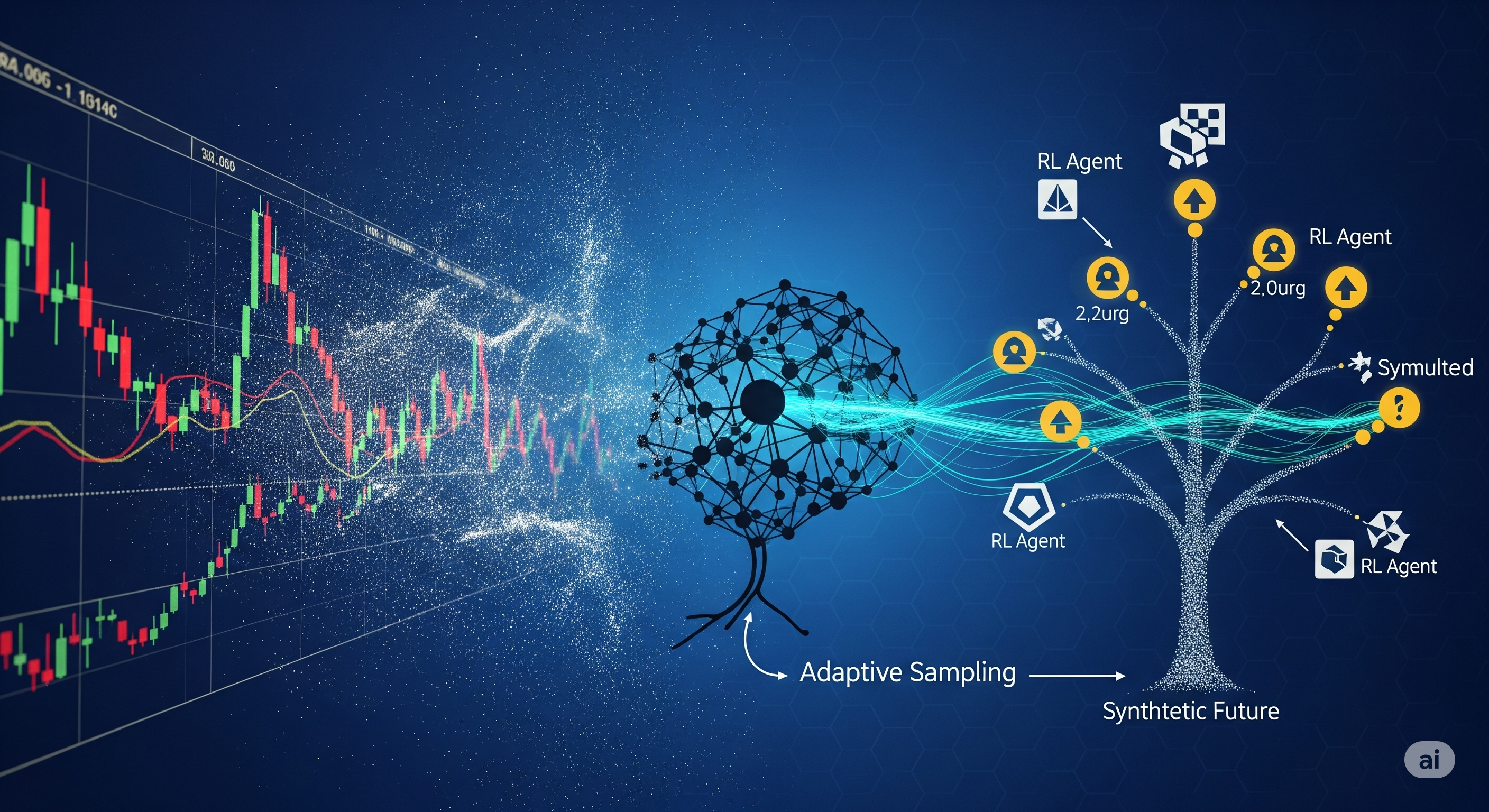

Opening: Why this matters now

Embodied AI has a dirty secret: most simulated worlds look plausible until a robot actually tries to use them. Chairs block drawers, doors open into walls, and walkable space exists only in theory. As robotics shifts from toy benchmarks to household-scale deployment, this gap between visual realism and functional realism has become the real bottleneck. ...