Guard Rails > Horsepower: Why Environment Scaffolding Beats Bigger Models

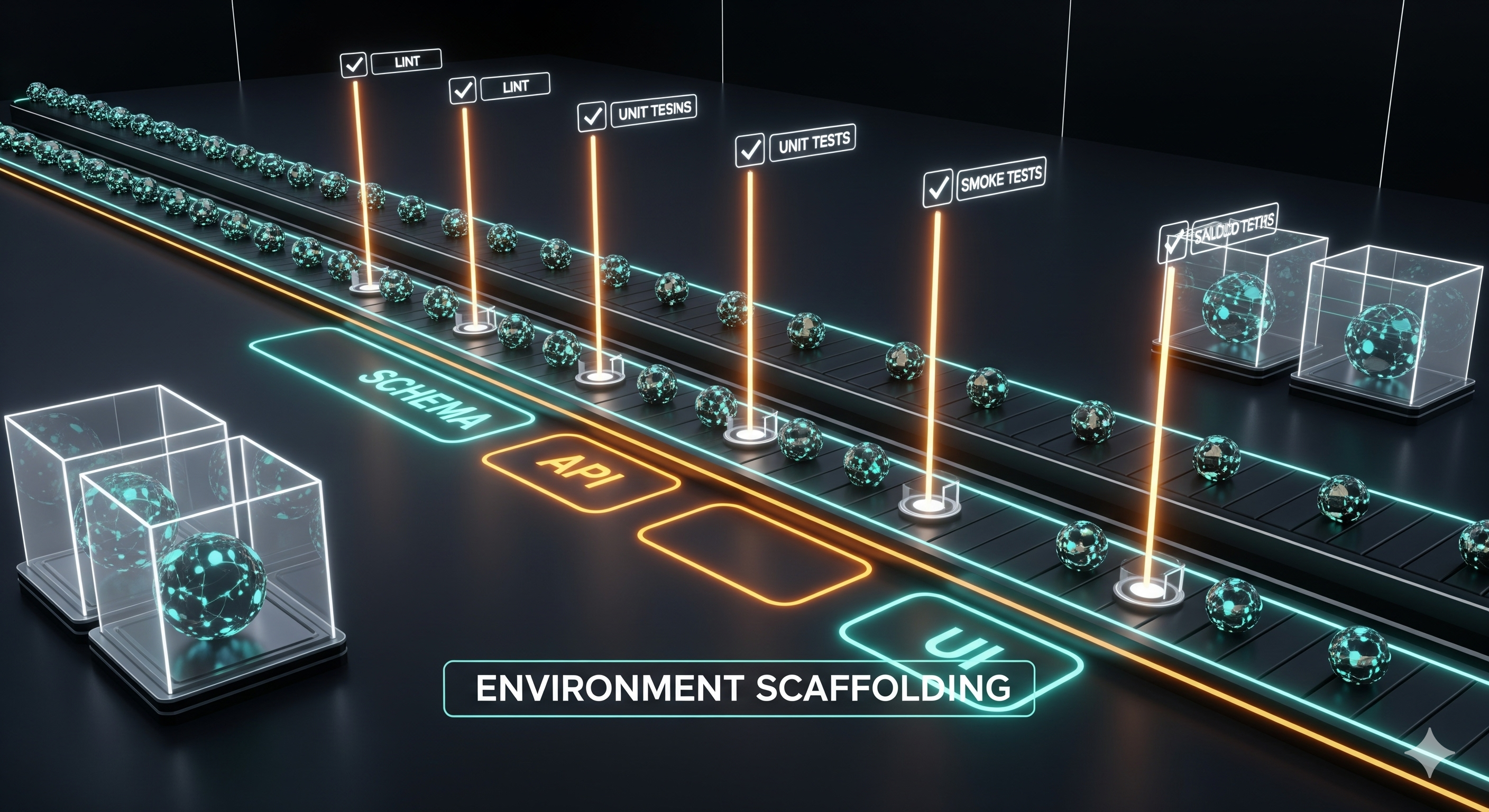

Most “AI builds the app” demos fail exactly where production begins: integration, state, and reliability. app.build, a new open-source framework from Databricks, argues that the fix isn’t a smarter model but a smarter environment. The paper formalizes Environment Scaffolding (ES): a disciplined, test-guarded sandbox that constrains agent actions, validates every step, and treats the LLM as a component, not the system. The headline result: once a generated app passes the viability gates, its quality is consistently high, and open-weights models can get you far when the environment does the heavy lifting. ...
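To make the idea concrete, here is a minimal sketch of a validate-every-step loop in Python. It is not app.build's actual API: `run_guarded_step`, `StepResult`, the two validators, and `stub_model` are hypothetical names invented for illustration, and the "model" is a hard-coded stub standing in for an LLM call. The point is only the shape: the environment owns the loop, the model is one replaceable component, and nothing is accepted until every check passes.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# A validator inspects a candidate artifact and returns an error
# message, or None when the candidate passes. (Hypothetical type.)
Validator = Callable[[str], Optional[str]]


@dataclass
class StepResult:
    accepted: bool
    artifact: Optional[str]
    attempts: int
    errors: List[str]


def run_guarded_step(
    generate: Callable[[str], str],
    validators: List[Validator],
    max_attempts: int = 3,
) -> StepResult:
    """Ask the generator for a candidate, gate it on every validator,
    and feed failures back until it passes or the budget runs out."""
    errors: List[str] = []
    feedback = ""
    for attempt in range(1, max_attempts + 1):
        candidate = generate(feedback)
        failures = []
        for check in validators:
            message = check(candidate)
            if message is not None:
                failures.append(message)
        if not failures:
            return StepResult(True, candidate, attempt, errors)
        errors.extend(failures)
        feedback = "; ".join(failures)
    return StepResult(False, None, max_attempts, errors)


def compiles(source: str) -> Optional[str]:
    """Gate 1: the candidate must at least parse as Python."""
    try:
        compile(source, "<candidate>", "exec")
    except SyntaxError as exc:
        return f"syntax error: {exc.msg}"
    return None


def defines_handler(source: str) -> Optional[str]:
    """Gate 2: a crude structural check for a required entry point."""
    if "def handler(" in source:
        return None
    return "missing required function handler()"


def stub_model(feedback: str) -> str:
    """Stand-in for an LLM: broken on the first try, fixed once it
    receives any validator feedback. An assumption for the demo only."""
    if not feedback:
        return "def handler(:\n    return 1\n"
    return "def handler():\n    return 1\n"


result = run_guarded_step(stub_model, [compiles, defines_handler])
print(result.accepted, result.attempts)  # prints: True 2
```

Swapping `stub_model` for a real model call changes nothing else in the loop, which is the sense in which the environment, rather than the model, does the heavy lifting.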