Many Minds Make Light Work: Boosting LLM Physics Reasoning via Agentic Verification

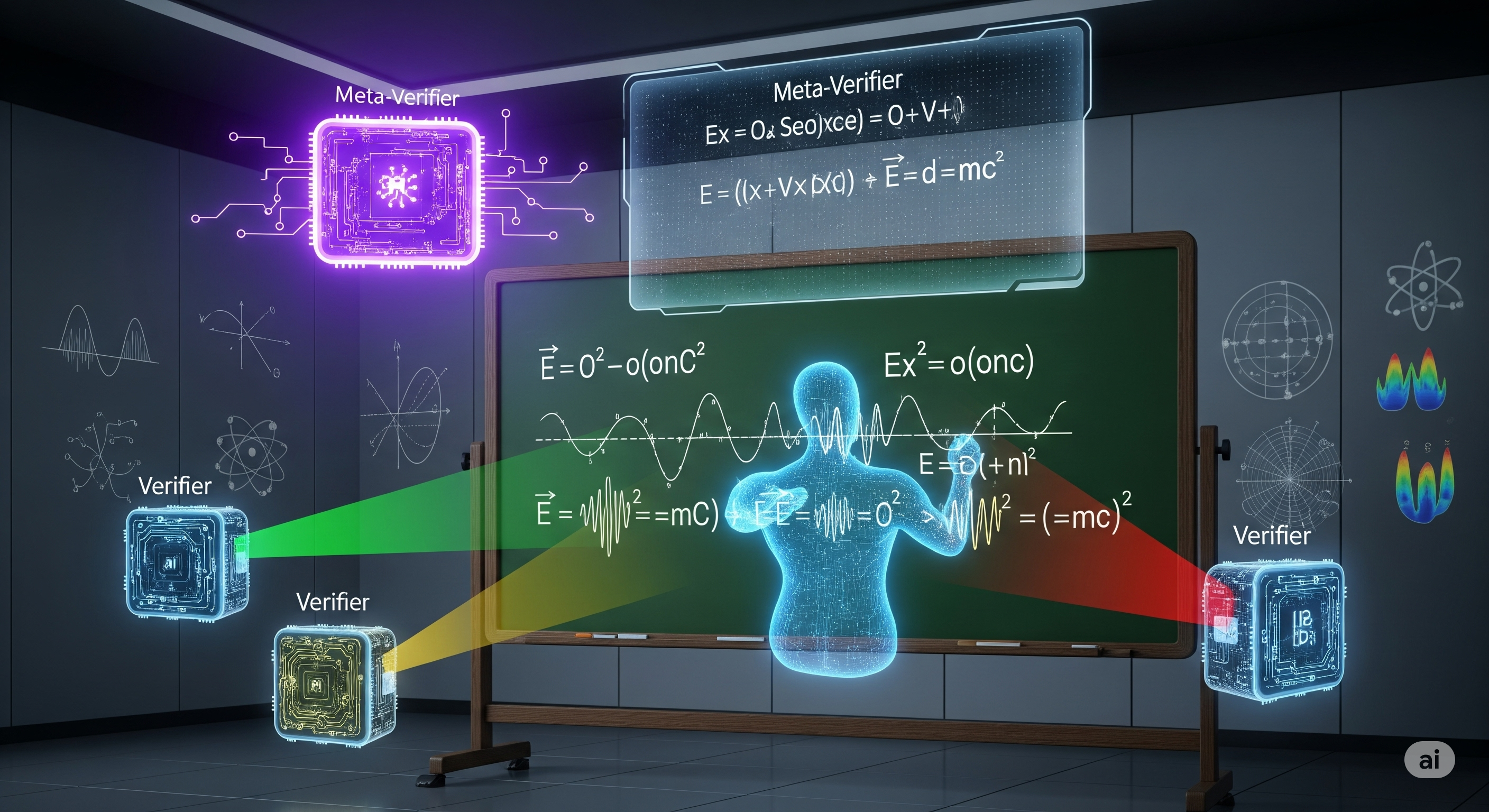

If you think AI models are getting too good at math, you're not wrong. Benchmarks like GSM8K and MATH have been largely conquered. Physics is a different story. There, reasoning isn't just about arithmetic but about assumptions, abstractions, and alignment with the real world, and the picture is murkier. A new paper, PhysicsEval: Inference-Time Techniques to Improve the Reasoning Proficiency of Large Language Models on Physics Problems, tackles this gap head-on. It introduces PHYSICSEVAL, a benchmark of 19,609 physics problems, and rigorously tests how frontier LLMs fare across topics from thermodynamics to quantum mechanics. The real breakthrough, though, is not just the dataset but the method: multi-agent inference-time critique. ...