Hypotheses, Not Hunches: What an AI Data Scientist Gets Right

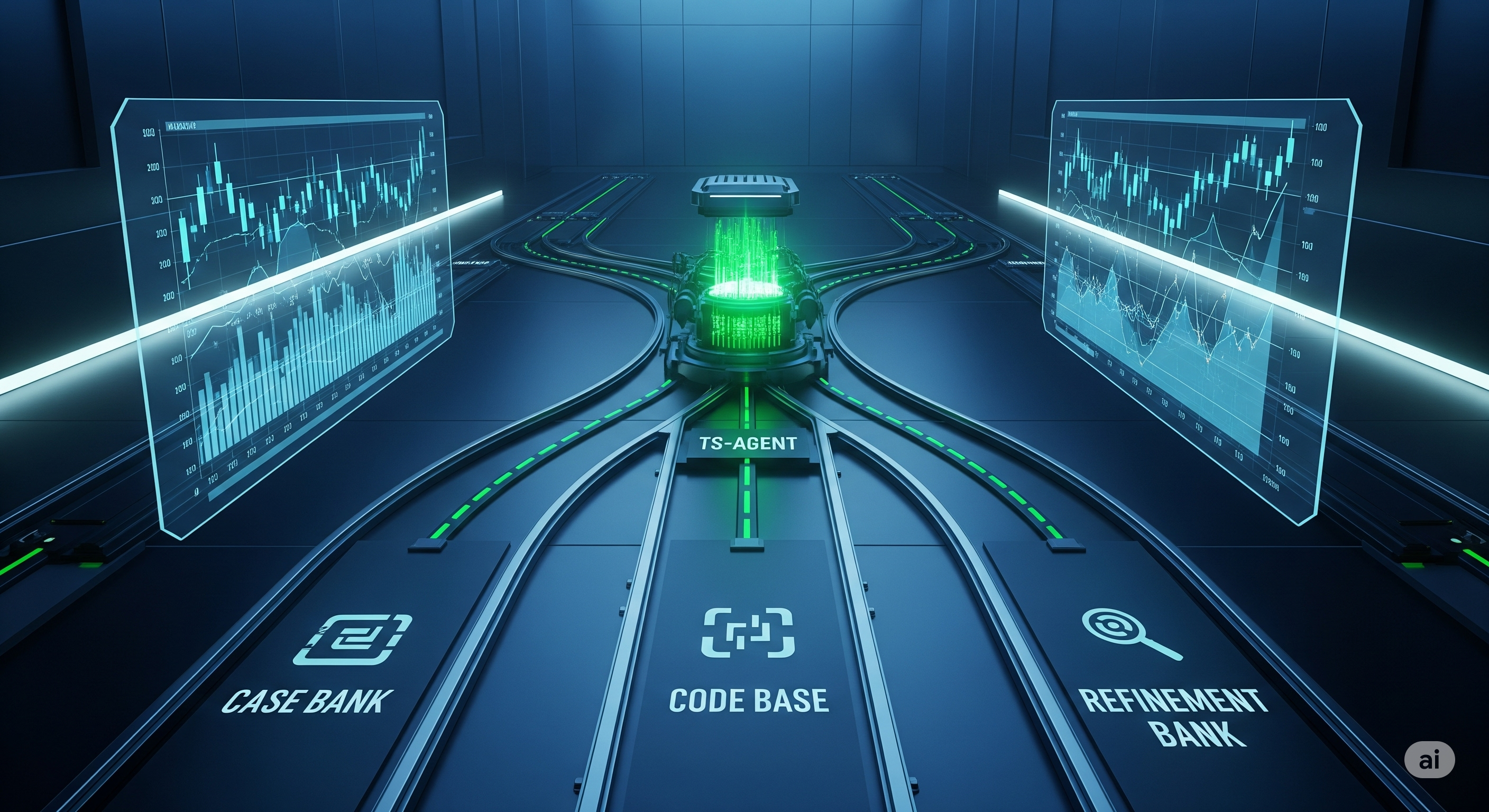

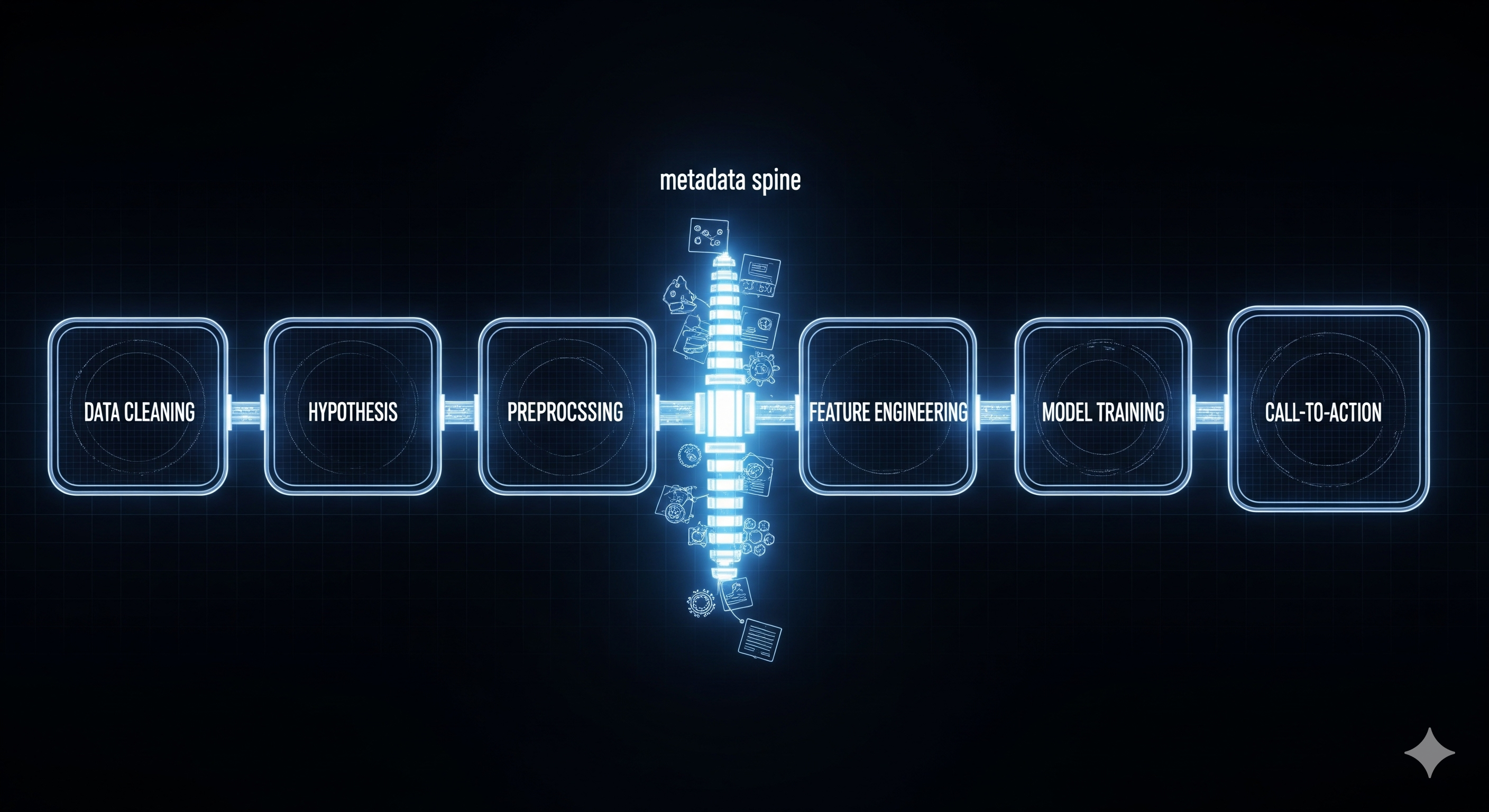

Most “AI for analytics” pitches still orbit model metrics. The more interesting question for executives is: what should we do next, and why? A recent paper proposes an AI Data Scientist: a team of six LLM “subagents” that march from raw tables to clear, time‑boxed recommendations. The twist isn’t just automation; it’s hypothesis‑first reasoning. Instead of blindly optimizing AUC, the system forms crisp, testable claims (e.g., “active members are less likely to churn”), validates them statistically, and only then engineers features and trains models. The output is not merely predictions; it’s an action plan with KPIs, timelines, and rationale. ...
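To make the hypothesis-first idea concrete, here is a minimal sketch of how a claim like “active members are less likely to churn” could be checked before any feature is built on it. It is not the paper's implementation: the choice of a one-sided two-proportion z-test, the 0.05 threshold, the function name, and the counts are all assumptions made for illustration.

```python
import math


def two_proportion_z_test(churned_a, total_a, churned_b, total_b):
    """One-sided z-test: is the churn rate in group A lower than in group B?

    Returns (z, p_value), where p_value is the left-tail probability under
    the null hypothesis that both groups share the same churn rate.
    """
    p_a = churned_a / total_a
    p_b = churned_b / total_b
    # Pooled churn rate under the null hypothesis of no difference
    pooled = (churned_a + churned_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (p_a - p_b) / se
    # Standard normal CDF evaluated at z (left tail)
    p_value = 0.5 * (1 + math.erf(z / math.sqrt(2)))
    return z, p_value


# Hypothetical counts, invented for this example only:
# group A = active members, group B = inactive members.
z, p = two_proportion_z_test(churned_a=120, total_a=1000,
                             churned_b=200, total_b=1000)

ALPHA = 0.05  # assumed significance threshold
hypothesis_supported = p < ALPHA

# Only a validated hypothesis earns a place in the feature set.
features = ["is_active_member"] if hypothesis_supported else []
print(f"z={z:.2f}, p={p:.2g}, supported={hypothesis_supported}, features={features}")
```

The point of the gate at the end is ordering: the statistical check runs first, and feature engineering only proceeds for claims that survive it. A real pipeline would likely also correct for multiple comparisons when many hypotheses are tested at once.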