Paths, Not Parrots: When RL Makes LLMs Plan—and When It Doesn’t

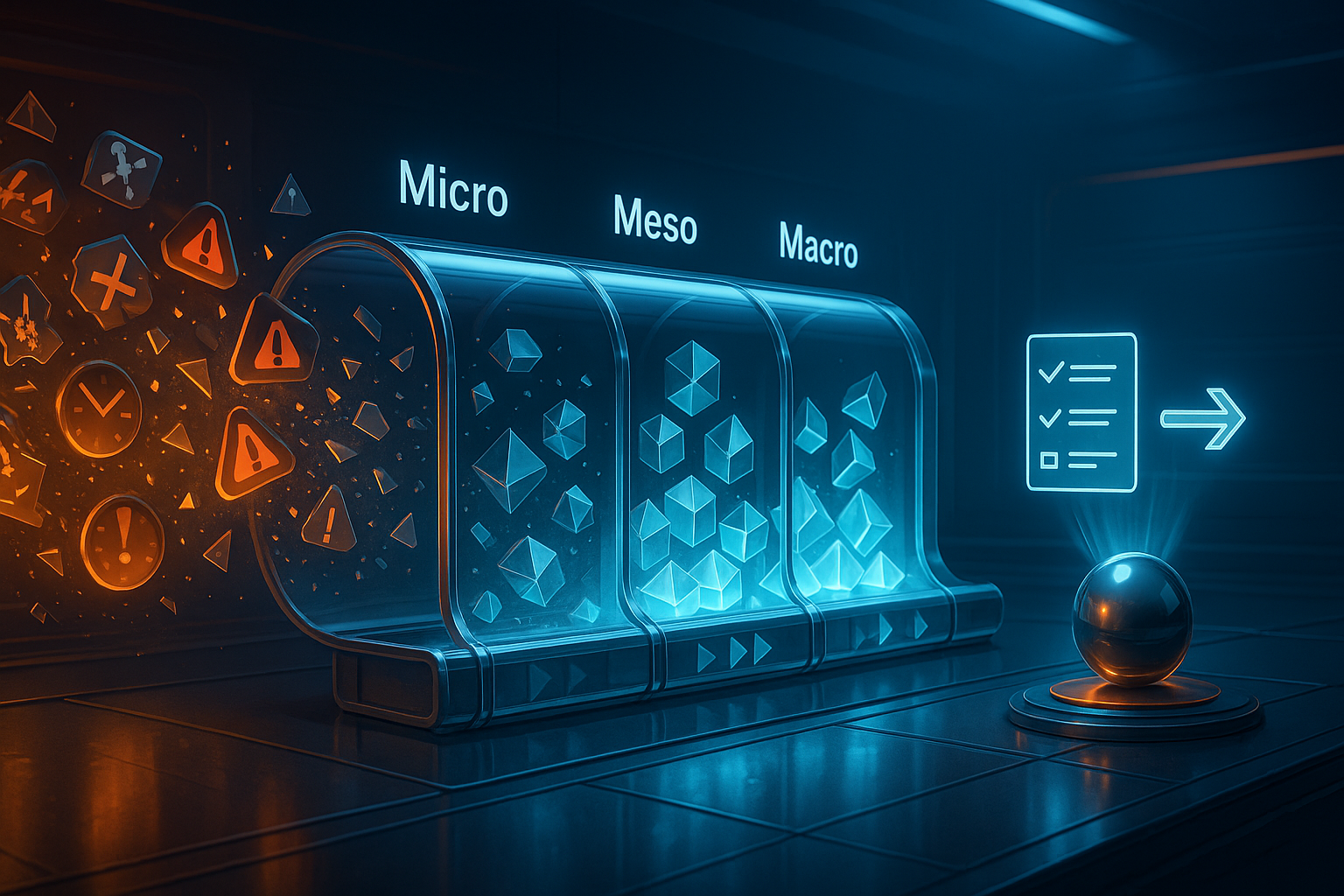

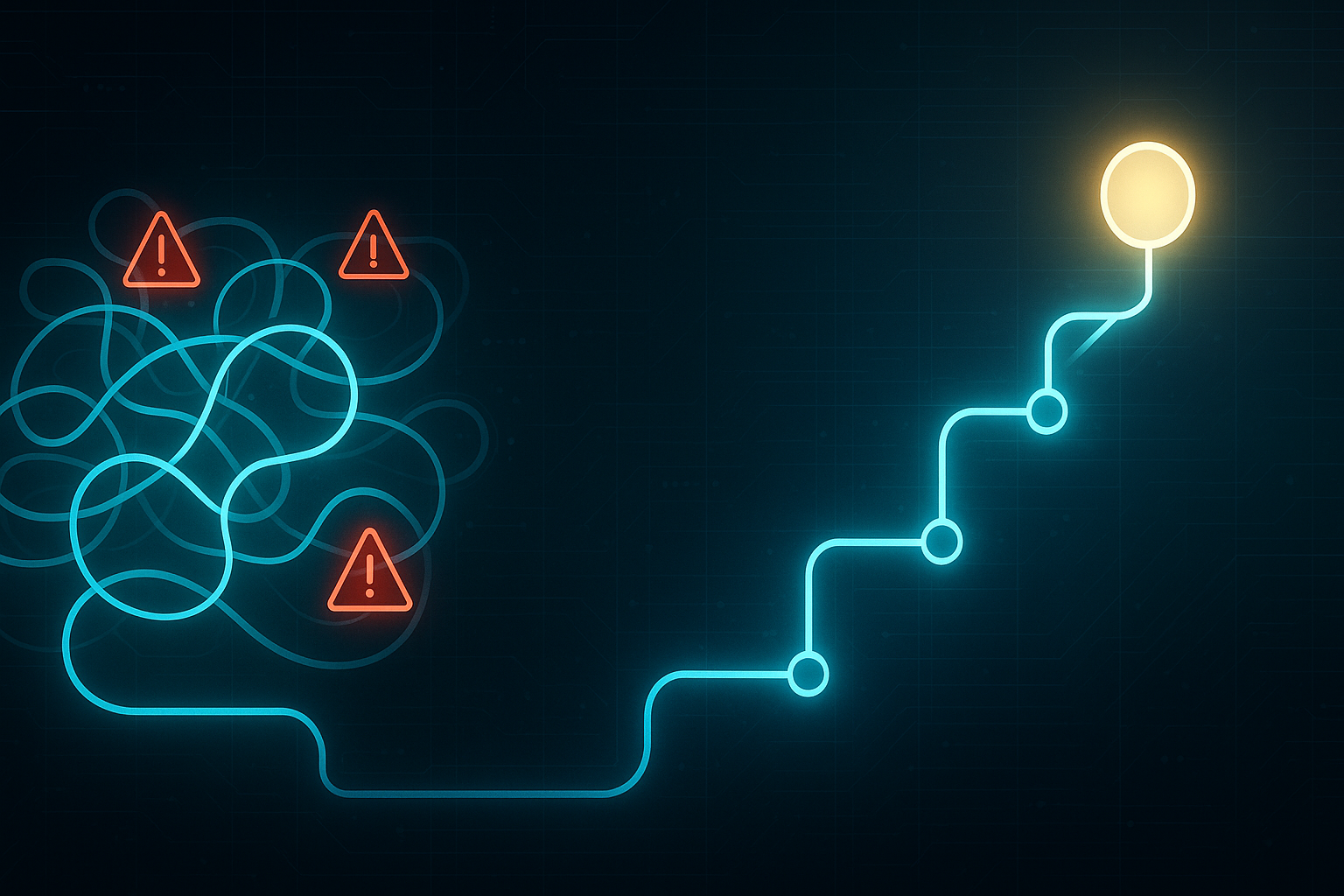

TL;DR

- SFT memorizes co-occurrences; RL explores. That's why RL generalizes better on planning tasks.
- Policy-gradient (PG) can hit 100% training accuracy while silently killing output diversity. KL helps, but caps gains.
- Q-learning with process rewards preserves diversity and works off-policy. With outcome-only rewards, it reward-hacks and collapses.

Why this paper matters to builders

If you're shipping agentic features (tool-use chains, workflow orchestration, or multi-step retrieval), you're already relying on planning. The paper models planning as path-finding on a graph and derives learning dynamics for SFT vs RL variants. The results give a crisp blueprint for product choices: which objective to use, when to add KL, and how to avoid brittle one-path agents. ...
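To make the "100% accuracy, zero diversity" failure mode concrete, here is a minimal sketch of planning framed as path-finding on a graph. Everything in it is an illustrative assumption rather than the paper's setup: the four-node `GRAPH`, the two hand-written policies, and the `diversity` helper are hypothetical. It only shows that two policies can both reach the target every time while differing in how many distinct valid paths they produce.

```python
import random

# Hypothetical toy graph (adjacency lists); not taken from the paper.
GRAPH = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D"],
    "D": [],
}

def is_valid_path(graph, path, source, target):
    """Valid means: starts at source, ends at target, follows existing edges."""
    if not path or path[0] != source or path[-1] != target:
        return False
    return all(b in graph[a] for a, b in zip(path, path[1:]))

def sample_path(graph, source, target, policy, rng, max_steps=10):
    """Roll out one path; policy(node) returns a dict {next_node: probability}."""
    path = [source]
    while path[-1] != target and len(path) <= max_steps:
        probs = policy(path[-1])
        if not probs:  # dead end: no outgoing move
            break
        nodes, weights = zip(*probs.items())
        path.append(rng.choices(nodes, weights=weights, k=1)[0])
    return path

def diversity(graph, source, target, policy, n=200, seed=0):
    """Return (fraction of valid rollouts, number of distinct valid paths)."""
    rng = random.Random(seed)
    paths = [tuple(sample_path(graph, source, target, policy, rng)) for _ in range(n)]
    valid = [p for p in paths if is_valid_path(graph, p, source, target)]
    return len(valid) / n, len(set(valid))

def uniform_policy(node):
    """Spreads probability evenly over all successors (keeps both routes alive)."""
    successors = GRAPH[node]
    return {m: 1 / len(successors) for m in successors}

def collapsed_policy(node):
    """Always takes the first successor (a one-path agent)."""
    successors = GRAPH[node]
    return {successors[0]: 1.0} if successors else {}

if __name__ == "__main__":
    print("uniform  :", diversity(GRAPH, "A", "D", uniform_policy))
    print("collapsed:", diversity(GRAPH, "A", "D", collapsed_policy))
```

Both policies score perfect accuracy on this toy graph, so accuracy alone cannot tell them apart; the distinct-path count is what exposes the collapsed one. Tracking a metric like this alongside accuracy is one simple way to notice a one-path agent early.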